Sebastian Herzog

One-shot path planning for multi-agent systems using fully convolutional neural network

Apr 01, 2020

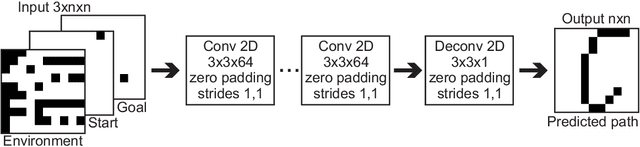

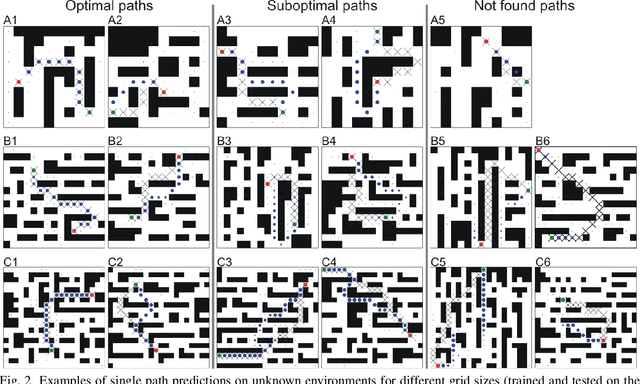

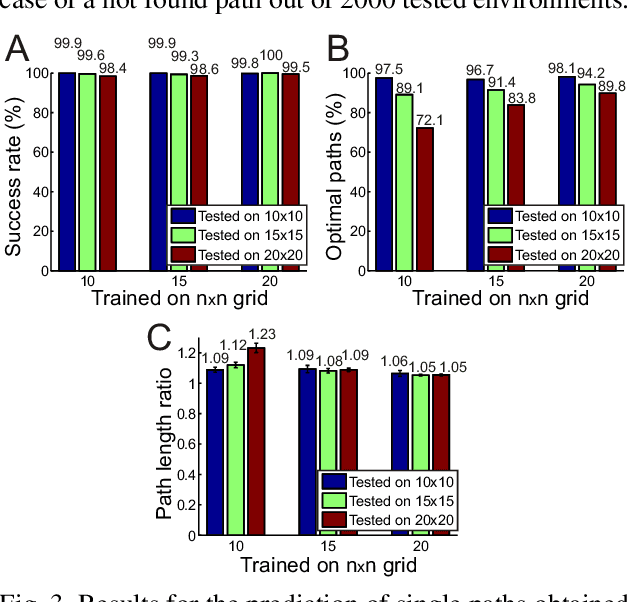

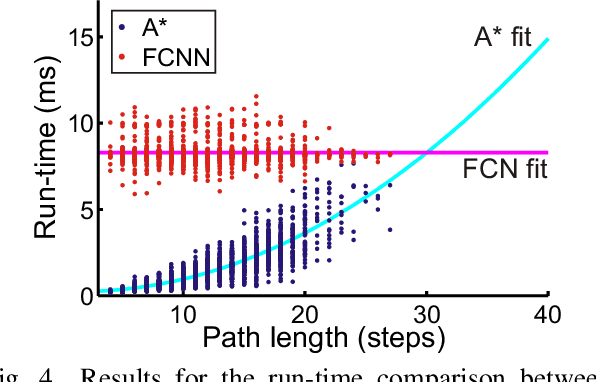

Abstract: Path planning plays a crucial role in robot action execution, since a path or motion trajectory for a particular action has to be defined before the action can be executed. Most current approaches are iterative: the trajectory is generated step by step by predicting the next state from the current state. Moreover, in multi-agent systems, paths are planned for each agent separately. In contrast, we propose a novel method that uses a fully convolutional neural network to generate complete paths, even for more than one agent, in one shot, i.e., with a single prediction step. We demonstrate that our method successfully generates optimal or close to optimal paths in more than 98% of the cases for single path predictions. Moreover, we show that although the network has never been trained on multi-path planning, it also generates optimal or close to optimal paths in 85.7% and 65.4% of the cases when generating two and three paths, respectively.

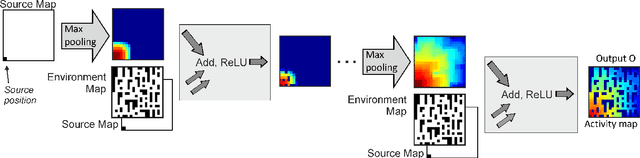

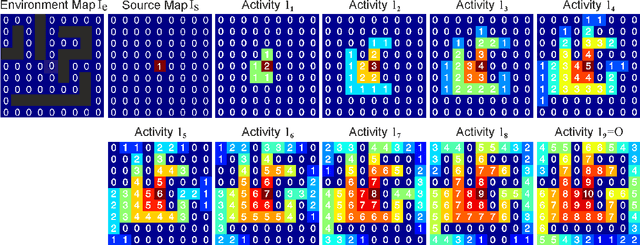

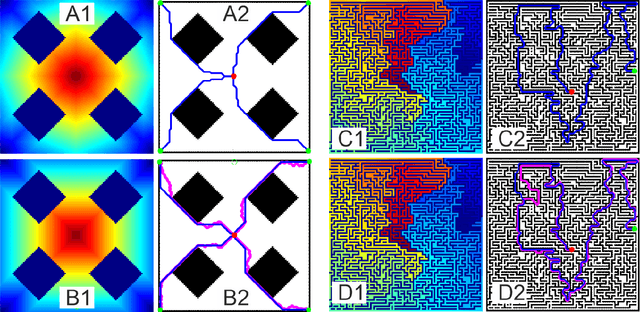

Generation of Paths in a Maze using a Deep Network without Learning

Apr 01, 2020

Abstract: Trajectory or path planning is a fundamental issue in a wide variety of applications. Here we show that it is possible to solve path planning for multiple start and end points highly efficiently with a network that consists only of max pooling layers, for which no network training is needed. Unlike competing approaches, this method can solve very large mazes containing more than half a billion nodes, with dense obstacle configurations and several thousand path end points, in a very short time on parallel hardware.
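The abstract does not spell out the network itself, so the following is only a minimal sketch of one way repeated max pooling can plan paths without any training: a wavefront is grown from the start cell by a stride-1 max pooling over each cell and its four neighbours, masked by the free cells, and the step at which each cell is first reached is recorded. The function names (`max_pool_cross`, `wavefront`, `backtrack`), the 4-neighbour kernel, and the toy maze are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def max_pool_cross(a):
    """Stride-1 max pooling over each cell and its 4 neighbours (zero padding)."""
    p = np.pad(a, 1, constant_values=0)
    return np.maximum.reduce([
        p[1:-1, 1:-1],  # centre
        p[:-2, 1:-1],   # neighbour above
        p[2:, 1:-1],    # neighbour below
        p[1:-1, :-2],   # neighbour to the left
        p[1:-1, 2:],    # neighbour to the right
    ])

def wavefront(free, start):
    """Arrival step for every cell reachable from `start` (-1 if unreachable).

    `free` is a uint8 array with 1 for free cells and 0 for obstacles;
    `start` is assumed to be a free cell.
    """
    reached = np.zeros(free.shape, dtype=np.uint8)
    reached[start] = 1
    arrival = np.full(free.shape, -1, dtype=int)
    arrival[start] = 0
    step = 0
    while True:
        step += 1
        # One pooling "layer": grow the front by one cell, then mask obstacles.
        grown = max_pool_cross(reached) * free
        new = (grown == 1) & (reached == 0)
        if not new.any():
            return arrival
        arrival[new] = step
        reached = grown

def backtrack(arrival, goal):
    """Follow decreasing arrival steps from `goal` back to the start."""
    if arrival[goal] < 0:
        return None  # goal was never reached
    r, c = goal
    path = [(r, c)]
    while arrival[r, c] > 0:
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < arrival.shape[0] and 0 <= nc < arrival.shape[1]
            if inside and arrival[nr, nc] == arrival[r, c] - 1:
                r, c = nr, nc
                break
        path.append((r, c))
    return path[::-1]

# Toy maze (hypothetical): 1 = free, 0 = obstacle.
maze = np.array([
    [1, 1, 1, 0],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [1, 0, 0, 1],
], dtype=np.uint8)

arrival = wavefront(maze, (0, 0))
print(backtrack(arrival, (3, 3)))
print(backtrack(arrival, (3, 0)))  # a second end point reuses the same arrival map
```

Because one arrival map serves every end point, several goals can be read out after a single propagation, and each pooling step is a purely local operation that parallel hardware can apply to all cells at once.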

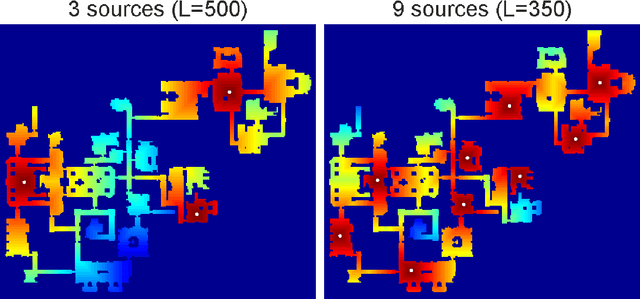

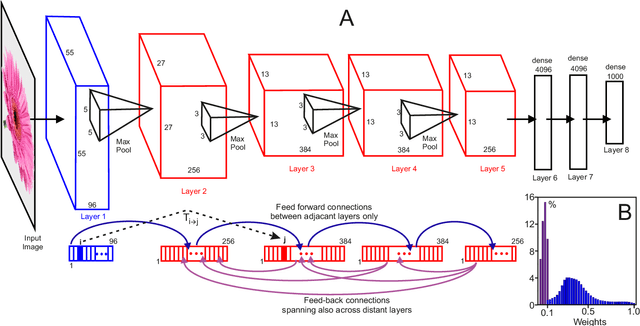

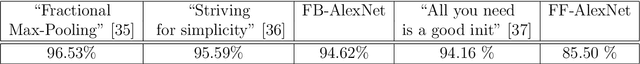

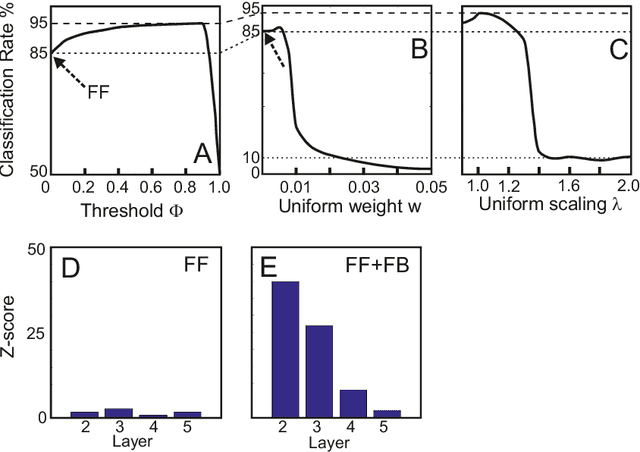

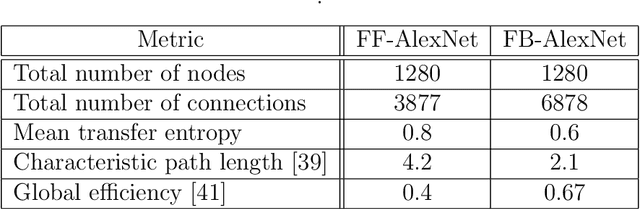

Transfer entropy-based feedback improves performance in artificial neural networks

Jun 22, 2017

Abstract: The structure of the majority of modern deep neural networks is characterized by unidirectional feed-forward connectivity across a very large number of layers. By contrast, the architecture of the cortex of vertebrates contains fewer hierarchical levels but many recurrent and feedback connections. Here we show that a small, few-layer artificial neural network that employs feedback can reach top-level performance on a standard benchmark task, a level otherwise obtained only by large feed-forward structures. To achieve this, we use feed-forward transfer entropy between neurons to structure feedback connectivity. Transfer entropy can here be understood intuitively as a measure of the relevance of certain pathways in the network, which are then amplified by feedback. Feedback may therefore be key for high network performance in small brain-like architectures.
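To make the notion of transfer entropy concrete, here is a minimal sketch of the standard plug-in estimate for two discrete sequences with a history length of one: the sum over observed triples of p(y_next, y, x) · log2[p(y_next | y, x) / p(y_next | y)]. The abstract does not say how the authors estimate transfer entropy between neurons or how they turn it into feedback weights, so the function name `transfer_entropy`, the history length, and the toy signals are assumptions for illustration only.

```python
import math
import random
from collections import Counter

def transfer_entropy(x, y):
    """Plug-in estimate of transfer entropy from x to y, in bits.

    Uses a history length of 1: how much knowing x[t] improves the
    prediction of y[t+1] beyond what y[t] already provides.
    """
    n = len(y) - 1
    triples = Counter(zip(y[1:], y[:-1], x[:-1]))   # (y_next, y_now, x_now)
    pairs_yy = Counter(zip(y[1:], y[:-1]))          # (y_next, y_now)
    pairs_yx = Counter(zip(y[:-1], x[:-1]))         # (y_now, x_now)
    singles_y = Counter(y[:-1])                     # y_now
    te = 0.0
    for (y_next, y_now, x_now), count in triples.items():
        p_joint = count / n
        p_given_both = count / pairs_yx[(y_now, x_now)]
        p_given_self = pairs_yy[(y_next, y_now)] / singles_y[y_now]
        te += p_joint * math.log2(p_given_both / p_given_self)
    return te

# Toy signals (hypothetical): y copies x with a delay of one step.
rng = random.Random(0)
x = [rng.randint(0, 1) for _ in range(5000)]
y = [0] + x[:-1]

print(transfer_entropy(x, y))  # close to 1 bit: x drives y
print(transfer_entropy(y, x))  # close to 0 bits: y does not drive x
```

The asymmetry between the two directions is what makes the measure usable as a pathway-relevance score: a strongly driving connection scores high in one direction only.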
