Sara Casao

SpectralWaste Dataset: Multimodal Data for Waste Sorting Automation

Mar 26, 2024

Abstract: The increase in non-biodegradable waste is a worldwide concern. Recycling facilities play a crucial role, but their automation is hindered by the complex characteristics of waste recycling lines like clutter or object deformation. In addition, the lack of publicly available labeled data for these environments makes developing robust perception systems challenging. Our work explores the benefits of multimodal perception for object segmentation in real waste management scenarios. First, we present SpectralWaste, the first dataset collected from an operational plastic waste sorting facility that provides synchronized hyperspectral and conventional RGB images. This dataset contains labels for several categories of objects that commonly appear in sorting plants and need to be detected and separated from the main trash flow for several reasons, such as security in the management line or reuse. Additionally, we propose a pipeline employing different object segmentation architectures and evaluate the alternatives on our dataset, conducting an extensive analysis for both multimodal and unimodal alternatives. Our evaluation pays special attention to efficiency and suitability for real-time processing and demonstrates how HSI can bring a boost to RGB-only perception in these realistic industrial settings without much computational overhead.

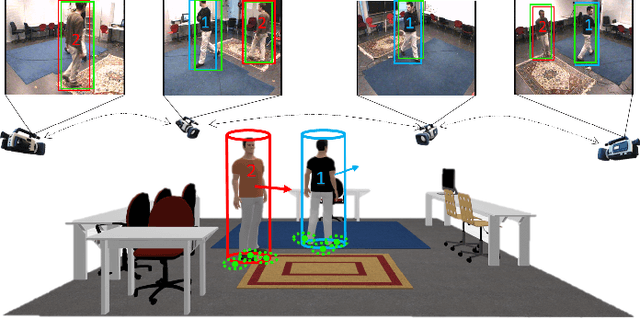

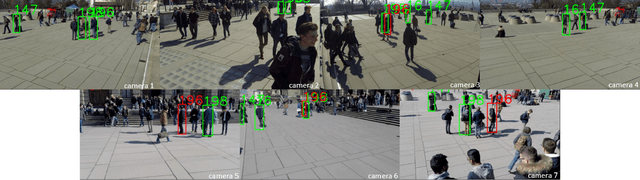

A Framework for Fast Prototyping of Photo-realistic Environments with Multiple Pedestrians

Apr 14, 2023

Abstract: Robotic applications involving people often require advanced perception systems to better understand complex real-world scenarios. To address this challenge, photo-realistic and physics simulators are gaining popularity as a means of generating accurate data labeling and designing scenarios for evaluating generalization capabilities, e.g., lighting changes, camera movements or different weather conditions. We develop a photo-realistic framework built on Unreal Engine and AirSim to easily generate scenarios with pedestrians and mobile robots. The framework is capable of generating random and customized trajectories for each person and provides up to 50 ready-to-use people models along with an API for their metadata retrieval. We demonstrate the usefulness of the proposed framework with a use case of multi-target tracking, a popular problem in real pedestrian scenarios. The notable feature variability in the obtained perception data is presented and evaluated.

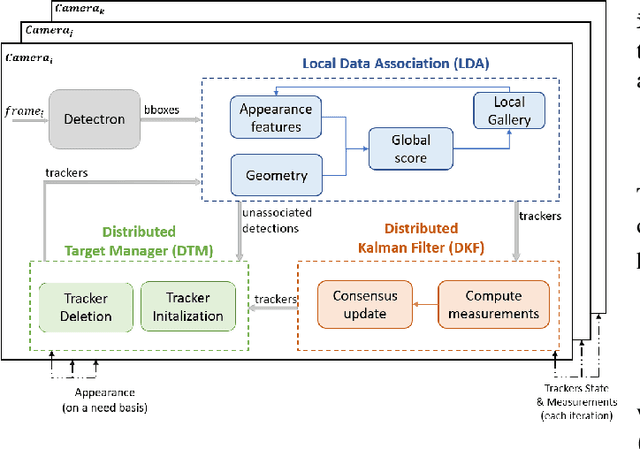

Distributed Multi-Target Tracking in Camera Networks

Oct 26, 2020

Abstract: Most recent works on multi-target tracking with multiple cameras focus on centralized systems. In contrast, this paper presents a multi-target tracking approach implemented in a distributed camera network. The advantages of distributed systems lie in lighter communication management, greater robustness to failures and local decision making. On the other hand, data association and information fusion are more challenging than in a centralized setup, mostly due to the lack of global and complete information. The proposed algorithm boosts the benefits of the Distributed-Consensus Kalman Filter with the support of a re-identification network and a distributed tracker manager module to facilitate consistent information. These techniques complement each other and facilitate the cross-camera data association in a simple and effective manner. We evaluate the whole system with known public data sets under different conditions, demonstrating the advantages of combining all the modules. In addition, we compare our algorithm to some existing centralized tracking methods, outperforming them in terms of accuracy and bandwidth usage.
