Saqib Ali Khan

Exploring Deep 3D Spatial Encodings for Large-Scale 3D Scene Understanding

Nov 29, 2020

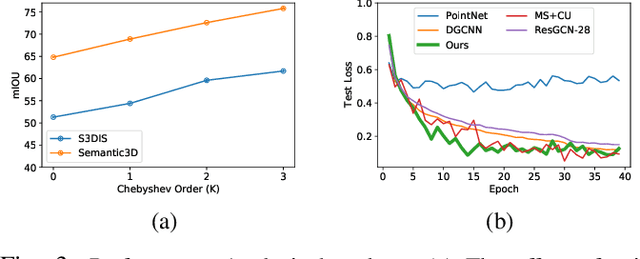

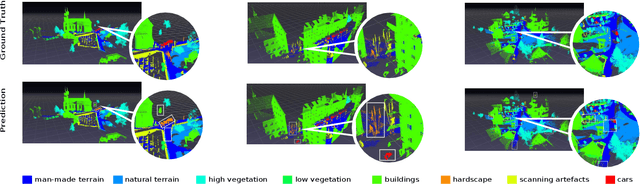

Abstract:Semantic segmentation of raw 3D point clouds is an essential component in 3D scene analysis, but it poses several challenges, primarily due to the non-Euclidean nature of 3D point clouds. Although, several deep learning based approaches have been proposed to address this task, but almost all of them emphasized on using the latent (global) feature representations from traditional convolutional neural networks (CNN), resulting in severe loss of spatial information, thus failing to model the geometry of the underlying 3D objects, that plays an important role in remote sensing 3D scenes. In this letter, we have proposed an alternative approach to overcome the limitations of CNN based approaches by encoding the spatial features of raw 3D point clouds into undirected symmetrical graph models. These encodings are then combined with a high-dimensional feature vector extracted from a traditional CNN into a localized graph convolution operator that outputs the required 3D segmentation map. We have performed experiments on two standard benchmark datasets (including an outdoor aerial remote sensing dataset and an indoor synthetic dataset). The proposed method achieves on par state-of-the-art accuracy with improved training time and model stability thus indicating strong potential for further research towards a generalized state-of-the-art method for 3D scene understanding.

Table Structure Extraction with Bi-directional Gated Recurrent Unit Networks

Jan 08, 2020

Abstract:Tables present summarized and structured information to the reader, which makes table structure extraction an important part of document understanding applications. However, table structure identification is a hard problem not only because of the large variation in the table layouts and styles, but also owing to the variations in the page layouts and the noise contamination levels. A lot of research has been done to identify table structure, most of which is based on applying heuristics with the aid of optical character recognition (OCR) to hand pick layout features of the tables. These methods fail to generalize well because of the variations in the table layouts and the errors generated by OCR. In this paper, we have proposed a robust deep learning based approach to extract rows and columns from a detected table in document images with a high precision. In the proposed solution, the table images are first pre-processed and then fed to a bi-directional Recurrent Neural Network with Gated Recurrent Units (GRU) followed by a fully-connected layer with soft max activation. The network scans the images from top-to-bottom as well as left-to-right and classifies each input as either a row-separator or a column-separator. We have benchmarked our system on publicly available UNLV as well as ICDAR 2013 datasets on which it outperformed the state-of-the-art table structure extraction systems by a significant margin.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge