Sanket Tavarageri

PolyDL: Polyhedral Optimizations for Creation of High Performance DL primitives

Jun 02, 2020

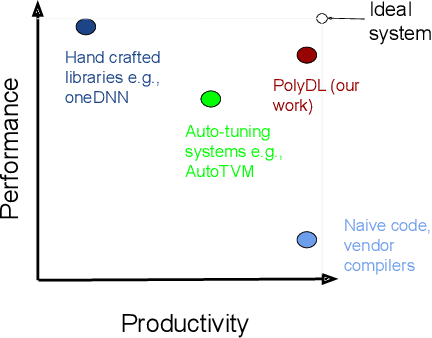

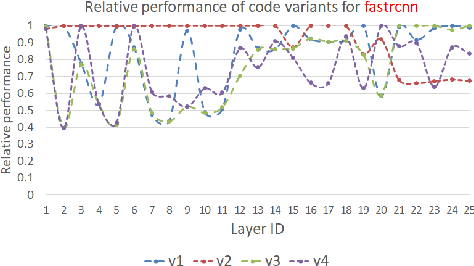

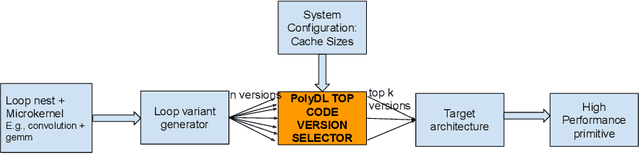

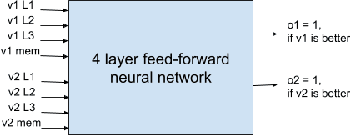

Abstract: Deep Neural Networks (DNNs) have revolutionized many aspects of our lives. The use of DNNs is becoming ubiquitous, including in software for image recognition, speech recognition, speech synthesis, and language translation, to name a few. The training of DNN architectures, however, is computationally expensive. Once the model is created, its use in the intended application (the inference task) is computationally heavy too, and inference needs to be fast for real-time use. To obtain high performance today, the norm is to rely on Deep Learning (DL) primitives that expert programmers have optimized for specific architectures and exposed via libraries. However, given the constant emergence of new DNN architectures, creating hand-optimized code is expensive, slow, and not scalable. To address this performance-productivity challenge, in this paper we present compiler algorithms to automatically generate high-performance implementations of DL primitives that closely match the performance of hand-optimized libraries. We develop novel data reuse analysis algorithms using the polyhedral model to derive efficient execution schedules automatically. In addition, because most DL primitives use some variant of matrix multiplication at their core, we develop a flexible framework in which library implementations of matrix multiplication can be plugged in, in lieu of a subset of the loops. We show that such a hybrid approach, combining a compiler with minimal library use, results in state-of-the-art performance. We also develop compiler algorithms to perform operator fusions that reduce data movement through the memory hierarchy of the computer system.

PolyScientist: Automatic Loop Transformations Combined with Microkernels for Optimization of Deep Learning Primitives

Feb 06, 2020

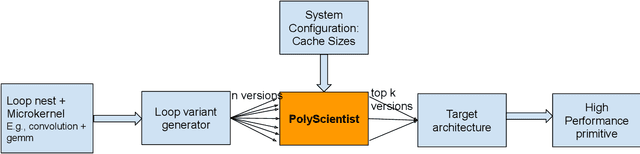

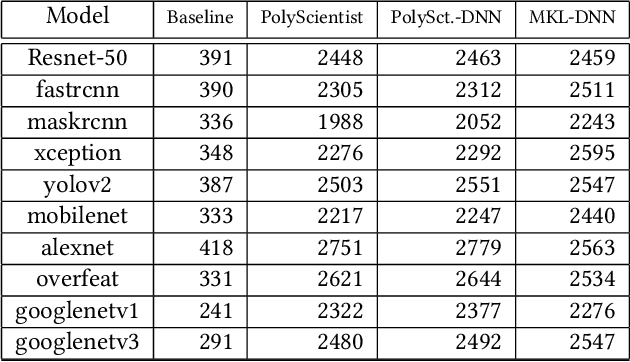

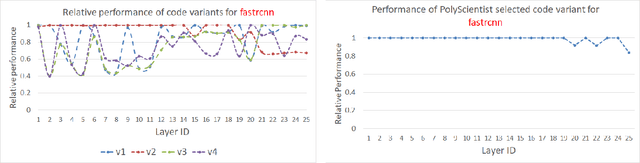

Abstract: At the heart of deep learning training and inference are computationally intensive primitives, such as convolutions, which form the building blocks of deep neural networks. Researchers have taken two distinct approaches to creating high-performance implementations of deep learning kernels: 1) library development, exemplified by Intel MKL-DNN for CPUs, and 2) automatic compilation, represented by the TensorFlow XLA compiler. Both approaches have drawbacks: even though a custom-built library can deliver very good performance, the cost and time of developing the library can be high. Automatic compilation of kernels is attractive, but in practice, to date, automatically generated implementations lag expert-coded kernels in performance by orders of magnitude. In this paper, we develop a hybrid solution to the development of deep learning kernels that achieves the best of both worlds: expert-coded microkernels are used for the innermost loops of kernels, and we use advanced polyhedral technology to automatically tune the outer loops for performance. We design a novel polyhedral-model-based data reuse algorithm to optimize the outer loops of the kernel. Through experimental evaluation on an important class of deep learning primitives, namely convolutions, we demonstrate that the approach we develop attains the same levels of performance as Intel MKL-DNN, a hand-coded deep learning library.
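The hybrid structure described in this abstract (tuned outer loops around a fixed microkernel) can be illustrated with a minimal sketch. It is not the paper's implementation: matrix multiplication stands in for convolution, the names `microkernel` and `tiled_matmul` are hypothetical, the pure-Python inner loops stand in for an expert-coded kernel, and the tile sizes are arbitrary values that a compiler would choose from its data reuse analysis.

```python
def microkernel(a, b, c, i0, i1, j0, j1, p0, p1):
    """Stand-in for an expert-coded microkernel (assumption): it updates
    the tile c[i0:i1][j0:j1] with a[i0:i1][p0:p1] * b[p0:p1][j0:j1]."""
    for i in range(i0, i1):
        for j in range(j0, j1):
            acc = c[i][j]
            for p in range(p0, p1):
                acc += a[i][p] * b[p][j]
            c[i][j] = acc


def tiled_matmul(a, b, tile_m=2, tile_n=2, tile_k=2):
    """Outer tile loops, the part a compiler would reorder and size,
    wrapped around the microkernel. Tile sizes here are illustrative."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i in range(0, m, tile_m):
        for j in range(0, n, tile_n):
            for p in range(0, k, tile_k):
                # min() clips the last tile when a dimension is not
                # a multiple of the tile size.
                microkernel(a, b, c,
                            i, min(i + tile_m, m),
                            j, min(j + tile_n, n),
                            p, min(p + tile_k, k))
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(tiled_matmul(a, b))
```

Changing the order of the three outer loops or the tile sizes leaves the result unchanged but alters which tiles are reused from cache, which is the degree of freedom the outer-loop optimization exploits.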

Automatic Model Parallelism for Deep Neural Networks with Compiler and Hardware Support

Jun 11, 2019

Abstract: Deep neural networks (DNNs) have been enormously successful in tasks that were hitherto in the human-only realm, such as image recognition and language translation. Owing to their success, DNNs are being explored for use in ever more sophisticated tasks. One of the ways that DNNs are made to scale to complex undertakings is by increasing their size: deeper and wider networks can model the additional complexity well. Such large models are trained using model parallelism on multiple compute devices, such as multi-GPU and multi-node systems. In this paper, we develop a compiler-driven approach to achieve model parallelism. We model the computation and communication costs of a dataflow graph that embodies the neural network training process, and then partition the graph using heuristics so that communication between compute devices is minimal and the load is well balanced. Hardware scheduling assistants are proposed to help the compiler fine-tune the distribution of work at runtime.
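The abstract does not specify the partitioning heuristics, so the following is only an illustrative sketch of the general idea: a simple greedy placement that trades off device load against cut communication. The function name `greedy_partition`, the `comm_weight` parameter, and the cost values are all assumptions made for this example.

```python
def greedy_partition(compute_cost, comm_cost, num_devices, comm_weight=1.0):
    """Greedy placement of dataflow-graph nodes onto devices (a generic
    heuristic, not the paper's method).

    compute_cost: dict mapping node -> computation cost
    comm_cost:    dict mapping (node_u, node_v) -> communication cost
    Returns (placement, load): node -> device index, and per-device load.
    """
    load = [0.0] * num_devices
    placement = {}
    # Place the most expensive nodes first so they spread across devices.
    for node in sorted(compute_cost, key=compute_cost.get, reverse=True):
        best_dev, best_score = None, None
        for dev in range(num_devices):
            # Communication incurred if `node` goes on `dev`: edges to
            # already-placed neighbours that live on a different device.
            cut = 0.0
            for (u, v), w in comm_cost.items():
                other = v if u == node else u if v == node else None
                if other is not None and placement.get(other, dev) != dev:
                    cut += w
            score = load[dev] + compute_cost[node] + comm_weight * cut
            if best_score is None or score < best_score:
                best_dev, best_score = dev, score
        placement[node] = best_dev
        load[best_dev] += compute_cost[node]
    return placement, load


compute = {"a": 4, "b": 4, "c": 1, "d": 1}
comm = {("a", "c"): 10, ("b", "d"): 10}
placement, load = greedy_partition(compute, comm, num_devices=2)
print(placement, load)
```

In this toy graph the heavily communicating pairs end up on the same device while the two large nodes are split across devices, which reflects the two goals named in the abstract: low inter-device communication and good load balance.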

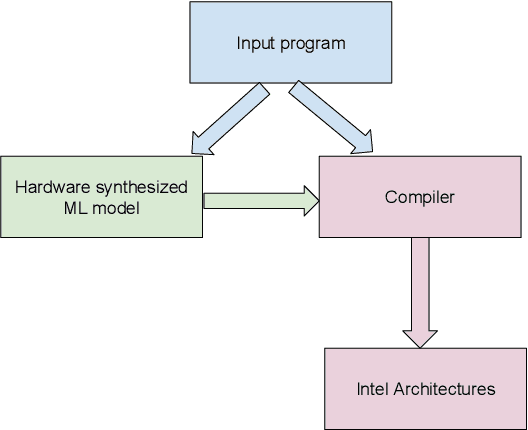

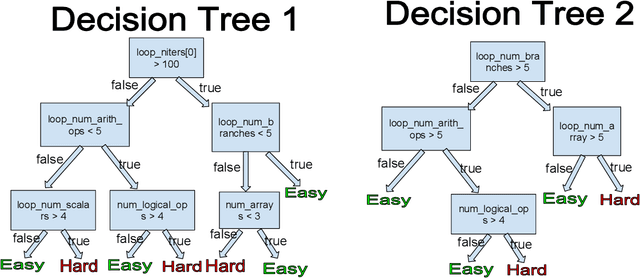

Categorization of Program Regions for Agile Compilation using Machine Learning and Hardware Support

May 29, 2019

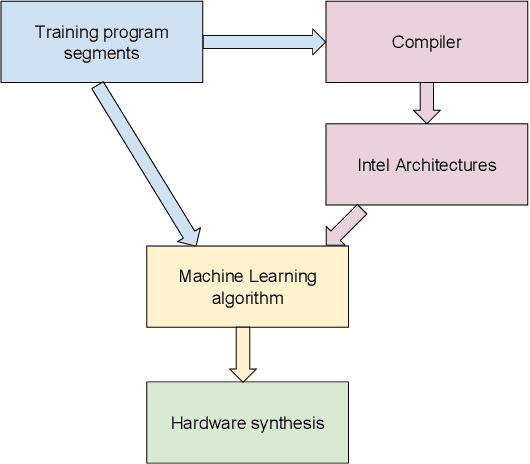

Abstract: A compiler processes code written in a high-level language and produces machine-executable code. Compiler writers often face the challenge of keeping compilation times reasonable, because aggressive optimization passes that could yield high performance are often expensive in terms of running time and memory footprint. Consequently, compiler designers arrive at a compromise: they either simplify the optimization algorithm, which may decrease the performance of the produced code, or restrict the optimization to a subset of the input program, in which case large parts of the input application go unoptimized. The problem we address in this paper is that of keeping compilation times reasonable while at the same time optimizing the input program to the fullest extent possible. Consequently, the performance of the produced code will match the performance obtained when all the aggressive optimization passes are applied over the entire input program.

A Data Analytics Framework for Aggregate Data Analysis

Sep 16, 2018

Abstract: In many contexts, we have access to aggregate data, but individual-level data is unavailable. For example, medical studies sometimes report only aggregate statistics about disease prevalence because of privacy concerns. Even so, it is often desirable, and may in fact be necessary, to infer individual-level characteristics from aggregate data. For instance, other researchers who want to perform a more detailed analysis of disease characteristics would require individual-level data. Similar challenges arise in other fields too, including politics and marketing. In this paper, we present an end-to-end pipeline for processing aggregate data to derive individual-level statistics, and then using the inferred data to train machine learning models to answer questions of interest. We describe a novel algorithm for reconstructing fine-grained data from summary statistics. This step creates multiple candidate datasets, which form the input to the machine learning models. The advantage of the highly parallel architecture we propose is that uncertainty in the generated fine-grained data is compensated for by the use of multiple candidate fine-grained datasets. Consequently, the answers derived from the machine learning models will be more valid and usable. We validate our approach using data from a challenging medical problem called Acute Traumatic Coagulopathy.
