Sang-ho Lee

Asynchronous Edge Learning using Cloned Knowledge Distillation

Oct 22, 2020

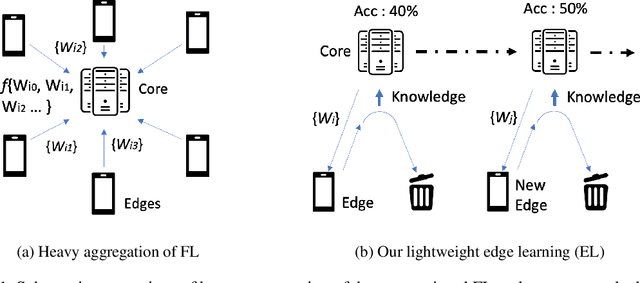

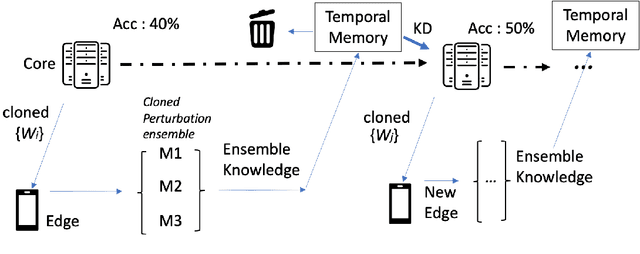

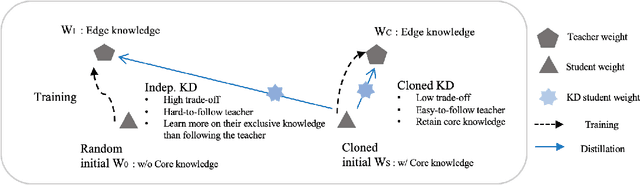

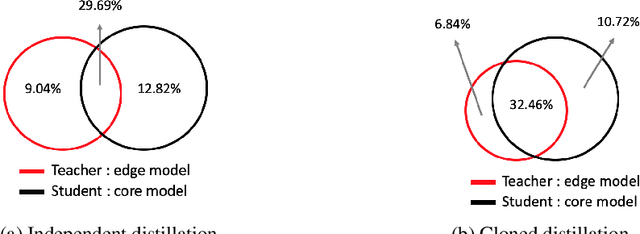

Abstract: With the increasing demand for more and more data, federated learning (FL) methods, which try to utilize highly distributed on-device local data in the training process, have been proposed. However, fledgling services provided by startup companies not only have a limited number of clients, but also have minimal resources for constant communication between the server and multiple clients. In addition, in a real-world environment where the user pool changes dynamically, the FL system must be able to efficiently utilize the rapid inflow and outflow of users, while at the same time experiencing minimal bottlenecks due to network delays of multiple users. In this respect, we amend the federated learning scenario to a more flexible asynchronous edge learning. To solve the aforementioned learning problems, we propose an asynchronous model-based communication method with knowledge distillation. In particular, we dub our knowledge distillation scheme "cloned distillation" and explain how it differs from other knowledge distillation methods. In brief, we found that in knowledge distillation between the teacher and the student there exist two contesting traits in the student: to attend to the teacher's knowledge or to retain its own knowledge exclusive to the teacher. In this edge learning scenario, the attending property should be amplified rather than the retaining property, because teachers are dispatched to the users to learn from them and recollected at the server to teach the core model. Our asynchronous edge learning method can elastically handle the dynamic inflow and outflow of users in a service with minimal communication cost, operate with essentially no bottleneck due to user delay, and protect users' privacy. We also found that it is robust to users who behave abnormally or maliciously.
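
The abstract does not spell out the cloned distillation scheme, so the following is only a minimal sketch of generic temperature-based knowledge distillation, not the authors' method. The function names, the `alpha` weight, and the `temperature` value are assumptions made for illustration. A larger `alpha` puts more weight on matching the teacher, which loosely mirrors the "attending" trait described above.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.9, temperature=2.0):
    """Generic distillation loss (an assumption, not the paper's loss).

    alpha weights the soft term (attend to the teacher);
    1 - alpha weights the hard-label cross-entropy term.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The T^2 factor is the usual rescaling of the soft-target term.
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard
```

With identical student and teacher logits and `alpha=1.0`, the loss is zero, since the student already reproduces the teacher's distribution.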

URNet : User-Resizable Residual Networks with Conditional Gating Module

Jan 15, 2019

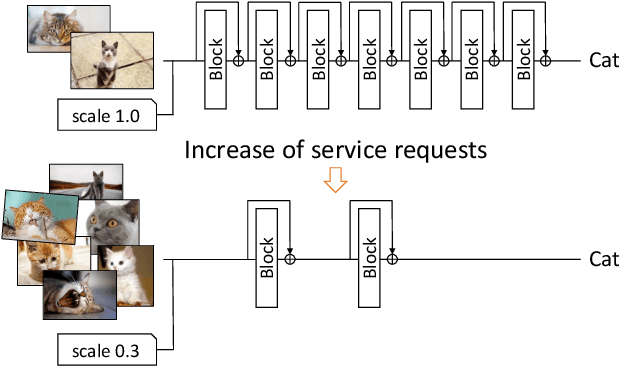

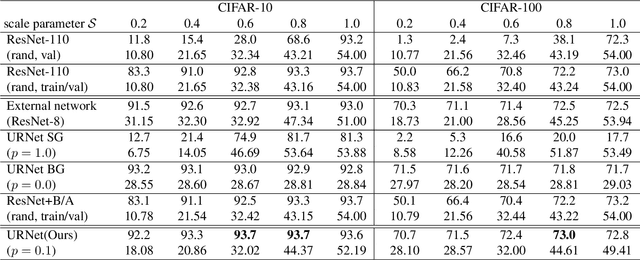

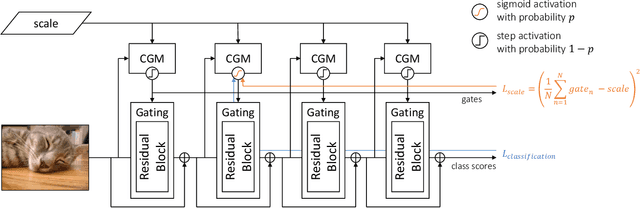

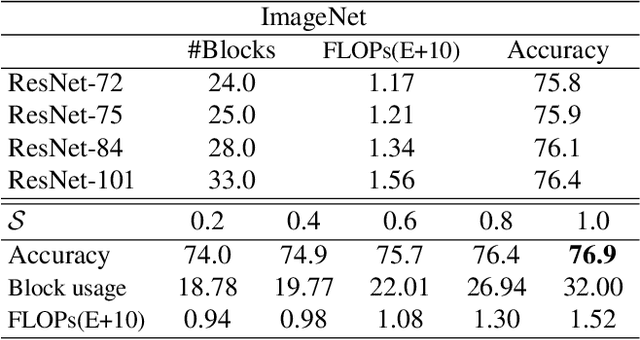

Abstract: Convolutional Neural Networks are widely used to process spatial scenes, but their computational cost is fixed and depends on the structure of the network used. There are methods to reduce the cost by compressing networks or varying their computational paths dynamically according to the input image. However, since the user cannot control the size of the learned model, it is difficult to respond dynamically if the amount of service requests suddenly increases. We propose User-Resizable Residual Networks (URNet), which allow the user to adjust the scale of the network as needed during evaluation. URNet includes a Conditional Gating Module (CGM) that determines the use of each residual block according to the input image and the desired scale. CGM is trained in a supervised manner using the newly proposed scale loss and its corresponding training methods. URNet can control the amount of computation according to the user's demand without degrading the accuracy significantly. It can also be used as a general compression method by fixing the scale size during training. In the experiments on ImageNet, URNet based on ResNet-101 maintains the accuracy of the baseline even when resizing it to approximately 80% of the original network, and demonstrates only about 1% accuracy degradation when using about 65% of the computation.
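
The gating idea can be illustrated with a toy sketch. Everything below is an assumption for illustration: the abstract does not define the CGM architecture or the scale loss, so `gated_residual_forward` simply skips blocks whose gate is 0, and `scale_penalty` is a hypothetical squared-error stand-in that compares average block usage with the requested scale.

```python
def gated_residual_forward(x, blocks, gates):
    """Apply y = x + F(x) for each block whose gate is on; skip the rest.

    x      : list of floats (a toy feature vector)
    blocks : list of callables mapping a vector to a same-length vector
    gates  : list of 0/1 decisions, one per block
    Returns the output vector and the fraction of blocks executed.
    """
    executed = 0
    for block, gate in zip(blocks, gates):
        if gate:
            residual = block(x)
            x = [xi + ri for xi, ri in zip(x, residual)]
            executed += 1
    return x, executed / len(blocks)


def scale_penalty(gate_probs, target_scale):
    """Hypothetical penalty: squared gap between mean usage and target."""
    usage = sum(gate_probs) / len(gate_probs)
    return (usage - target_scale) ** 2


# Toy usage: four blocks that each add 1.0 to every feature.
blocks = [lambda v: [1.0 for _ in v]] * 4
output, usage = gated_residual_forward([0.0, 0.0], blocks, [1, 0, 1, 0])
```

Skipped blocks cost no computation, so the fraction of gates that are on acts as a rough proxy for the network scale the user requests.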

Image Restoration by Estimating Frequency Distribution of Local Patches

May 23, 2018

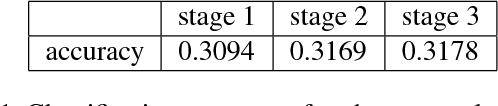

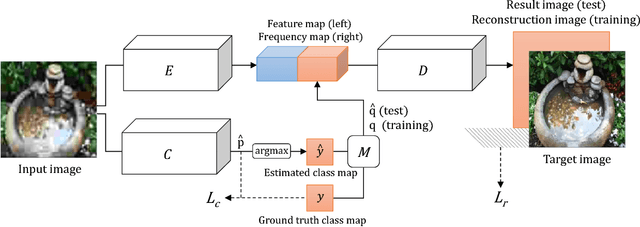

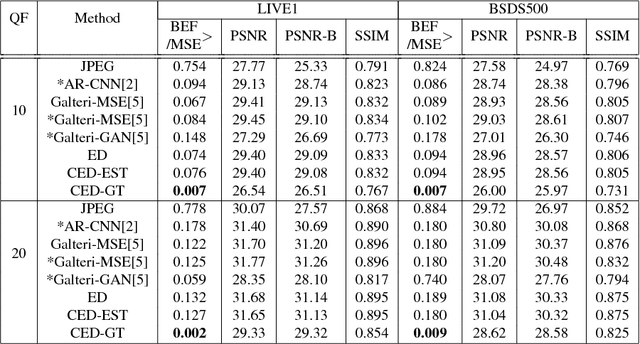

Abstract: In this paper, we propose a method to solve the image restoration problem, which tries to restore the details of a corrupted image, especially details lost to JPEG compression. We treat an image in the frequency domain to explicitly restore the frequency components lost during image compression. In doing so, the distribution in the frequency domain is learned using the cross-entropy loss. Unlike recent approaches, we reconstruct the details of an image without using adversarial training. Rather, the image restoration problem is treated as a classification problem to determine the frequency coefficient for each frequency band in an image patch. We show that the proposed method effectively restores a JPEG-compressed image with more detailed high-frequency components, making the restored image more vivid.
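
Treating a frequency coefficient as a classification target can be sketched as follows. This is a minimal illustration under stated assumptions: the coefficient range (`lo`, `hi`), the number of bins, and the helper names are hypothetical and are not taken from the paper.

```python
import math


def coefficient_to_class(coeff, lo=-64.0, hi=64.0, num_bins=16):
    """Map a real-valued frequency coefficient to a bin index (the label)."""
    clipped = min(max(coeff, lo), hi)
    width = (hi - lo) / num_bins
    return min(int((clipped - lo) / width), num_bins - 1)


def class_to_coefficient(index, lo=-64.0, hi=64.0, num_bins=16):
    """Decode a bin index back to the coefficient at the bin center."""
    width = (hi - lo) / num_bins
    return lo + (index + 0.5) * width


def cross_entropy(probs, target_index):
    """Cross-entropy of a predicted bin distribution against the true bin."""
    return -math.log(probs[target_index])
```

A model trained this way would output one distribution over bins per frequency band, and the restored coefficient could be read off from the most likely bin or from the expected value over bins.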
