Sandro Papais

ForeSight: Multi-View Streaming Joint Object Detection and Trajectory Forecasting

Aug 09, 2025

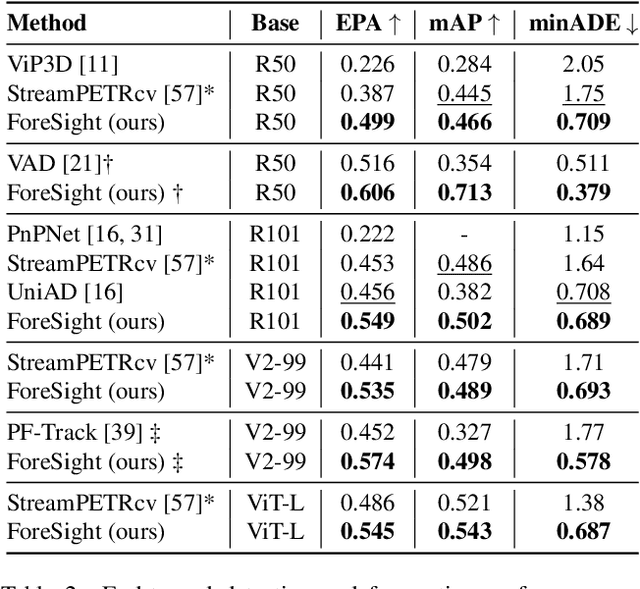

Abstract: We introduce ForeSight, a novel joint detection and forecasting framework for vision-based 3D perception in autonomous vehicles. Traditional approaches treat detection and forecasting as separate sequential tasks, limiting their ability to leverage temporal cues. ForeSight addresses this limitation with a multi-task streaming and bidirectional learning approach, allowing detection and forecasting to share query memory and propagate information seamlessly. The forecast-aware detection transformer enhances spatial reasoning by integrating trajectory predictions from a multiple hypothesis forecast memory queue, while the streaming forecast transformer improves temporal consistency using past forecasts and refined detections. Unlike tracking-based methods, ForeSight eliminates the need for explicit object association, reducing error propagation with a tracking-free model that efficiently scales across multi-frame sequences. Experiments on the nuScenes dataset show that ForeSight achieves state-of-the-art performance with an EPA of 54.9%, surpassing previous methods by 9.3%, while also attaining the best mAP and minADE among multi-view detection and forecasting models.
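The abstract reports minADE as one of its forecasting metrics. As a rough illustration only, the sketch below computes a minimum average displacement error over several hypothesized trajectories for one object. The function names, the 2D waypoint representation, and the example numbers are assumptions made for this sketch; the benchmark's exact protocol (forecast horizon, number of hypotheses, matching rules) is not stated in the abstract and is not reproduced here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) position in metres; a simplifying assumption


def average_displacement_error(pred: Sequence[Point], truth: Sequence[Point]) -> float:
    """Mean Euclidean distance between corresponding waypoints of two trajectories."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("trajectories must be non-empty and of equal length")
    return sum(math.dist(p, t) for p, t in zip(pred, truth)) / len(truth)


def min_ade(hypotheses: Sequence[Sequence[Point]], truth: Sequence[Point]) -> float:
    """Lowest average displacement error among all hypothesized trajectories."""
    if not hypotheses:
        raise ValueError("at least one hypothesis is required")
    return min(average_displacement_error(h, truth) for h in hypotheses)


# Hypothetical example: the object actually drives straight along the x axis.
ground_truth = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
forecasts = [
    [(1.0, 0.0), (2.0, 0.0), (3.0, 1.0)],  # nearly straight, drifts at the last step
    [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)],  # diverging diagonal
]
best = min_ade(forecasts, ground_truth)
```

Because only the best hypothesis counts, a multi-hypothesis forecaster is not penalized for proposing additional plausible futures that did not occur.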

Deployable and Generalizable Motion Prediction: Taxonomy, Open Challenges and Future Directions

May 14, 2025

Abstract: Motion prediction, the anticipation of future agent states or scene evolution, is rooted in human cognition, bridging perception and decision-making. It enables intelligent systems, such as robots and self-driving cars, to act safely in dynamic, human-involved environments, and informs broader time-series reasoning challenges. With advances in methods, representations, and datasets, the field has seen rapid progress, reflected in quickly evolving benchmark results. Yet, when state-of-the-art methods are deployed in the real world, they often struggle to generalize to open-world conditions and fall short of deployment standards. This reveals a gap between research benchmarks, which are often idealized or ill-posed, and real-world complexity. To address this gap, this survey revisits the generalization and deployability of motion prediction models, with an emphasis on the applications of robotics, autonomous driving, and human motion. We first offer a comprehensive taxonomy of motion prediction methods, covering representations, modeling strategies, application domains, and evaluation protocols. We then study two key challenges: (1) how to make motion prediction models meet realistic deployment standards, where motion prediction does not act in a vacuum but functions as one module of a closed-loop autonomy stack, taking input from localization and perception and informing downstream planning and control; and (2) how to generalize motion prediction models from limited seen scenarios and datasets to open-world settings. Throughout the paper, we highlight critical open challenges to guide future work, aiming to recalibrate the community's efforts and foster progress that is not only measurable but also meaningful for real-world applications.

SWTrack: Multiple Hypothesis Sliding Window 3D Multi-Object Tracking

Feb 27, 2024

Abstract: Modern robotic systems must operate in dense dynamic environments, which demands highly accurate real-time track identification and estimation. For 3D multi-object tracking, recent approaches process a single measurement frame recursively with greedy association and are prone to errors in ambiguous association decisions. Our method, Sliding Window Tracker (SWTrack), yields more accurate association and state estimation by batch processing many frames of sensor data while being capable of running online in real time. The most probable track associations are identified by evaluating all possible track hypotheses across the temporal sliding window. A novel graph optimization approach is formulated to solve the multidimensional assignment problem, with lifted graph edges introduced to account for missed detections and graph sparsity enforced to retain real-time efficiency. We evaluate our SWTrack implementation on the nuScenes autonomous driving dataset to demonstrate improved tracking performance.

aUToLights: A Robust Multi-Camera Traffic Light Detection and Tracking System

May 15, 2023

Abstract: Following four successful years in the SAE AutoDrive Challenge Series I, the University of Toronto is participating in the Series II competition to develop a Level 4 autonomous passenger vehicle capable of handling various urban driving scenarios by 2025. Accurate detection of traffic lights and correct identification of their states is essential for safe autonomous operation in cities. Herein, we describe our recently redesigned traffic light perception system for autonomous vehicles like the University of Toronto's self-driving car, Artemis. Similar to most traffic light perception systems, we rely primarily on camera-based object detectors. We deploy the YOLOv5 detector for bounding box regression and traffic light classification across multiple cameras and fuse the observations. To improve robustness, we incorporate priors from high-definition semantic maps and perform state filtering using hidden Markov models. We demonstrate a multi-camera, real-time-capable traffic light perception pipeline that handles complex situations including multiple visible intersections, traffic light variations, temporary occlusion, and flashing light states. To validate our system, we collected and annotated a varied dataset incorporating flashing states and a range of occlusion types. Our results show superior performance in challenging real-world scenarios compared to single-frame, single-camera object detection.
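The abstract mentions state filtering with hidden Markov models. The sketch below shows one generic way such a filter can smooth noisy per-frame classifications; it is not the authors' implementation. The three-state set, the transition and emission probabilities, and the function names are all hypothetical values chosen for illustration, and flashing states are left out for brevity.

```python
from typing import Dict, Optional, Sequence

STATES = ("red", "yellow", "green")

# Hypothetical transition model: TRANSITION[previous][next] between frames.
TRANSITION = {
    "red": {"red": 0.90, "yellow": 0.01, "green": 0.09},
    "yellow": {"red": 0.20, "yellow": 0.79, "green": 0.01},
    "green": {"red": 0.01, "yellow": 0.09, "green": 0.90},
}

# Hypothetical detector confusion model: EMISSION[true_state][observed_label].
EMISSION = {
    "red": {"red": 0.80, "yellow": 0.15, "green": 0.05},
    "yellow": {"red": 0.15, "yellow": 0.70, "green": 0.15},
    "green": {"red": 0.05, "yellow": 0.15, "green": 0.80},
}

Belief = Dict[str, float]


def filter_step(belief: Belief, observation: Optional[str]) -> Belief:
    """One forward-algorithm step: predict with TRANSITION, then update with EMISSION."""
    predicted = {
        s: sum(belief[p] * TRANSITION[p][s] for p in STATES) for s in STATES
    }
    if observation is None:
        # Occluded frame: no measurement, so keep the predicted belief.
        return predicted
    unnormalized = {s: predicted[s] * EMISSION[s][observation] for s in STATES}
    total = sum(unnormalized.values())
    return {s: v / total for s, v in unnormalized.items()}


def filter_sequence(observations: Sequence[Optional[str]]) -> Belief:
    """Run the filter over a sequence of labels, starting from a uniform belief."""
    belief = {s: 1.0 / len(STATES) for s in STATES}
    for obs in observations:
        belief = filter_step(belief, obs)
    return belief


# A single spurious "red" and an occluded frame (None) within a run of "green".
belief = filter_sequence(["green", "green", "red", "green", None])
most_likely = max(belief, key=belief.get)
```

With these assumed probabilities, one contradictory frame lowers confidence in the current state without flipping it, and an occluded frame is handled by skipping the measurement update.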
