Sandra Smith

Human-level Performance On Automatic Head Biometrics In Fetal Ultrasound Using Fully Convolutional Neural Networks

Apr 24, 2018

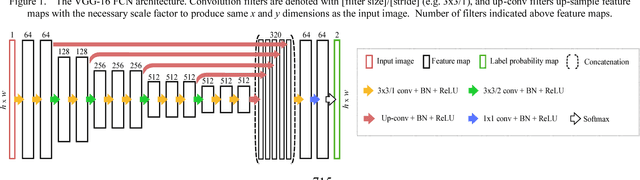

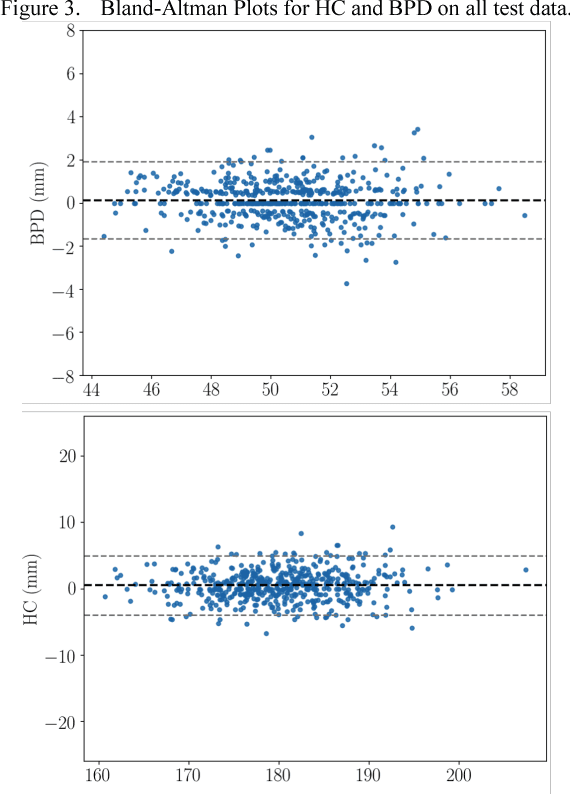

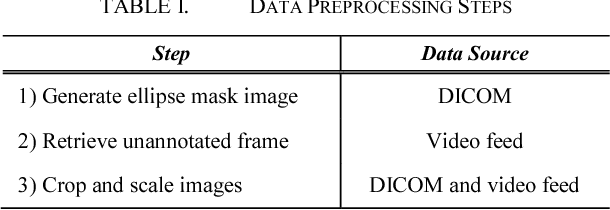

Abstract: Measurement of head biometrics from fetal ultrasonography images is of key importance in monitoring the healthy development of fetuses. However, the accurate measurement of relevant anatomical structures is subject to large inter-observer variability in the clinic. To address this issue, an automated method utilizing Fully Convolutional Networks (FCN) is proposed to determine measurements of fetal head circumference (HC) and biparietal diameter (BPD). An FCN was trained on approximately 2000 2D ultrasound images of the head with annotations provided by 45 different sonographers during routine screening examinations to perform semantic segmentation of the head. An ellipse is fitted to the resulting segmentation contours to mimic the annotation typically produced by a sonographer. The model's performance was compared with inter-observer variability, where two experts manually annotated 100 test images. Mean absolute model-expert error was slightly better than inter-observer error for HC (1.99mm vs 2.16mm), and comparable for BPD (0.61mm vs 0.59mm), as well as Dice coefficient (0.980 vs 0.980). Our results demonstrate that the model performs at a level similar to a human expert, and learns to produce accurate predictions from a large dataset annotated by many sonographers. Additionally, measurements are generated in near real-time at 15fps on a GPU, which could speed up clinical workflow for both skilled and trainee sonographers.
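The abstract above reports a Dice coefficient and derives HC and BPD from an ellipse fitted to the segmentation. A minimal sketch of those two computations might look like the following. It is not the authors' implementation: the function names are hypothetical, the circumference uses Ramanujan's approximation (the abstract does not say which formula was used), and reading BPD as the ellipse's minor-axis length is an assumption made here for illustration.

```python
import math


def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two binary masks given as flat sequences of 0/1."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def ellipse_circumference(semi_major, semi_minor):
    """Approximate ellipse perimeter (Ramanujan's first approximation)."""
    a, b = semi_major, semi_minor
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))


def head_biometrics(semi_major_mm, semi_minor_mm):
    """Derive HC and BPD (in mm) from the semi-axes of a fitted ellipse.

    Assumption: BPD is taken as the full minor-axis length of the ellipse.
    """
    return {
        "hc_mm": ellipse_circumference(semi_major_mm, semi_minor_mm),
        "bpd_mm": 2.0 * semi_minor_mm,
    }
```

For a circle the approximation reduces to the exact perimeter, which makes a convenient sanity check. In practice the semi-axes would come from an ellipse-fitting routine applied to the predicted contour, with pixel lengths converted to millimetres using the scan's pixel spacing.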

SonoNet: Real-Time Detection and Localisation of Fetal Standard Scan Planes in Freehand Ultrasound

Jul 25, 2017

Abstract: Identifying and interpreting fetal standard scan planes during 2D ultrasound mid-pregnancy examinations are highly complex tasks which require years of training. Apart from guiding the probe to the correct location, it can be equally difficult for a non-expert to identify relevant structures within the image. Automatic image processing can provide tools to help experienced as well as inexperienced operators with these tasks. In this paper, we propose a novel method based on convolutional neural networks which can automatically detect 13 fetal standard views in freehand 2D ultrasound data as well as provide a localisation of the fetal structures via a bounding box. An important contribution is that the network learns to localise the target anatomy using weak supervision based on image-level labels only. The network architecture is designed to operate in real-time while providing optimal output for the localisation task. We present results for real-time annotation, retrospective frame retrieval from saved videos, and localisation on a very large and challenging dataset consisting of images and video recordings of full clinical anomaly screenings. We found that the proposed method achieved an average F1-score of 0.798 in a realistic classification experiment modelling real-time detection, and obtained a 90.09% accuracy for retrospective frame retrieval. Moreover, an accuracy of 77.8% was achieved on the localisation task.