Sandra Milligan

TopicResponse: A Marriage of Topic Modelling and Rasch Modelling for Automatic Measurement in MOOCs

Mar 20, 2017

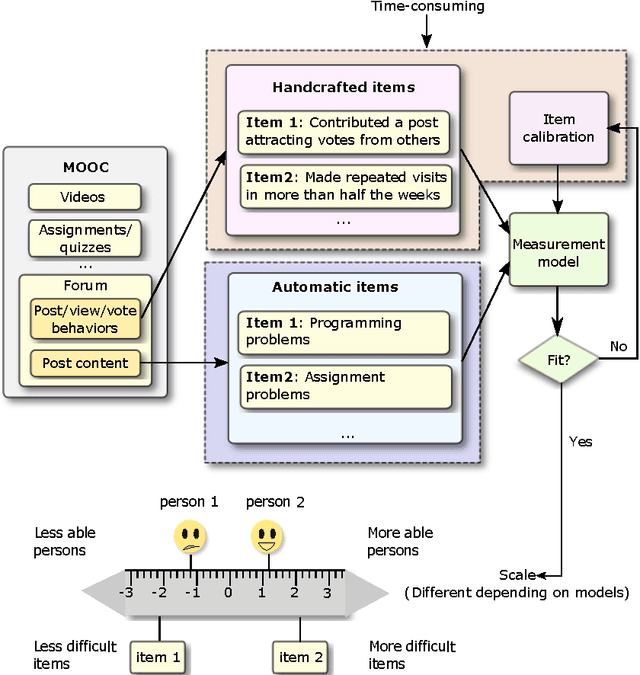

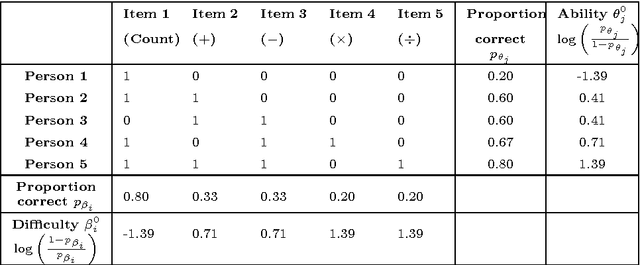

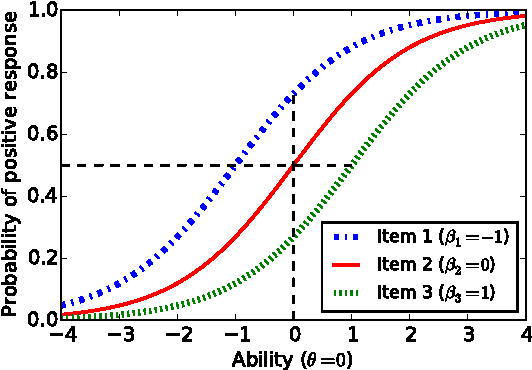

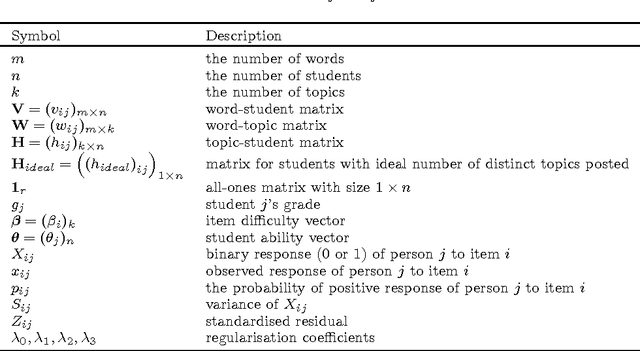

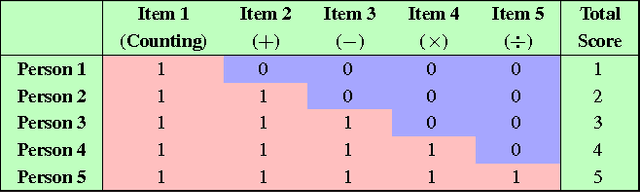

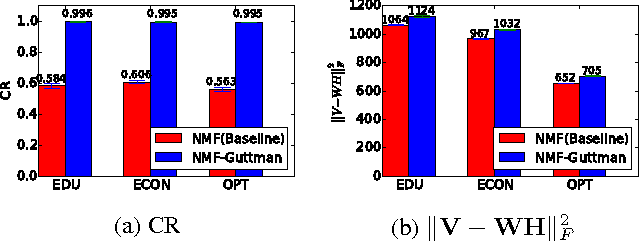

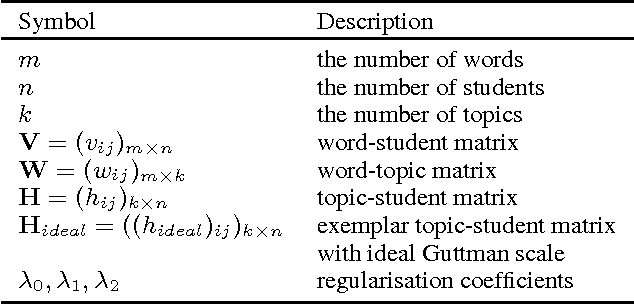

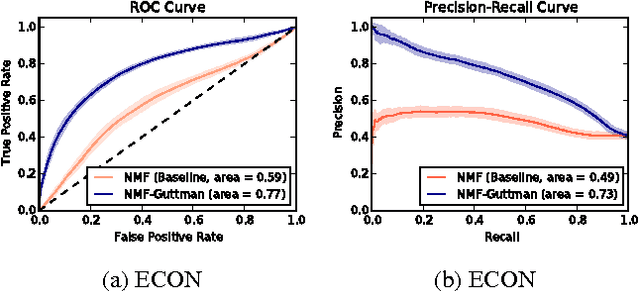

Abstract: This paper explores the suitability of using automatically discovered topics from MOOC discussion forums for modelling students' academic abilities. The Rasch model from psychometrics is a popular generative probabilistic model that relates latent student skill, latent item difficulty, and observed student-item responses within a principled, unified framework. According to scholarly educational theory, discovered topics can be regarded as appropriate measurement items if (1) students' participation across the discovered topics is well fit by the Rasch model, and (2) the topics are interpretable to subject-matter experts as being educationally meaningful. Such Rasch-scaled topics, with associated difficulty levels, could benefit curriculum refinement, student assessment and personalised feedback. The remaining technical challenge is to discover meaningful topics that simultaneously achieve good statistical fit with the Rasch model. To address this challenge, we combine the Rasch model with non-negative matrix factorisation-based topic modelling, jointly fitting both models. We demonstrate the suitability of our approach with quantitative experiments on data from three Coursera MOOCs, and with qualitative survey results on topic interpretability on a Discrete Optimisation MOOC.
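As a rough illustration of the relationship the abstract describes, the standard dichotomous Rasch model gives the probability of a positive response as a logistic function of the gap between a student's skill and an item's difficulty. The sketch below is not the paper's joint fitting procedure; the function name `rasch_probability` and the example values are assumptions made for illustration, with an "item" standing in for a discovered topic and a "response" for participation in it.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Probability of a positive response under the dichotomous Rasch model.

    ability and difficulty are latent values on the same logit scale.
    (Hypothetical helper; names are illustrative, not from the paper.)
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# Illustrative values only: one student, three topics of increasing difficulty.
student_ability = 0.5
topic_difficulties = [-1.0, 0.5, 2.0]
probabilities = [rasch_probability(student_ability, d) for d in topic_difficulties]
```

When skill equals difficulty the probability is exactly 0.5, and it falls as the item becomes harder relative to the student.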

MOOCs Meet Measurement Theory: A Topic-Modelling Approach

Nov 25, 2015

Abstract: This paper adapts topic models to the psychometric testing of MOOC students based on their online forum postings. Measurement theory from education and psychology provides statistical models for quantifying a person's attainment of intangible attributes such as attitudes, abilities or intelligence. Such models infer latent skill levels by relating them to individuals' observed responses on a series of items such as quiz questions. The set of items can be used to measure a latent skill if individuals' responses on them conform to a Guttman scale. Such well-scaled items differentiate between individuals, and the inferred levels span the entire range from the most basic to the most advanced. In practice, education researchers manually devise items (quiz questions) while optimising for well-scaled conformance. Because this process is costly and requires expertise, psychometric testing has found limited use in everyday teaching. We aim to develop usable measurement models for highly instrumented MOOC delivery platforms, by using participation in automatically extracted online forum topics as items. The challenge is to formalise the Guttman scale educational constraint and incorporate it into topic models. To favour topics that automatically conform to a Guttman scale, we introduce a novel regularisation into non-negative matrix factorisation-based topic modelling. We demonstrate the suitability of our approach with both quantitative experiments on three Coursera MOOCs and a qualitative survey of topic interpretability on two MOOCs, conducted through domain-expert interviews.
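To make the Guttman scale condition concrete: in a perfectly scaled binary response matrix, once items are ordered from easiest to hardest, no person responds positively to a harder item while missing an easier one. The sketch below counts such violations. It is a generic check, not the regularisation the paper introduces; the function name `guttman_errors`, the use of column totals as a proxy for item easiness, and the toy matrices are all assumptions made for illustration.

```python
def guttman_errors(responses: list[list[int]]) -> int:
    """Count Guttman errors in a binary person-by-item response matrix.

    Items are ordered from easiest to hardest using their column totals
    (an assumed proxy for difficulty). An error is a pair of items where
    a person misses the easier item but responds to the harder one.
    """
    n_items = len(responses[0])
    totals = [sum(row[j] for row in responses) for j in range(n_items)]
    # Most-endorsed item first; ties keep their original column order.
    order = sorted(range(n_items), key=lambda j: -totals[j])
    errors = 0
    for row in responses:
        ordered = [row[j] for j in order]
        for easy in range(n_items):
            for hard in range(easy + 1, n_items):
                if ordered[easy] == 0 and ordered[hard] == 1:
                    errors += 1
    return errors


# Toy data: rows are students, columns are topics (1 = participated).
perfect_scale = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
noisy_scale = [[1, 1, 1], [1, 1, 0], [0, 0, 1], [1, 0, 0]]
```

A count of zero means the matrix conforms exactly to a Guttman scale; larger counts indicate weaker conformance.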
