Sandhya Saisubramanian

Multi-Objective Multi-Agent Path Finding with Lexicographic Cost Preferences

Oct 08, 2025

Abstract:Many real-world scenarios require multiple agents to coordinate in shared environments, while balancing trade-offs between multiple, potentially competing objectives. Current multi-objective multi-agent path finding (MO-MAPF) algorithms typically produce conflict-free plans by computing Pareto frontiers. They do not explicitly optimize for user-defined preferences, even when the preferences are available, and scale poorly with the number of objectives. We propose a lexicographic framework for modeling MO-MAPF, along with an algorithm \textit{Lexicographic Conflict-Based Search} (LCBS) that directly computes a single solution aligned with a lexicographic preference over objectives. LCBS integrates a priority-aware low-level $A^*$ search with conflict-based search, avoiding Pareto frontier construction and enabling efficient planning guided by preference over objectives. We provide insights into optimality and scalability, and empirically demonstrate that LCBS computes optimal solutions while scaling to instances with up to ten objectives -- far beyond the limits of existing MO-MAPF methods. Evaluations on standard and randomized MAPF benchmarks show consistently higher success rates against state-of-the-art baselines, especially with increasing number of objectives.
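
To make the notion of a lexicographic preference over objectives concrete, the following is a minimal sketch of how two cost vectors could be compared under such an ordering. It is not the LCBS algorithm: the function names, the tolerance `tol`, and the three example objectives (path length, risk, energy) are assumptions made only for illustration.

```python
from typing import Dict, Sequence, Tuple


def lex_compare(a: Sequence[float], b: Sequence[float], tol: float = 1e-9) -> int:
    """Compare two cost vectors ordered from most to least important objective.

    Returns -1 if `a` is preferred, 1 if `b` is preferred, 0 if they tie.
    """
    if len(a) != len(b):
        raise ValueError("cost vectors must have the same number of objectives")
    for x, y in zip(a, b):
        # The first objective on which the vectors differ decides the outcome.
        if x < y - tol:
            return -1
        if x > y + tol:
            return 1
    return 0


def lex_best(candidates: Dict[str, Sequence[float]]) -> Tuple[str, Sequence[float]]:
    """Return the name and cost of the lexicographically smallest candidate."""
    if not candidates:
        raise ValueError("no candidates given")
    best_name = None
    for name, cost in candidates.items():
        if best_name is None or lex_compare(cost, candidates[best_name]) < 0:
            best_name = name
    return best_name, candidates[best_name]


# Hypothetical plans with costs (path length, risk, energy), in priority order.
plans = {
    "short_but_risky": (10.0, 4.0, 2.0),
    "short_and_safe": (10.0, 1.0, 7.0),
    "long_and_safe": (12.0, 0.0, 0.0),
}
best_plan, best_cost = lex_best(plans)
print(best_plan, best_cost)
```

Here `short_and_safe` is selected: it ties with `short_but_risky` on the first objective and wins on the second, while `long_and_safe` is ruled out by the first objective even though it is better on the remaining two. A single preferred solution is picked without enumerating a Pareto frontier.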

Calibrating Biophysical Models for Grape Phenology Prediction via Multi-Task Learning

Aug 05, 2025

Abstract:Accurate prediction of grape phenology is essential for timely vineyard management decisions, such as scheduling irrigation and fertilization, to maximize crop yield and quality. While traditional biophysical models calibrated on historical field data can be used for season-long predictions, they lack the precision required for fine-grained vineyard management. Deep learning methods are a compelling alternative but their performance is hindered by sparse phenology datasets, particularly at the cultivar level. We propose a hybrid modeling approach that combines multi-task learning with a recurrent neural network to parameterize a differentiable biophysical model. By using multi-task learning to predict the parameters of the biophysical model, our approach enables shared learning across cultivars while preserving biological structure, thereby improving the robustness and accuracy of predictions. Empirical evaluation using real-world and synthetic datasets demonstrates that our method significantly outperforms both conventional biophysical models and baseline deep learning approaches in predicting phenological stages, as well as other crop state variables such as cold-hardiness and wheat yield.

Learning with Expert Abstractions for Efficient Multi-Task Continuous Control

Mar 19, 2025

Abstract:Decision-making in complex, continuous multi-task environments is often hindered by the difficulty of obtaining accurate models for planning and the inefficiency of learning purely from trial and error. While precise environment dynamics may be hard to specify, human experts can often provide high-fidelity abstractions that capture the essential high-level structure of a task and user preferences in the target environment. Existing hierarchical approaches often target discrete settings and do not generalize across tasks. We propose a hierarchical reinforcement learning approach that addresses these limitations by dynamically planning over the expert-specified abstraction to generate subgoals to learn a goal-conditioned policy. To overcome the challenges of learning under sparse rewards, we shape the reward based on the optimal state value in the abstract model. This structured decision-making process enhances sample efficiency and facilitates zero-shot generalization. Our empirical evaluation on a suite of procedurally generated continuous control environments demonstrates that our approach outperforms existing hierarchical reinforcement learning methods in terms of sample efficiency, task completion rate, scalability to complex tasks, and generalization to novel scenarios.
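
One common way to shape a reward from a state value is potential-based shaping, where the optimal value of the abstract state serves as the potential. The abstract does not state which form the authors use, so the sketch below is an assumption: the shaping rule `r + gamma * phi(s') - phi(s)`, the `to_abstract` mapping, and the room names and values are all hypothetical.

```python
from typing import Callable, Dict, Hashable, Tuple

State = Tuple[float, ...]


def shaped_reward(
    reward: float,
    state: State,
    next_state: State,
    abstract_value: Dict[Hashable, float],
    to_abstract: Callable[[State], Hashable],
    gamma: float = 0.99,
) -> float:
    """Potential-based shaping with the abstract optimal value as the potential."""
    phi_s = abstract_value[to_abstract(state)]
    phi_next = abstract_value[to_abstract(next_state)]
    # Transitions that move toward higher-value abstract states earn a bonus.
    return reward + gamma * phi_next - phi_s


# Hypothetical abstraction: a 1-D corridor split into two rooms at x = 5.
abstract_value = {"room_a": 0.5, "room_b": 1.0}


def to_abstract(state: State) -> str:
    return "room_a" if state[0] < 5.0 else "room_b"


# A sparse-reward step that crosses from room_a into room_b.
bonus_step = shaped_reward(0.0, (4.0,), (6.0,), abstract_value, to_abstract, gamma=0.9)
# A step that stays inside room_a.
same_room_step = shaped_reward(0.0, (1.0,), (2.0,), abstract_value, to_abstract, gamma=0.9)
print(bonus_step, same_room_step)
```

Under this rule the agent receives a learning signal when it crosses into a more valuable abstract state, even though the environment reward is zero on that step.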

WOFOSTGym: A Crop Simulator for Learning Annual and Perennial Crop Management Strategies

Feb 26, 2025

Abstract:We introduce WOFOSTGym, a novel crop simulation environment designed to train reinforcement learning (RL) agents to optimize agromanagement decisions for annual and perennial crops in single and multi-farm settings. Effective crop management requires optimizing yield and economic returns while minimizing environmental impact, a complex sequential decision-making problem well suited for RL. However, the lack of simulators for perennial crops in multi-farm contexts has hindered RL applications in this domain. Existing crop simulators also do not support multiple annual crops. WOFOSTGym addresses these gaps by supporting 23 annual crops and two perennial crops, enabling RL agents to learn diverse agromanagement strategies in multi-year, multi-crop, and multi-farm settings. Our simulator offers a suite of challenging tasks for learning under partial observability, non-Markovian dynamics, and delayed feedback. WOFOSTGym's standard RL interface allows researchers without agricultural expertise to explore a wide range of agromanagement problems. Our experiments demonstrate the learned behaviors across various crop varieties and soil types, highlighting WOFOSTGym's potential for advancing RL-driven decision support in agriculture.

Adaptive Querying for Reward Learning from Human Feedback

Dec 11, 2024

Abstract:Learning from human feedback is a popular approach to train robots to adapt to user preferences and improve safety. Existing approaches typically consider a single querying (interaction) format when seeking human feedback and do not leverage multiple modes of user interaction with a robot. We examine how to learn a penalty function associated with unsafe behaviors, such as side effects, using multiple forms of human feedback, by optimizing the query state and feedback format. Our framework for adaptive feedback selection enables querying for feedback in critical states in the most informative format, while accounting for the cost and probability of receiving feedback in a certain format. We employ an iterative, two-phase approach which first selects critical states for querying, and then uses information gain to select a feedback format for querying across the sampled critical states. Our evaluation in simulation demonstrates the sample efficiency of our approach.
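
As a rough illustration of choosing a feedback format by information gain while accounting for cost and response probability, the sketch below scores each format by its expected entropy reduction over a belief about the penalty, weighted by the chance of getting an answer and divided by its cost. This scoring rule, the format names, and all numbers are assumptions for illustration; the abstract does not specify how the paper combines these quantities.

```python
import math
from typing import Dict, List, NamedTuple


class FeedbackFormat(NamedTuple):
    response_prob: float            # chance the human answers in this format
    cost: float                     # effort of answering (hypothetical units)
    outcome_probs: List[float]      # probability of each possible answer
    posteriors: List[List[float]]   # belief over penalty levels after each answer


def entropy(belief: List[float]) -> float:
    """Shannon entropy in bits of a discrete belief."""
    return -sum(p * math.log2(p) for p in belief if p > 0.0)


def expected_information_gain(prior: List[float], fmt: FeedbackFormat) -> float:
    """Prior entropy minus the expected posterior entropy over possible answers."""
    expected_posterior = sum(
        w * entropy(post) for w, post in zip(fmt.outcome_probs, fmt.posteriors)
    )
    return entropy(prior) - expected_posterior


def select_format(prior: List[float], formats: Dict[str, FeedbackFormat]) -> str:
    """Pick the format with the best gain per unit cost, discounted by response odds."""
    def score(name: str) -> float:
        fmt = formats[name]
        return fmt.response_prob * expected_information_gain(prior, fmt) / fmt.cost
    return max(formats, key=score)


# Belief over two hypothetical penalty levels (no side effect, severe side effect).
prior = [0.5, 0.5]
formats = {
    # Cheap and likely to be answered, but each answer is only partly informative.
    "approval": FeedbackFormat(0.9, 1.0, [0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]]),
    # Fully informative, but costly and less likely to be answered.
    "correction": FeedbackFormat(0.6, 3.0, [0.5, 0.5], [[1.0, 0.0], [0.0, 1.0]]),
}
chosen = select_format(prior, formats)
print(chosen)
```

With these made-up numbers the cheaper `approval` format is chosen even though `correction` resolves the belief completely, which shows how cost and response probability can outweigh raw informativeness.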

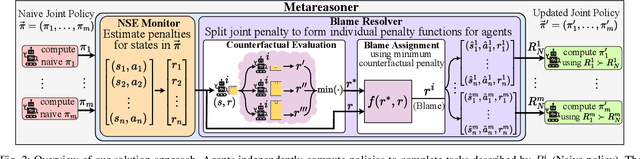

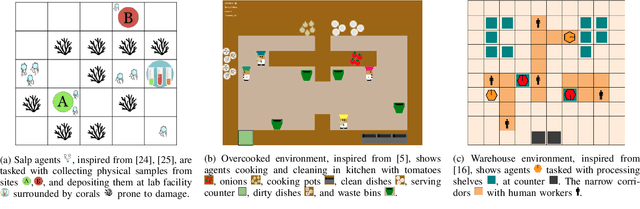

Mitigating Negative Side Effects in Multi-Agent Systems Using Blame Assignment

May 07, 2024

Abstract:When agents that are independently trained (or designed) to complete their individual tasks are deployed in a shared environment, their joint actions may produce negative side effects (NSEs). As their training does not account for the behavior of other agents or their joint action effects on the environment, the agents have no prior knowledge of the NSEs of their actions. We model the problem of mitigating NSEs in a cooperative multi-agent system as a Lexicographic Decentralized Markov Decision Process with two objectives. The agents must optimize the completion of their assigned tasks while mitigating NSEs. We assume independence of transitions and rewards with respect to the agents' tasks but the joint NSE penalty creates a form of dependence in this setting. To improve scalability, the joint NSE penalty is decomposed into individual penalties for each agent using credit assignment, which facilitates decentralized policy computation. Our results in simulation on three domains demonstrate the effectiveness and scalability of our approach in mitigating NSEs by updating the policies of a subset of agents in the system.

Mitigating Negative Side Effects via Environment Shaping

Feb 13, 2021

Abstract:Agents operating in unstructured environments often produce negative side effects (NSE), which are difficult to identify at design time. While the agent can learn to mitigate the side effects from human feedback, such feedback is often expensive and the rate of learning is sensitive to the agent's state representation. We examine how humans can assist an agent, beyond providing feedback, and exploit their broader scope of knowledge to mitigate the impacts of NSE. We formulate this problem as a human-agent team with decoupled objectives. The agent optimizes its assigned task, during which its actions may produce NSE. The human shapes the environment through minor reconfiguration actions so as to mitigate the impacts of the agent's side effects, without affecting the agent's ability to complete its assigned task. We present an algorithm to solve this problem and analyze its theoretical properties. Through experiments with human subjects, we assess the willingness of users to perform minor environment modifications to mitigate the impacts of NSE. Empirical evaluation of our approach shows that the proposed framework can successfully mitigate NSE, without affecting the agent's ability to complete its assigned task.

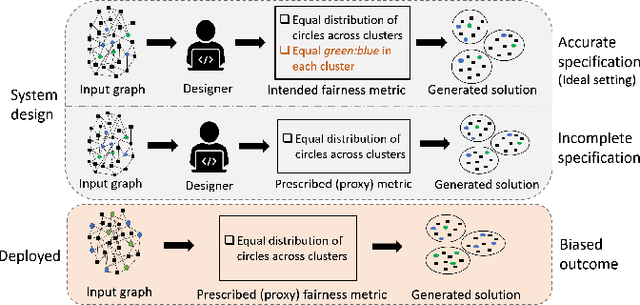

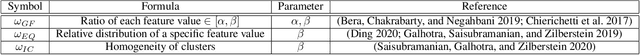

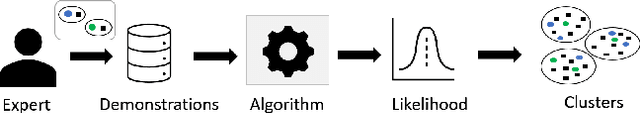

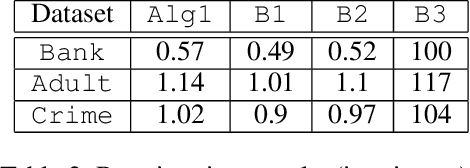

Learning to Generate Fair Clusters from Demonstrations

Feb 08, 2021

Abstract:Fair clustering is the process of grouping similar entities together, while satisfying a mathematically well-defined fairness metric as a constraint. Due to the practical challenges in precise model specification, the prescribed fairness constraints are often incomplete and act as proxies to the intended fairness requirement, leading to biased outcomes when the system is deployed. We examine how to identify the intended fairness constraint for a problem based on limited demonstrations from an expert. Each demonstration is a clustering over a subset of the data. We present an algorithm to identify the fairness metric from demonstrations and generate clusters using existing off-the-shelf clustering techniques, and analyze its theoretical properties. To extend our approach to novel fairness metrics for which clustering algorithms do not currently exist, we present a greedy method for clustering. Additionally, we investigate how to generate interpretable solutions using our approach. Empirical evaluation on three real-world datasets demonstrates the effectiveness of our approach in quickly identifying the underlying fairness and interpretability constraints, which are then used to generate fair and interpretable clusters.

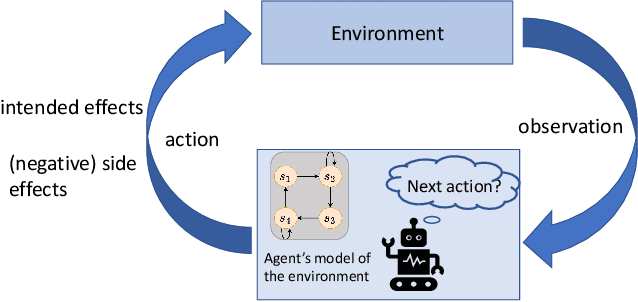

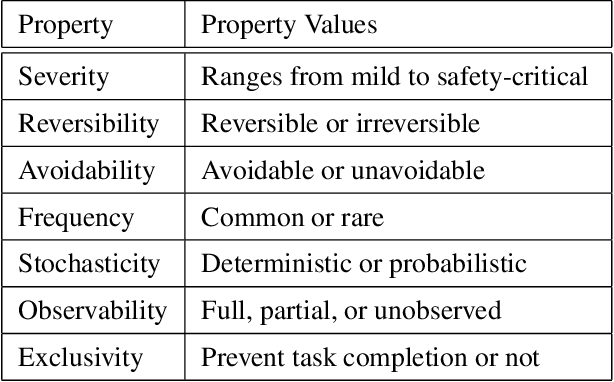

Avoiding Negative Side Effects due to Incomplete Knowledge of AI Systems

Aug 28, 2020

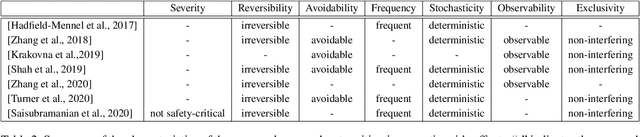

Abstract:Autonomous agents acting in the real world often operate based on models that ignore certain aspects of the environment. The incompleteness of any given model, whether handcrafted or machine acquired, is inevitable due to practical limitations of any modeling technique for complex real-world settings. Due to the limited fidelity of its model, an agent's actions may have unexpected, undesirable consequences during execution. Learning to recognize and avoid such negative side effects of the agent's actions is critical to improving the safety and reliability of autonomous systems. This emerging research topic is attracting growing attention due to the increased deployment of AI systems and their broad societal impacts. This article provides a comprehensive overview of different forms of negative side effects and the recent research efforts to address them. We identify key characteristics of negative side effects, highlight the challenges in avoiding negative side effects, and discuss recently developed approaches, contrasting their benefits and limitations. We conclude with a discussion of open questions and suggestions for future research directions.

Balancing the Tradeoff Between Clustering Value and Interpretability

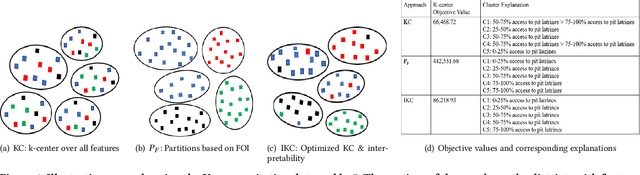

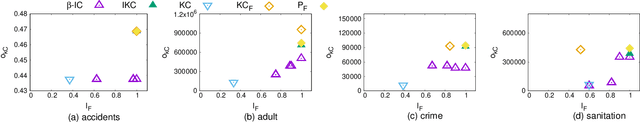

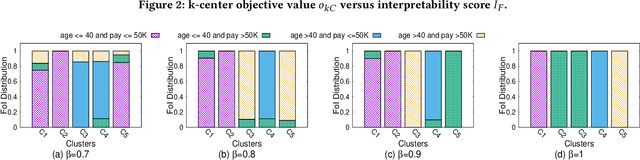

Jan 31, 2020

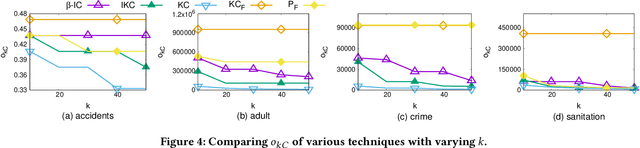

Abstract:Graph clustering groups entities -- the vertices of a graph -- based on their similarity, typically using a complex distance function over a large number of features. Successful integration of clustering approaches in automated decision-support systems hinges on the interpretability of the resulting clusters. This paper addresses the problem of generating interpretable clusters, given features of interest that signify interpretability to an end-user, by optimizing interpretability in addition to common clustering objectives. We propose a $\beta$-interpretable clustering algorithm that ensures that at least a $\beta$ fraction of nodes in each cluster share the same feature value. The tunable parameter $\beta$ is user-specified. We also present a more efficient algorithm for scenarios with $\beta = 1$ and analyze the theoretical guarantees of the two algorithms. Finally, we empirically demonstrate the benefits of our approaches in generating interpretable clusters using four real-world datasets. The interpretability of the clusters is complemented by generating simple explanations denoting the feature values of the nodes in the clusters, using frequent pattern mining.
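
The $\beta$-interpretability condition stated above can be checked directly for a given clustering. The sketch below only verifies the condition and does not implement the paper's clustering algorithms; the function names and the example feature values are assumptions for illustration.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def interpretability_score(feature_values: Sequence[Hashable]) -> float:
    """Fraction of nodes in one cluster that share the most common feature value."""
    if not feature_values:
        raise ValueError("cluster must contain at least one node")
    most_common_count = Counter(feature_values).most_common(1)[0][1]
    return most_common_count / len(feature_values)


def is_beta_interpretable(clusters: List[Sequence[Hashable]], beta: float) -> bool:
    """True if every cluster has at least a beta fraction of nodes with one value."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    return all(interpretability_score(cluster) >= beta for cluster in clusters)


# Each cluster is given by the feature-of-interest value of its nodes (made-up data).
clusters = [
    ["red", "red", "blue"],   # 2 of 3 nodes share "red"
    ["green", "green"],       # all nodes share "green"
]
scores = [interpretability_score(c) for c in clusters]
print(scores)
print(is_beta_interpretable(clusters, beta=0.6))
print(is_beta_interpretable(clusters, beta=1.0))
```

The clustering in this example satisfies the condition for $\beta = 0.6$ but not for $\beta = 1$, since the first cluster mixes two feature values.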
