Sanaz Mohammadjafari

Improved $\alpha$-GAN architecture for generating 3D connected volumes with an application to radiosurgery treatment planning

Jul 13, 2022

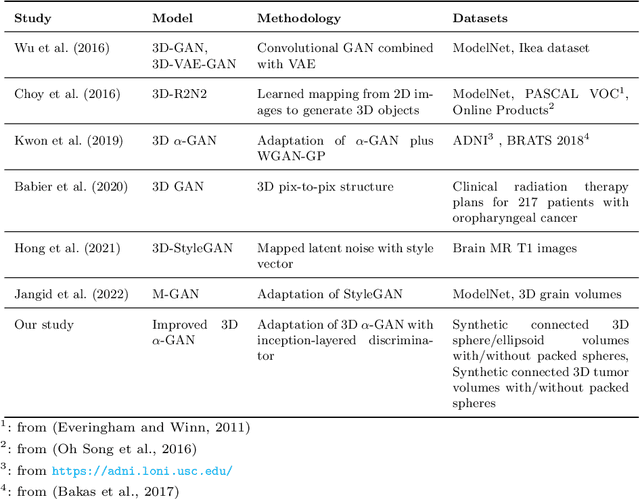

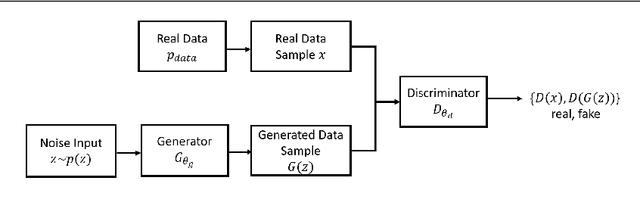

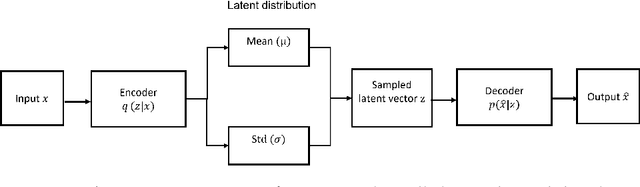

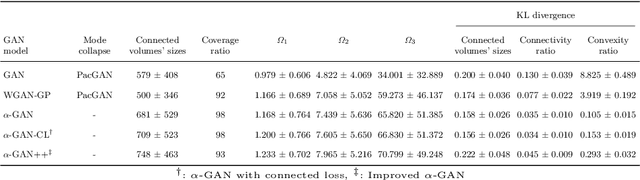

Abstract: Generative Adversarial Networks (GANs) have gained significant attention in several computer vision tasks for generating high-quality synthetic data. Various medical applications, including diagnostic imaging and radiation therapy, can benefit greatly from synthetic data generation because data in this domain is scarce. However, medical image data is typically stored as 3D volumes, and generative models suffer from the curse of dimensionality when generating such data. In this paper, we investigate the potential of GANs for generating connected 3D volumes. We propose an improved version of 3D $\alpha$-GAN that incorporates various architectural enhancements. On a synthetic dataset of connected 3D spheres and ellipsoids, our model generates fully connected 3D shapes with geometrical characteristics similar to those of the training data. We also show that our 3D GAN model can successfully generate high-quality 3D tumor volumes and associated treatment specifications (e.g., isocenter locations). Moment invariants similar to those of the training data, together with fully connected 3D shapes, confirm that the improved 3D $\alpha$-GAN implicitly learns the training data distribution and generates realistic-looking samples. This capability makes the improved 3D $\alpha$-GAN a valuable source of synthetic medical image data that can help future research in this domain.
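The "fully connected" property mentioned in the abstract can be checked on a binary voxel grid by counting connected components. The following is a minimal sketch, not the paper's evaluation code: the function names, the nested-list volume representation, and the choice of 6-connectivity (face neighbors only) are all assumptions made for illustration.

```python
from collections import deque

# Face neighbors only (6-connectivity); this choice is an assumption,
# the abstract does not say which connectivity the authors use.
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def count_components(volume):
    """Count 6-connected components of nonzero voxels in a nested-list 3D volume."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    seen = set()
    components = 0
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not volume[z][y][x] or (z, y, x) in seen:
                    continue
                # New unvisited foreground voxel: flood-fill its component.
                components += 1
                seen.add((z, y, x))
                queue = deque([(z, y, x)])
                while queue:
                    cz, cy, cx = queue.popleft()
                    for dz, dy, dx in NEIGHBORS:
                        n = (cz + dz, cy + dy, cx + dx)
                        inside = 0 <= n[0] < nz and 0 <= n[1] < ny and 0 <= n[2] < nx
                        if inside and volume[n[0]][n[1]][n[2]] and n not in seen:
                            seen.add(n)
                            queue.append(n)
    return components


def is_fully_connected(volume):
    """A volume counts as fully connected if it has exactly one component."""
    return count_components(volume) == 1


def make_sphere(size, radius):
    """Hypothetical helper: binary sphere centered in a size^3 grid."""
    c = (size - 1) / 2
    return [[[1 if (z - c) ** 2 + (y - c) ** 2 + (x - c) ** 2 <= radius ** 2 else 0
              for x in range(size)] for y in range(size)] for z in range(size)]
```

For example, `is_fully_connected(make_sphere(9, 3))` holds for a clean sphere, while setting one isolated corner voxel to 1 produces a second component and the check fails.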

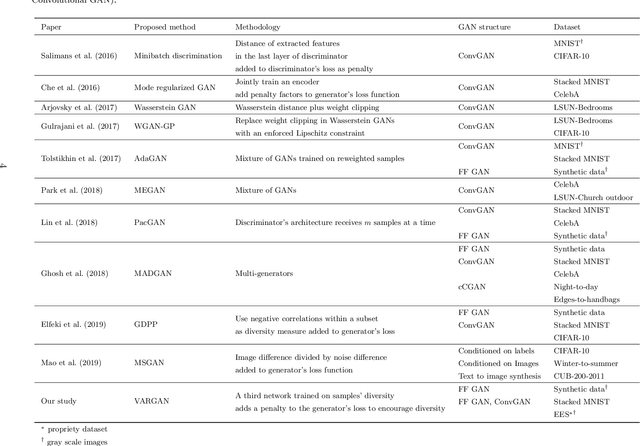

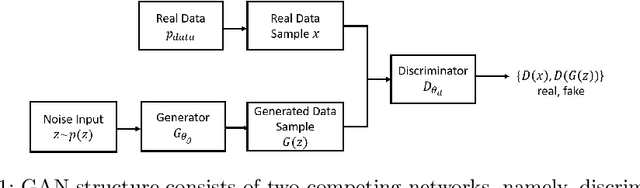

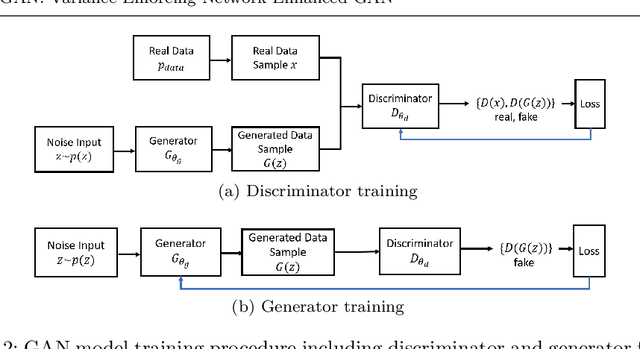

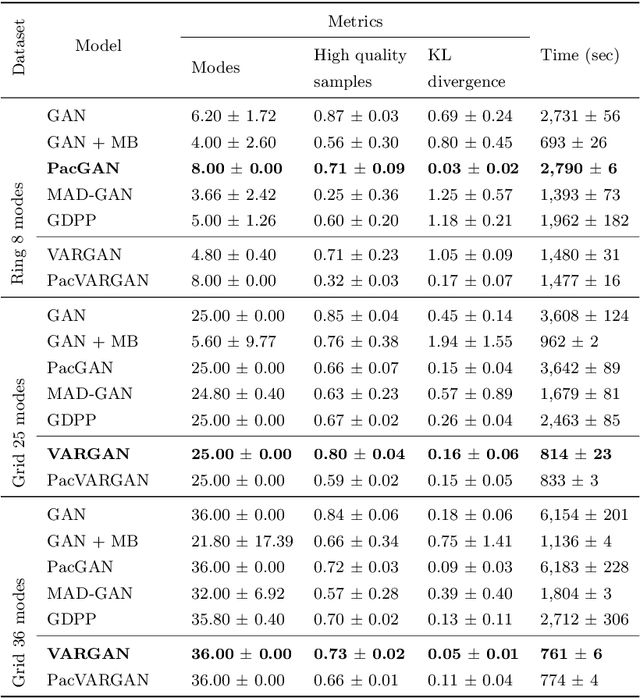

VARGAN: Variance Enforcing Network Enhanced GAN

Sep 05, 2021

Abstract: Generative adversarial networks (GANs) are among the most widely used generative models. GANs can learn complex multi-modal distributions and generate realistic samples. Despite their major success in generating synthetic data, GANs can suffer from an unstable training process and mode collapse. In this paper, we introduce a new GAN architecture called variance enforcing GAN (VARGAN), which incorporates a third network to introduce diversity in the generated samples. The third network measures the diversity of the generated samples, and this measure is used to penalize the generator's loss for low-diversity samples. The network is trained on the available training data and on undesired distributions with limited modality. On a set of synthetic and real-world image data, VARGAN generates a more diverse set of samples than recent state-of-the-art models. High diversity, low computational complexity, and fast convergence make VARGAN a promising model for alleviating mode collapse.
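The idea of penalizing the generator for low-diversity batches can be illustrated with a toy calculation. The abstract does not give the loss formula, so everything below is an assumption: the hand-written `diversity_score` stands in for the learned third network, and `generator_loss`, its additive form, and the `weight` parameter are hypothetical names chosen for this sketch.

```python
import math


def diversity_score(samples):
    """Stand-in for the learned diversity network (an assumption, not VARGAN's network).

    Returns the mean pairwise Euclidean distance squashed into [0, 1).
    """
    n = len(samples)
    if n < 2:
        return 0.0
    total = 0.0
    pairs = 0
    for i in range(n):
        for j in range(i + 1, n):
            total += math.dist(samples[i], samples[j])
            pairs += 1
    mean = total / pairs
    return mean / (1.0 + mean)


def generator_loss(adversarial_loss, samples, weight=1.0):
    """Hypothetical combined loss: adversarial term plus a low-diversity penalty."""
    return adversarial_loss + weight * (1.0 - diversity_score(samples))


# A collapsed batch (all samples identical) receives the full penalty,
# while a spread-out batch is penalized less.
collapsed = [[0.0, 0.0]] * 4
diverse = [[0.0, 0.0], [3.0, 4.0], [6.0, 8.0], [9.0, 12.0]]
loss_collapsed = generator_loss(0.5, collapsed)
loss_diverse = generator_loss(0.5, diverse)
```

With the same adversarial term, the collapsed batch ends up with the larger total loss, which is the qualitative behavior the abstract describes.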

Deep learning approaches for fast radio signal prediction

Jul 14, 2020

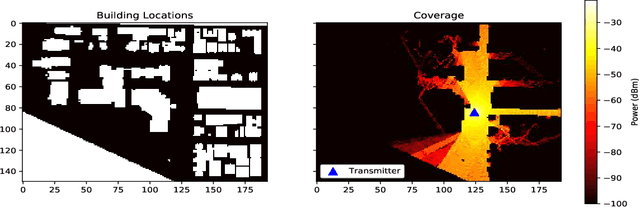

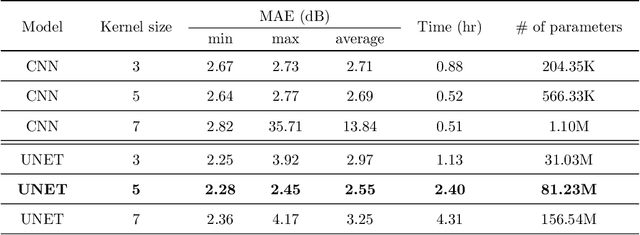

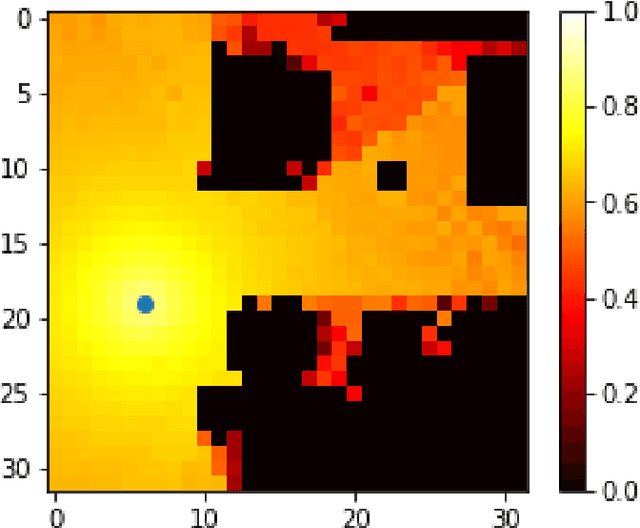

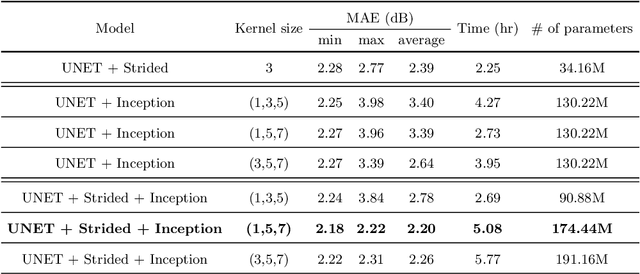

Abstract: The aim of this work is to predict power coverage in a dense urban environment given building and transmitter locations. Conventionally, ray tracing is regarded as the most accurate method for predicting energy distribution patterns in an area in the presence of diverse radio propagation phenomena. However, ray-tracing simulations are time-consuming and require extensive computational resources. We propose deep neural network models that learn from ray-tracing results and predict the power coverage dynamically from building and transmitter properties. The proposed UNET model with strided convolutions and inception modules provides highly accurate results that are close to the ray-tracing output on 32x32 frames. This model will allow practitioners to search for the best transmitter locations effectively and reduce the design time significantly.
