Samuel Bosch

Neural Networks for Programming Quantum Annealers

Aug 13, 2023

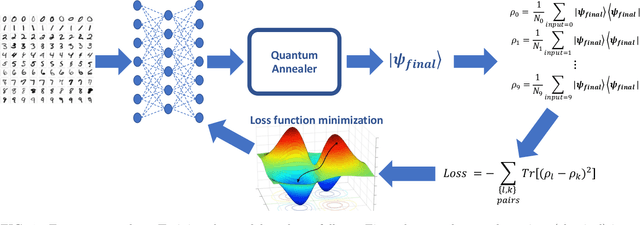

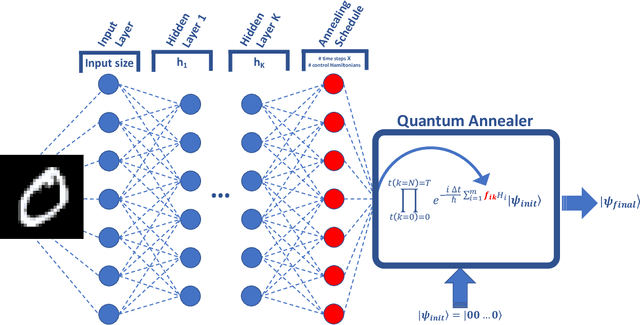

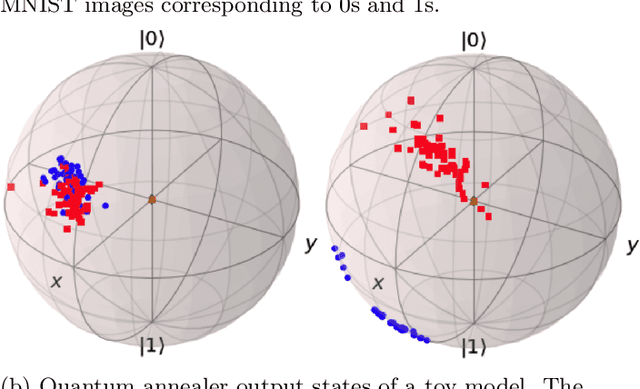

Abstract: Quantum machine learning has the potential to enable advances in artificial intelligence, such as solving problems intractable on classical computers. Some fundamental ideas behind quantum machine learning are similar to kernel methods in classical machine learning. Both process information by mapping it into high-dimensional vector spaces without explicitly calculating their numerical values. We explore a setup for performing classification on labeled classical datasets, consisting of a classical neural network connected to a quantum annealer. The neural network programs the quantum annealer's controls and thereby maps the annealer's initial states into new states in the Hilbert space. The neural network's parameters are optimized to maximize the distance between quantum states corresponding to inputs from different classes and to minimize the distance between quantum states corresponding to the same class. Recent literature showed that at least some of the "learning" is due to the quantum annealer, by connecting a small linear network to a quantum annealer and using it to learn small, linearly inseparable datasets. In this study, we consider a similar but distinct case, where a fully fledged classical neural network is connected to a small quantum annealer. In such a setting, the classical neural network already has built-in nonlinearity and learning power and can handle the classification problem alone, so we want to see whether an additional quantum layer could boost its performance. We simulate this system to learn several common datasets, including those for image and sound recognition. We conclude that adding a small quantum annealer does not provide a significant benefit over just using a regular (nonlinear) classical neural network.
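The training objective described in the abstract (pull same-class states together, push different-class states apart) can be illustrated with a small NumPy sketch. The abstract does not say which distance measure is used, so the fidelity-based loss below is an assumption, and the names `fidelity_matrix` and `separation_loss` are hypothetical. The sketch takes already-computed state vectors as input and does not simulate the annealer itself.

```python
import numpy as np

def fidelity_matrix(states):
    """Pairwise fidelities |<s_i|s_j>|^2 for normalized state vectors.

    states: (n, d) complex array, one state vector per row.
    """
    overlaps = states.conj() @ states.T
    return np.abs(overlaps) ** 2

def separation_loss(states, labels):
    """Assumed loss (not taken from the paper): low when same-class states
    overlap strongly and different-class states are close to orthogonal."""
    fid = fidelity_matrix(states)
    labels = np.asarray(labels)
    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(len(labels), dtype=bool)
    same_pairs = same & off_diag   # same class, excluding self-pairs
    diff_pairs = ~same             # different classes
    within = np.mean(1.0 - fid[same_pairs]) if same_pairs.any() else 0.0
    between = np.mean(fid[diff_pairs]) if diff_pairs.any() else 0.0
    return within + between

labels = [0, 0, 1, 1]
# Ideal case: each class is mapped to its own orthogonal basis state.
separated = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=complex)
# Worst case: every input is mapped to the same state.
collapsed = np.array([[1, 0]] * 4, dtype=complex)

loss_separated = separation_loss(separated, labels)
loss_collapsed = separation_loss(collapsed, labels)
```

In a full setup, the state vectors would come from simulating the annealer under the controls produced by the neural network, and the loss would be minimized with respect to the network's parameters.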

QubitHD: A Stochastic Acceleration Method for HD Computing-Based Machine Learning

Dec 02, 2019

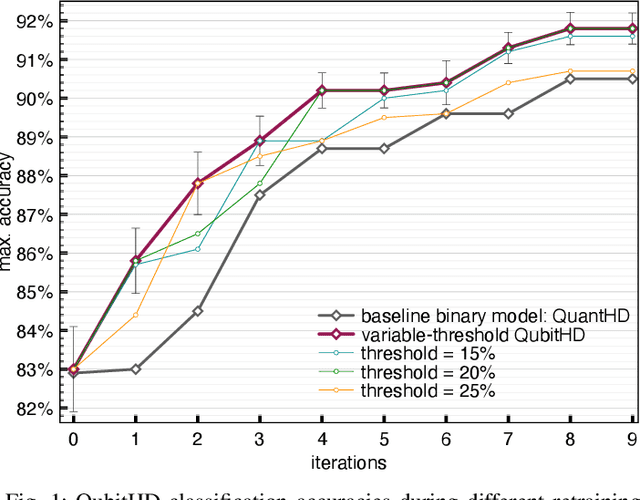

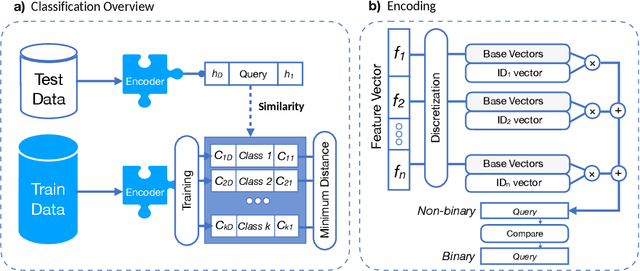

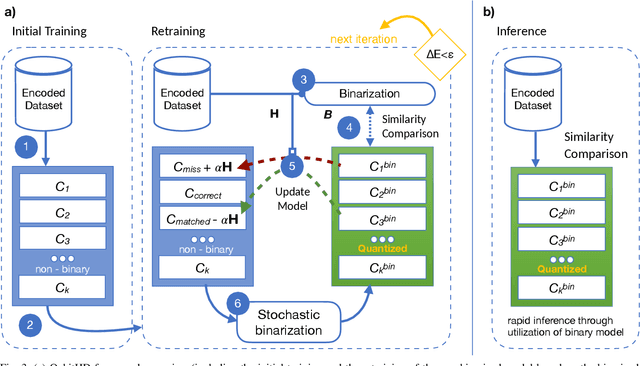

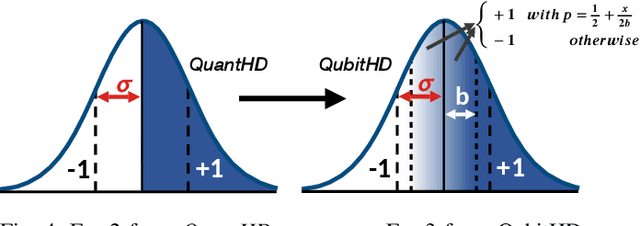

Abstract: Machine learning algorithms based on brain-inspired hyperdimensional (HD) computing imitate cognition by exploiting statistical properties of high-dimensional vector spaces. HD computing is a promising solution for achieving high energy efficiency in different machine learning tasks, such as classification, semi-supervised learning, and clustering. A weakness of existing HD computing-based ML algorithms is that they must be binarized to achieve very high energy efficiency. At the same time, binarized models reach lower classification accuracies. To address this trade-off between energy efficiency and classification accuracy, we propose the QubitHD algorithm. It stochastically binarizes HD-based algorithms while maintaining classification accuracies comparable to those of their non-binarized counterparts. The FPGA implementation of QubitHD provides a 65% improvement in energy efficiency and a 95% improvement in training time compared to state-of-the-art HD-based ML algorithms. It also outperforms state-of-the-art low-cost classifiers (such as Binarized Neural Networks) in speed and energy efficiency by an order of magnitude during training and inference.
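To make the idea of stochastic binarization concrete, here is a minimal NumPy sketch. The abstract does not give the exact rule QubitHD uses, so the probability formula below is an assumption chosen for illustration, and `stochastic_binarize` is a hypothetical name. Each component of a real-valued hypervector is rounded to +1 or -1 at random, with a bias that follows the component's sign and magnitude.

```python
import numpy as np

def stochastic_binarize(hv, rng):
    """Map a real-valued hypervector to {-1, +1} at random.

    Assumed rule (not taken from the paper): component i becomes +1 with
    probability (1 + hv[i] / max|hv|) / 2, so the expected value of the
    binarized vector is proportional to the original vector.
    """
    scale = np.max(np.abs(hv))
    if scale == 0:
        # All-zero input: every component is a fair coin flip.
        p_plus = np.full(hv.shape, 0.5)
    else:
        p_plus = 0.5 * (1.0 + hv / scale)
    return np.where(rng.random(hv.shape) < p_plus, 1, -1)

rng = np.random.default_rng(0)
hv = rng.normal(size=10_000)            # stand-in for a trained class hypervector
binary = stochastic_binarize(hv, rng)

# Cosine similarity between the binarized and original vectors.
cosine = binary @ hv / (np.linalg.norm(binary) * np.linalg.norm(hv))
```

Because the rounding is unbiased in expectation, the binarized vector stays positively correlated with the original, which is the property that lets a binarized model approximate its non-binarized counterpart.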
