Sampsa Vanhatalo

Modeling 3D Infant Kinetics Using Adaptive Graph Convolutional Networks

Feb 22, 2024

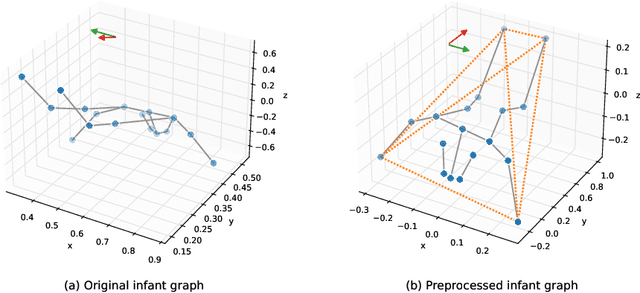

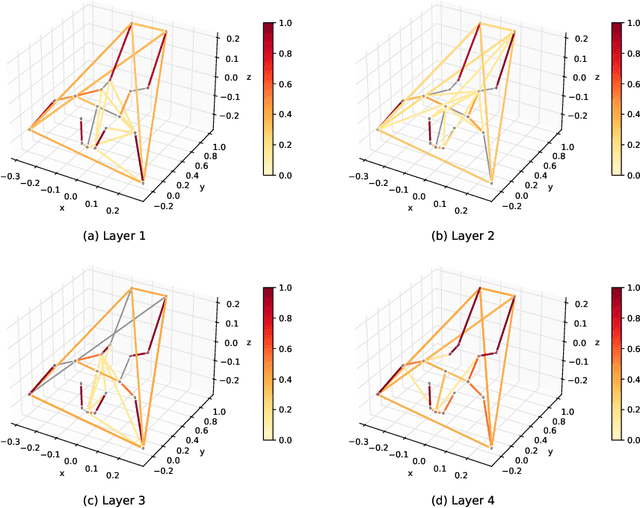

Abstract: Reliable methods for the neurodevelopmental assessment of infants are essential for early detection of medical issues that may need prompt interventions. Spontaneous motor activity, or 'kinetics', has been shown to provide a powerful surrogate measure of upcoming neurodevelopment. However, its assessment is by and large qualitative and subjective, focusing on visually identified, age-specific gestures. Here, we follow an alternative approach, predicting infants' neurodevelopmental maturation based on data-driven evaluation of individual motor patterns. We utilize 3D video recordings of infants processed with pose estimation to extract spatio-temporal series of anatomical landmarks, and apply adaptive graph convolutional networks to predict the actual age. We show that our data-driven approach achieves improvement over traditional machine learning baselines based on manually engineered features.

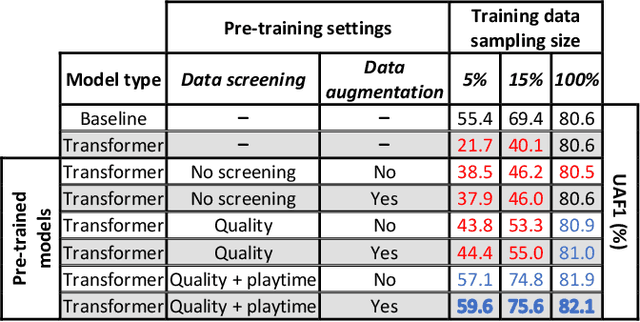

Evaluation of self-supervised pre-training for automatic infant movement classification using wearable movement sensors

May 16, 2023

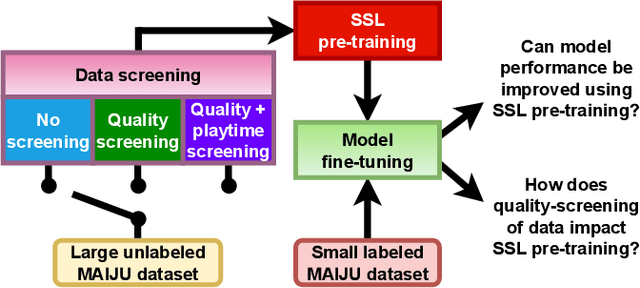

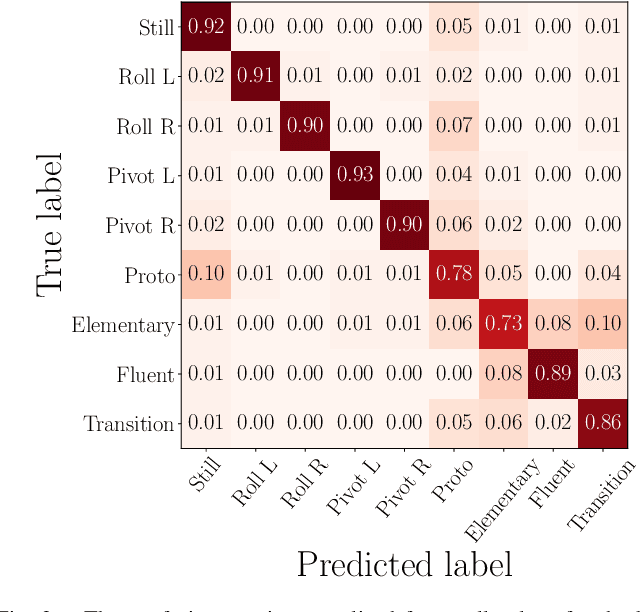

Abstract: The recently developed infant wearable MAIJU provides a means to automatically evaluate infants' motor performance in an objective and scalable manner in out-of-hospital settings. This information could be used for developmental research and to support clinical decision-making, such as detecting developmental problems and guiding their therapeutic interventions. MAIJU-based analyses rely fully on the classification of infants' posture and movement; it is hence essential to study ways to increase the accuracy of such classifications, aiming to increase the reliability and robustness of the automated analysis. Here, we investigated how self-supervised pre-training improves the performance of the classifiers used for analyzing MAIJU recordings, and we studied whether the performance of the classifier models is affected by context-selective quality screening of pre-training data to exclude periods of little infant movement or with missing sensors. Our experiments show that i) pre-training the classifier with unlabeled data leads to a robust accuracy increase of subsequent classification models, and ii) selecting context-relevant pre-training data leads to substantial further improvements in the classifier performance.

Development of Sleep State Trend, a bedside measure of neonatal sleep state fluctuations based on single EEG channels

Aug 25, 2022

Abstract: Objective: To develop and validate an automated method for bedside monitoring of sleep state fluctuations in neonatal intensive care units. Methods: A deep learning-based algorithm was designed and trained using 53 EEG recordings from long-term (a)EEG monitoring in 30 near-term neonates. The results were validated using an external dataset from 30 polysomnography recordings. In addition to training and validating a single EEG channel quiet sleep detector, we constructed Sleep State Trend (SST), a bedside-ready means for visualizing classifier outputs. Results: The accuracy of quiet sleep detection in the training data was 90%, and the accuracy was comparable (85-86%) in all bipolar derivations available from the 4-electrode recordings. The algorithm generalized well to an external dataset, showing 81% overall accuracy despite different signal derivations. SST allowed an intuitive, clear visualization of the classifier output. Conclusions: Fluctuations in sleep states can be detected at high fidelity from a single EEG channel, and the results can be visualized as a transparent and intuitive trend in the bedside monitors. Significance: The Sleep State Trend (SST) may provide caregivers a real-time view of sleep state fluctuations and their cyclicity.

Ensemble learning using individual neonatal data for seizure detection

Apr 11, 2022

Abstract: Sharing medical data between institutions is difficult in practice due to data protection laws and official procedures within institutions. Therefore, most existing algorithms are trained on relatively small electroencephalogram (EEG) data sets, which is likely to be detrimental to prediction accuracy. In this work, we simulate a case in which the data cannot be shared by splitting a publicly available data set into disjoint sets representing data in individual institutions. We propose to train a (local) detector in each institution and aggregate their individual predictions into one final prediction. Four aggregation schemes are compared, namely, the majority vote, the mean, the weighted mean and the Dawid-Skene method. The approach allows different detector architectures amongst the institutions. The method was validated on an independent data set using only a subset of EEG channels. The ensemble reaches accuracy comparable to a single detector trained on all the data when a sufficient amount of data is available in each institution. The weighted mean aggregation scheme showed the best overall performance; it was only marginally outperformed by the Dawid-Skene method when the local detectors approach the performance of a single detector trained on all available data.
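
Three of the aggregation schemes named in the abstract (majority vote, mean, weighted mean) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the 0.5 decision threshold, and the example weights are assumptions made for illustration, and the abstract does not say how the weights are chosen. The Dawid-Skene method is omitted because it needs an iterative estimation step.

```python
def majority_vote(probs, threshold=0.5):
    """Return 1 if a strict majority of local detectors vote 'seizure'.

    probs: one seizure probability per institution's detector.
    threshold: assumed decision threshold for turning a probability into a vote.
    """
    votes = [1 if p >= threshold else 0 for p in probs]
    return 1 if 2 * sum(votes) > len(votes) else 0


def mean_aggregate(probs):
    """Unweighted average of the local detectors' probabilities."""
    return sum(probs) / len(probs)


def weighted_mean_aggregate(probs, weights):
    """Average of the probabilities, weighted per detector.

    weights: hypothetical non-negative weights, e.g. reflecting how much
    each local detector is trusted.
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(p * w for p, w in zip(probs, weights)) / total


# Three hypothetical local detectors scoring the same EEG segment.
local_probs = [0.9, 0.4, 0.7]
vote = majority_vote(local_probs)
avg = mean_aggregate(local_probs)
weighted = weighted_mean_aggregate(local_probs, [1.0, 1.0, 2.0])
```

Because only per-segment predictions are combined, each institution's detector can use a different architecture, which matches the flexibility the abstract describes.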

Validating an SVM-based neonatal seizure detection algorithm for generalizability, non-inferiority and clinical efficacy

Feb 24, 2022

Abstract: Neonatal seizure detection algorithms (SDA) are approaching the benchmark of human expert annotation. Measures of algorithm generalizability and non-inferiority as well as measures of clinical efficacy are needed to assess the full scope of neonatal SDA performance. We validated our neonatal SDA on an independent data set of 28 neonates. Generalizability was tested by comparing the algorithm's performance on the original training set (cross-validation) to its performance on the validation set. Non-inferiority was tested by assessing inter-observer agreement between combinations of SDA and two human expert annotations. Clinical efficacy was tested by comparing how the SDA and human experts quantified seizure burden and identified clinically significant periods of seizure activity in the EEG. Algorithm performance was consistent between training and validation sets with no significant worsening in AUC (p>0.05, n=28). SDA output was inferior to the annotation of the human expert; however, re-training with an increased diversity of data resulted in non-inferior performance ($\Delta\kappa$=0.077, 95% CI: -0.002-0.232, n=18). The SDA assessment of seizure burden had an accuracy ranging from 89-93%, and 87% for identifying periods of clinical interest. The proposed SDA is approaching human equivalence and provides a clinically relevant interpretation of the EEG.
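
The inter-observer agreement behind the reported $\Delta\kappa$ can be illustrated with a small sketch. It assumes Cohen's kappa on per-epoch binary labels; the abstract does not state which kappa statistic or epoch definition was used, and the function name and example labels are hypothetical.

```python
def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two equally long label sequences.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each annotator's
    label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    categories = set(labels_a) | set(labels_b)
    expected = sum(
        (labels_a.count(c) / n) * (labels_b.count(c) / n) for c in categories
    )
    if expected == 1.0:
        # Both annotators used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical per-epoch annotations (1 = seizure, 0 = no seizure).
expert = [1, 1, 0, 0]
algorithm = [1, 0, 0, 0]
kappa = cohen_kappa(expert, algorithm)
```

A difference in kappa, such as the one reported above, would then compare the agreement of an algorithm-expert pair against that of an expert-expert pair.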

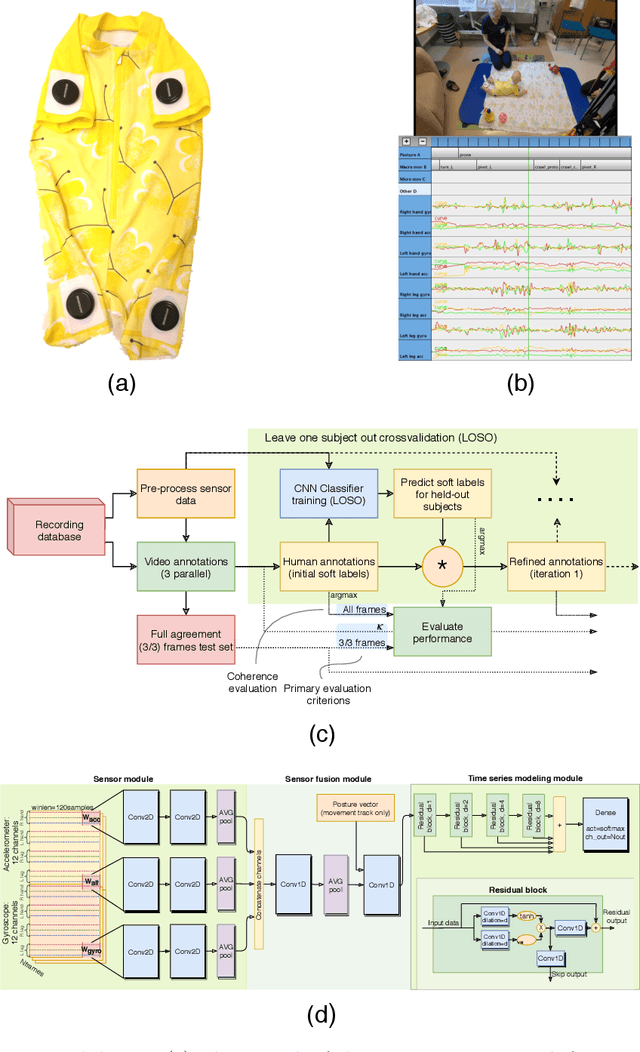

Comparison of end-to-end neural network architectures and data augmentation methods for automatic infant motility assessment using wearable sensors

Jul 02, 2021

Abstract: Infant motility assessment using intelligent wearables is a promising new approach for assessment of infant neurophysiological development, in which efficient signal analysis plays a central role. This study investigates the use of different end-to-end neural network architectures for processing infant motility data from wearable sensors. We focus on the performance and computational burden of alternative sensor encoder and time-series modelling modules and their combinations. In addition, we explore the benefits of data augmentation methods in ideal and non-ideal recording conditions. The experiments are conducted using a data set of multi-sensor movement recordings from 7-month-old infants, as captured by a recently proposed smart jumpsuit for infant motility assessment. Our results indicate that the choice of the encoder module has a major impact on classifier performance. For sensor encoders, the best performance was obtained with parallel 2-dimensional convolutions for intra-sensor channel fusion with shared weights for all sensors. The results also indicate that a relatively compact feature representation is obtainable for within-sensor feature extraction without a drastic loss to classifier performance. Comparison of time-series models revealed that feed-forward dilated convolutions with residual and skip connections outperformed all RNN-based models in performance, training time, and training stability. The experiments also indicate that data augmentation improves model robustness in simulated packet loss or sensor dropout scenarios. In particular, signal- and sensor-dropout-based augmentation strategies provided considerable boosts to performance without negatively affecting the baseline performance. Overall, the results provide tangible suggestions on how to optimize end-to-end neural network training for multi-channel movement sensor data.
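
As an illustration of the sensor-dropout augmentation mentioned in the abstract, the following is a rough sketch rather than the authors' method. It assumes a training window stored as nested lists (sensors, then frames, then channel values), an independent drop probability per sensor, zero-filling of dropped sensors, and a rule that at least one sensor always survives. All of these choices, and the function name, are assumptions.

```python
import random


def sensor_dropout(window, drop_prob=0.3, rng=None):
    """Return a copy of `window` with randomly chosen sensors zeroed out.

    window: list of sensors; each sensor is a list of frames; each frame
    is a list of channel values.
    drop_prob: assumed per-sensor probability of simulating a missing sensor.
    rng: optional random.Random instance for reproducibility.
    """
    if rng is None:
        rng = random.Random()
    n_sensors = len(window)
    dropped = [rng.random() < drop_prob for _ in range(n_sensors)]
    # Assumption: never drop every sensor, so some signal always remains.
    if n_sensors > 0 and all(dropped):
        dropped[rng.randrange(n_sensors)] = False
    return [
        [[0.0] * len(frame) if is_dropped else list(frame) for frame in sensor]
        for sensor, is_dropped in zip(window, dropped)
    ]


# Four hypothetical sensors, two frames each, two channels per frame.
example_window = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(4)]
augmented = sensor_dropout(example_window, drop_prob=0.5, rng=random.Random(0))
```

Applying such a transform only during training exposes the classifier to missing-sensor conditions while leaving the evaluation data untouched.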

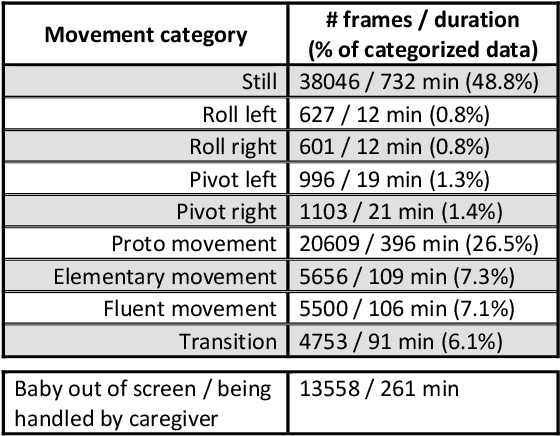

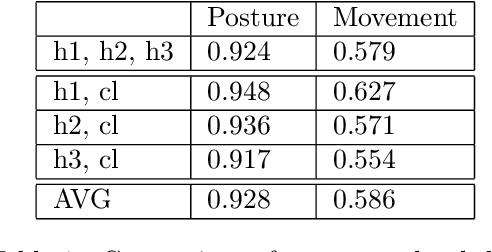

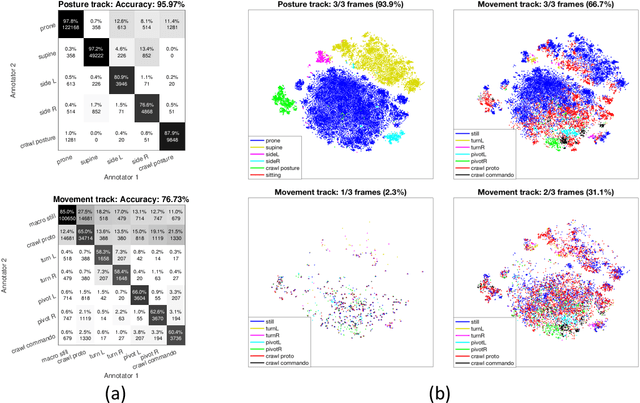

Automatic Posture and Movement Tracking of Infants with Wearable Movement Sensors

Sep 21, 2019

Abstract: Infants' spontaneous movements mirror the integrity of brain networks, and thus also predict the future development of higher cognitive functions. Early recognition of infants with compromised motor development holds promise for guiding early therapies to improve lifelong neurocognitive outcomes. It has been challenging, however, to assess motor performance in ways that are objective and quantitative. Novel wearable technology has shown promise for offering efficient, scalable and automated methods in movement assessment. Here, we describe the development of an infant wearable, a multi-sensor smart jumpsuit that allows mobile data collection during independent movements. A deep learning algorithm, based on convolutional neural networks (CNNs), was then trained using multiple human annotations that incorporate the substantial inherent ambiguity in movement classifications. We also quantify the substantial ambiguity of a human observer, allowing its transfer to improving the automated classifier. Comparison of different sensor configurations and classifier designs shows that four-limb recording and an end-to-end CNN classifier architecture allow the best movement classification. Our results show that quantitative tracking of independent movement activities is possible with human-equivalent accuracy, i.e. it meets the human inter-rater agreement levels in infant posture and movement classification.
