Samir Al-Stouhi

Conf-Net: Predicting Depth Completion Error-Map For High-Confidence Dense 3D Point-Cloud

Jul 29, 2019

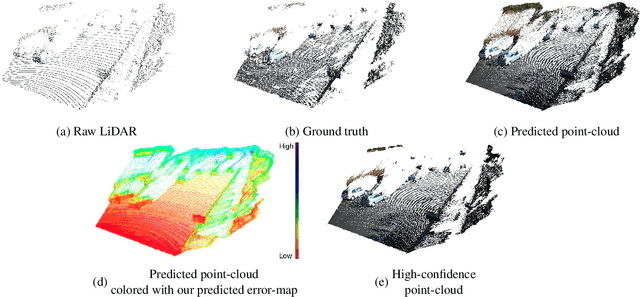

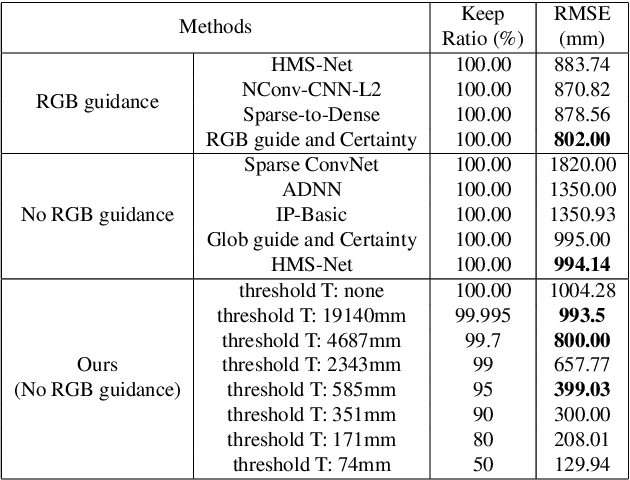

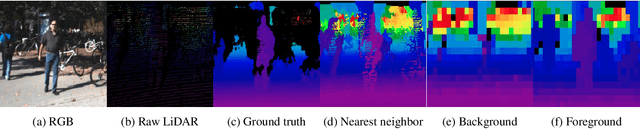

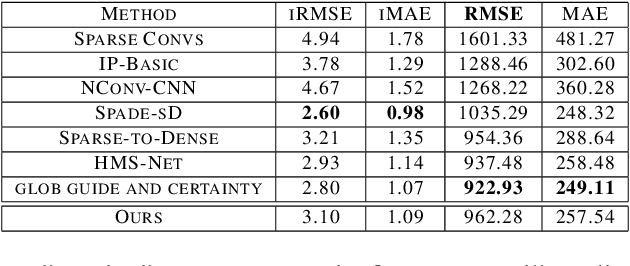

Abstract: This work proposes a new method for depth completion of sparse LiDAR data using a convolutional neural network that learns to generate almost full 3D point-clouds with significantly lower root mean squared error (RMSE) than state-of-the-art methods. An almost dense, high-confidence, low-variance point-cloud is more valuable for safety-critical applications, specifically real-world autonomous driving, than a dense point-cloud with a high error rate and high variance. We examine the error of standard depth completion methods and demonstrate that it exhibits a long-tail distribution, which can be significantly reduced if a small portion of the generated depth points can be identified and removed. We add a purging step to our neural network and present a novel end-to-end algorithm that learns to predict a high-quality error-map of its own prediction. Using our predicted error-map, we demonstrate that by up-filling a LiDAR point-cloud from 18,000 points to 285,000 points, versus 300,000 points for full depth, we can reduce the RMSE from 1004 to 399. This error is approximately 60% less than the state-of-the-art and 50% less than the state-of-the-art with RGB guidance. We only need to remove 0.3% of the predicted points to get results comparable to the state-of-the-art with RGB guidance. Our post-processing step takes the output of a standard encoder-decoder network to generate a high-resolution 360-degree dense point-cloud. In addition to analyzing our results on the KITTI depth completion dataset, we demonstrate the real-world performance of our algorithm using data gathered with a Velodyne VLP-32C LiDAR mounted on our vehicle, verifying its effectiveness and real-time performance for autonomous driving. Code and demo videos are available at http://github.com/hekmak/Conf-net.
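
The purging step described in this abstract can be illustrated with a minimal sketch. This is not the Conf-Net implementation: it assumes a dense depth prediction and a per-pixel predicted error-map are already available as NumPy arrays, and the function names `purge_by_predicted_error` and `rmse` are hypothetical. The 0.95 keep fraction in the demo mirrors the abstract's 285,000 of 300,000 points; the synthetic data, and the assumption that the error-map closely tracks the true error, are purely illustrative.

```python
import numpy as np


def purge_by_predicted_error(predicted_error, keep_fraction):
    """Return a boolean mask keeping the pixels with the lowest predicted error.

    predicted_error: array of per-pixel predicted error (any shape).
    keep_fraction:   fraction of pixels to keep, in (0, 1].
    """
    flat_err = predicted_error.ravel()
    n_keep = int(round(keep_fraction * flat_err.size))
    if not 1 <= n_keep <= flat_err.size:
        raise ValueError("keep_fraction must select at least one pixel")
    # Indices of the n_keep smallest predicted errors (order within is arbitrary).
    keep_idx = np.argpartition(flat_err, n_keep - 1)[:n_keep]
    mask = np.zeros(flat_err.size, dtype=bool)
    mask[keep_idx] = True
    return mask.reshape(predicted_error.shape)


def rmse(pred, target, mask):
    """Root mean squared error over the pixels selected by mask."""
    diff = pred[mask] - target[mask]
    return float(np.sqrt(np.mean(diff ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shape = (100, 300)

    # Synthetic ground truth and a prediction with a long-tailed error:
    # small noise everywhere, plus large errors on a small share of pixels.
    target = rng.uniform(5.0, 80.0, size=shape)
    noise = rng.normal(0.0, 0.2, size=shape)
    outliers = rng.random(shape) < 0.03
    noise[outliers] += rng.normal(0.0, 8.0, size=int(outliers.sum()))
    pred = target + noise

    # Assumption: the error-map is a slightly noisy estimate of the true error.
    predicted_error = np.abs(noise) + rng.normal(0.0, 0.05, size=shape)

    full = np.ones(shape, dtype=bool)
    kept = purge_by_predicted_error(predicted_error, keep_fraction=0.95)
    print("RMSE, all points:   ", rmse(pred, target, full))
    print("RMSE, after purging:", rmse(pred, target, kept))
```

Selecting by rank rather than by a fixed error threshold keeps the output density constant, which makes it easy to compare RMSE at a chosen retention level.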

Towards Full Automated Drive in Urban Environments: A Demonstration in GoMentum Station, California

May 02, 2017

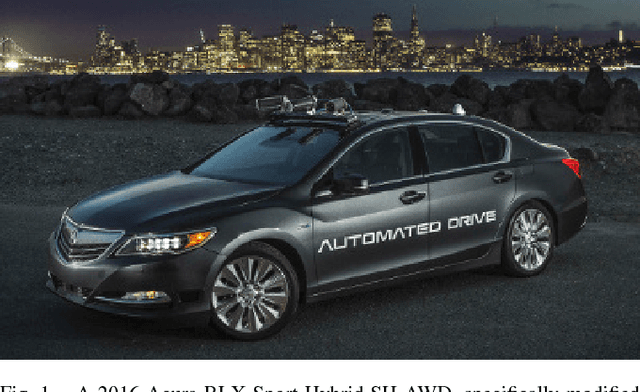

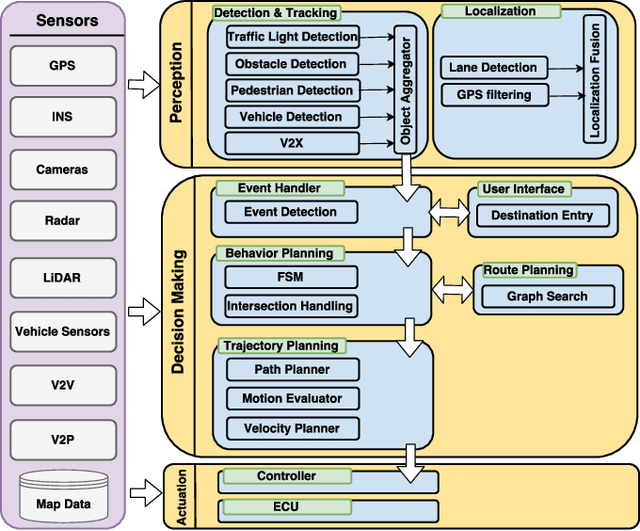

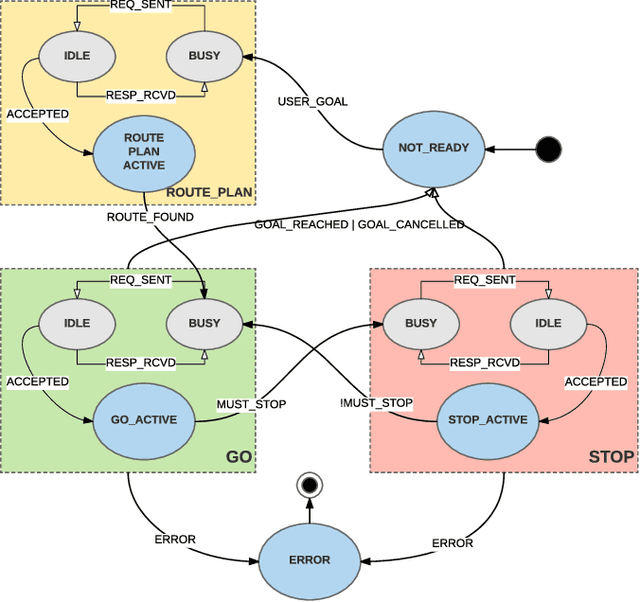

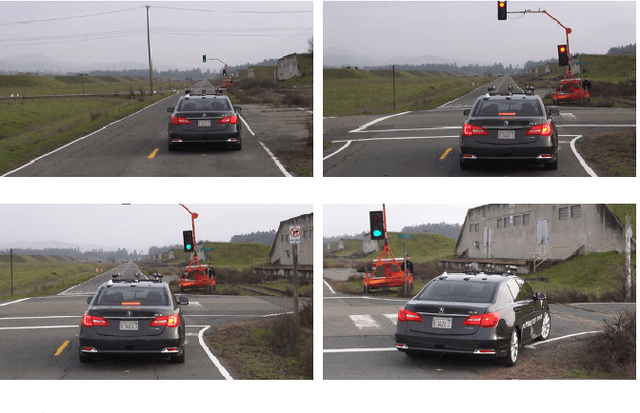

Abstract: Each year, millions of motor vehicle traffic accidents around the world cause a large number of fatalities and injuries and significant material loss. Automated Driving (AD) has the potential to drastically reduce such accidents. In this work, we focus on the technical challenges that arise from AD in urban environments. We present the overall architecture of an AD system and describe the perception and planning modules in detail. The AD system, built on a modified Acura RLX, was demonstrated on a course at GoMentum Station in California. We demonstrated autonomous handling of four scenarios: traffic lights, cross-traffic at intersections, construction zones, and pedestrians. The AD vehicle displayed safe behavior and performed consistently in repeated demonstrations with slight variations in conditions. Overall, we completed 44 runs, encompassing 110 km of automated driving, with only 3 cases in which the driver took over control of the vehicle, mostly due to errors in GPS positioning. Our demonstration showed that robust and consistent behavior in urban scenarios is possible, yet more investigation is necessary before a full-scale roll-out on public roads.
