Salvador Ros

ALBERTI, a Multilingual Domain Specific Language Model for Poetry Analysis

Jul 03, 2023

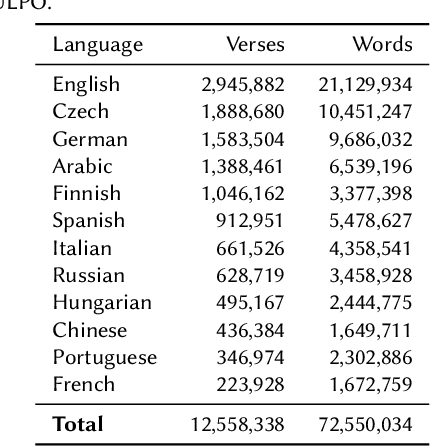

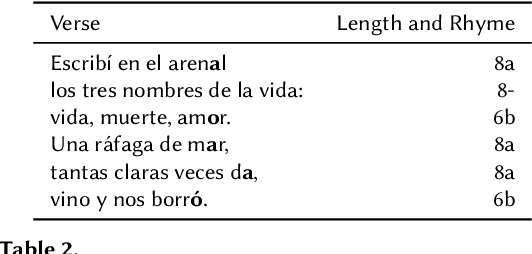

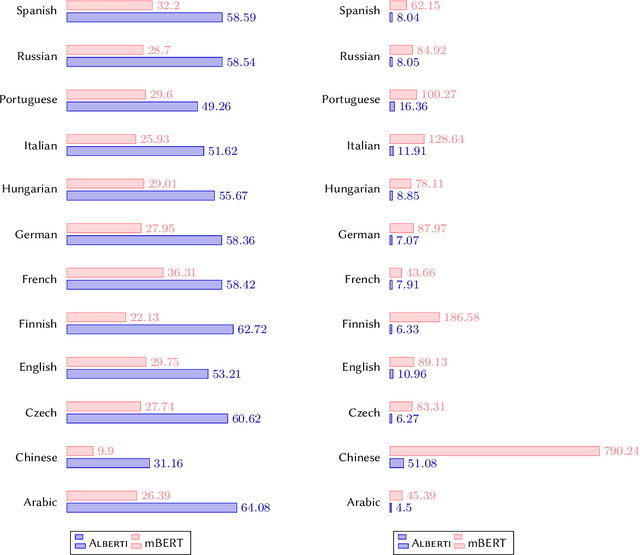

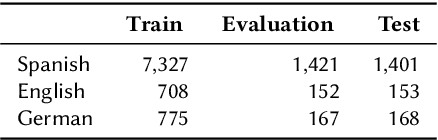

Abstract: The computational analysis of poetry is limited by the scarcity of tools to automatically analyze and scan poems. In a multilingual setting, the problem is exacerbated, as scansion and rhyme systems only exist for individual languages, making comparative studies very challenging and time-consuming. In this work, we present ALBERTI, the first multilingual pre-trained large language model for poetry. Through domain-specific pre-training (DSP), we further trained multilingual BERT on a corpus of over 12 million verses from 12 languages. We evaluated its performance on two structural poetry tasks: Spanish stanza type classification, and metrical pattern prediction for Spanish, English and German. In both cases, ALBERTI outperforms multilingual BERT and other transformer-based models of similar sizes, and even achieves state-of-the-art results for German when compared to rule-based systems, demonstrating the feasibility and effectiveness of DSP in the poetry domain.

LyricSIM: A novel Dataset and Benchmark for Similarity Detection in Spanish Song LyricS

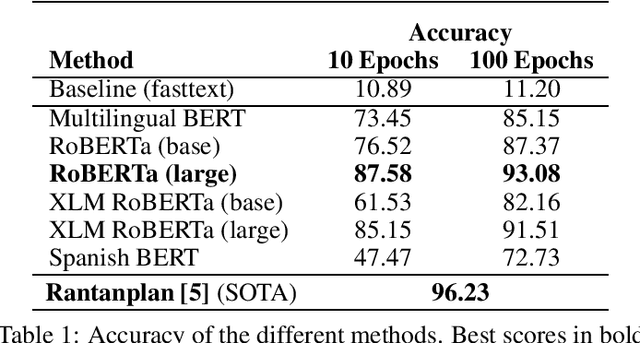

Jun 02, 2023

Abstract: In this paper, we present a new dataset and benchmark tailored to the task of semantic similarity in song lyrics. Our dataset, originally consisting of 2775 pairs of Spanish songs, was annotated in a collective annotation experiment by 63 native annotators. After collecting and refining the data to ensure a high degree of consensus and data integrity, we obtained 676 high-quality annotated pairs, which we used to evaluate the performance of various state-of-the-art monolingual and multilingual language models. In doing so, we established baseline results that we hope will be useful to the community in future academic and industrial applications in this area.

Predicting metrical patterns in Spanish poetry with language models

Nov 18, 2020

Abstract: In this paper, we compare the automated metrical pattern identification systems available for Spanish against extensive experiments in which language models are fine-tuned on the same task. Our results suggest that BERT-based models, despite being initially conceived for semantic tasks, retain enough structural information to perform reasonably well at Spanish scansion.

DISCO PAL: Diachronic Spanish Sonnet Corpus with Psychological and Affective Labels

Jul 09, 2020

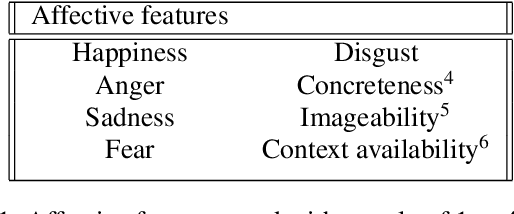

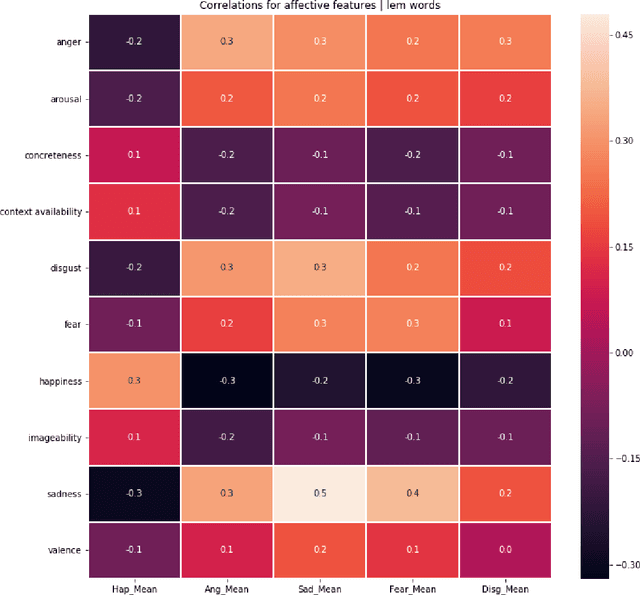

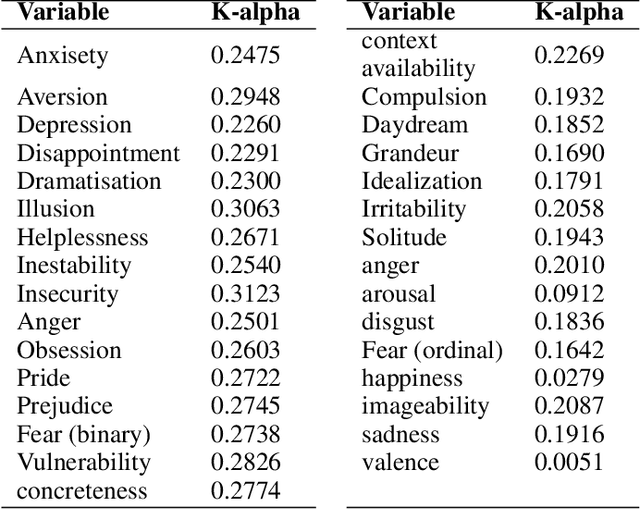

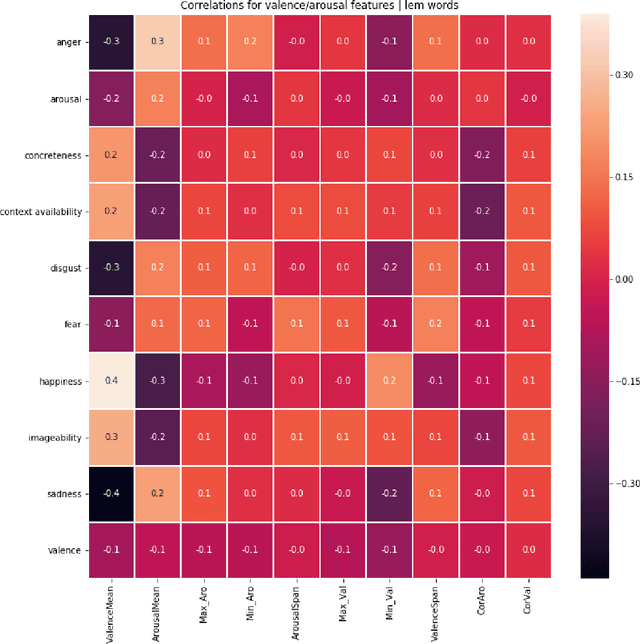

Abstract: Nowadays, there are many applications of text mining over corpora in different languages, such as using supervised machine learning to predict the labels associated with a text from features derived from the text itself. However, most of these applications are based on prose texts, and applications that work with poetry are lacking. One example of text mining applied to poetry is the use of features derived from a poem's individual words to capture lexical, sublexical and interlexical meaning, and thereby infer the General Affective Meaning (GAM) of the text. However, although this approach has proven useful for poetry in some languages, there is a lack of studies both for Spanish poetry and for highly structured poetic compositions such as sonnets. This article presents a study of a labeled corpus of Spanish sonnets, in order to analyse whether it is possible to build features from their individual words that predict their GAM. The purpose of this is to model sonnets at an affective level. The article also analyses the relationship between the GAM of the sonnets and their content. For this, we consider the content from a psychological perspective, using tags to identify when a sonnet is related to a specific term (e.g., when the sonnet's content is related to "daydream"). Then, we study how the GAM changes according to each of those psychological terms. The corpus contains 230 Spanish sonnets by authors from the 15th to the 19th century. This corpus was annotated by different domain experts, who labeled the poems with affective features as well as with domain concepts from psychology. Thanks to this, the corpus of sonnets can be used in different applications, such as poetry recommender systems, personality text mining studies of the authors, or the use of poetry for therapeutic purposes.