Sagar Patel

CrystalBox: Future-Based Explanations for DRL Network Controllers

Feb 27, 2023

Abstract:Lack of explainability is a key factor limiting the practical adoption of high-performance Deep Reinforcement Learning (DRL) controllers. Explainable RL for networking has hitherto used salient input features to interpret a controller's behavior. However, these feature-based solutions do not completely explain the controller's decision-making process. Often, operators are interested in understanding the impact of a controller's actions on performance in the future, which feature-based solutions cannot capture. In this paper, we present CrystalBox, a framework that explains a controller's behavior in terms of the future impact on key network performance metrics. CrystalBox employs a novel learning-based approach to generate succinct and expressive explanations. We use reward components of the DRL network controller, which are key performance metrics meaningful to operators, as the basis for explanations. CrystalBox is generalizable and can work across both discrete and continuous control environments without any changes to the controller or the DRL workflow. Using adaptive bitrate streaming and congestion control, we demonstrate CrystalBox's ability to generate high-fidelity future-based explanations. We additionally present three practical use cases of CrystalBox: cross-state explainability, guided reward design, and network observability.

Prioritized Trace Selection: Towards High-Performance DRL-based Network Controllers

Feb 24, 2023

Abstract:Deep Reinforcement Learning (DRL) based controllers offer high performance in a variety of network environments. However, simulator-based training of DRL controllers using highly skewed datasets of real-world traces often results in poor performance in the wild. In this paper, we put forward a generalizable solution for training high-performance DRL controllers in simulators: Prioritized Trace Selection (PTS). PTS employs an automated three-stage process. First, we identify critical features that determine trace behavior. Second, we classify the traces into clusters. Finally, we dynamically identify and prioritize the salient clusters during training. PTS does not require any changes to the DRL workflow. It can work across both on-policy and off-policy DRL algorithms. We use Adaptive Bit Rate selection and Congestion Control as representative applications to show that PTS offers better performance in simulation and in the real world, across multiple controllers and DRL algorithms. Our novel ABR controller, Gelato, trained with PTS, outperforms state-of-the-art controllers on the real-world live-streaming platform Puffer, reducing stalls by 59% and significantly improving average video quality.

Feature Selection on a Flare Forecasting Testbed: A Comparative Study of 24 Methods

Sep 30, 2021

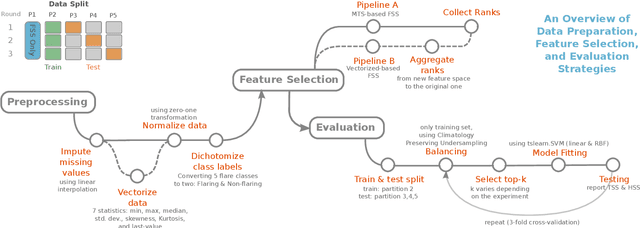

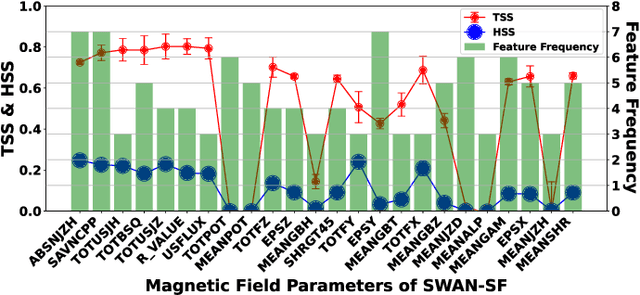

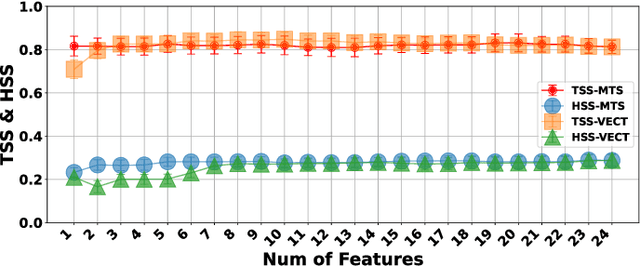

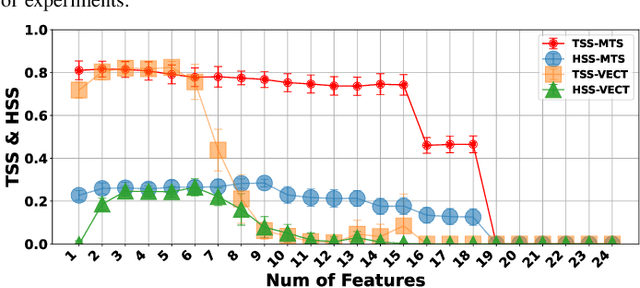

Abstract:The Space-Weather ANalytics for Solar Flares (SWAN-SF) is a multivariate time series benchmark dataset recently created to serve the heliophysics community as a testbed for solar flare forecasting models. SWAN-SF contains 54 unique features, with 24 quantitative features computed from the photospheric magnetic field maps of active regions, describing their precedent flare activity. In this study, for the first time, we systematically attacked the problem of quantifying the relevance of these features to the ambitious task of flare forecasting. We implemented an end-to-end pipeline for preprocessing, feature selection, and evaluation phases. We incorporated 24 Feature Subset Selection (FSS) algorithms, including multivariate and univariate, supervised and unsupervised, wrappers and filters. We methodologically compared the results of different FSS algorithms, both on the multivariate time series and vectorized formats, and tested their correlation and reliability, to the extent possible, by using the selected features for flare forecasting on unseen data, in univariate and multivariate fashions. We concluded our investigation with a report of the best FSS methods in terms of their top-k features, and an analysis of the findings. We hope that the reproducibility of our study and the availability of the data will allow future attempts to be compared with our findings and with one another.

3D Topology Optimization using Convolutional Neural Networks

Aug 22, 2018

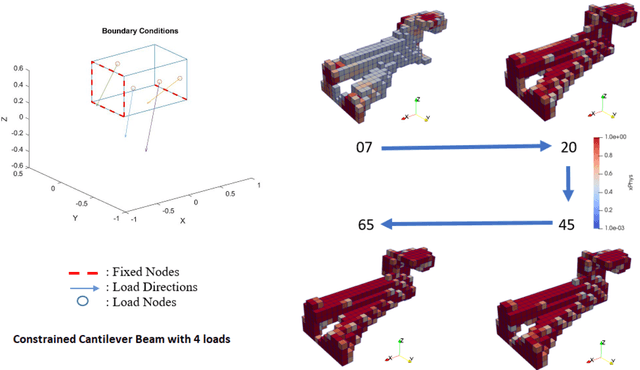

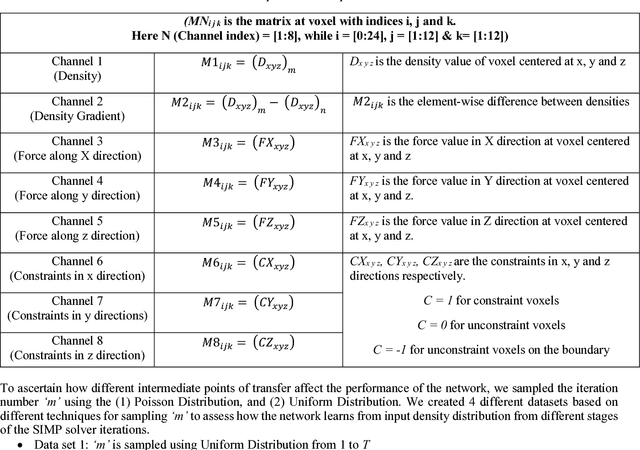

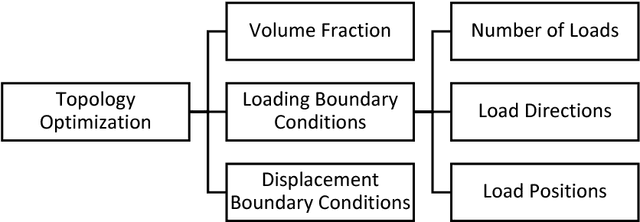

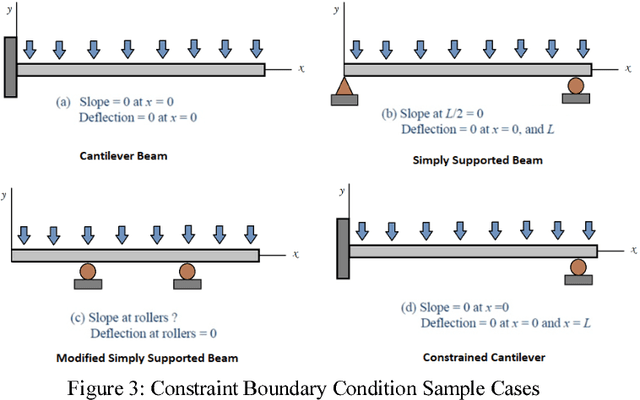

Abstract:Topology optimization is computationally demanding because it requires the assembly and solution of a finite element problem for each material distribution hypothesis. As a complementary alternative to the traditional physics-based topology optimization, we explore a data-driven approach that can quickly generate accurate solutions. To this end, we propose a deep learning approach based on a 3D encoder-decoder Convolutional Neural Network architecture for accelerating 3D topology optimization, and we determine the optimal computational strategy for its deployment. Analysis of the iteration-wise progress of the Solid Isotropic Material with Penalization process is used as a guideline to study how the earlier steps of conventional topology optimization can be used as input for our approach to predict the final optimized output structure directly from this input. We conduct a comparative study between multiple strategies for training the neural network and assess the effect of using various input combinations for the CNN to finalize the strategy with the highest accuracy in predictions for practical deployment. For the best performing network, we achieved about a 40% reduction in overall computation time while also attaining structural accuracies on the order of 96%.
