Saeed Akhavan

Sparsity Based Multi-Source Robust 3D Localization Using a Moving Receiver

Aug 12, 2024

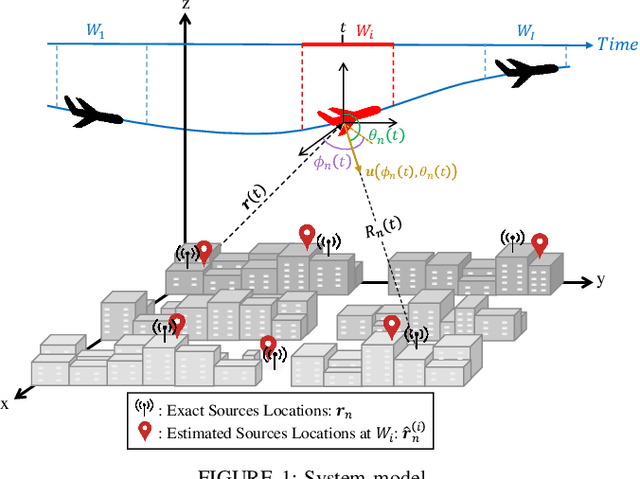

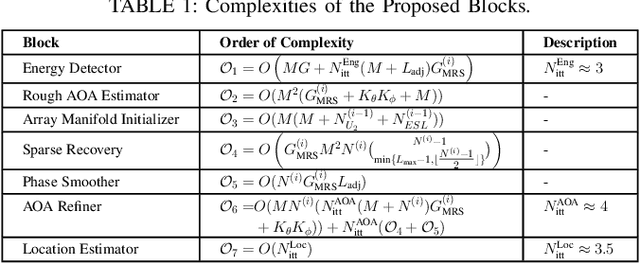

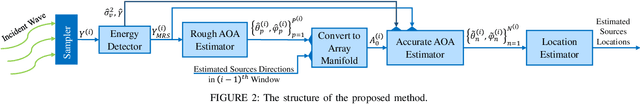

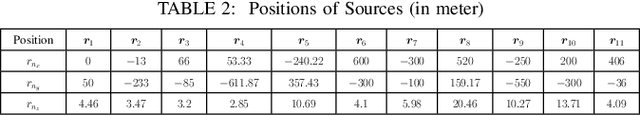

Abstract: Accurately localizing multiple sources is a critical task with various applications in wireless communications, such as emergency services, including search and rescue operations after natural disasters. However, scenarios in which the receiver is moving are not addressed by recent studies. This paper tackles the angle of arrival (AOA) 3D-localization problem for multiple sparse signal sources with a moving receiver that has a limited number of antennas, potentially fewer than the number of sources. First, an energy detector algorithm is proposed that exploits the sparsity of the signal to eliminate its noisy samples. Subsequently, the elevation and azimuth AOAs of the sources are roughly estimated using the two-dimensional multiple signal classification (2D-MUSIC) method. Next, an algorithm is proposed to refine these estimates and obtain the elevation and azimuth AOAs more accurately. To this end, we propose a sparse recovery algorithm that exploits the sparsity of the signals. Then, we propose a phase smoothing algorithm to refine the estimates at the output of the sparse recovery algorithm. Finally, the K-SVD algorithm is employed to find accurate elevation and azimuth AOAs of the sources. For localization, a new multi-source 3D-localization algorithm is proposed to estimate the positions of the sources over a sequence of time windows. Extensive simulations are carried out to demonstrate the effectiveness of the proposed framework.
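The first step of the pipeline, discarding noise-only samples of a sparse signal with an energy detector, can be illustrated with a minimal sketch. This is not the paper's algorithm: the function name `energy_detect`, the frame length, the threshold factor, and the median-based noise-floor estimate are all assumptions chosen for illustration.

```python
import numpy as np

def energy_detect(x, frame_len=64, factor=3.0):
    """Flag frames whose average energy exceeds a noise-based threshold.

    Assumption (not from the paper): the noise floor is taken as the
    median frame energy, which is only sensible when the signal is
    sparse in time, so that most frames contain noise alone.
    """
    x = np.asarray(x)
    n_frames = len(x) // frame_len
    # Drop any trailing samples that do not fill a whole frame.
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    energy = np.mean(np.abs(frames) ** 2, axis=1)
    threshold = factor * np.median(energy)
    return energy > threshold, energy

# Synthetic example: 20 frames of complex noise, with a unit-amplitude
# tone present only in frames 5 and 6.
rng = np.random.default_rng(0)
n = 64 * 20
x = 0.1 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
x[64 * 5 : 64 * 7] += np.exp(2j * np.pi * 0.1 * np.arange(128))

mask, energy = energy_detect(x)
active = np.flatnonzero(mask)  # indices of frames kept for later processing
```

In this synthetic case only the two frames carrying the tone exceed the threshold; a later stage such as 2D-MUSIC would then operate on those frames alone.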

SUM: Saliency Unification through Mamba for Visual Attention Modeling

Jun 25, 2024

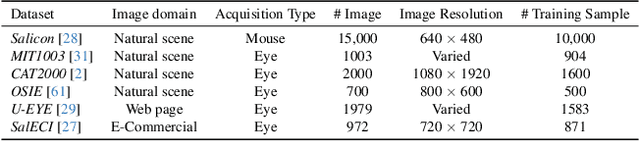

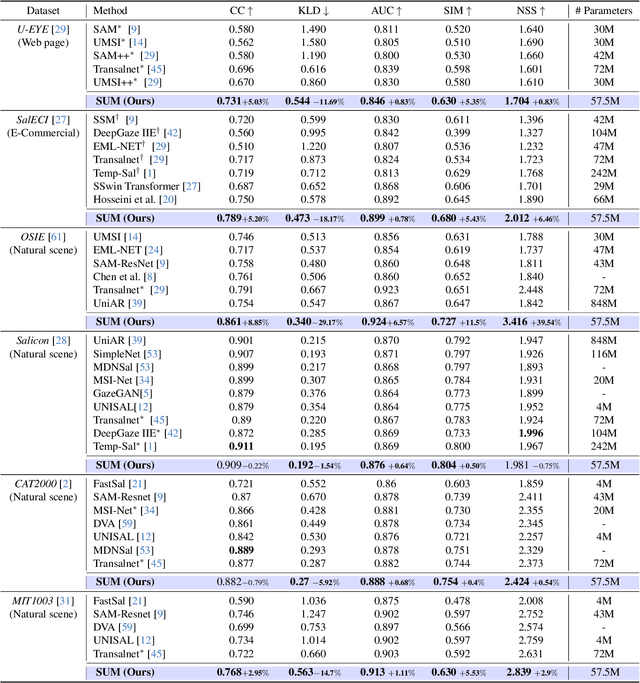

Abstract: Visual attention modeling, important for interpreting and prioritizing visual stimuli, plays a significant role in applications such as marketing, multimedia, and robotics. Traditional saliency prediction models, especially those based on Convolutional Neural Networks (CNNs) or Transformers, achieve notable success by leveraging large-scale annotated datasets. However, the current state-of-the-art (SOTA) models that use Transformers are computationally expensive. Additionally, a separate model is often required for each image type, so a unified approach is lacking. In this paper, we propose Saliency Unification through Mamba (SUM), a novel approach that integrates the efficient long-range dependency modeling of Mamba with U-Net to provide a unified model for diverse image types. Using a novel Conditional Visual State Space (C-VSS) block, SUM dynamically adapts to various image types, including natural scenes, web pages, and commercial imagery. Our comprehensive evaluations across five benchmarks demonstrate that SUM seamlessly adapts to different visual characteristics and consistently outperforms existing models. These results position SUM as a versatile and powerful tool for advancing visual attention modeling, offering a robust solution applicable across different types of visual content.
