Ryan Watkins

Needs and Artificial Intelligence

Feb 18, 2022

Abstract: Throughout their history, Homo sapiens have used technologies to better satisfy their needs. The relation between needs and technology is so fundamental that the US National Research Council defined the distinguishing characteristic of technology as its goal "to make modifications in the world to meet human needs". Artificial intelligence (AI) is one of the most promising emerging technologies of our time. Similar to other technologies, AI is expected "to meet [human] needs". In this article, we reflect on the relationship between needs and AI, and call for the realisation of needs-aware AI systems. We argue that re-thinking needs for, through, and by AI can be a very useful means towards the development of realistic approaches for Sustainable, Human-centric, Accountable, Lawful, and Ethical (HALE) AI systems. We discuss some of the most critical gaps, barriers, enablers, and drivers of co-creating future AI-based socio-technical systems in which [human] needs are well considered and met. Finally, we provide an overview of potential threats and HALE considerations that should be carefully taken into account, and call for joint, immediate, and interdisciplinary efforts and collaborations.

Needs-aware Artificial Intelligence: AI that 'serves [human] needs'

Feb 10, 2022

Abstract: Many boundaries are shaping, and will continue to shape, the future of Artificial Intelligence (AI). We push on these boundaries in order to make progress, but they are both pliable and resilient, always creating new boundaries of what AI can (or should) achieve. Among these are technical boundaries (such as processing capacity), psychological boundaries (such as human trust in AI systems), ethical boundaries (such as with AI weapons), and conceptual boundaries (such as the AI people can imagine). It is within this final category (while it can play a fundamental role in all other boundaries) that we find the construct of needs and the limitations that our current concept of need places on the future of AI.

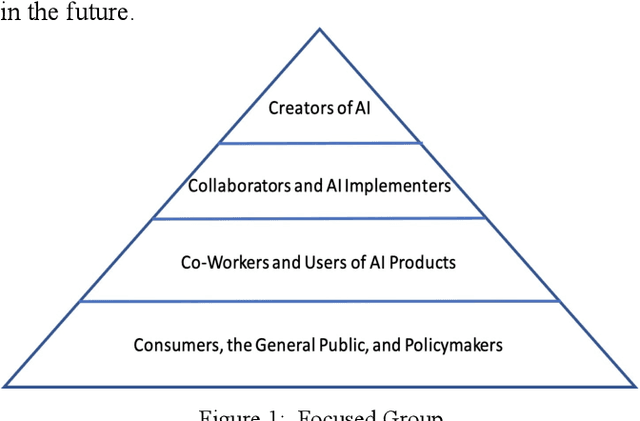

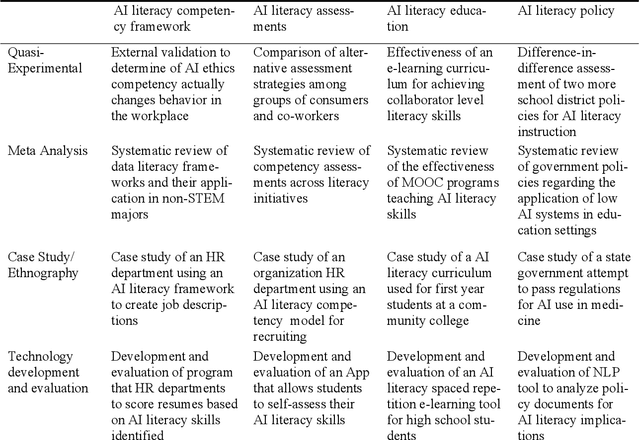

Competency Model Approach to AI Literacy: Research-based Path from Initial Framework to Model

Aug 12, 2021

Abstract: The recent developments in Artificial Intelligence (AI) technologies challenge educators and educational institutions to respond with curriculum and resources that prepare students of all ages with the foundational knowledge and skills for success in the AI workplace. Research on AI Literacy could lead to an effective and practical platform for developing these skills. We propose and advocate for a pathway for developing AI Literacy as a pragmatic and useful tool for AI education. Such a discipline requires moving beyond a conceptual framework to a multi-level competency model with associated competency assessments. This approach to AI Literacy could guide future development of instructional content as we prepare a range of groups (i.e., consumers, co-workers, collaborators, and creators). We propose here a research matrix as an initial step in the development of a roadmap for AI Literacy research, which requires a systematic and coordinated effort with the support of publication outlets and research funding, to expand the areas of competency and assessments.

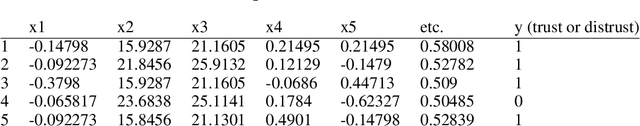

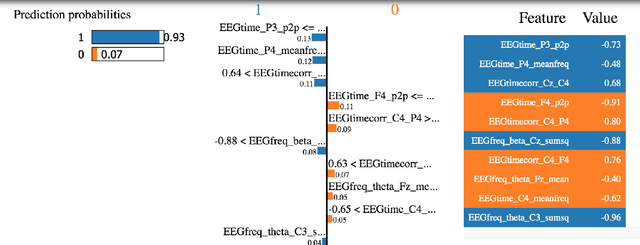

Monitoring Trust in Human-Machine Interactions for Public Sector Applications

Oct 16, 2020

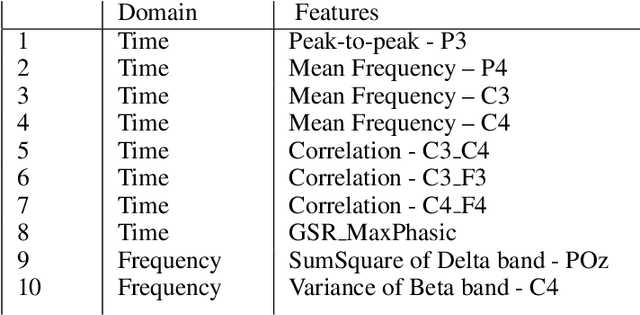

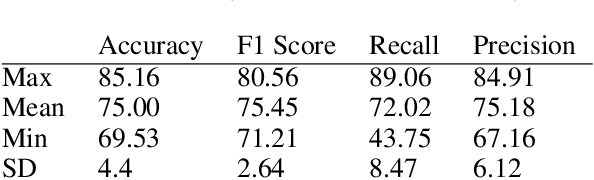

Abstract: The work reported here addresses the capacity of psychophysiological sensors and measures using Electroencephalogram (EEG) and Galvanic Skin Response (GSR) to detect levels of trust for humans using AI-supported Human-Machine Interaction (HMI). Improvements to the analysis of EEG and GSR data may create models that perform as well as, or better than, traditional tools. A challenge in analyzing the EEG and GSR data is the large amount of training data required due to the large number of variables in the measurements. Researchers have routinely used standard machine-learning classifiers like artificial neural networks (ANN), support vector machines (SVM), and K-nearest neighbors (KNN). Traditionally, these have provided few insights into which features of the EEG and GSR data facilitate the most and least accurate predictions, thus making it harder to improve the HMI and human-machine trust relationship. A key ingredient in applying trust-sensor research results to practical situations and monitoring trust in work environments is understanding which key features contribute to trust and then reducing the amount of data needed for practical applications. We used the Local Interpretable Model-agnostic Explanations (LIME) model as a process to reduce the volume of data required to monitor and enhance trust in HMI systems, a technology that could be valuable for governmental and public sector applications. Explainable AI can make HMI systems transparent and promote trust. From customer service in government agencies and community-level non-profit public service organizations to national military and cybersecurity institutions, many public sector organizations are increasingly concerned with having effective and ethical HMI with services that are trustworthy, unbiased, and free of unintended negative consequences.

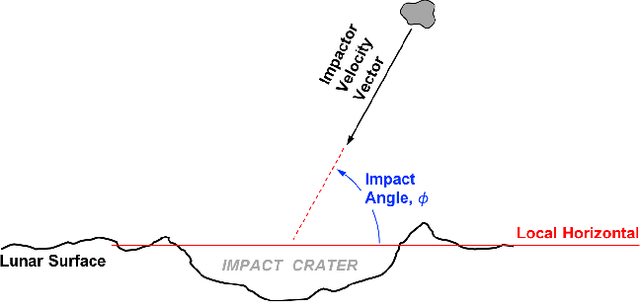

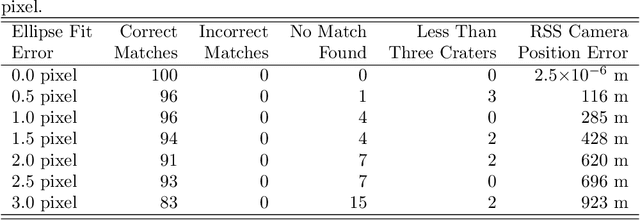

Lunar Crater Identification in Digital Images

Sep 14, 2020

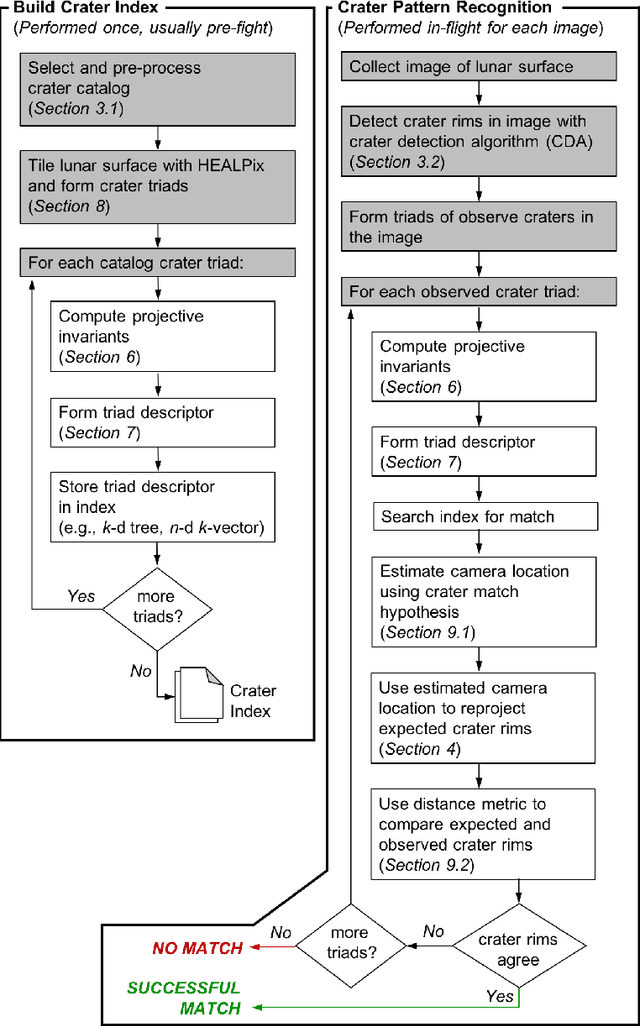

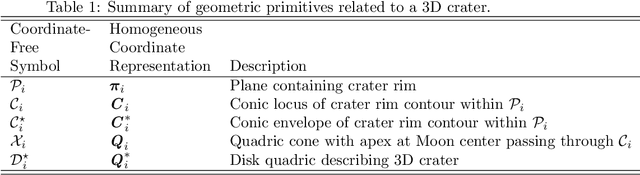

Abstract: It is often necessary to identify a pattern of observed craters in a single image of the lunar surface and without any prior knowledge of the camera's location. This so-called "lost-in-space" crater identification problem is common in both crater-based terrain relative navigation (TRN) and in automatic registration of scientific imagery. Past work on crater identification has largely been based on heuristic schemes, with poor performance outside of a narrowly defined operating regime (e.g., nadir pointing images, small search areas). This work provides the first mathematically rigorous treatment of the general crater identification problem. It is shown when it is (and when it is not) possible to recognize a pattern of elliptical crater rims in an image formed by perspective projection. For the cases when it is possible to recognize a pattern, descriptors are developed using invariant theory that provably capture all of the viewpoint invariant information. These descriptors may be pre-computed for known crater patterns and placed in a searchable index for fast recognition. New techniques are also developed for computing pose from crater rim observations and for evaluating crater rim correspondences. These techniques are demonstrated on both synthetic and real images.
