Ryan Gerdes

Contrastive Graph Convolutional Networks for Hardware Trojan Detection in Third Party IP Cores

Mar 04, 2022

Abstract: The availability of wide-ranging third-party intellectual property (3PIP) cores enables integrated circuit (IC) designers to focus on designing high-level features in ASICs/SoCs. The massive proliferation of ICs brings with it an increased number of bad actors seeking to exploit those circuits for various nefarious reasons. This is not surprising, as integrated circuits affect every aspect of society. Thus, malicious logic (hardware Trojans, HTs) surreptitiously injected by untrusted vendors into 3PIP cores used in IC design is an ever-present threat. In this paper, we explore methods for identifying trigger-based HTs in designs containing synthesizable IP cores without a golden model. Specifically, we develop methods that detect hardware Trojans by detecting the triggers embedded in ICs, based purely on netlists acquired from the vendor. We propose GATE-Net, a deep learning model based on graph convolutional networks (GCNs) and trained using supervised contrastive learning, which flags designs containing randomly inserted triggers using only the corresponding netlist. Our proposed architecture achieves significant improvements over state-of-the-art learning models, yielding an average improvement in detection performance of 46.99% for combinatorial triggers and 21.91% for sequential triggers across a variety of circuit types. Through rigorous experimentation and qualitative and quantitative performance evaluations, we demonstrate the effectiveness of GATE-Net and of its supervised contrastive training for HT detection.
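The abstract does not spell out GATE-Net's layers, so the following is only a minimal NumPy sketch of the two ingredients it names: graph convolutions over a netlist graph, and a supervised contrastive loss over whole-design embeddings. It assumes a Kipf-and-Welling-style normalized-adjacency GCN, mean pooling into one vector per design, and the SupCon formulation of Khosla et al. (2020). The netlist-to-graph encoding (gates as nodes, wires as undirected edges, one-hot gate-type features), the layer sizes, the temperature, the clean/Trojan labels, and every function name here are hypothetical. Only the forward pass and loss are shown; there is no training loop.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric GCN normalization D^-1/2 (A + I) D^-1/2 (Kipf-and-Welling style)."""
    a_hat = adj + np.eye(adj.shape[0])          # self-loops so each gate keeps its own features
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def graph_embedding(adj: np.ndarray, features: np.ndarray, weights: list) -> np.ndarray:
    """Run stacked GCN layers, mean-pool node states, and L2-normalize the design vector."""
    a_norm = normalized_adjacency(adj)
    h = features
    for w in weights:
        h = np.maximum(a_norm @ h @ w, 0.0)     # one graph convolution followed by ReLU
    z = h.mean(axis=0)                          # readout: average over all gates (nodes)
    return z / (np.linalg.norm(z) + 1e-12)


def supcon_loss(z: np.ndarray, labels: np.ndarray, temperature: float = 0.1) -> float:
    """Supervised contrastive loss (Khosla et al., 2020) for a batch of unit-norm embeddings."""
    n = z.shape[0]
    self_mask = np.eye(n, dtype=bool)
    sim = np.where(self_mask, -np.inf, z @ z.T / temperature)  # drop each anchor from its own denominator
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max + np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    log_prob = sim - log_denom                  # log-softmax over all other samples in the batch
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    pos_count = pos_mask.sum(axis=1)
    valid = pos_count > 0                       # anchors with no same-label partner are skipped
    pos_log_prob = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    return float(-(pos_log_prob[valid] / pos_count[valid]).mean())


# Usage on hypothetical toy "netlists": random undirected gate graphs, 4 gate types.
rng = np.random.default_rng(0)


def random_netlist(num_gates: int):
    upper = np.triu(rng.random((num_gates, num_gates)) < 0.3, k=1)
    adj = (upper | upper.T).astype(float)
    feats = np.eye(4)[rng.integers(0, 4, size=num_gates)]  # one-hot gate type
    return adj, feats


weights = [rng.standard_normal((4, 8)), rng.standard_normal((8, 8))]
batch = [random_netlist(n) for n in (6, 7, 8, 9)]
z = np.stack([graph_embedding(a, x, weights) for a, x in batch])
labels = np.array([0, 0, 1, 1])  # hypothetical: 0 = clean design, 1 = design with a trigger
print(supcon_loss(z, labels))
```

The contrastive objective pulls embeddings of designs with the same label together and pushes the two classes apart; in this assumed setup, a downstream classifier would then separate clean from Trojan-infected netlists in that embedding space.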

Securing your Airspace: Detection of Drones Trespassing Protected Areas

Nov 05, 2021

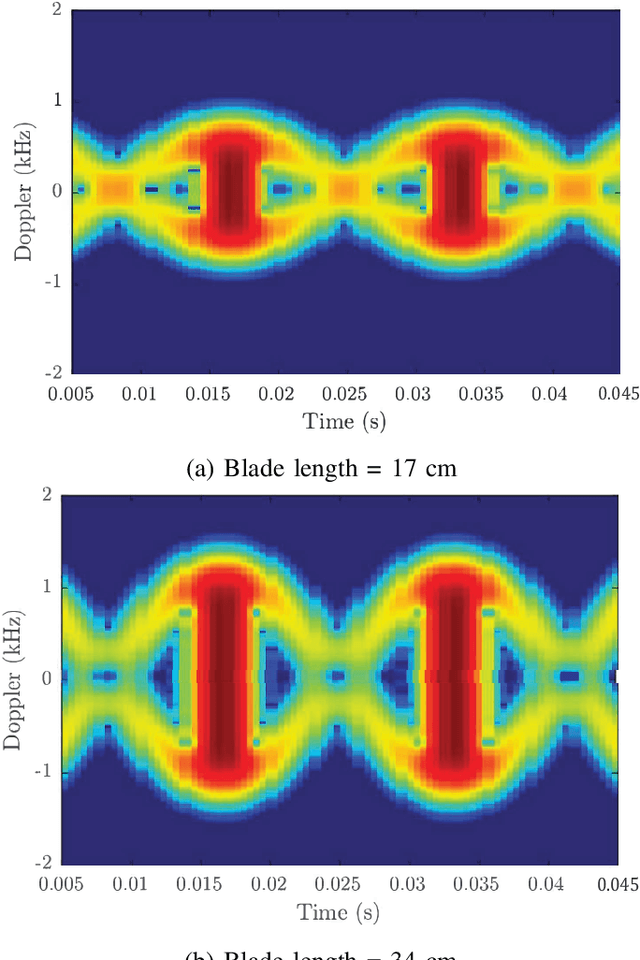

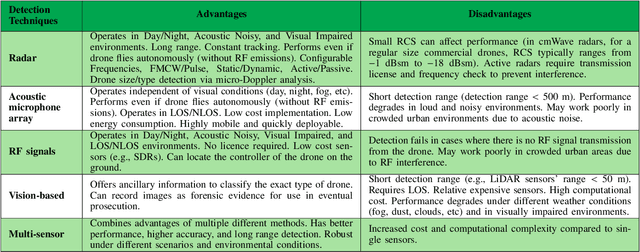

Abstract: There has been rapid growth in the deployment of Unmanned Aerial Vehicles (UAVs) in applications ranging from vital safety-of-life uses, such as surveillance and reconnaissance at nuclear power plants, to entertainment and hobby applications. While popular, drones can pose serious security threats, whether unintentional or intentional. Thus, there is an urgent need for accurate, real-time detection and classification of drones. In this article, we survey drone detection approaches and present their advantages and limitations. We analyze detection techniques that employ radar, acoustic and optical sensors, and emitted radio frequency (RF) signals. We compare their performance, accuracy, and cost, and conclude that combining multiple sensing modalities might be the path forward.

GhostImage: Perception Domain Attacks against Vision-based Object Classification Systems

Jan 21, 2020

Abstract: In vision-based object classification systems, imaging sensors perceive the environment, and objects are then detected and classified for decision-making purposes. Vulnerabilities in the perception domain enable an attacker to inject false data into the sensor, which could lead to unsafe consequences. In this work, we focus on camera-based systems and propose GhostImage attacks, whose goal is either to create a fake perceived object or to obfuscate an object's image, leading to wrong classification results. This is achieved by remotely projecting adversarial patterns into camera-perceived images, exploiting two common effects in optical imaging systems, namely lens flare/ghost effects and auto-exposure control. To improve the robustness of the attack to channel perturbations, we generate optimal input patterns by integrating adversarial machine learning techniques with a trained end-to-end channel model. We realize GhostImage attacks with a projector and conduct comprehensive experiments using three different image datasets, in indoor and outdoor environments, with three different cameras. We demonstrate that GhostImage attacks are applicable to both autonomous driving and security surveillance scenarios. Experimental results show that, depending on the projector-camera distance, attack success rates can reach as high as 100%.
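The abstract describes generating input patterns by optimizing through a trained end-to-end channel model, but gives no model details. The sketch below only illustrates that general idea, gradient-based optimization of a projected pattern through a differentiable channel model into a classifier, under heavy simplifying assumptions. The channel is a toy linear map (the real lens-flare and auto-exposure effects are nonlinear and, per the abstract, learned), the victim classifier is a linear softmax model, and the optimizer is plain projected gradient descent on a targeted cross-entropy loss. All names, sizes, and parameters are hypothetical.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    shifted = logits - logits.max()             # subtract max for numerical stability
    e = np.exp(shifted)
    return e / e.sum()


def perceived_image(pattern, background, channel):
    """Toy linear channel (assumption): the camera sees the scene plus a linear map of the projected pattern."""
    return background + channel @ pattern


def target_loss(pattern, background, channel, w, b, target):
    """Cross-entropy of the toy victim classifier's output w.r.t. the attacker's target class."""
    probs = softmax(w @ perceived_image(pattern, background, channel) + b)
    return float(-np.log(probs[target]))


def optimize_pattern(background, channel, w, b, target, steps=200):
    """Projected gradient descent on the projector pattern, constrained to [0, 1] intensities."""
    a = w @ channel                                   # pattern -> logits is affine: a @ p + const
    step = 1.0 / (np.linalg.norm(a, 2) ** 2 + 1e-12)  # conservative step for the smooth CE loss
    pattern = np.full(channel.shape[1], 0.5)          # start from a mid-gray pattern
    onehot = np.eye(w.shape[0])[target]
    for _ in range(steps):
        probs = softmax(w @ perceived_image(pattern, background, channel) + b)
        grad = a.T @ (probs - onehot)                 # chain rule through classifier and channel
        pattern = np.clip(pattern - step * grad, 0.0, 1.0)  # project back onto valid intensities
    return pattern


# Usage with hypothetical toy sizes and random stand-in models.
rng = np.random.default_rng(1)
num_pixels, pattern_dim, num_classes = 12, 5, 3
background = rng.random(num_pixels)
channel = rng.random((num_pixels, pattern_dim))
w = rng.standard_normal((num_classes, num_pixels))
b = np.zeros(num_classes)
target = 2                                            # hypothetical attacker-chosen class
before = target_loss(np.full(pattern_dim, 0.5), background, channel, w, b, target)
pattern = optimize_pattern(background, channel, w, b, target)
after = target_loss(pattern, background, channel, w, b, target)
print(f"target-class loss: {before:.3f} -> {after:.3f}")
```

Clipping to [0, 1] stands in for the physical limits of a projector; a faithful reproduction would replace the linear `channel` with a learned, nonlinear model of the flare and exposure effects the abstract describes.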
