Russell S. A. Brinkworth

A generalised novel loss function for computational fluid dynamics

Nov 26, 2024

Abstract: Computational fluid dynamics (CFD) simulations are crucial in automotive, aerospace, maritime and medical applications, but are limited by the complexity, cost and computational requirements of directly calculating the flow, often taking days of compute time. Machine-learning architectures, such as controlled generative adversarial networks (cGANs), hold significant potential in enhancing or replacing CFD investigations, due to their ability to approximate the underlying data distribution of a dataset. Unlike traditional cGAN applications, where the entire image carries information, CFD data contains small regions of highly variant data, immersed in a large context of low variance that is of minimal importance. This renders most existing deep learning techniques, which give equal importance to every portion of the data during training, inefficient. To mitigate this, a novel loss function called Gradient Mean Squared Error (GMSE) is proposed, which automatically and dynamically identifies the regions of importance on a field-by-field basis, assigning appropriate weights according to the local variance. To assess the effectiveness of the proposed solution, three identical networks were trained, optimised respectively with Mean Squared Error (MSE) loss, the proposed GMSE loss and a dynamic variant of GMSE (DGMSE). The novel loss function resulted in faster loss convergence, correlating to reduced training time, whilst also displaying an 83.6% reduction in structural similarity error between the generated field and ground truth simulations, a 76.6% higher maximum rate of loss and an increased ability to fool a discriminator network. It is hoped that this loss function will enable accelerated machine learning within computational fluid dynamics.
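The abstract describes GMSE only at a high level, so the following is a rough illustrative sketch of the general idea (weighting squared error more heavily where the field varies sharply), not the paper's actual formulation. The function name `gradient_weighted_mse`, the use of the target field's gradient magnitude as a stand-in for local variance, and the weight range of 1 to 2 are all assumptions made for illustration.

```python
import numpy as np

def gradient_weighted_mse(pred, target, eps=1e-8):
    """Illustrative gradient-weighted MSE for a single 2D field.

    Assumption: importance is approximated by the gradient magnitude of the
    target field; the paper's GMSE weighting may be defined differently.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)

    # Finite-difference gradients along rows (axis 0) and columns (axis 1).
    grad_rows, grad_cols = np.gradient(target)
    magnitude = np.hypot(grad_rows, grad_cols)

    # Weights lie in [1, 2]: flat regions keep weight 1, the sharpest
    # region of this particular field approaches weight 2.
    weights = 1.0 + magnitude / (magnitude.max() + eps)

    # Normalise by the total weight so the result stays on the MSE scale.
    return float(np.sum(weights * (pred - target) ** 2) / np.sum(weights))
```

Because the weights are recomputed from each target field, the emphasis shifts field by field without manual masking; on a perfectly uniform field the sketch reduces to ordinary MSE.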

Automatic Ground Truths: Projected Image Annotations for Omnidirectional Vision

Sep 12, 2017

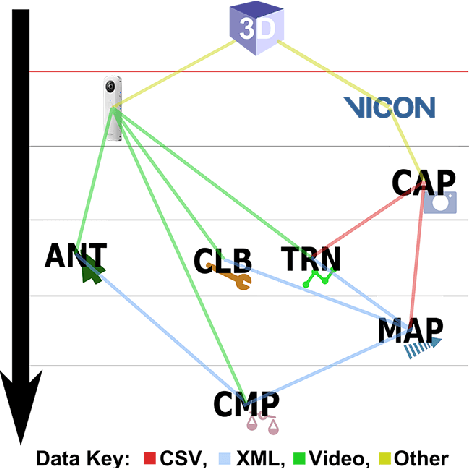

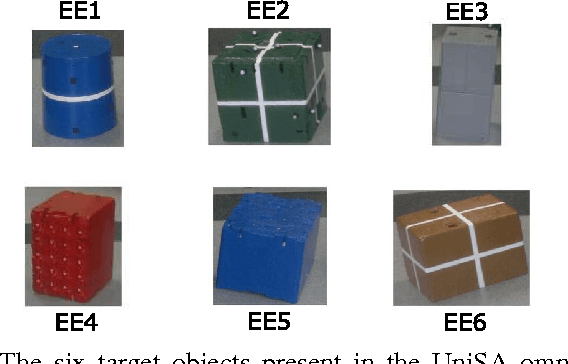

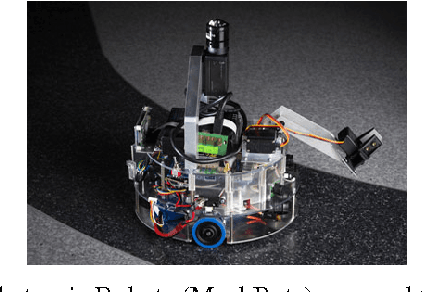

Abstract: We present a novel data set made up of omnidirectional video of multiple objects whose centroid positions are annotated automatically. Omnidirectional vision is an active field of research focused on the use of spherical imagery in video analysis and scene understanding, involving tasks such as object detection, tracking and recognition. Our goal is to provide a large and consistently annotated video data set that can be used to train and evaluate new algorithms for these tasks. Here we describe the experimental setup and software environment used to capture and map the 3D ground truth positions of multiple objects into the image. Furthermore, we estimate the expected systematic error on the mapped positions. In addition to the final data products, we publicly release the software tools and raw data necessary to re-calibrate the camera and/or redo this mapping. The software also provides a simple framework for comparing the results of standard image annotation tools or visual tracking systems against our mapped ground truth annotations.
