Ruikang K. Wang

Choroidal thinning assessment through facial video analysis

Jan 29, 2024

Abstract: Different features of skin are associated with various medical conditions and provide opportunities to evaluate and monitor body health. This study created a strategy to assess choroidal thinning through the video analysis of facial skin. Videos capturing the entire facial skin were collected from 48 participants with age-related macular degeneration (AMD) and 12 healthy individuals. These facial videos were analyzed using video-based trans-angiosomes imaging photoplethysmography (TaiPPG) to generate facial imaging biomarkers that were correlated with choroidal thickness (CT) measurements. The CT of all patients was determined using swept-source optical coherence tomography (SS-OCT). The results revealed the relationship between relative blood pulsation amplitude (BPA) in three typical facial angiosomes (cheek, side-forehead and mid-forehead) and the average macular CT (r = 0.48, p < 0.001; r = -0.56, p < 0.001; r = -0.40, p < 0.01). When considering a diagnostic threshold of 200 µm, the newly developed facial video analysis tool effectively distinguished between cases of choroidal thinning and normal cases, yielding areas under the curve of 0.75, 0.79 and 0.69. These findings shed light on the connection between choroidal blood flow and facial skin hemodynamics, which suggests the potential for predicting vascular diseases through widely accessible skin imaging data.
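The two statistics reported in this abstract, a Pearson correlation between BPA and CT and an area under the ROC curve at the 200 µm threshold, can be illustrated with a minimal sketch. It is not the authors' analysis code: the function names `pearson_r` and `roc_auc` and all numeric values are hypothetical and chosen only to show the calculation.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def roc_auc(scores, labels):
    """Area under the ROC curve, computed as the probability that a random
    positive case scores higher than a random negative case (ties count half)."""
    pos = [s for s, is_pos in zip(scores, labels) if is_pos]
    neg = [s for s, is_pos in zip(scores, labels) if not is_pos]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up illustrative values, NOT data from the study.
bpa = [0.9, 1.1, 1.3, 1.0, 1.4, 1.2]    # relative BPA in one facial region
ct_um = [260, 210, 150, 230, 140, 190]  # average macular CT in micrometres

# Label a case as "choroidal thinning" when CT falls below 200 µm,
# the diagnostic threshold named in the abstract.
thin = [ct < 200 for ct in ct_um]

r = pearson_r(bpa, ct_um)
auc = roc_auc(bpa, thin)
print(f"r = {r:.2f}, AUC = {auc:.2f}")
```

In this toy data BPA rises as CT falls, so the correlation is negative and BPA separates the thin cases from the rest. Real measurements would be noisier, which is what intermediate values such as those reported in the abstract reflect.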

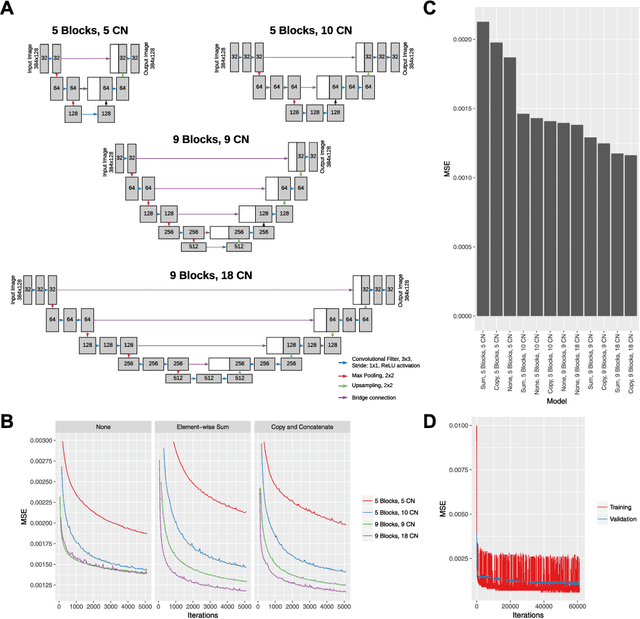

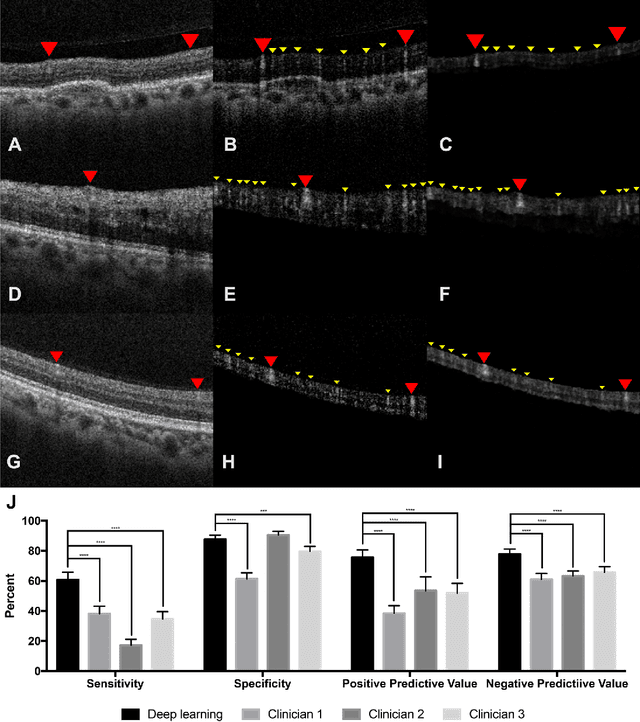

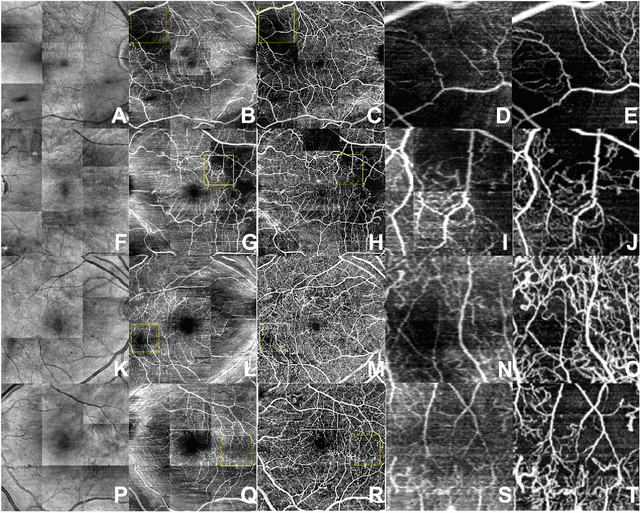

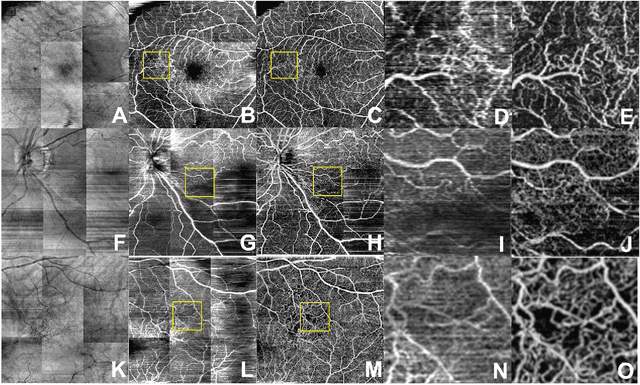

Generating retinal flow maps from structural optical coherence tomography with artificial intelligence

Feb 24, 2018

Abstract: Despite significant advances in artificial intelligence (AI) for computer vision, its application in medical imaging has been limited by the burden and limits of expert-generated labels. We used images from optical coherence tomography angiography (OCTA), a relatively new imaging modality that measures perfusion of the retinal vasculature, to train an AI algorithm to generate vasculature maps from standard structural optical coherence tomography (OCT) images of the same retinae, both exceeding the ability of expert labeling and bypassing the need for it. Deep learning was able to infer perfusion of microvasculature from structural OCT images with similar fidelity to OCTA and significantly better than expert clinicians (P < 0.00001). OCTA requires specialized hardware and laborious acquisition protocols and is prone to motion artifacts, whereas our model works directly from standard OCT images, which are ubiquitous and quick to obtain, and unlocks large volumes of previously collected standard OCT data in both existing clinical trials and clinical practice. This finding demonstrates a novel application of AI to medical imaging, whereby subtle regularities between different modalities are used to image the same body part and AI is used to generate detailed and accurate inferences of tissue function from structure imaging.
