Rui Prada

Building Persuasive Robots with Social Power Strategies

Jul 12, 2023

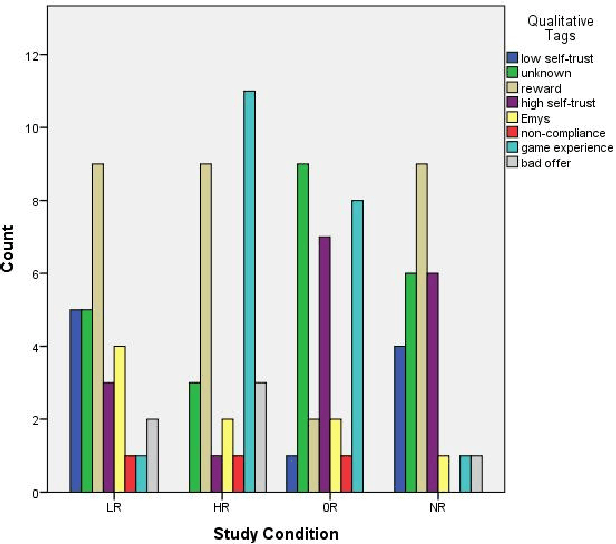

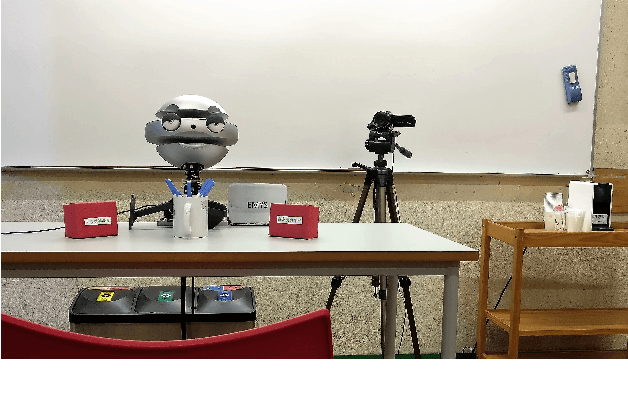

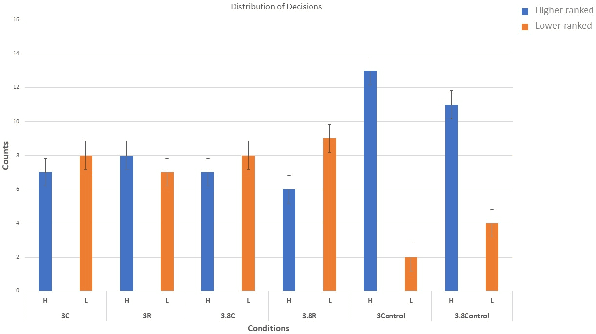

Abstract: Can social power endow social robots with the capacity to persuade? This paper represents our recent endeavor to design persuasive social robots. We designed and ran three user studies to investigate the effect of different bases of social power (inspired by French and Raven's theory) on people's compliance with the requests of social robots. The results show that robotic persuaders that exert social power (specifically from expert, reward, and coercion bases) demonstrate increased ability to influence humans. The first study provides a positive answer and shows that, under the same circumstances, people with different personalities prefer robots using a specific social power base. In addition, social rewards can be useful in persuading individuals. The second study suggests that by employing social power, social robots are capable of persuading people objectively to select a less desirable choice among others. Finally, the third study shows that the effect of power on persuasion does not decay over time and might strengthen under specific circumstances. Moreover, exerting stronger social power does not necessarily lead to higher persuasion. Overall, we argue that the results of these studies are relevant for designing human-robot interaction scenarios, especially those aiming at behavioral change.

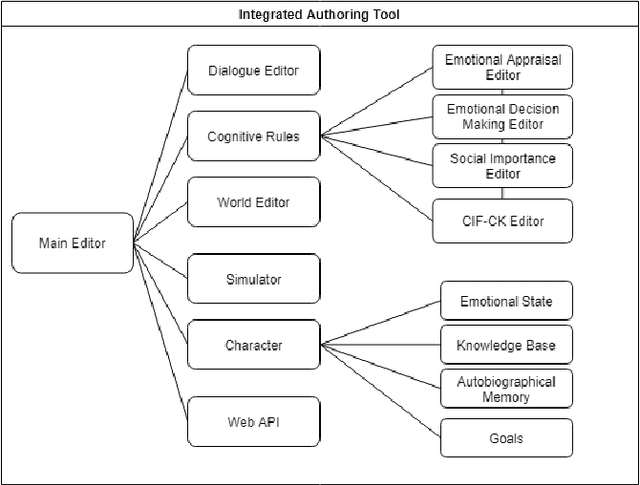

Towards Explainable Social Agent Authoring tools: A case study on FAtiMA-Toolkit

Jun 07, 2022

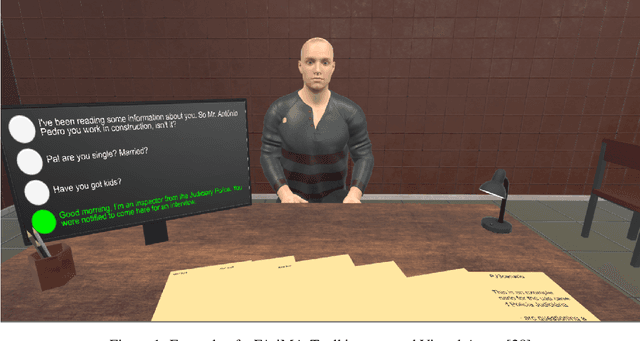

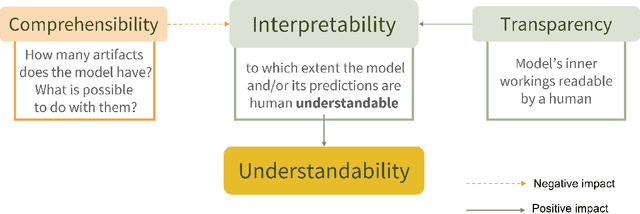

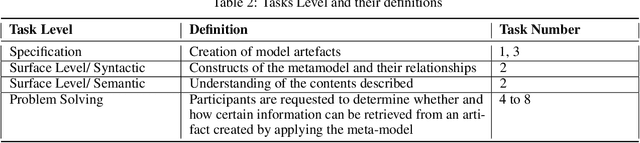

Abstract: The deployment of Socially Intelligent Agents (SIAs) in learning environments has proven to have several advantages in different areas of application. Social Agent Authoring Tools allow scenario designers to create tailored experiences with high control over SIAs' behaviour; however, this comes at a cost, as the complexity of the scenarios and their authoring can become overwhelming. In this paper we introduce the concept of Explainable Social Agent Authoring Tools with the goal of analysing whether authoring tools for social agents are understandable and interpretable. To this end, we examine whether an authoring tool, FAtiMA-Toolkit, is understandable and its authoring steps interpretable from the point of view of the author. We conducted two user studies to quantitatively assess the Interpretability, Comprehensibility and Transparency of FAtiMA-Toolkit from the perspective of a scenario designer. One key finding is that FAtiMA-Toolkit's conceptual model is, in general, understandable; however, the emotion-based concepts were not as easily understood and used by the authors. Although there are some positive aspects regarding the explainability of FAtiMA-Toolkit, there is still progress to be made to achieve a fully explainable social agent authoring tool. We provide a set of key concepts and possible solutions that can guide developers to build such tools.

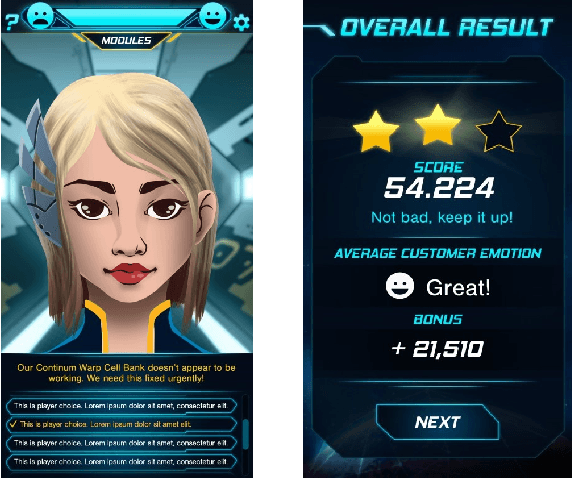

Agents for Automated User Experience Testing

Apr 13, 2021

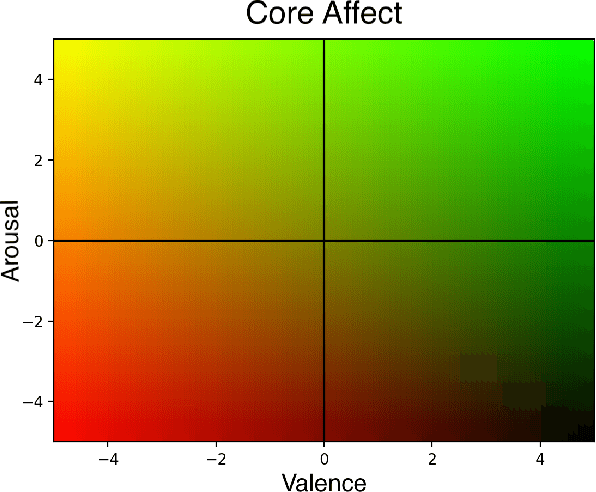

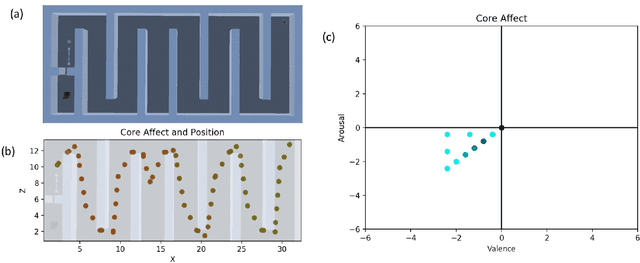

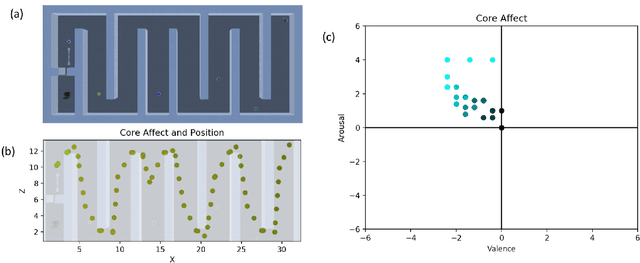

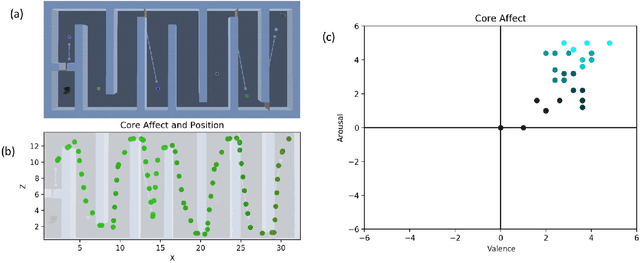

Abstract: The automation of functional testing in software has allowed developers to continuously check for negative impacts on functionality throughout the iterative phases of development. This is not the case for User eXperience (UX), which has hitherto relied almost exclusively on testing with real users. User testing is a slow endeavour that can become a bottleneck in the development of interactive systems. To address this problem, we propose an agent-based approach for automatic UX testing. We develop agents with basic problem-solving skills and a core affect model, allowing us to model an artificial affective state as they traverse different levels of a game. Although this research is still at an early stage, we believe the results presented here make a strong case for the use of intelligent agents endowed with affective computing models for automating UX testing.

FAtiMA Toolkit -- Toward an effective and accessible tool for the development of intelligent virtual agents and social robots

Mar 04, 2021

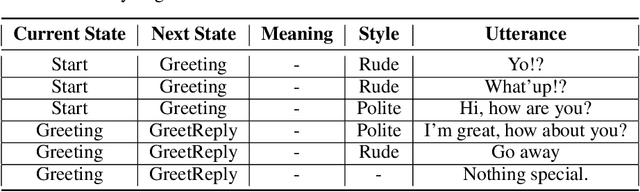

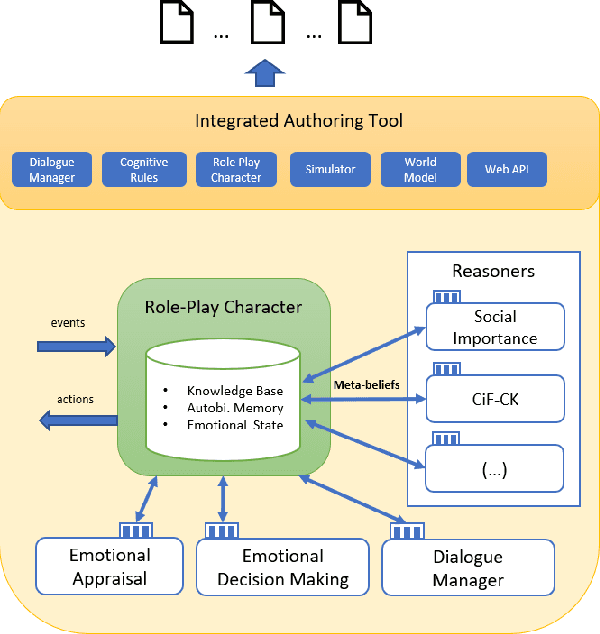

Abstract: More than a decade has passed since the development of FearNot!, an application designed to help children deal with bullying through role-playing with virtual characters. It was also the application that led to the creation of FAtiMA, an affective agent architecture for creating autonomous characters that can evoke empathic responses. In this paper, we describe FAtiMA Toolkit, a collection of open-source tools designed to help researchers, game developers and roboticists incorporate a computational model of emotion and decision-making in their work. The toolkit was developed with the goal of making FAtiMA more accessible, easier to incorporate into different projects and more flexible in its capabilities for human-agent interaction, based upon the experience gathered over the years across different virtual environments and human-robot interaction scenarios. As a result, this work makes several contributions to the field of Agent-Based Architectures. More precisely, FAtiMA Toolkit's library-based design allows developers to easily integrate it with other frameworks, its meta-cognitive model affords different internal reasoners and affective components, and its explicit dialogue structure gives control to the author even within highly complex scenarios. To demonstrate the use of FAtiMA Toolkit, several use cases where the toolkit was successfully applied are described and discussed.

A Game AI Competition to foster Collaborative AI research and development

Oct 17, 2020

Abstract: Game AI competitions are important to foster research and development on Game AI and AI in general. These competitions supply different challenging problems that can be translated into other contexts, virtual or real. They provide frameworks and tools to facilitate research on their core topics and provide means for comparing and sharing results. A competition is also a way to motivate new researchers to study these challenges. In this document, we present the Geometry Friends Game AI Competition. Geometry Friends is a two-player cooperative physics-based puzzle platformer computer game. The concept of the game is simple, though solving it has proven to be difficult. While the main and apparent focus of the game is cooperation, it also relies on other AI-related problems such as planning, plan execution, and motion control, all connected to situational awareness. All of these must be solved in real time. In this paper, we discuss the competition and the challenges it brings, and present an overview of the current solutions.

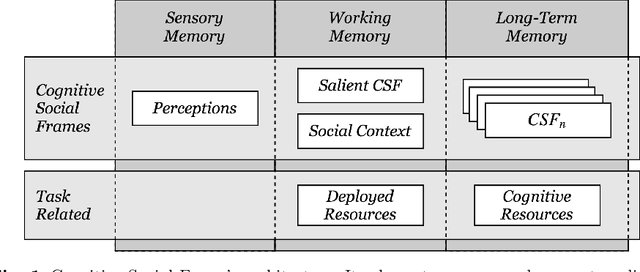

Towards Social Identity in Socio-Cognitive Agents

Jan 20, 2020

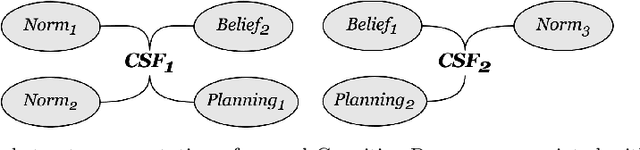

Abstract: Current architectures for social agents are designed around some specific units of social behaviour that address particular challenges. Although their performance might be adequate for controlled environments, deploying these agents in the wild is difficult. Moreover, the increasing demand for autonomous agents capable of living alongside humans calls for the design of more robust social agents that can cope with diverse social situations. We believe that to design such agents, their sociality and cognition should be conceived as one. This includes creating mechanisms for constructing social reality as an interpretation of the physical world with social meanings, and for selectively deploying cognitive resources adequate to the situation. We identify several design principles that should be considered while designing agent architectures for socio-cognitive systems. Taking these remarks into account, we propose a socio-cognitive agent model based on the concept of Cognitive Social Frames, which allow the adaptation of an agent's cognition based on its interpretation of its surroundings, its Social Context. Our approach supports an agent's reasoning about other social actors and its relationship with them. Cognitive Social Frames can be built around social groups, and form the basis for social group dynamics mechanisms and the construct of Social Identity.
