Rui Che

DDLNet: Boosting Remote Sensing Change Detection with Dual-Domain Learning

Jun 19, 2024

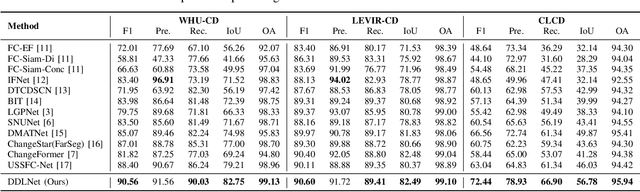

Abstract: Remote sensing change detection (RSCD) aims to identify changes of interest in a region by analyzing multi-temporal remote sensing images, and is valuable for monitoring local development. Existing RSCD methods focus on contextual modeling in the spatial domain to enhance the changes of interest. Although they achieve satisfactory performance, their lack of frequency-domain knowledge limits further improvement. In this paper, we propose DDLNet, an RSCD network based on dual-domain learning (i.e., the frequency and spatial domains). In particular, we design a Frequency-domain Enhancement Module (FEM) that uses the Discrete Cosine Transform (DCT) to capture frequency components from the input bi-temporal images and thus enhance the changes of interest. In addition, we devise a Spatial-domain Recovery Module (SRM) that fuses spatiotemporal features to reconstruct the spatial details of change representations. Extensive experiments on three benchmark RSCD datasets demonstrate that the proposed method achieves state-of-the-art performance and a better accuracy-efficiency trade-off. Our code is publicly available at https://github.com/xwmaxwma/rschange.
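
To make the frequency-domain idea concrete, the following is a minimal sketch of how a 2D DCT can isolate high-frequency content in a pair of bi-temporal images. It is not the FEM from DDLNet: the function names (`dct_matrix`, `dct2`, `idct2`, `high_frequency_part`), the `cutoff` parameter, and the toy images are all hypothetical choices made for illustration, and the abstract does not specify how FEM selects or weights frequency components.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of size n x n (rows are frequencies)."""
    k = np.arange(n)[:, None]  # frequency index
    i = np.arange(n)[None, :]  # sample index
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)  # DC row uses a different scale factor
    return m

def dct2(x):
    """2D DCT-II of a single-channel image, applied along rows and columns."""
    return dct_matrix(x.shape[0]) @ x @ dct_matrix(x.shape[1]).T

def idct2(c):
    """Inverse of dct2 (the basis is orthonormal, so the inverse is the transpose)."""
    return dct_matrix(c.shape[0]).T @ c @ dct_matrix(c.shape[1])

def high_frequency_part(image, cutoff):
    """Zero out coefficients with u + v < cutoff, then transform back.

    The `cutoff` rule is an assumption for this sketch, not DDLNet's rule.
    """
    coeffs = dct2(image)
    u, v = np.indices(coeffs.shape)
    coeffs[(u + v) < cutoff] = 0.0
    return idct2(coeffs)

# Toy bi-temporal pair: the second image gains a small bright patch.
rng = np.random.default_rng(0)
t1 = rng.random((16, 16))
t2 = t1.copy()
t2[4:8, 4:8] += 1.0

# Crude change cue: difference of the high-frequency parts of both images.
change_cue = np.abs(high_frequency_part(t2, 4) - high_frequency_part(t1, 4))
```

A learned module would replace the fixed cutoff with trainable weighting of frequency components; the sketch only shows the transform, filter, and inverse-transform pattern.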

SACANet: scene-aware class attention network for semantic segmentation of remote sensing images

Apr 22, 2023

Abstract: The spatial attention mechanism has been widely used in semantic segmentation of remote sensing images because it can model long-range dependencies. Many methods that adopt spatial attention aggregate contextual information using direct relationships between pixels within an image, while ignoring the scene awareness of pixels (i.e., awareness of the global context of the scene in which the pixels are located and of their relative positions). Observing that scene awareness benefits context modeling through the spatial correlations of ground objects, we design a scene-aware attention module based on a refined spatial attention mechanism that embeds scene awareness. In addition, we present a local-global class attention mechanism to address the problem that general attention mechanisms introduce excessive background noise while hardly accounting for the large intra-class variance in remote sensing images. In this paper, we integrate scene-aware and class attention to propose a scene-aware class attention network (SACANet) for semantic segmentation of remote sensing images. Experimental results on three datasets show that SACANet outperforms other state-of-the-art methods and validate its effectiveness. Code is available at https://github.com/xwmaxwma/rssegmentation.
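
For reference, the plain pixel-to-pixel spatial attention that the abstract takes as its starting point can be sketched as follows. This is the generic baseline, not SACANet's scene-aware or class attention: the function name `spatial_self_attention` is hypothetical, and the sketch omits the learned query, key, and value projections that real networks use.

```python
import numpy as np

def spatial_self_attention(features):
    """Plain spatial self-attention over an (H, W, C) feature map.

    Every pixel attends to every other pixel using only direct
    pixel-to-pixel similarity, with no scene-level context.
    Returns the attended features and the (H*W, H*W) weight matrix.
    """
    h, w, c = features.shape
    x = features.reshape(h * w, c)           # flatten pixels into rows
    scores = x @ x.T / np.sqrt(c)            # scaled dot-product similarity
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # row-wise softmax
    attended = weights @ x                   # weighted sum over all pixels
    return attended.reshape(h, w, c), weights

# Usage on a random toy feature map.
feats = np.random.default_rng(0).random((4, 5, 3))
out, attn = spatial_self_attention(feats)
```

Because the weights depend only on feature similarity, two pixels with similar features attend to each other regardless of where they sit in the scene, which is the limitation the scene-aware module is described as addressing.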
