Rudraksh Kapil

ShadowSense: Unsupervised Domain Adaptation and Feature Fusion for Shadow-Agnostic Tree Crown Detection from RGB-Thermal Drone Imagery

Oct 24, 2023

Abstract:Accurate detection of individual tree crowns from remote sensing data poses a significant challenge due to the dense nature of forest canopy and the presence of diverse environmental variations, e.g., overlapping canopies, occlusions, and varying lighting conditions. Additionally, the lack of data for training robust models adds another limitation in effectively studying complex forest conditions. This paper presents a novel method for detecting shadowed tree crowns and provides a challenging dataset comprising roughly 50k paired RGB-thermal images to facilitate future research for illumination-invariant detection. The proposed method (ShadowSense) is entirely self-supervised, leveraging domain adversarial training without source domain annotations for feature extraction and foreground feature alignment for feature pyramid networks to adapt domain-invariant representations by focusing on visible foreground regions, respectively. It then fuses complementary information of both modalities to effectively improve upon the predictions of an RGB-trained detector and boost the overall accuracy. Extensive experiments demonstrate the superiority of the proposed method over both the baseline RGB-trained detector and state-of-the-art techniques that rely on unsupervised domain adaptation or early image fusion. Our code and data are available: https://github.com/rudrakshkapil/ShadowSense

Classification of Bark Beetle-Induced Forest Tree Mortality using Deep Learning

Jul 15, 2022

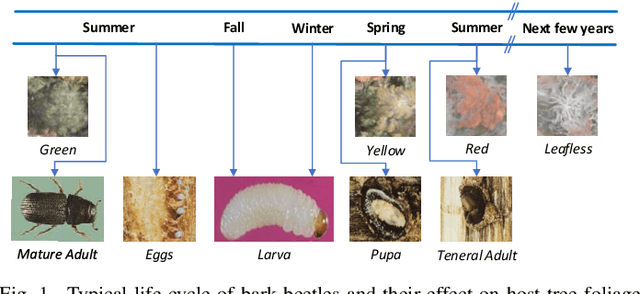

Abstract:Bark beetle outbreaks can dramatically impact forest ecosystems and services around the world. For the development of effective forest policies and management plans, the early detection of infested trees is essential. Despite the visual symptoms of bark beetle infestation, this task remains challenging, considering overlapping tree crowns and non-homogeneity in crown foliage discolouration. In this work, a deep learning based method is proposed to effectively classify different stages of bark beetle attacks at the individual tree level. The proposed method uses the RetinaNet architecture (exploiting a robust feature extraction backbone pre-trained for tree crown detection) to train a shallow subnetwork for classifying the different attack stages of images captured by unmanned aerial vehicles (UAVs). Moreover, various data augmentation strategies are examined to address the class imbalance problem, and the affine transformation is selected as the most effective one for this purpose. Experimental evaluations demonstrate the effectiveness of the proposed method by achieving an average accuracy of 98.95%, considerably outperforming the baseline method by approximately 10%.
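The abstract above mentions affine transformations as the augmentation chosen to counter class imbalance. The following is only a minimal sketch of that general idea, not the authors' pipeline: the class names, parameter ranges, and the helper names `random_affine`, `apply_affine`, and `oversampling_plan` are all hypothetical, and the transform is applied to points rather than to real image tensors.

```python
import math
import random
from collections import Counter


def random_affine(rng, max_rot_deg=15.0, max_scale=0.1, max_shift=0.1):
    """Sample a 2x3 affine matrix (rotation, uniform scale, translation).

    The ranges are illustrative assumptions, not values from the paper.
    """
    theta = math.radians(rng.uniform(-max_rot_deg, max_rot_deg))
    s = 1.0 + rng.uniform(-max_scale, max_scale)
    tx = rng.uniform(-max_shift, max_shift)
    ty = rng.uniform(-max_shift, max_shift)
    return [
        [s * math.cos(theta), -s * math.sin(theta), tx],
        [s * math.sin(theta), s * math.cos(theta), ty],
    ]


def apply_affine(matrix, point):
    """Map a single (x, y) point through a 2x3 affine matrix."""
    x, y = point
    (a, b, tx), (c, d, ty) = matrix
    return (a * x + b * y + tx, c * x + d * y + ty)


def oversampling_plan(labels):
    """Return how many augmented copies each class needs to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


# Hypothetical attack-stage labels; the real class names are not given in the abstract.
labels = ["healthy"] * 5 + ["red_attack"] * 2
plan = oversampling_plan(labels)  # number of extra samples to generate per class
```

In such a scheme, each extra sample for a minority class would be produced by drawing a fresh matrix from `random_affine` and warping an existing crop of that class with it.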

Deep Learning Framework to Detect Face Masks from Video Footage

Nov 04, 2020

Abstract:The use of facial masks in public spaces has become a social obligation in the wake of the COVID-19 global pandemic, and the identification of facial masks can be imperative to ensure public safety. Detection of facial masks in video footage is a challenging task, primarily because the masks themselves behave as occlusions to face detection algorithms due to the absence of facial landmarks in the masked regions. In this work, we propose an approach for detecting facial masks in videos using deep learning. The proposed framework capitalizes on the MTCNN face detection model to identify the faces and their corresponding facial landmarks present in the video frame. These facial images and cues are then processed by a neoteric classifier that utilises the MobileNetV2 architecture as an object detector for identifying masked regions. The proposed framework was tested on a dataset which is a collection of videos capturing the movement of people in public spaces while complying with COVID-19 safety protocols. The proposed methodology demonstrated its effectiveness in detecting facial masks by achieving high precision, recall, and accuracy.

* Contains 6 pages and 6 figures. Published in 12th CICN 2020

SD-Measure: A Social Distancing Detector

Nov 04, 2020

Abstract:The practice of social distancing is imperative to curbing the spread of contagious diseases and has been globally adopted as a non-pharmaceutical prevention measure during the COVID-19 pandemic. This work proposes a novel framework named SD-Measure for detecting social distancing from video footage. The proposed framework leverages the Mask R-CNN deep neural network to detect people in a video frame. To consistently identify whether social distancing is practiced during the interaction between people, a centroid tracking algorithm is utilised to track the subjects over the course of the footage. With the aid of authentic algorithms for approximating the distance of people from the camera and between themselves, we determine whether the social distancing guidelines are being adhered to. The framework attained a high accuracy value in conjunction with a low false alarm rate when tested on the Custom Video Footage Dataset (CVFD) and the Custom Personal Images Dataset (CPID), where it manifested its effectiveness in determining whether social distancing guidelines were practiced.

* Contains 6 pages & 7 figures. Published in 12th CICN 2020
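The SD-Measure abstract describes detecting people, tracking their centroids, and checking the distance between them. A rough sketch of just the pairwise check might look like the code below. It is an illustration under simplifying assumptions, not the authors' method: the detector is replaced by ready-made bounding boxes, distance is measured in image pixels with no estimate of distance from the camera, and `centroid`, `find_violations`, and the threshold value are hypothetical.

```python
import math
from itertools import combinations


def centroid(box):
    """Centre of an axis-aligned box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def find_violations(boxes, min_distance):
    """Return ID pairs whose centroids are closer than min_distance.

    `boxes` maps a tracked person ID to that person's bounding box in one frame.
    Distances are in pixels here; a real system would first convert to
    real-world units, which this sketch does not attempt.
    """
    centroids = {pid: centroid(b) for pid, b in boxes.items()}
    violations = []
    for (a, ca), (b, cb) in combinations(sorted(centroids.items()), 2):
        if math.dist(ca, cb) < min_distance:
            violations.append((a, b))
    return violations


# Hypothetical detections for a single frame.
frame_boxes = {
    1: (0, 0, 10, 10),
    2: (4, 0, 14, 10),
    3: (100, 100, 110, 110),
}
pairs = find_violations(frame_boxes, min_distance=10)
```

Running the check per frame and keeping person IDs stable across frames (the role of centroid tracking in the abstract) would let a violation be attributed to the same pair over time.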
