Devin Goodsman

Crown-CAM: Reliable Visual Explanations for Tree Crown Detection in Aerial Images

Nov 23, 2022

Abstract: Visual explanation of "black-box" models has enabled researchers and experts in artificial intelligence (AI) to exploit the localization abilities of such methods to a much greater extent. Although most of the developed visual explanation methods have been applied to single-object classification problems, they are not well explored in the detection task, where the challenges may go beyond simple coarse area-based discrimination. This is of particular importance when a detector faces several objects with different scales from various viewpoints, or when the objects of interest are absent. In this paper, we propose Crown-CAM to generate reliable visual explanations for the challenging and dynamic problem of tree crown detection in aerial images. It efficiently provides fine-grained localization of tree crowns and non-contextual background suppression for scenarios with highly dense forest trees in the presence of potential distractors, or for scenes without tree crowns. Additionally, two Intersection over Union (IoU)-based metrics are introduced that can effectively quantify both the accuracy and inaccuracy of generated visual explanations with respect to regions with or without tree crowns in the image. Empirical evaluations demonstrate that the proposed Crown-CAM outperforms the Score-CAM, Augmented Score-CAM, and Eigen-CAM methods by an average IoU margin of 8.7, 5.3, and 21.7 (and 3.3, 9.8, and 16.5), respectively, in improving the accuracy (and decreasing the inaccuracy) of visual explanations on the challenging NEON tree crown dataset.
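The abstract does not define its two IoU-based metrics, so the following is only a minimal sketch of the general idea under stated assumptions: a saliency map is thresholded into an explanation mask, which is then compared by plain IoU against the tree crown region (an accuracy-like score) and against the remaining background (an inaccuracy-like score). The helper names, the threshold of 0.5, and the toy 4x4 data are all hypothetical and are not taken from the paper.

```python
def boxes_to_mask(height, width, boxes):
    """Rasterize (x_min, y_min, x_max, y_max) boxes (max exclusive) into a boolean mask."""
    mask = [[False] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                mask[y][x] = True
    return mask


def binarize(saliency, threshold):
    """Turn a saliency map into a boolean explanation mask (threshold is an assumption)."""
    return [[value >= threshold for value in row] for row in saliency]


def invert(mask):
    """Complement of a mask, used here as the 'no tree crown' region."""
    return [[not value for value in row] for row in mask]


def iou(a, b):
    """Intersection over Union of two equally sized boolean masks."""
    pairs = [(p, q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b)]
    intersection = sum(1 for p, q in pairs if p and q)
    union = sum(1 for p, q in pairs if p or q)
    return intersection / union if union else 0.0


# Toy example: one 2x2 crown box in a 4x4 image (hypothetical data).
crown = boxes_to_mask(4, 4, [(0, 0, 2, 2)])
saliency = [
    [0.9, 0.8, 0.1, 0.0],
    [0.7, 0.2, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.6],
]
explanation = binarize(saliency, threshold=0.5)

accuracy_like = iou(explanation, crown)            # overlap with crown region
inaccuracy_like = iou(explanation, invert(crown))  # overlap with background
print(accuracy_like, inaccuracy_like)
```

In this toy case three of the four highlighted pixels fall inside the crown box and one falls on the background, so the first score is high and the second is low. A good explanation under this reading maximizes the former while minimizing the latter.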

Early Detection of Bark Beetle Attack Using Remote Sensing and Machine Learning: A Review

Oct 07, 2022

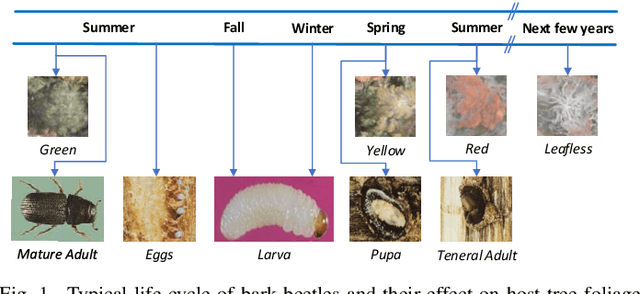

Abstract: Bark beetle outbreaks can have a devastating impact on forest ecosystem processes, biodiversity, forest structure and function, and economies. Accurate and timely detection of bark beetle infestations is crucial to mitigate further damage, develop proactive forest management activities, and minimize economic losses. Combining remote sensing (RS) data with machine learning (ML) or deep learning (DL) can provide a strong alternative to current approaches that rely on aerial and field surveys, which are impractical over vast geographical regions. This paper provides a comprehensive review of past and current advances in the early detection of bark beetle-induced tree mortality from three key perspectives: bark beetle & host interactions, RS, and ML/DL. We parse recent literature according to bark beetle species & attack phases, host trees, study regions, imagery platforms & sensors, spectral/spatial/temporal resolutions, spectral signatures, spectral vegetation indices (SVIs), ML approaches, learning schemes, task categories, models, algorithms, classes/clusters, features, and DL networks & architectures. This review focuses on the challenging problem of early detection, discussing current challenges and potential solutions. Our literature survey suggests that the performance of current ML methods is limited (less than 80%) and depends on various factors, including imagery sensors & resolutions, acquisition dates, and employed features & algorithms/networks. The more promising results, obtained from DL networks followed by the random forest (RF) algorithm, highlight the potential to detect subtle changes in the visible, thermal, and short-wave infrared (SWIR) spectral regions.

Classification of Bark Beetle-Induced Forest Tree Mortality using Deep Learning

Jul 15, 2022

Abstract: Bark beetle outbreaks can dramatically impact forest ecosystems and services around the world. For the development of effective forest policies and management plans, the early detection of infested trees is essential. Despite the visual symptoms of bark beetle infestation, this task remains challenging because of overlapping tree crowns and non-homogeneity in crown foliage discolouration. In this work, a deep learning-based method is proposed to effectively classify different stages of bark beetle attacks at the individual tree level. The proposed method uses the RetinaNet architecture (exploiting a robust feature extraction backbone pre-trained for tree crown detection) to train a shallow subnetwork for classifying the different attack stages in images captured by unmanned aerial vehicles (UAVs). Moreover, various data augmentation strategies are examined to address the class imbalance problem, and the affine transformation is found to be the most effective one for this purpose. Experimental evaluations demonstrate the effectiveness of the proposed method, which achieves an average accuracy of 98.95% and outperforms the baseline method by approximately 10%.

Learning-based Monocular 3D Reconstruction of Birds: A Contemporary Survey

Jul 10, 2022

Abstract: In nature, the collective behavior of animals, such as flying birds, is dominated by the interactions between individuals of the same species. However, the study of such behavior among bird species is a complex process that humans cannot perform using conventional visual observational techniques such as focal sampling in nature. For social animals such as birds, the mechanism of group formation can help ecologists understand the relationship between social cues and their visual characteristics over time (e.g., pose and shape). However, recovering the varying pose and shape of flying birds is a highly challenging problem. A widely adopted solution to tackle this bottleneck is to extract the pose and shape information from 2D image to 3D correspondence. Recent advances in 3D vision have led to a number of impressive works on 3D shape and pose estimation, each with different pros and cons. To the best of our knowledge, this work is the first attempt to provide an overview of recent advances in 3D bird reconstruction based on monocular vision, give both computer vision and biology researchers an overview of existing approaches, and compare their characteristics.
