Ruck Thawonmas

Multiverse of Greatness: Generating Story Branches with LLMs

Nov 22, 2024

Abstract:This paper presents Dynamic Context Prompting/Programming (DCP/P), a novel framework for interacting with LLMs to generate graph-based content with a dynamic context window history. While there is an existing study utilizing LLMs to generate a visual novel game, the previous study involved a manual process of output extraction and did not provide flexibility in generating a longer, coherent story. We evaluate DCP/P against our baseline, which does not provide context history to an LLM and only relies on the initial story data. Through objective evaluation, we show that simply providing the LLM with a summary leads to a subpar story compared to additionally providing the LLM with the proper context of the story. We also provide an extensive qualitative analysis and discussion. We qualitatively examine the quality of the objectively best-performing generated game from each approach. In addition, we examine biases in word choices and word sentiment of the generated content. We find a consistent observation with previous studies that LLMs are biased towards certain words, even with a different LLM family. Finally, we provide a comprehensive discussion on opportunities for future studies.
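
The abstract does not say how the context history is selected, so the following is only a minimal sketch of one plausible reading: each story node keeps a pointer to its parent, and the prompt for a new branch includes the story summary plus the most recent nodes on the path from the root. The names `StoryNode`, `path_context`, `build_prompt`, and the `max_nodes` limit are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class StoryNode:
    """One node of a branching story graph (hypothetical structure)."""
    node_id: str
    text: str
    parent: Optional[str] = None


def path_context(nodes: Dict[str, StoryNode], node_id: str, max_nodes: int) -> List[str]:
    """Return the texts of the last `max_nodes` nodes on the root-to-node path."""
    if max_nodes <= 0:
        return []
    path: List[str] = []
    current: Optional[str] = node_id
    while current is not None:
        node = nodes[current]
        path.append(node.text)
        current = node.parent
    path.reverse()  # oldest first, newest last
    return path[-max_nodes:]


def build_prompt(summary: str, context: List[str], instruction: str) -> str:
    """Assemble a prompt from the story summary, the selected context, and the task."""
    history = "\n".join(f"- {text}" for text in context)
    return f"Summary:\n{summary}\n\nStory so far:\n{history}\n\n{instruction}"


# Assumed example data: a root with two branches, one of them continued.
nodes = {
    "a": StoryNode("a", "A traveler arrives."),
    "b": StoryNode("b", "She enters the forest.", parent="a"),
    "c": StoryNode("c", "She finds a door.", parent="b"),
    "d": StoryNode("d", "She returns home.", parent="a"),
}
prompt = build_prompt("A short fantasy tale.", path_context(nodes, "c", 2),
                      "Continue the story.")
```

A baseline like the one described in the abstract would correspond to calling `build_prompt` with an empty context list, so the model sees only the initial story data.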

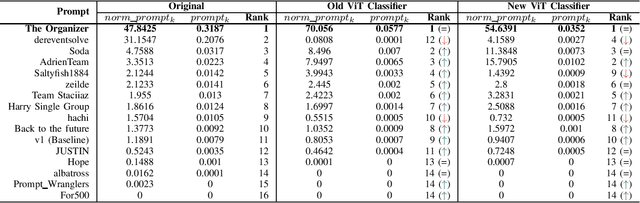

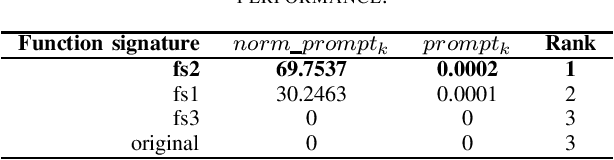

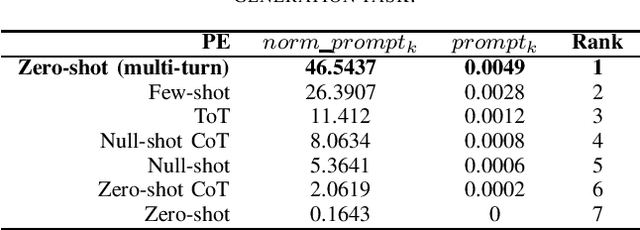

ChatGPT4PCG 2 Competition: Prompt Engineering for Science Birds Level Generation

Mar 05, 2024

Abstract:This paper presents the second ChatGPT4PCG competition at the 2024 IEEE Conference on Games. In this edition of the competition, we follow the first edition, but make several improvements and changes. We introduce a new evaluation metric along with allowing a more flexible format for participants' submissions and making several improvements to the evaluation pipeline. Continuing from the first edition, we aim to foster and explore the realm of prompt engineering (PE) for procedural content generation (PCG). While the first competition saw success, it was hindered by various limitations; we aim to mitigate these limitations in this edition. We introduce diversity as a new metric to discourage submissions aimed at producing repetitive structures. Furthermore, we allow submission of a Python program instead of a prompt text file for greater flexibility in implementing advanced PE approaches, which may require control flow, including conditions and iterations. We also make several improvements to the evaluation pipeline with a better classifier for similarity evaluation and better-performing function signatures. We thoroughly evaluate the effectiveness of the new metric and the improved classifier. Additionally, we perform an ablation study to select a function signature to instruct ChatGPT for level generation. Finally, we provide implementation examples of various PE techniques in Python and evaluate their preliminary performance. We hope this competition serves as a resource and platform for learning about PE and PCG in general.

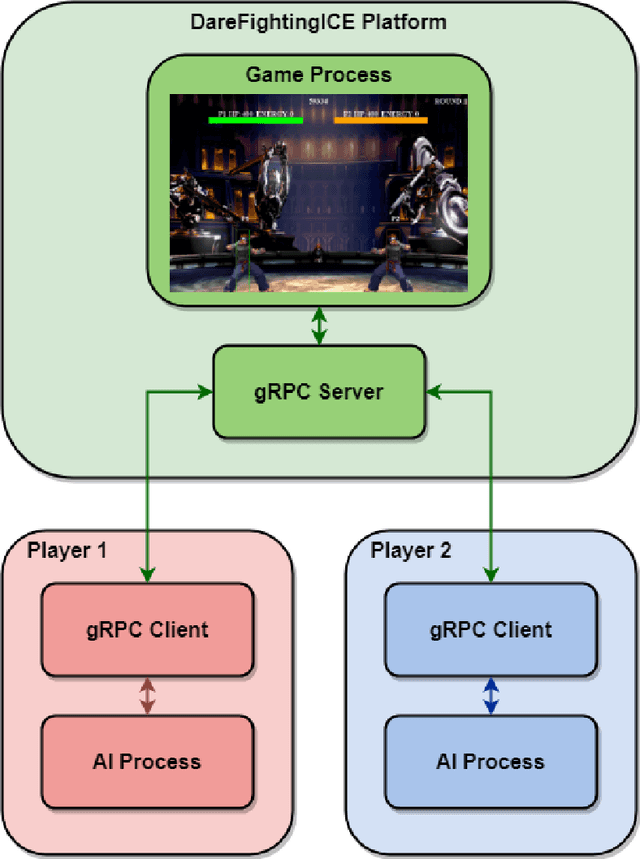

Enhanced DareFightingICE Competitions: Sound Design and AI Competitions

Mar 05, 2024

Abstract:This paper presents a new and improved DareFightingICE platform, a fighting game platform with a focus on visually impaired players (VIPs), in the Unity game engine. It also introduces the separation of the DareFightingICE Competition into two standalone competitions called DareFightingICE Sound Design Competition and DareFightingICE AI Competition--at the 2024 IEEE Conference on Games (CoG)--in which a new platform will be used. This new platform is an enhanced version of the old DareFightingICE platform, having a better audio system to convey 3D sound and a better way to send audio data to AI agents. With this enhancement and by utilizing Unity, the new DareFightingICE platform is more accessible in terms of adding new features for VIPs and future audio research. This paper also improves the method for evaluating sound designs in the Sound Design Competition, which will ensure better sound designs for VIPs as this competition continues to run at future CoG events. To the best of our knowledge, both of our competitions are the first of their kind, and the connection between the competitions to mutually improve the entries' quality with time makes these competitions an important part of representing an often overlooked segment within the broader gaming community, VIPs.

Fighting Game Adaptive Background Music for Improved Gameplay

Mar 05, 2024

Abstract:This paper presents our work to enhance the background music (BGM) in DareFightingICE by adding adaptive features. The adaptive BGM consists of three different categories of instruments playing the BGM of the winner sound design from the 2022 DareFightingICE Competition. The BGM adapts by changing the volume of each category of instruments. Each category is connected to a different element of the game. We then run experiments to evaluate the adaptive BGM by using a deep reinforcement learning AI agent that only uses audio as input (Blind DL AI). The results show that the performance of the Blind DL AI improves while playing with the adaptive BGM as compared to playing without the adaptive BGM.

Large Language Models are Null-Shot Learners

Jan 16, 2024

Abstract:This paper presents null-shot prompting. Null-shot prompting exploits hallucination in large language models (LLMs) by instructing LLMs to utilize information from the "Examples" section that never exists within the provided context to perform a task. While reducing hallucination is crucial and non-negligible for daily and critical uses of LLMs, we propose that in the current landscape in which these LLMs still hallucinate, it is possible, in fact, to exploit hallucination to increase performance in performing tasks compared to standard zero-shot prompting. Experiments with six LLMs show improvements in performance across the majority of eight datasets, including reading comprehension, arithmetic reasoning, and closed-book question answering. The observed inconsistency in increased relative performance across LLMs also potentially indicates a different degree of inherent hallucination in each model. These differences show that it is possible to utilize null-shot prompting as a way to detect degrees of hallucination in LLMs using existing benchmarking datasets. We also perform ablation studies, including experimenting with a modified version of null-shot prompting that incorporates ideas from zero-shot chain-of-thought prompting, which shows different trends of results.
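
As a rough illustration of the idea, the sketch below builds a zero-shot prompt and a null-shot prompt for the same task. The instruction wording in `NULL_SHOT_PHRASE` is an assumed paraphrase rather than a quotation from the paper, and the function names are hypothetical. The key point is that the prompt refers to an "Examples" section that is never actually included.

```python
# Assumed wording for illustration; the exact phrase used in the paper may differ.
NULL_SHOT_PHRASE = (
    'Look at examples in the "Examples" section and utilize examples '
    "and information from that section to perform the following task."
)


def build_zero_shot_prompt(task: str) -> str:
    """Plain zero-shot prompt: just the task."""
    return task.strip()


def build_null_shot_prompt(task: str, phrase: str = NULL_SHOT_PHRASE) -> str:
    """Null-shot prompt: point the model at an "Examples" section that is never provided."""
    return f"{phrase}\n\n{task.strip()}"


task = "What is 17 + 25?"
zero_shot = build_zero_shot_prompt(task)
null_shot = build_null_shot_prompt(task)
```

Both strings would then be sent to the same model so that the answers can be compared on a benchmark.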

Achieving Fairness in DareFightingICE Agents Evaluation Through a Delay Mechanism

Dec 26, 2023

Abstract:This paper proposes a delay mechanism to mitigate the impact of latency differences in the gRPC framework--a high-performance, open-source universal remote procedure call (RPC) framework--between different programming languages on the performance of agents in DareFightingICE, a fighting game research platform. The study finds that gRPC latency differences between Java and Python can significantly impact real-time decision-making. Without a delay mechanism, Java-based agents outperform Python-based ones due to lower gRPC latency on the Java platform. However, with the proposed delay mechanism, both Java-based and Python-based agents exhibit similar performance, leading to a fair comparison between agents developed using different programming languages. Thus, this work underscores the crucial importance of considering gRPC latency when developing and evaluating agents in DareFightingICE, and the insights gained could potentially extend to other gRPC-based applications.
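
The abstract does not describe how the delay mechanism is implemented. One simple way to equalize latency, shown here purely as an assumption, is to pad every agent call up to a fixed target duration so that a faster client gains no extra decision time. The names `padding_delay`, `call_with_uniform_latency`, and `target_s` are hypothetical.

```python
import time
from typing import Callable


def padding_delay(elapsed_s: float, target_s: float) -> float:
    """Extra wait needed so the total call time reaches target_s (never negative)."""
    return max(0.0, target_s - elapsed_s)


def call_with_uniform_latency(act: Callable[[], str], target_s: float) -> str:
    """Run the agent's act() and sleep for the remainder of the target duration."""
    start = time.perf_counter()
    action = act()
    time.sleep(padding_delay(time.perf_counter() - start, target_s))
    return action


# A call that took 4 ms is padded by roughly 6 ms to reach a 10 ms target;
# a call that already exceeded the target is not delayed further.
fast_padding = padding_delay(0.004, 0.010)
slow_padding = padding_delay(0.020, 0.010)
action = call_with_uniform_latency(lambda: "KICK", 0.0)
```

Under this scheme the target would need to be at least as large as the slower language's typical latency, otherwise the gap between the two clients remains.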

Adaptive Background Music for a Fighting Game: A Multi-Instrument Volume Modulation Approach

Mar 28, 2023

Abstract:This paper presents our work to enhance the background music (BGM) in DareFightingICE by adding an adaptive BGM. The adaptive BGM consists of five different instruments playing a classical music piece called "Air on G-String." The BGM adapts by changing the volume of the instruments. Each instrument is connected to a different element of the game. We then run experiments to evaluate the adaptive BGM by using a deep reinforcement learning AI that only uses audio as input (Blind DL AI). The results show that the performance of the Blind DL AI improves while playing with the adaptive BGM as compared to playing without the adaptive BGM.
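
To make the volume modulation concrete, here is a minimal sketch in which each instrument's volume follows one normalized game value. The abstract does not name the instruments or the game elements they are tied to, so the instrument names, the element names, and the linear mapping below are all assumptions made for illustration.

```python
from typing import Dict


def clamp01(value: float) -> float:
    """Limit a value to the range [0.0, 1.0]."""
    return max(0.0, min(1.0, value))


def instrument_volumes(game_state: Dict[str, float],
                       mapping: Dict[str, str]) -> Dict[str, float]:
    """Set each instrument's volume from the game element it is linked to."""
    return {instrument: clamp01(game_state[element])
            for instrument, element in mapping.items()}


# Hypothetical mapping and game state, with values already normalized.
mapping = {"violin": "player_hp", "cello": "opponent_hp", "piano": "distance"}
game_state = {"player_hp": 0.75, "opponent_hp": 1.2, "distance": -0.1}
volumes = instrument_volumes(game_state, mapping)
```

Clamping keeps out-of-range game values from producing invalid volume levels.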

ChatGPT4PCG Competition: Character-like Level Generation for Science Birds

Mar 28, 2023

Abstract:This paper presents the first ChatGPT4PCG Competition at the 2023 IEEE Conference on Games. The objective of this competition is for participants to create effective prompts for ChatGPT--enabling it to generate Science Birds levels with high stability and character-like qualities--fully using their creativity as well as prompt engineering skills. ChatGPT is a conversational agent developed by OpenAI. Science Birds is selected as the competition platform because designing an Angry Birds-like level is not a trivial task due to the in-game gravity; the playability of the levels is determined by their stability. To lower the entry barrier to the competition, we limit the task to the generation of capitalized English alphabetical characters. Here, the quality of the generated levels is determined by their stability and similarity to the given characters. A sample prompt is provided to participants for their reference. An experiment is conducted to determine the effectiveness of its modified versions on level stability and similarity by testing them on several characters. To the best of our knowledge, we believe that ChatGPT4PCG is the first competition of its kind and hope to inspire enthusiasm for prompt engineering in procedural content generation.

Improving Data Transfer Efficiency for AIs in the DareFightingICE using gRPC

Mar 11, 2023

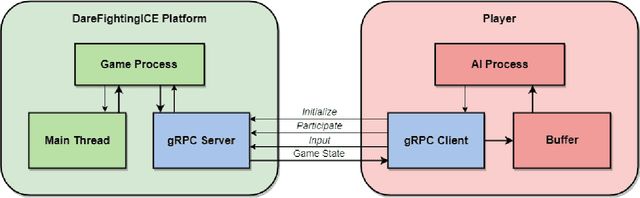

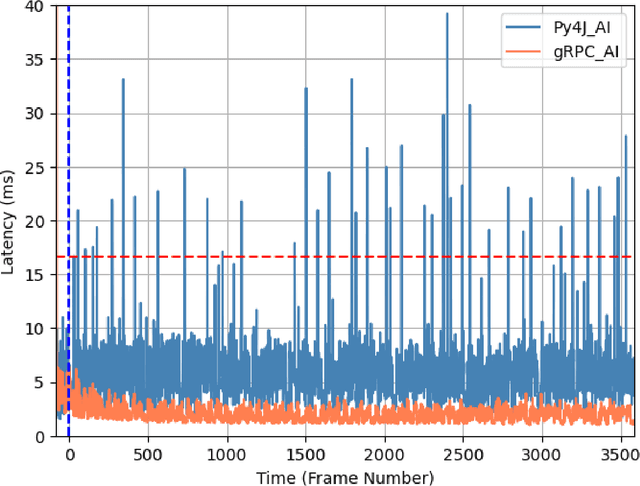

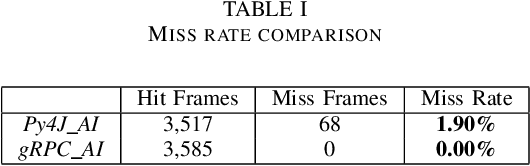

Abstract:This paper presents a new communication interface for the DareFightingICE platform, a Java-based fighting game focused on implementing AI for controlling a non-player character. The interface uses gRPC, an open-source remote procedure call framework, to improve the efficiency of data transfer between the game and the AI, reducing the time spent on receiving information from the game server. This is important because the main challenge of implementing AI in a fighting game is the need for the AI to select an action to perform within a short response time. The DareFightingICE platform has been integrated with Py4J, allowing developers to create AIs using Python. However, Py4J is less efficient at handling large amounts of data, resulting in excessive latency. In contrast, gRPC is well-suited for transmitting large amounts of data. To evaluate the effectiveness of the new communication interface, we conducted an experiment comparing the latency of gRPC and Py4J, using a rule-based AI that sends a kick command regardless of the information received from the game server. The experiment results showed not only a 65% reduction in latency but also improved stability and the elimination of missed frames compared to the current interface.

A Deep Reinforcement Learning Blind AI in DareFightingICE

May 16, 2022

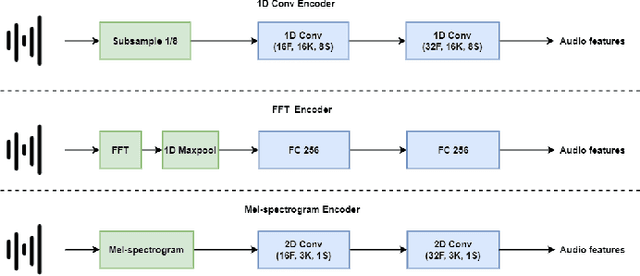

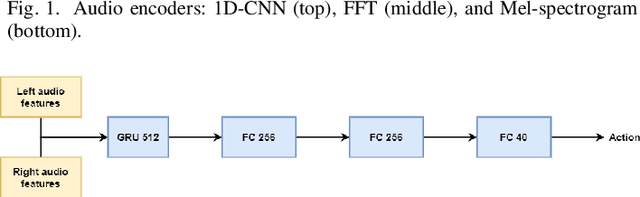

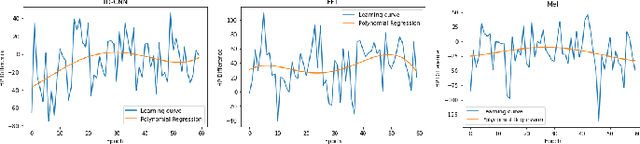

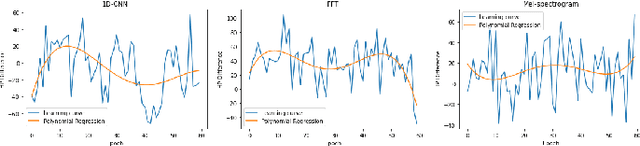

Abstract:This paper presents a deep reinforcement learning AI that uses sound as the input on the DareFightingICE platform at the DareFightingICE Competition in IEEE CoG 2022. In this work, an AI that only uses sound as the input is called blind AI. While state-of-the-art AIs rely mostly on visual or structured observations provided by their environments, learning to play games from only sound is still new and thus challenging. We propose different approaches to process audio data and use the Proximal Policy Optimization algorithm for our blind AI. We also propose to use our blind AI in evaluation of sound designs submitted to the competition and define three metrics for this task. The experimental results show the effectiveness of not only our blind AI but also the proposed three metrics.
