Ronald F. DeMara

An Efficient Real-Time Object Detection Framework on Resource-Constricted Hardware Devices via Software and Hardware Co-design

Aug 20, 2024

Abstract: The fast development of object detection techniques has attracted attention to developing efficient Deep Neural Networks (DNNs). However, the current state-of-the-art DNN models cannot provide a balanced solution among accuracy, speed, and model size. This paper proposes an efficient real-time object detection framework on resource-constrained hardware devices through hardware and software co-design. The Tensor Train (TT) decomposition is proposed for compressing the YOLOv5 model. By utilizing the unique characteristics given by the TT decomposition, we develop an efficient hardware accelerator based on FPGA devices. Experimental results show that the proposed method can significantly reduce the model size and the execution time.
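To make the compression idea concrete, the following is a minimal sketch of how a TT-format weight matrix trades parameters for rank. It assumes the common TT-matrix layout, in which core k has shape r[k-1] x (m[k] * n[k]) x r[k]. The mode sizes and ranks are illustrative assumptions, not values from the paper, and the function names are hypothetical.

```python
from math import prod


def dense_param_count(in_modes, out_modes):
    """Parameters of the uncompressed weight matrix (inputs x outputs)."""
    return prod(in_modes) * prod(out_modes)


def tt_param_count(in_modes, out_modes, ranks):
    """Parameters of a TT-matrix whose k-th core has shape
    ranks[k] x (in_modes[k] * out_modes[k]) x ranks[k + 1]."""
    d = len(in_modes)
    if len(out_modes) != d:
        raise ValueError("in_modes and out_modes must have the same length")
    if len(ranks) != d + 1 or ranks[0] != 1 or ranks[-1] != 1:
        raise ValueError("ranks must have length d + 1 and start/end with 1")
    return sum(
        ranks[k] * in_modes[k] * out_modes[k] * ranks[k + 1] for k in range(d)
    )


# Illustrative (assumed) factorization of a 256 x 512 weight matrix.
in_modes = (4, 8, 8)    # 4 * 8 * 8 = 256 inputs
out_modes = (8, 8, 8)   # 8 * 8 * 8 = 512 outputs
ranks = (1, 8, 8, 1)    # boundary ranks are always 1

dense = dense_param_count(in_modes, out_modes)
tt = tt_param_count(in_modes, out_modes, ranks)
print(f"dense={dense}, tt={tt}, compression={dense / tt:.1f}x")
```

Lower ranks shrink the cores further but generally cost accuracy, which is the tradeoff the abstract alludes to.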

Electrically-Tunable Stochasticity for Spin-based Neuromorphic Circuits: Self-Adjusting to Variation

May 02, 2020

Abstract: Energy-efficient methods are addressed for leveraging low energy barrier nanomagnetic devices within neuromorphic architectures. Using a Magnetoresistive Random Access Memory (MRAM) probabilistic device (p-bit) as the basis of neuronal structures in Deep Belief Networks (DBNs), the impact of reducing the Magnetic Tunnel Junction's (MTJ's) energy barrier is assessed and optimized for the resulting stochasticity present in the learning system. This can mitigate the process variation sensitivity of stochastic DBNs which encounter a sharp drop-off when energy barriers exceed near-zero kT. As evaluated for the MNIST dataset for energy barriers at near-zero kT to 2.0 kT in increments of 0.5 kT, it is shown that the stability factor changes by 5 orders of magnitude. The self-compensating circuit developed herein provides a compact, low-complexity approach to mitigating process variation impacts towards practical implementation and fabrication.

Modular Simulation Framework for Process Variation Analysis of MRAM-based Deep Belief Networks

Feb 03, 2020

Abstract: Magnetic Random-Access Memory (MRAM) based p-bit neuromorphic computing devices are garnering increasing interest as a means to compactly and efficiently realize machine learning operations in Restricted Boltzmann Machines (RBMs). When embedded within an RBM resistive crossbar array, the p-bit based neuron realizes a tunable sigmoidal activation function. Since the stochasticity of activation is dependent on the energy barrier of the MRAM device, it is essential to assess the impact of process variation on the voltage-dependent behavior of the sigmoid function. Other influential performance factors arise from the effect of varying energy barriers on power consumption, requiring a simulation environment to facilitate the multi-objective optimization of device and network parameters. Herein, transportable Python scripts are developed to analyze the output variation under changes in device dimensions on the accuracy of machine learning applications. Evaluation with RBM circuits using the MNIST dataset reveals impacts and limits for process variation of device fabrication in terms of the resulting energy vs. accuracy tradeoffs, and the resulting simulation framework is available via a Creative Commons license.
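The sigmoidal behavior of a p-bit neuron can be illustrated with an idealized behavioral model: the output is 1 with probability sigmoid(gain * v_in). This is a sketch only, not the device physics or the authors' scripts. The `gain` parameter is a hypothetical stand-in for a device-dependent slope that process variation might shift.

```python
import math
import random


def sigmoid(x):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def p_bit(v_in, rng, gain=1.0):
    """Idealized stochastic neuron: returns 1 with probability
    sigmoid(gain * v_in), otherwise 0. `gain` is an assumed parameter."""
    return 1 if rng.random() < sigmoid(gain * v_in) else 0


def firing_rate(v_in, samples, rng, gain=1.0):
    """Empirical fraction of 1-outputs over `samples` independent trials."""
    return sum(p_bit(v_in, rng, gain) for _ in range(samples)) / samples


if __name__ == "__main__":
    rng = random.Random(0)  # seeded for repeatability
    for gain in (0.5, 1.0, 2.0):  # assumed spread in device slope
        rates = [firing_rate(v, 5000, rng, gain) for v in (-2.0, 0.0, 2.0)]
        print(gain, [round(r, 3) for r in rates])
```

Sweeping `gain` shows how a change in slope flattens or sharpens the measured activation curve, which is the kind of output variation such a simulation framework would quantify.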

SNRA: A Spintronic Neuromorphic Reconfigurable Array for In-Circuit Training and Evaluation of Deep Belief Networks

Jan 08, 2019

Abstract: In this paper, a spintronic neuromorphic reconfigurable array (SNRA) is developed to fuse together power-efficient probabilistic and in-field programmable deterministic computing during both training and evaluation phases of restricted Boltzmann machines (RBMs). First, probabilistic spin logic devices are used to develop an RBM realization which is adapted to construct deep belief networks (DBNs) having one to three hidden layers of size 10 to 800 neurons each. Second, we design a hardware implementation for the contrastive divergence (CD) algorithm using a four-state finite state machine capable of unsupervised training in N+3 clocks where N denotes the number of neurons in each RBM. The functionality of our proposed CD hardware implementation is validated using ModelSim simulations. We synthesize the developed Verilog HDL implementation of our proposed test/train control circuitry for various DBN topologies where the maximal RBM dimensions yield resource utilization ranging from 51 to 2,421 lookup tables (LUTs). Next, we leverage spin Hall effect (SHE)-magnetic tunnel junction (MTJ) based non-volatile LUT circuits as an alternative for static random access memory (SRAM)-based LUTs storing the deterministic logic configuration to form a reconfigurable fabric. Finally, we compare the performance of our proposed SNRA with SRAM-based configurable fabrics focusing on the area and power consumption induced by the LUTs used to implement both CD and evaluation modes. The results obtained indicate more than 80% reduction in combined dynamic and static power dissipation, while achieving at least 50% reduction in device count.
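For readers unfamiliar with contrastive divergence, the sketch below shows one textbook CD-1 update for a small binary RBM in software. It is not the four-state finite state machine described in the abstract. All function names, the learning rate, and the network size are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample(probs, rng):
    """Draw one binary sample per probability."""
    return [1 if rng.random() < p else 0 for p in probs]


def hidden_probs(v, W, b_h):
    """P(h_j = 1 | v); W[i][j] couples visible unit i to hidden unit j."""
    return [
        sigmoid(b_h[j] + sum(v[i] * W[i][j] for i in range(len(v))))
        for j in range(len(b_h))
    ]


def visible_probs(h, W, b_v):
    """P(v_i = 1 | h)."""
    return [
        sigmoid(b_v[i] + sum(h[j] * W[i][j] for j in range(len(h))))
        for i in range(len(b_v))
    ]


def cd1_step(v0, W, b_v, b_h, lr, rng):
    """One CD-1 update, applied in place; returns the reconstruction."""
    ph0 = hidden_probs(v0, W, b_h)                    # positive phase
    h0 = sample(ph0, rng)
    v1 = sample(visible_probs(h0, W, b_v), rng)       # reconstruction
    ph1 = hidden_probs(v1, W, b_h)                    # negative phase
    for i in range(len(b_v)):
        for j in range(len(b_h)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        b_v[i] += lr * (v0[i] - v1[i])
    for j in range(len(b_h)):
        b_h[j] += lr * (ph0[j] - ph1[j])
    return v1


if __name__ == "__main__":
    rng = random.Random(0)
    W = [[0.0, 0.0] for _ in range(3)]  # 3 visible units, 2 hidden units
    b_v, b_h = [0.0] * 3, [0.0] * 2
    for _ in range(100):
        cd1_step([1, 0, 1], W, b_v, b_h, lr=0.1, rng=rng)
    print([round(p, 2) for p in visible_probs([1, 1], W, b_v)])
```

In a hardware realization the sampling steps would be carried out by the stochastic devices themselves, while the update logic is what a control state machine would sequence.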

Composable Probabilistic Inference Networks Using MRAM-based Stochastic Neurons

Nov 28, 2018

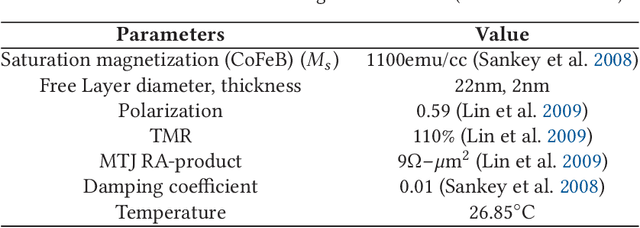

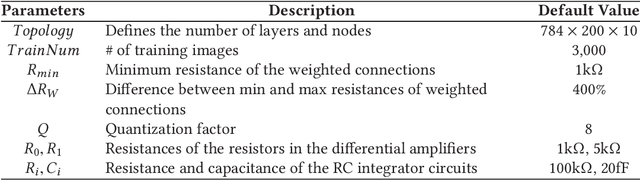

Abstract: Magnetoresistive random access memory (MRAM) technologies with thermally unstable nanomagnets are leveraged to develop an intrinsic stochastic neuron as a building block for restricted Boltzmann machines (RBMs) to form deep belief networks (DBNs). The embedded MRAM-based neuron is modeled using precise physics equations. The simulation results exhibit the desired sigmoidal relation between the input voltages and probability of the output state. A probabilistic inference network simulator (PIN-Sim) is developed to realize a circuit-level model of an RBM utilizing resistive crossbar arrays along with differential amplifiers to implement the positive and negative weight values. The PIN-Sim is composed of five main blocks to train a DBN, evaluate its accuracy, and measure its power consumption. The MNIST dataset is leveraged to investigate the energy and accuracy tradeoffs of seven distinct network topologies in SPICE using the 14 nm HP-FinFET technology library with the nominal voltage of 0.8 V, in which an MRAM-based neuron is used as the activation function. The software and hardware level simulations indicate that a $784\times200\times10$ topology can achieve less than 5% error rates with $\sim 400$ pJ energy consumption. The error rates can be reduced to 2.5% by using a $784\times500\times500\times500\times10$ DBN at the cost of $\sim10\times$ higher energy consumption and significant area overhead. Finally, the effects of specific hardware-level parameters on power dissipation and accuracy tradeoffs are identified via the developed PIN-Sim framework.
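One common way to realize signed weights on a resistive crossbar is a differential pair of conductances per weight, with the column output taken as the difference of two currents. The sketch below illustrates that idea under assumed values. The linear mapping, the conductance range, and all names are hypothetical and are not taken from PIN-Sim.

```python
def weight_to_conductances(w, w_max, g_min, g_max):
    """Map a signed weight to a (G_plus, G_minus) pair using a linear,
    assumed mapping: the magnitude goes on one device, the other stays
    at g_min."""
    if abs(w) > w_max:
        raise ValueError("weight magnitude exceeds w_max")
    delta = abs(w) / w_max * (g_max - g_min)
    return (g_min + delta, g_min) if w >= 0 else (g_min, g_min + delta)


def differential_column_current(voltages, weights, w_max, g_min, g_max):
    """Current difference I_plus - I_minus for one crossbar column pair,
    which is proportional to the weighted sum of the input voltages."""
    i_plus = 0.0
    i_minus = 0.0
    for v, w in zip(voltages, weights):
        g_p, g_m = weight_to_conductances(w, w_max, g_min, g_max)
        i_plus += v * g_p    # Ohm's law per device, summed on the column
        i_minus += v * g_m
    return i_plus - i_minus


if __name__ == "__main__":
    volts = [0.8, 0.0, 0.8]        # input voltages in volts (assumed)
    weights = [0.5, -1.0, -0.25]   # signed weights (assumed)
    i_diff = differential_column_current(volts, weights, 1.0, 1e-6, 1e-5)
    print(f"{i_diff:.3e} A")
```

Because the `g_min` offset appears on both columns, it cancels in the difference, leaving a current that scales with the signed dot product.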
