Romann Weber

Spectrogram Feature Losses for Music Source Separation

Jan 18, 2019

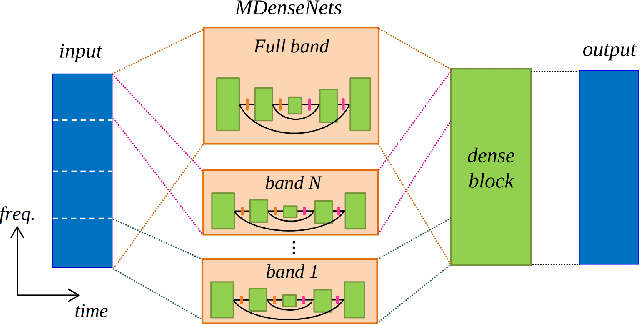

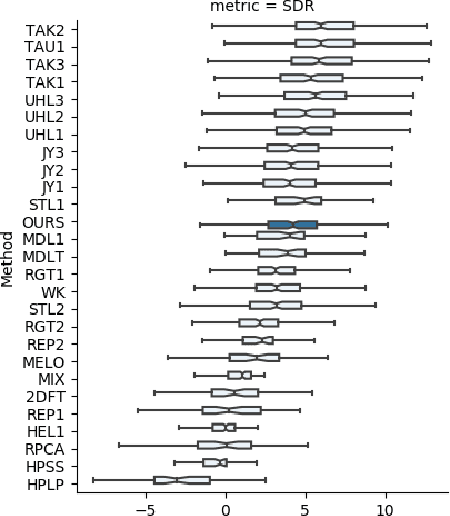

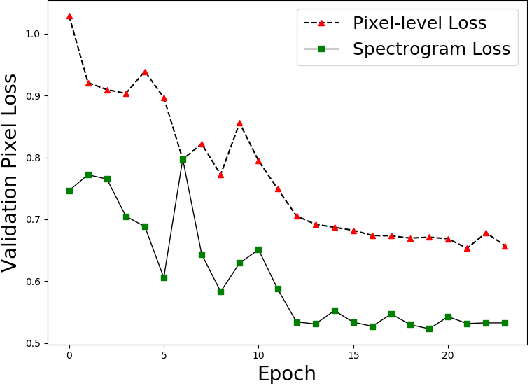

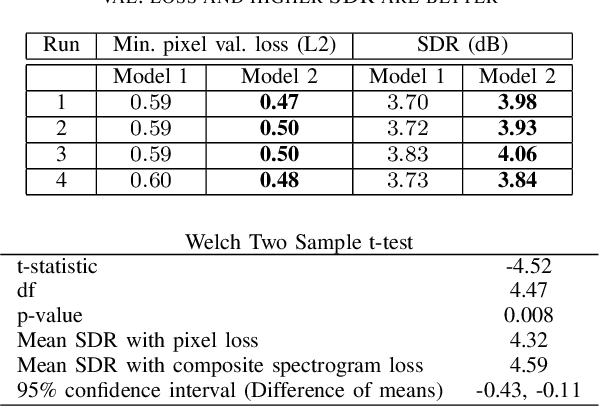

Abstract: In this paper, we study deep learning-based music source separation and explore an alternative to the standard spectrogram pixel-level L2 loss for model training. Our main contribution is demonstrating that adding a high-level feature loss term, extracted from the spectrograms using a VGG net, can improve separation quality compared with a pure pixel-level loss. We show this improvement in the context of the MMDenseNet, a state-of-the-art deep learning model for this task, for the extraction of drums and vocal sounds from songs in the musdb18 database, which covers a broad range of Western music genres. We believe that this finding can be generalized and applied to broader machine learning-based systems in the audio domain.
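
To illustrate the idea in the abstract, here is a minimal sketch of a loss that adds a feature-level term to a pixel-level L2 loss on spectrograms. It is not the paper's implementation: `toy_features` is a hypothetical stand-in for a pretrained VGG layer (fixed convolution kernels followed by a ReLU), and the `feature_weight` value, the function names, and the array shapes are all assumptions chosen for the example.

```python
import numpy as np


def pixel_l2_loss(pred, target):
    """Mean squared error between two spectrograms (pixel-level L2)."""
    return float(np.mean((pred - target) ** 2))


def toy_features(spec, kernels):
    """Hypothetical stand-in for a pretrained VGG layer (assumption).

    Applies a valid 2D cross-correlation with each fixed kernel, then a ReLU.
    spec: (H, W) array; kernels: (N, kh, kw) array.
    Returns feature maps of shape (N, H - kh + 1, W - kw + 1).
    """
    kh, kw = kernels.shape[1:]
    # windows has shape (H - kh + 1, W - kw + 1, kh, kw)
    windows = np.lib.stride_tricks.sliding_window_view(spec, (kh, kw))
    maps = np.einsum("ijkl,nkl->nij", windows, kernels)
    return np.maximum(maps, 0.0)


def combined_loss(pred, target, kernels, feature_weight=0.1):
    """Pixel-level L2 plus a weighted L2 between feature maps.

    feature_weight is an illustrative value, not one taken from the paper.
    """
    pixel = pixel_l2_loss(pred, target)
    feat_diff = toy_features(pred, kernels) - toy_features(target, kernels)
    feature = float(np.mean(feat_diff ** 2))
    return pixel + feature_weight * feature


# Usage with random data standing in for magnitude spectrograms.
rng = np.random.default_rng(0)
target = rng.random((16, 12))            # (frequency bins, time frames)
pred = target + 0.1                      # a deliberately imperfect estimate
kernels = rng.standard_normal((4, 3, 3)) # four fixed 3x3 filters
loss = combined_loss(pred, target, kernels)
```

In a real training setup the feature extractor would be a pretrained network kept frozen, and the loss would be written in an automatic-differentiation framework so that gradients flow back to the separation model.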

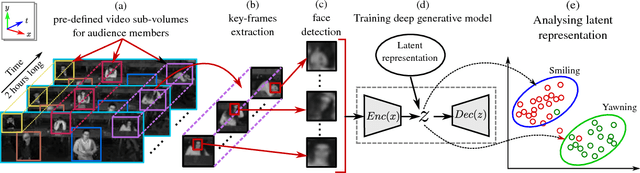

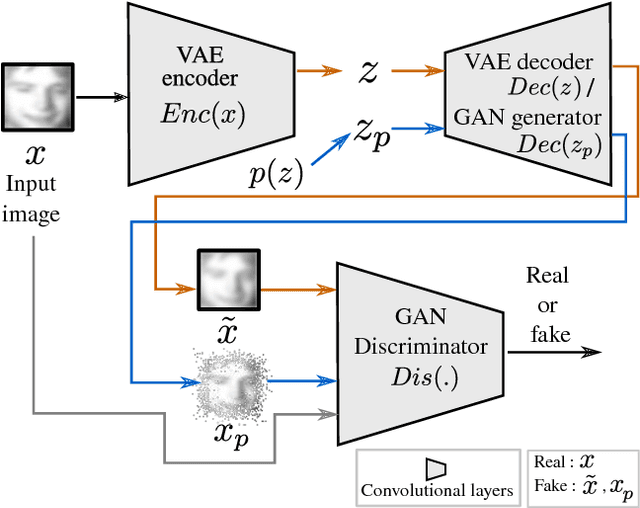

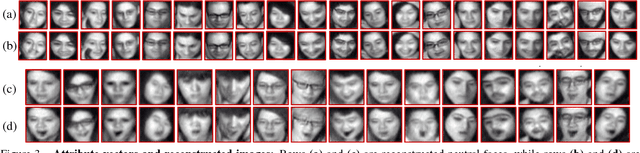

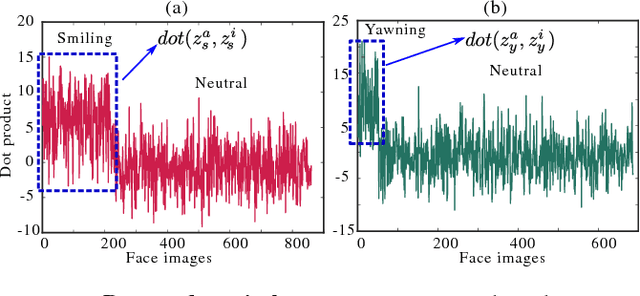

Unsupervised Deep Representations for Learning Audience Facial Behaviors

May 10, 2018

Abstract: In this paper, we present an unsupervised learning approach for analyzing facial behavior based on a deep generative model combined with a convolutional neural network (CNN). We jointly train a variational auto-encoder (VAE) and a generative adversarial network (GAN) to learn a powerful latent representation from footage of audiences viewing feature-length movies. We show that the learned latent representation successfully encodes meaningful signatures of behaviors related to audience engagement (smiling and laughing) and disengagement (yawning). Our results provide a proof of concept for a more general methodology for annotating hard-to-label multimedia data featuring sparse examples of signals of interest.
