Roberta L. Klatzky

A Promising Method for Touch-typing Keyboard Rendering

Dec 18, 2019

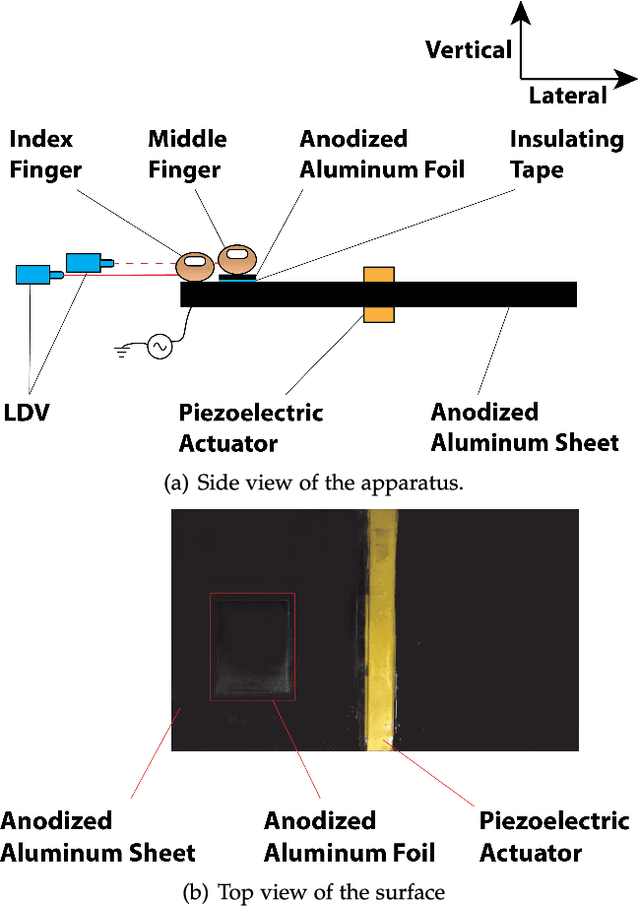

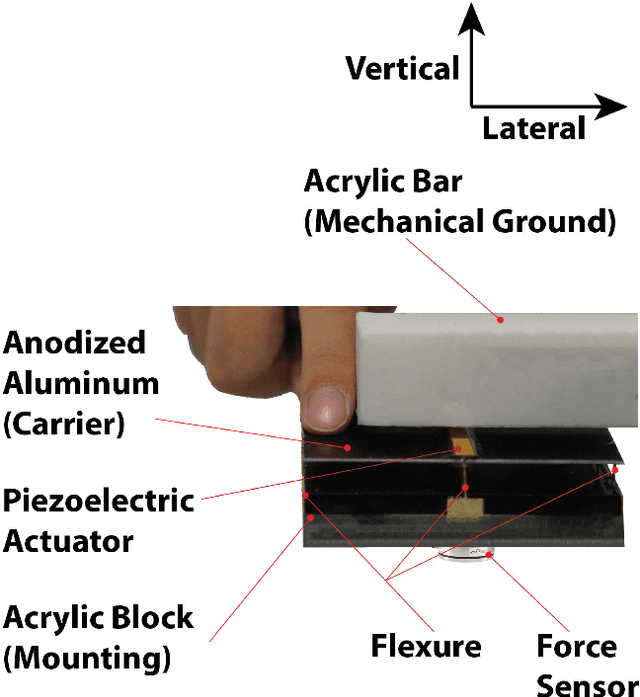

Abstract: We have developed a novel button-click rendering mechanism based on active lateral force feedback. The effect can be localized because electroadhesion between a finger and a surface can be localized. Psychophysical experiments were conducted to evaluate the quality of a rendered button click, which subjects judged to be acceptable. Both the experimental results and the subjects' comments confirm that this mechanism can generate a range of realistic button-click sensations that match subjects' different preferences. We can thus generate a button click on a flat surface without macroscopic motion of the surface in the lateral or normal direction, and we can localize this haptic effect to an individual finger. This mechanism is promising for touch-typing keyboard rendering.

Learning efficient haptic shape exploration with a rigid tactile sensor array

Feb 22, 2019

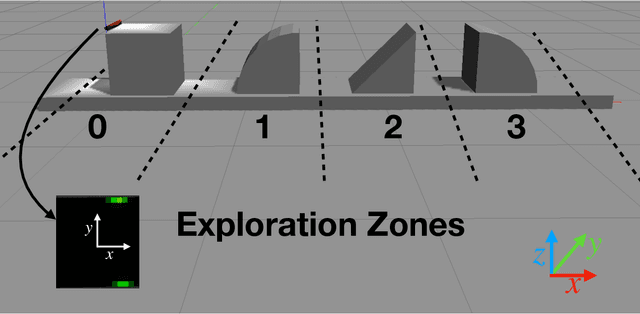

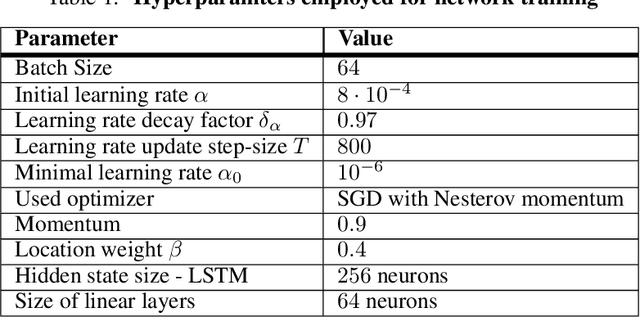

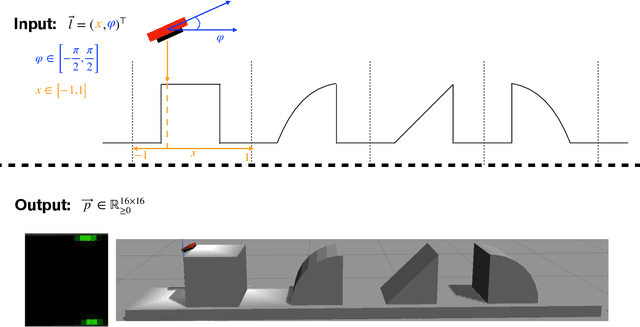

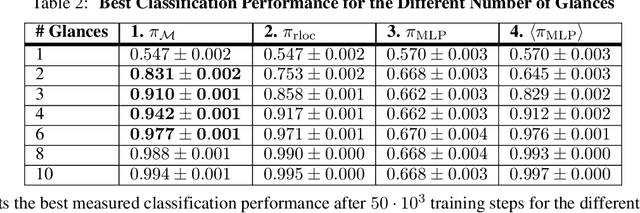

Abstract: Haptic exploration is a key skill for both robots and humans to discriminate and handle unknown objects or recognize familiar ones. Its active nature is impressively evident in humans, who from early on reliably acquire sophisticated sensory-motor capabilities for active exploratory touch and directed manual exploration that associates surfaces and object properties with their spatial locations. In stark contrast, in robotics the relative lack of good real-world interaction models, along with very restricted sensors and a scarcity of suitable training data for machine learning methods, has so far left haptic exploration a largely underdeveloped skill for robots. This is very unlike vision, where deep learning approaches and an abundance of available training data have triggered huge advances. In the present work, we connect recent advances in recurrent models of visual attention (RAM) with previous insights about the organisation of human haptic search behavior, exploratory procedures, and haptic glances to build a novel architecture that learns a generative model of haptic exploration in a simplified three-dimensional environment. The proposed algorithm simultaneously optimizes the main components of the perception-action loop: feature extraction, integration of features over time, and the control strategy, while continuously acquiring data online. The resulting method has been successfully tested with four different objects. It achieved results close to 100% while performing object contour exploration that has been optimized for its own sensor morphology.
