Robert J. Shahla

Estimating the Number of HTTP/3 Responses in QUIC Using Deep Learning

Oct 08, 2024

Abstract: QUIC, a new and increasingly used transport protocol, enhances TCP by providing better security, performance, and features like stream multiplexing. These features, however, also impose challenges for network middle-boxes that need to monitor and analyze web traffic. This paper proposes a novel solution for estimating the number of HTTP/3 responses in a given QUIC connection by an observer. This estimation reveals server behavior, client-server interactions, and data transmission efficiency, which is crucial for various applications such as designing a load balancing solution and detecting HTTP/3 flood attacks. The proposed scheme transforms QUIC connection traces into a sequence of images and trains machine learning (ML) models to predict the number of responses. Then, by aggregating images of a QUIC connection, an observer can estimate the total number of responses. As the problem is formulated as a discrete regression problem, we introduce a dedicated loss function. The proposed scheme is evaluated on a dataset of over seven million images, generated from $100,000$ traces collected from over $44,000$ websites over a four-month period, from various vantage points. The scheme achieves up to 97\% cumulative accuracy in both known and unknown web server settings and 92\% accuracy in estimating the total number of responses in unseen QUIC traces.

Distributed Monitoring for Data Distribution Shifts in Edge-ML Fraud Detection

Jan 10, 2024

Abstract: The digital era has seen a marked increase in financial fraud. Edge ML has emerged as a promising solution for fraud detection in smartphone payment services, enabling the deployment of ML models directly on edge devices. This approach enables more personalized, real-time fraud detection. However, a significant gap in current research is the lack of a robust system for monitoring data distribution shifts in these distributed edge ML applications. Our work bridges this gap by introducing a novel open-source framework designed for continuous monitoring of data distribution shifts on a network of edge devices. Our system includes an innovative calculation of the Kolmogorov-Smirnov (KS) test over a distributed network of edge devices, enabling efficient and accurate monitoring of shifts in user behavior. We comprehensively evaluate the proposed framework using both real-world and synthetic financial transaction datasets and demonstrate the framework's effectiveness.
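
For readers unfamiliar with the KS test mentioned in the abstract above, the following is a minimal sketch of the plain two-sample KS statistic, computed on a single machine. It is not the paper's distributed calculation, which the abstract does not describe; the function name `ks_statistic` and the sample values are assumptions made for illustration only.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between two empirical CDFs.

    `reference` and `current` are non-empty sequences of numbers, e.g.
    transaction amounts seen at training time and at monitoring time.
    (Hypothetical helper, not taken from the paper.)
    """
    ref = sorted(reference)
    cur = sorted(current)
    # The empirical CDFs only change at observed values, so it is enough
    # to compare them at every distinct sample point.
    points = sorted(set(ref) | set(cur))
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in points
    )


# Illustrative values only: a reference window and a shifted window.
baseline = [1, 2, 3, 4]
shifted = [3, 4, 5, 6]
drift_score = ks_statistic(baseline, shifted)
```

A statistic near 0 suggests the two samples follow similar distributions, while a value near 1 indicates almost no overlap. Turning the statistic into a decision requires a threshold or p-value, which is omitted here.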

Non-Adaptive Randomized Algorithm for Group Testing

Aug 09, 2017

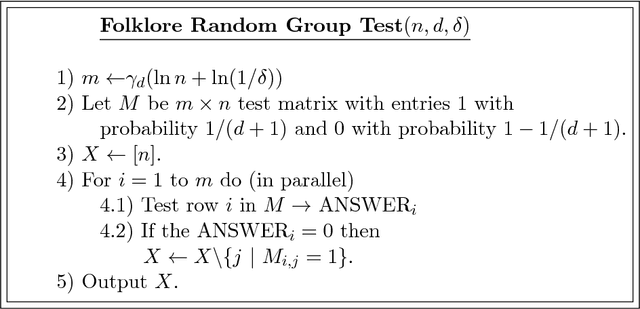

Abstract: We study the problem of group testing with a non-adaptive randomized algorithm in the random incidence design (RID) model, where each entry in the test is chosen randomly and independently from $\{0,1\}$ with a fixed probability $p$. The property that is sufficient and necessary for unique decoding is the separability of the tests, but unfortunately no linear-time algorithm is known for such tests. In order to achieve linear-time decodable tests, the algorithms in the literature use the disjunction property, which gives an almost optimal number of tests. We define a new property for the tests, which we call the semi-disjunction property. We show that there is a linear-time decoding for such tests and that, for $d\to \infty$, the number of tests converges to the number of tests with the separability property and is therefore optimal (in the RID model). Our analysis shows that, in the RID model, the number of tests in our algorithm is better than the one with the disjunction property even for small $d$.
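
To make the RID model concrete, here is a minimal sketch of a random incidence design together with the standard elimination decoder that relies on the disjunction property: every item that appears in a negative test is cleared, and the rest are reported. This is not the semi-disjunction decoder the abstract introduces, whose details are not given here; all function names and the parameter values below are assumptions chosen for illustration.

```python
import random


def rid_design(num_tests, num_items, p, rng):
    """Random incidence design: each entry is 1 independently with probability p."""
    return [
        [1 if rng.random() < p else 0 for _ in range(num_items)]
        for _ in range(num_tests)
    ]


def run_tests(design, defectives):
    """A test is positive (1) iff it contains at least one defective item."""
    return [int(any(row[j] for j in defectives)) for row in design]


def decode(design, outcomes):
    """Elimination decoding in one pass over the design matrix.

    Any item included in a negative test cannot be defective; every item
    that is never cleared this way is reported as defective.
    """
    num_items = len(design[0])
    cleared = set()
    for row, outcome in zip(design, outcomes):
        if outcome == 0:
            cleared.update(j for j in range(num_items) if row[j])
    return set(range(num_items)) - cleared


# Illustrative parameters only: 200 items, 2 defectives, 60 random tests.
rng = random.Random(0)
true_defectives = {3, 17}
design = rid_design(num_tests=60, num_items=200, p=0.3, rng=rng)
estimate = decode(design, run_tests(design, true_defectives))
```

This decoder never misses a true defective, because a defective item makes every test that contains it positive. It can report extra items when the number of tests is too small, which is why the number of tests required by each decoding property matters.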
