Rita Fioresi

Sheaf Neural Networks and biomedical applications

Jan 29, 2026

Abstract: The purpose of this paper is to elucidate the theory and mathematical modelling behind the sheaf neural network (SNN) algorithm, and then to show, in a concrete case study, how SNNs can effectively answer biomedical questions and outperform the most popular graph neural networks (GNNs), such as graph convolutional networks (GCNs), graph attention networks (GATs) and GraphSAGE.

Enhancing CNNs robustness to occlusions with bioinspired filters for border completion

Apr 24, 2025

Abstract: We exploit the mathematical modeling of the visual cortex mechanism for border completion to define custom filters for CNNs. We see a consistent improvement in performance, particularly in accuracy, when our modified LeNet-5 is tested with occluded MNIST images.

Cartan moving frames and the data manifolds

Sep 18, 2024

Abstract: The purpose of this paper is to employ the language of Cartan moving frames to study the geometry of the data manifolds and their Riemannian structure, via the data information metric and its curvature at data points. Using this framework, and through experiments, we explain the response of a neural network by pointing out the output classes that are easily reachable from a given input. This emphasizes how the proposed mathematical relationship between the output of the network and the geometry of its inputs can be exploited as an explainable artificial intelligence tool.

Model-centric Data Manifold: the Data Through the Eyes of the Model

Apr 26, 2021

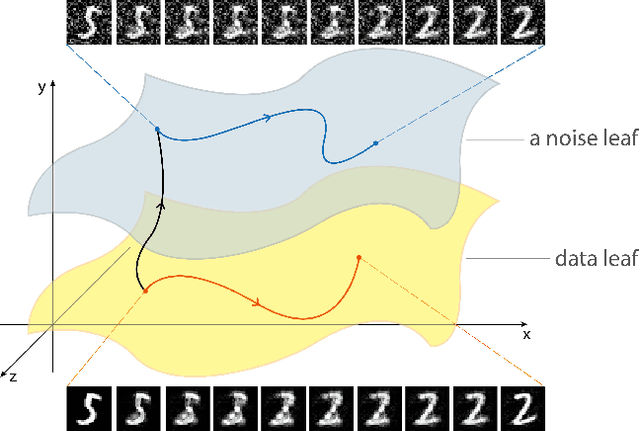

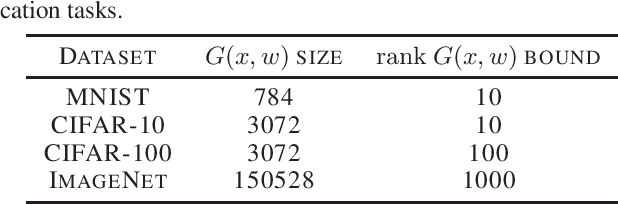

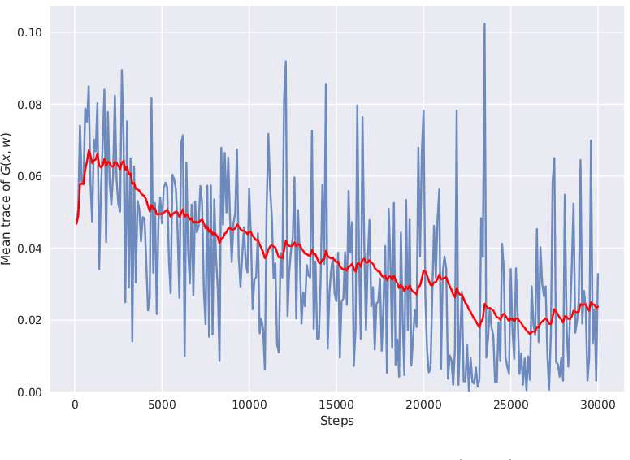

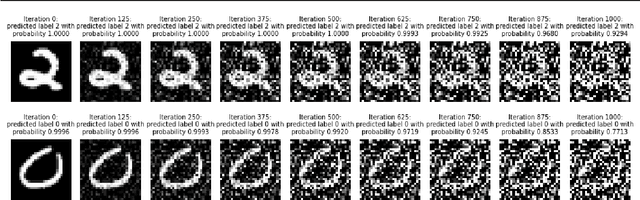

Abstract: We discover that deep ReLU neural network classifiers can see a low-dimensional Riemannian manifold structure on data. This structure comes from the local data matrix, a variation of the Fisher information matrix in which the role of the model parameters is taken by the data variables. We obtain a foliation of the data domain and show that the dataset on which the model is trained lies on a leaf, the data leaf, whose dimension is bounded by the number of classification labels. We validate our results with experiments on the MNIST dataset: paths on the data leaf connect valid images, while other leaves cover noisy images.
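
To make the dimension bound concrete, here is a minimal sketch in plain Python. It assumes the local data matrix takes the Fisher-like form G(x) = Σ_c p_c(x) ∇_x log p_c(x) ∇_x log p_c(x)^T, which is one reading of the abstract and not a formula quoted from the paper. The tiny one-hidden-layer ReLU network, its random weights, and the helper names (`local_data_matrix`, `rank`) are all hypothetical and chosen only for illustration.

```python
import math
import random


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * t for m, t in zip(row, v)) for row in M]


def softmax(z):
    """Numerically stable softmax."""
    top = max(z)
    e = [math.exp(t - top) for t in z]
    s = sum(e)
    return [t / s for t in e]


def local_data_matrix(W1, b1, W2, b2, x):
    """Assumed form: G(x) = sum_c p_c(x) * g_c g_c^T, g_c = grad_x log p_c(x)."""
    pre = [a + b for a, b in zip(matvec(W1, x), b1)]
    hidden = [max(0.0, t) for t in pre]
    mask = [1.0 if t > 0 else 0.0 for t in pre]  # ReLU derivative
    p = softmax([a + b for a, b in zip(matvec(W2, hidden), b2)])
    d, C, H = len(x), len(p), len(mask)
    # Jacobian of the logits with respect to the input x (C x d).
    J = [[sum(W2[c][h] * mask[h] * W1[h][i] for h in range(H))
          for i in range(d)] for c in range(C)]
    G = [[0.0] * d for _ in range(d)]
    for c in range(C):
        # grad_x log p_c = J^T (e_c - p)
        g = [sum(((1.0 if k == c else 0.0) - p[k]) * J[k][i] for k in range(C))
             for i in range(d)]
        for i in range(d):
            for j in range(d):
                G[i][j] += p[c] * g[i] * g[j]
    return G


def rank(M, tol=1e-9):
    """Numerical rank via Gaussian elimination with partial pivoting."""
    A = [row[:] for row in M]
    n_rows, n_cols = len(A), len(A[0])
    r = 0
    for col in range(n_cols):
        if r == n_rows:
            break
        pivot = max(range(r, n_rows), key=lambda i: abs(A[i][col]))
        if abs(A[pivot][col]) < tol:
            continue
        A[r], A[pivot] = A[pivot], A[r]
        for i in range(r + 1, n_rows):
            f = A[i][col] / A[r][col]
            for j in range(col, n_cols):
                A[i][j] -= f * A[r][j]
        r += 1
    return r


# Hypothetical toy setup: 6 input variables, 5 hidden units, 3 classes.
rng = random.Random(0)
d, H, C = 6, 5, 3
W1 = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(H)]
b1 = [rng.gauss(0, 1) for _ in range(H)]
W2 = [[rng.gauss(0, 1) for _ in range(H)] for _ in range(C)]
b2 = [rng.gauss(0, 1) for _ in range(C)]
x = [rng.gauss(0, 1) for _ in range(d)]

G = local_data_matrix(W1, b1, W2, b2, x)
print("rank of G(x):", rank(G), "with", C, "classes and", d, "input variables")
```

Under this assumed form, the gradients g_c satisfy Σ_c p_c g_c = 0, so they span at most C − 1 directions and the rank of G(x) stays below the number of classes regardless of the input dimension, which is consistent with the bound stated in the abstract.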
