Richard J. Duro

Autonomous Generation of Sub-goals for Lifelong Learning in Robots

Mar 24, 2025

Abstract: One of the challenges of open-ended learning in robots is the need to autonomously discover goals and learn skills to achieve them. However, in lifelong learning settings, it is always desirable to generate sub-goals with their associated skills, without relying on explicit reward, as stepping stones to a goal. This allows sub-goals and skills to be reused to facilitate achieving other goals. This work proposes a two-pronged approach to sub-goal generation to address this challenge: a top-down approach, where sub-goals are hierarchically derived from general goals using intrinsic motivations to discover them, and a bottom-up approach, where sub-goal chains emerge from making explicit the latent relationships between goals and perceptual classes that were previously learned in different domains. These methods help the robot to autonomously generate and chain sub-goals as a way to achieve more general goals. Additionally, they create more abstract representations of goals, helping to reduce sub-goal duplication and make the learning of skills more efficient. Implemented within an existing cognitive architecture for lifelong open-ended learning and tested with a real robot, our approach enhances the robot's ability to discover and achieve goals, generate sub-goals efficiently, generalize learned skills, and operate in dynamic and unknown environments without explicit intermediate rewards.

Purpose for Open-Ended Learning Robots: A Computational Taxonomy, Definition, and Operationalisation

Mar 04, 2024

Abstract: Autonomous open-ended learning (OEL) robots are able to cumulatively acquire new skills and knowledge through direct interaction with the environment, for example by relying on the guidance of intrinsic motivations and self-generated goals. OEL robots are highly relevant for applications, as they can use the autonomously acquired knowledge to accomplish tasks relevant to their human users. OEL robots, however, encounter an important limitation: their autonomous exploration may lead to the acquisition of knowledge that is not relevant to accomplishing the users' tasks. This work analyses a possible solution to this problem that pivots on the novel concept of 'purpose'. Purposes indicate what the designers and/or users want from the robot. The robot should use internal representations of purposes, called here 'desires', to focus its open-ended exploration on the acquisition of knowledge relevant to accomplishing them. This work contributes to developing a computational framework on purpose in two ways. First, it formalises a framework on purpose based on a three-level motivational hierarchy involving: (a) the purposes; (b) the desires, which are domain independent; (c) specific domain-dependent state-goals. Second, the work highlights key challenges raised by the framework, such as the 'purpose-desire alignment problem', the 'purpose-goal grounding problem', and the 'arbitration between desires'. Overall, the approach enables OEL robots to learn in an autonomous way but also to focus on acquiring goals and skills that meet the purposes of the designers and users.

Autonomous Open-Ended Learning of Tasks with Non-Stationary Interdependencies

May 16, 2022

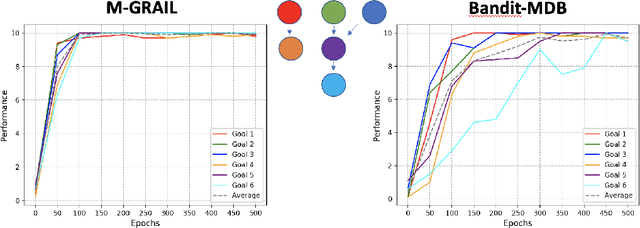

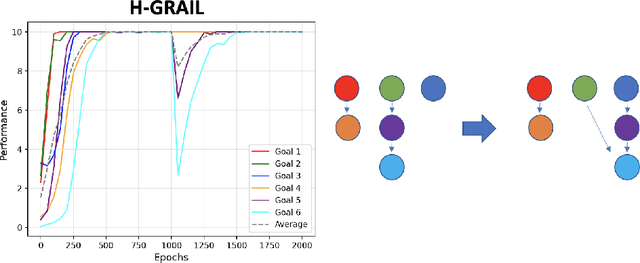

Abstract: Autonomous open-ended learning is a relevant approach in machine learning and robotics, allowing the design of artificial agents able to acquire goals and motor skills without the necessity of user-assigned tasks. A crucial issue for this approach is to develop strategies to ensure that agents can maximise their competence on as many tasks as possible in the shortest possible time. Intrinsic motivations have proven to generate a task-agnostic signal to properly allocate the training time amongst goals. While the majority of works in the field of intrinsically motivated open-ended learning focus on scenarios where goals are independent from each other, only a few of them have studied the autonomous acquisition of interdependent tasks, and even fewer have tackled scenarios where goals involve non-stationary interdependencies. Building on previous works, we tackle these crucial issues at the level of decision making (i.e., building strategies to properly select between goals), and we propose a hierarchical architecture that, by treating sub-task selection as a Markov Decision Process, is able to properly learn interdependent skills on the basis of intrinsically generated motivations. In particular, we first deepen the analysis of a previous system, showing the importance of incorporating information about the relationships between tasks at a higher level of the architecture (that of goal selection). Then we introduce H-GRAIL, a new system that extends the previous one by adding a new learning layer that stores the autonomously acquired sequences of tasks so that they can be modified if the interdependencies are non-stationary. All systems are tested in a real robotic scenario, with a Baxter robot performing multiple interdependent reaching tasks.
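The idea of an intrinsic, task-agnostic signal that allocates training time amongst goals can be illustrated with a minimal sketch. This is not H-GRAIL and it does not model the Markov Decision Process formulation or task interdependencies described in the abstract; it is a simple greedy competence-progress heuristic, and the class name, goal names, and `window` parameter are all hypothetical choices made for illustration.

```python
from collections import deque


class CompetenceProgressSelector:
    """Hypothetical sketch: pick the goal whose success rate is changing fastest."""

    def __init__(self, goals, window=10):
        # Keep the last 2 * window outcomes per goal (assumed window size).
        self.history = {g: deque(maxlen=2 * window) for g in goals}

    def record(self, goal, success):
        # Store each attempt outcome as 1.0 (success) or 0.0 (failure).
        self.history[goal].append(1.0 if success else 0.0)

    def progress(self, goal):
        # Intrinsic signal: absolute change in success rate between the
        # older half and the more recent half of the stored outcomes.
        outcomes = list(self.history[goal])
        if len(outcomes) < 2:
            return float("inf")  # barely tried goals are explored first
        half = len(outcomes) // 2
        older, recent = outcomes[:half], outcomes[half:]
        return abs(sum(recent) / len(recent) - sum(older) / len(older))

    def select(self):
        # Greedy choice: train on the goal with the largest competence change.
        return max(self.history, key=self.progress)


selector = CompetenceProgressSelector(["reach_a", "reach_b"], window=4)
for outcome in [False] * 4 + [True] * 4:  # reach_a is being learned
    selector.record("reach_a", outcome)
for _ in range(8):                        # reach_b is already mastered
    selector.record("reach_b", True)
chosen = selector.select()
```

In this toy run the selector prefers `reach_a`, whose competence is still changing, over the already mastered `reach_b`. A greedy rule like this ignores dependencies between goals, which is the limitation the hierarchical, sequence-storing architecture in the abstract is meant to address.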
