Reza Jalil Mozhdehi

Deep Convolutional Correlation Iterative Particle Filter for Visual Tracking

Jul 07, 2021

Abstract: This work proposes a novel framework for visual tracking based on the integration of an iterative particle filter, a deep convolutional neural network, and a correlation filter. The iterative particle filter enables the particles to correct themselves and converge to the correct target position. We employ a novel strategy to assess the likelihood of the particles after the iterations by applying K-means clustering. Our approach ensures a consistent support for the posterior distribution. Thus, we do not need to perform resampling at every video frame, improving the utilization of prior distribution information. Experimental results on two different benchmark datasets show that our tracker performs favorably against state-of-the-art methods.
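
The abstract notes that resampling need not happen at every frame, but it does not state the rule that decides when to resample. As a generic illustration only, the sketch below uses a swapped-in, textbook criterion: resample when the effective sample size (ESS) of the particle weights falls below a fraction of the particle count. The function names, the `threshold_ratio` parameter, and the use of multinomial resampling are assumptions for this example, not the paper's method.

```python
import random


def effective_sample_size(weights):
    """Return 1 / sum(w_i^2) computed on the normalized weights."""
    total = sum(weights)
    normalized = [w / total for w in weights]
    return 1.0 / sum(w * w for w in normalized)


def maybe_resample(particles, weights, threshold_ratio=0.5, rng=None):
    """Resample only when the ESS drops below threshold_ratio * N.

    Assumption: an ESS test stands in for the paper's (unspecified) criterion.
    Returns (particles, normalized_weights, resampled_flag).
    """
    rng = rng or random.Random(0)
    n = len(particles)
    if effective_sample_size(weights) >= threshold_ratio * n:
        # Weights are still well spread: keep the particles and their prior information.
        total = sum(weights)
        return list(particles), [w / total for w in weights], False
    # Weights have degenerated: draw N particles in proportion to their weights.
    new_particles = rng.choices(particles, weights=weights, k=n)
    return new_particles, [1.0 / n] * n, True


if __name__ == "__main__":
    positions = [(10, 12), (11, 12), (40, 45), (41, 44)]
    print(maybe_resample(positions, [1.0, 1.0, 1.0, 1.0])[2])
    print(maybe_resample(positions, [0.97, 0.01, 0.01, 0.01])[2])
```

Skipping the resampling step while the weights remain well spread keeps the existing particle set, which is one way to retain information carried over from the prior.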

Deep Heterogeneous Autoencoder for Subspace Clustering of Sequential Data

Jul 14, 2020

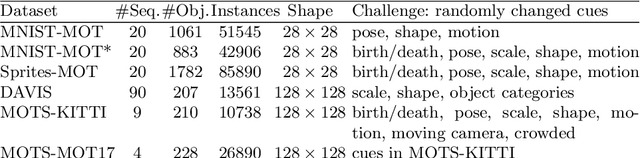

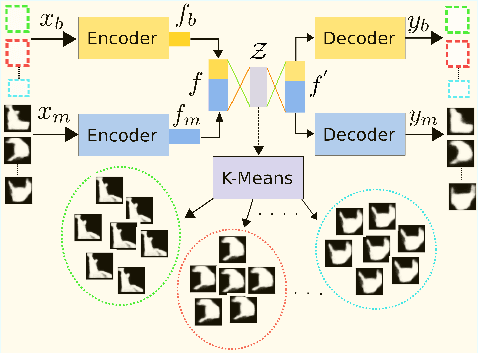

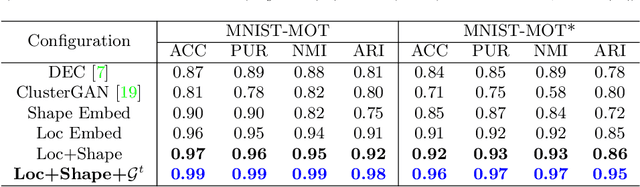

Abstract: We propose an unsupervised learning approach using a convolutional and fully connected autoencoder, which we call deep heterogeneous autoencoder, to learn discriminative features from segmentation masks and detection bounding boxes. To learn the mask shape information and its corresponding location in an input image, we extract coarse masks from a pretrained semantic segmentation network as well as their corresponding bounding boxes. We train the autoencoders jointly using task-dependent uncertainty weights to generate common latent features. The feature vector is then fed to the k-means clustering algorithm to separate the data points in the latent space. Finally, we incorporate additional penalties in the form of a constraints graph based on prior knowledge of the sequential data to increase clustering robustness. We evaluate the performance of our method using both synthetic and real-world multi-object video datasets to demonstrate the applicability of our proposed model. Our results show that the proposed technique outperforms several state-of-the-art methods on challenging video sequences.
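
The abstract does not give the form of the task-dependent uncertainty weights. One common formulation from the multi-task learning literature weights each task loss by a learned log-variance `s_i`, giving `0.5 * exp(-s_i) * L_i + 0.5 * s_i` per task. The sketch below assumes that form; the function name and the two example losses (mask reconstruction and bounding-box reconstruction) are hypothetical.

```python
import math


def uncertainty_weighted_loss(task_losses, log_variances):
    """Combine per-task losses with log-variance weights.

    Assumption: each task i contributes 0.5 * exp(-s_i) * L_i + 0.5 * s_i,
    where s_i = log(sigma_i^2) would be a trainable scalar in practice.
    """
    if len(task_losses) != len(log_variances):
        raise ValueError("one log-variance is required per task loss")
    total = 0.0
    for loss, s in zip(task_losses, log_variances):
        # A large s_i down-weights a noisy task; the + 0.5 * s_i term
        # penalizes inflating the variance without bound.
        total += 0.5 * math.exp(-s) * loss + 0.5 * s
    return total


if __name__ == "__main__":
    mask_loss, box_loss = 2.0, 4.0  # hypothetical reconstruction losses
    print(uncertainty_weighted_loss([mask_loss, box_loss], [0.0, 0.0]))
```

With both log-variances at zero the result is half the plain sum of the losses; raising one task's log-variance shrinks that task's influence on the shared latent features.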

Deep Convolutional Likelihood Particle Filter for Visual Tracking

Jun 11, 2020

Abstract: We propose a novel particle filter for convolutional-correlation visual trackers. Our method uses correlation response maps to estimate likelihood distributions and employs these likelihoods as proposal densities to sample particles. Likelihood distributions are more reliable than proposal densities based on target transition distributions because correlation response maps provide additional information regarding the target's location. Additionally, our particle filter searches for multiple modes in the likelihood distribution, which improves performance in target occlusion scenarios while decreasing computational costs by more efficiently sampling particles. In other challenging scenarios such as those involving motion blur, where only one mode is present but a larger search area may be necessary, our particle filter allows for the variance of the likelihood distribution to increase. We tested our algorithm on the Visual Tracker Benchmark v1.1 (OTB100) and our experimental results demonstrate that our framework outperforms state-of-the-art methods.
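
To make the idea of sampling particles from a response-map likelihood concrete, here is a minimal sketch under simplifying assumptions: the response map is a small 2D grid, negative responses are clipped to zero, the likelihood is the normalized map itself, and a mode is any cell strictly greater than its eight neighbors. The paper's actual likelihood model and mode search are not described in the abstract, so all function names and choices below are illustrative.

```python
import random


def response_to_likelihood(response):
    """Clip negative responses and normalize the 2D map so it sums to 1."""
    clipped = [[max(v, 0.0) for v in row] for row in response]
    total = sum(sum(row) for row in clipped)
    if total <= 0.0:
        raise ValueError("response map has no positive mass")
    return [[v / total for v in row] for row in clipped]


def find_modes(likelihood):
    """Return (row, col) cells that are strict maxima of their 8-neighborhood."""
    rows, cols = len(likelihood), len(likelihood[0])
    modes = []
    for r in range(rows):
        for c in range(cols):
            neighbors = [
                likelihood[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            ]
            if all(likelihood[r][c] > v for v in neighbors):
                modes.append((r, c))
    return modes


def sample_particles(likelihood, n, rng=None):
    """Draw n particle positions with probability proportional to the likelihood."""
    rng = rng or random.Random(0)
    cells = [(r, c) for r, row in enumerate(likelihood) for c in range(len(row))]
    probs = [p for row in likelihood for p in row]
    return rng.choices(cells, weights=probs, k=n)


if __name__ == "__main__":
    # Toy response map with two peaks, e.g. the target and an occluder.
    response = [
        [0, 0, 0, 0, 0],
        [0, 5, 0, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 3, 0],
        [0, 0, 0, 0, 0],
    ]
    likelihood = response_to_likelihood(response)
    print(find_modes(likelihood))
    print(sample_particles(likelihood, 5))
```

Because particles are drawn where the response is high rather than spread by a motion model, few samples land in regions with no support, which is the efficiency argument the abstract makes.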
