Renato Vicente

Moral Susceptibility and Robustness under Persona Role-Play in Large Language Models

Nov 11, 2025

Abstract: Large language models (LLMs) increasingly operate in social contexts, motivating analysis of how they express and shift moral judgments. In this work, we investigate the moral response of LLMs to persona role-play, in which an LLM is prompted to assume a specific character. Using the Moral Foundations Questionnaire (MFQ), we introduce a benchmark that quantifies two properties: moral susceptibility and moral robustness, defined from the variability of MFQ scores across and within personas, respectively. We find that, for moral robustness, model family accounts for most of the variance, while model size shows no systematic effect. The Claude family is, by a significant margin, the most robust, followed by Gemini and GPT-4 models, with other families exhibiting lower robustness. In contrast, moral susceptibility exhibits a mild family effect but a clear within-family size effect, with larger variants being more susceptible. Moreover, robustness and susceptibility are positively correlated, an association that is more pronounced at the family level. Additionally, we present moral foundation profiles for models without persona role-play and for personas averaged across models. Together, these analyses provide a systematic view of how persona conditioning shapes moral behavior in large language models.

A unified framework for dataset shift diagnostics

May 17, 2022

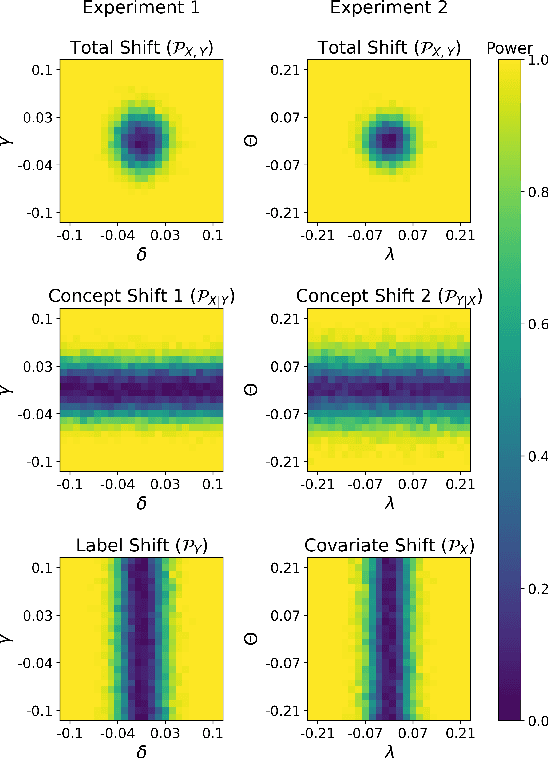

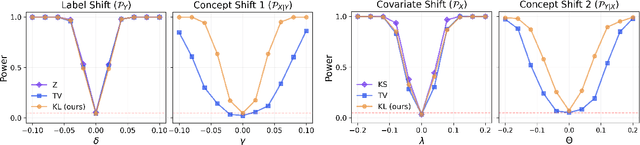

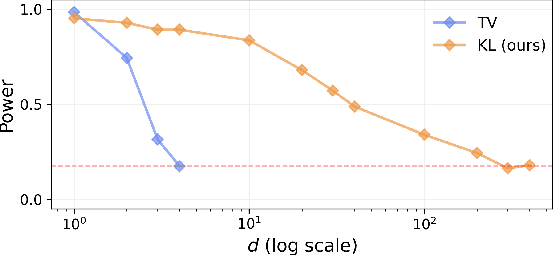

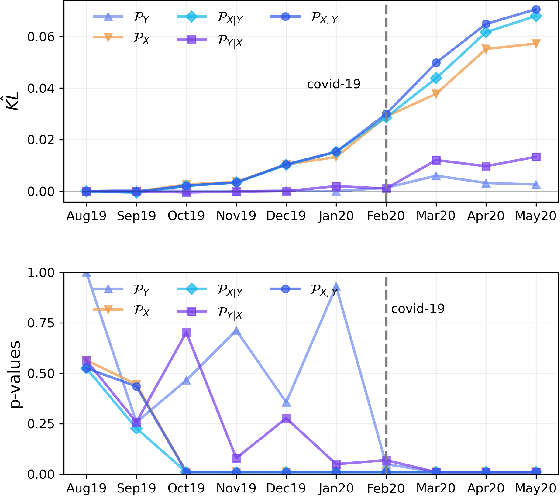

Abstract: Most machine learning (ML) methods assume that the data used in the training phase comes from the distribution of the target population. However, in practice one often faces dataset shift, which, if not properly taken into account, may decrease the predictive performance of ML models. In general, if the practitioner knows which type of shift is taking place (e.g., covariate shift or label shift), they may apply transfer learning methods to obtain better predictions. Unfortunately, current methods for detecting shift are either designed to detect only specific types of shift or cannot formally test for its presence. We introduce a general framework that gives insight into how to improve prediction methods by detecting the presence of different types of shift and quantifying how strong they are. Our approach can be used for any data type (tabular/image/text) and for both classification and regression tasks. Moreover, it uses formal hypothesis tests that control false alarms. We illustrate how our framework is useful in practice using both artificial and real datasets. Our package for dataset shift detection can be found at https://github.com/felipemaiapolo/detectshift.

Effects of personality traits in predicting grade retention of Brazilian students

Jul 12, 2021

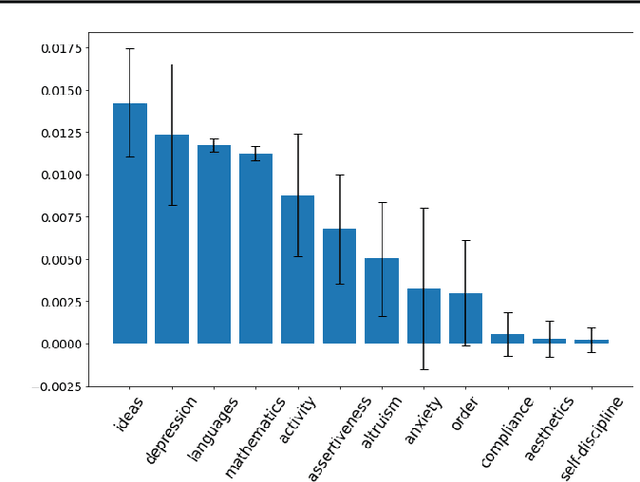

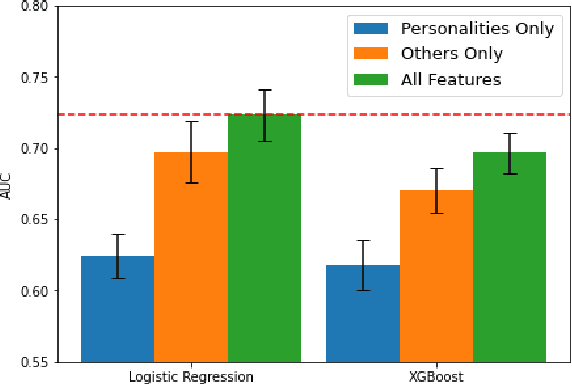

Abstract: Student grade retention is a key issue faced by many education systems, especially those in developing countries. In this paper, we seek to gauge the relevance of students' personality traits in predicting grade retention in Brazil. For that, we used data collected in 2012 and 2017 in the city of Sertaozinho, in the countryside of the state of Sao Paulo, Brazil. The surveys taken in Sertaozinho included several socioeconomic questions, standardized tests, and a personality test. The students were in grades 4, 5, and 6 in 2012. Our approach was based on training machine learning models on the survey data to predict grade retention between 2012 and 2017 using information from 2012 or before, and then using several strategies to quantify the predictive power of personality traits. We concluded that, besides being reasonably better than a random classifier when used in isolation, personality traits contribute to prediction even when socioeconomic variables and standardized test results are also used.

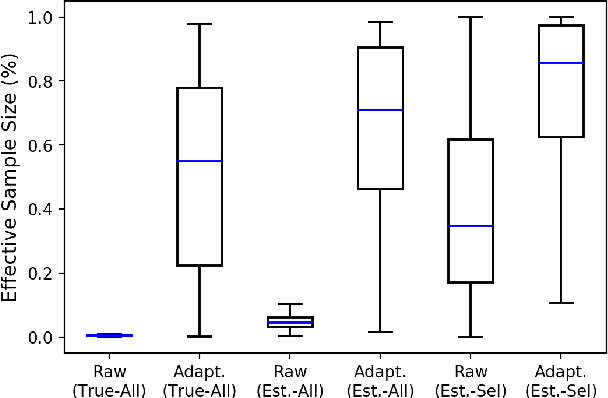

Covariate Shift Adaptation in High-Dimensional and Divergent Distributions

Oct 02, 2020

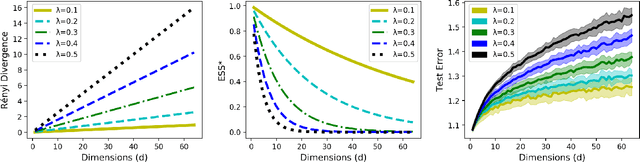

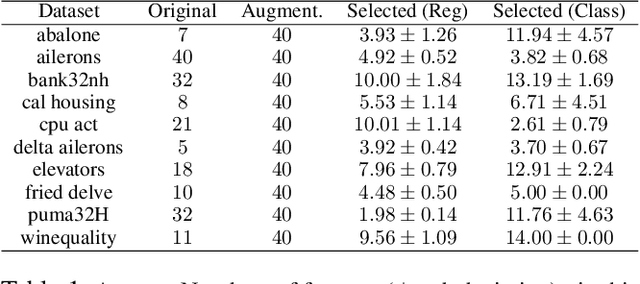

Abstract: In real-world applications of supervised learning methods, training and test sets are often sampled from distinct distributions, and we must resort to domain adaptation techniques. One special class of techniques is Covariate Shift Adaptation, which allows practitioners to obtain good generalization performance in the distribution of interest when domains differ only by the marginal distribution of features. Traditionally, Covariate Shift Adaptation is implemented using Importance Weighting, which may fail in high-dimensional settings due to small Effective Sample Sizes (ESS). In this paper, we propose (i) a connection between ESS, high-dimensional settings and generalization bounds and (ii) a simple, general and theoretically sound approach to combine feature selection and Covariate Shift Adaptation. The new approach yields good performance with improved ESS.
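To make the ESS issue concrete, here is a minimal sketch, not the paper's method. It assumes the commonly used Kish definition of effective sample size, $(\sum_i w_i)^2 / \sum_i w_i^2$, and a toy setup in which the source is $N(0, I_d)$ and the target is $N(\delta \mathbf{1}, I_d)$, so the exact density ratio is available in closed form. The function names, the shift size `delta`, and the sample size are illustrative assumptions.

```python
import numpy as np

def effective_sample_size(weights):
    """Kish effective sample size: (sum w)^2 / sum(w^2)."""
    w = np.asarray(weights, dtype=float)
    return w.sum() ** 2 / np.sum(w ** 2)

def gaussian_shift_weights(x, delta):
    """Exact importance weights p_target(x) / p_source(x) for the toy setup:
    source N(0, I_d), target N(delta * 1, I_d) (an illustrative assumption)."""
    d = x.shape[1]
    log_w = delta * x.sum(axis=1) - 0.5 * d * delta ** 2
    # Subtracting the max only rescales the weights; ESS is scale-invariant.
    return np.exp(log_w - log_w.max())

rng = np.random.default_rng(0)
n, delta = 2000, 0.5
ess_by_dim = {}
for d in (1, 5, 50):
    x_source = rng.normal(size=(n, d))  # samples drawn from the source domain
    ess_by_dim[d] = effective_sample_size(gaussian_shift_weights(x_source, delta))
    print(f"d={d:>2}  ESS={ess_by_dim[d]:.1f} of n={n}")
```

In this toy setup the same per-feature shift leaves most of the sample usable in one dimension but concentrates nearly all the weight on a handful of points as the dimension grows, which is the failure mode that motivates reducing the number of features before weighting.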

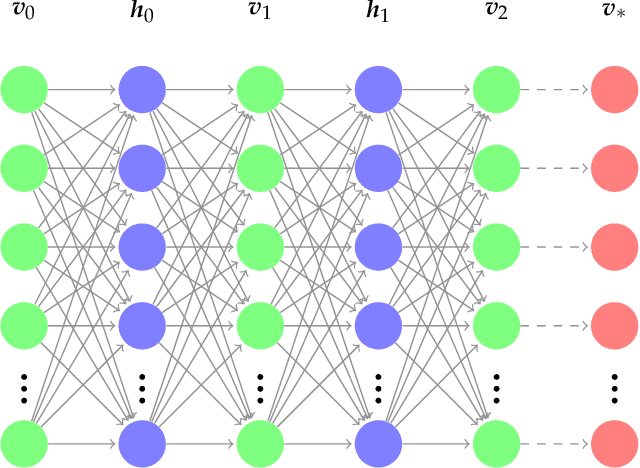

Restricted Boltzmann Machine Flows and The Critical Temperature of Ising models

Jun 17, 2020

Abstract: We explore alternative experimental setups for the iterative sampling (flow) from Restricted Boltzmann Machines (RBM) mapped onto the temperature space of square lattice Ising models by a neural network thermometer. This framework has been introduced to explore connections between RBM-based deep neural networks and the Renormalization Group (RG). It has been found that, under certain conditions, the flow of an RBM trained with Ising spin configurations approaches, in temperature space, a value around the critical one: $k_B T_c / J \approx 2.269$. In this paper we consider datasets with no information about model topology to argue that a neural network thermometer is not an accurate way to detect whether the RBM has learned scale invariance or not.
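For reference, the critical value quoted above matches Onsager's exact result for the square-lattice Ising model with nearest-neighbor coupling, $k_B T_c / J = 2 / \ln(1 + \sqrt{2})$. The short check below is only a sketch of that arithmetic; the function name is an illustrative choice and is not taken from the paper.

```python
import math

def ising_square_lattice_tc():
    """Exact critical temperature of the 2D square-lattice Ising model,
    in units of J / k_B (Onsager's result)."""
    return 2.0 / math.log(1.0 + math.sqrt(2.0))

t_c = ising_square_lattice_tc()
print(f"k_B T_c / J = {t_c:.6f}")

# Equivalent characterization of the critical point: sinh(2J / (k_B T_c)) = 1.
self_dual_residual = math.sinh(2.0 / t_c) - 1.0
print(f"sinh(2 / T_c) - 1 = {self_dual_residual:.2e}")
```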
