Renato Miyagusuku

Stereo Camera Visual SLAM with Hierarchical Masking and Motion-state Classification at Outdoor Construction Sites Containing Large Dynamic Objects

Jan 17, 2021

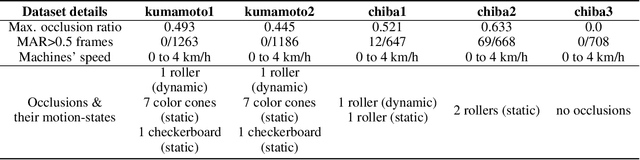

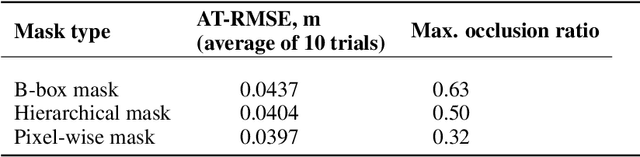

Abstract: At modern construction sites, using GNSS (Global Navigation Satellite System) to measure the real-time location and orientation (i.e., pose) of construction machines and to navigate them is very common. However, GNSS is not always available. Replacing GNSS with on-board cameras and visual simultaneous localization and mapping (visual SLAM) to navigate the machines is a cost-effective solution. Nevertheless, at construction sites multiple construction machines usually work together, side by side, causing large dynamic occlusions in the cameras' view. Standard visual SLAM cannot handle large dynamic occlusions well. In this work, we propose a motion segmentation method that efficiently extracts the static parts of crowded dynamic scenes to enable robust tracking of camera ego-motion. Our method combines semantic information with object-level geometric constraints to quickly detect the static parts of the scene. We then perform a two-step, coarse-to-fine ego-motion tracking with reference to those static parts. This leads to a novel dynamic visual SLAM formulation. We test our proposals with a real implementation based on ORB-SLAM2 and with datasets we collected at real construction sites. The results show that when standard visual SLAM fails, our method still retains accurate camera ego-motion tracking in real time. Compared to state-of-the-art dynamic visual SLAM methods, ours shows outstanding efficiency and competitive trajectory accuracy.
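The coarse-to-fine idea above can be illustrated with a toy sketch. This is not the authors' ORB-SLAM2-based implementation: it assumes ego-motion is a pure 2D image-plane shift (real stereo SLAM estimates a full 6-DoF pose), and the `Feature` type, `track` function, median estimator, and 2-pixel threshold are all hypothetical. Features inside semantic masks of potentially dynamic objects are first excluded to get a coarse ego-motion; each masked object is then classified as static or dynamic by comparing its own motion against that estimate; finally the ego-motion is refined using the background plus the objects judged static.

```python
import math
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional, Tuple

Flow = Tuple[float, float]  # (dx, dy) displacement of one matched feature between frames


@dataclass
class Feature:
    flow: Flow
    object_id: Optional[int]  # None = background (outside every semantic object mask)


def estimate_shift(flows: List[Flow]) -> Flow:
    # Toy "ego-motion": per-axis median displacement (robust to a few outliers).
    return (median(f[0] for f in flows), median(f[1] for f in flows))


def classify_objects(features: List[Feature], ego: Flow, threshold: float) -> Dict[int, str]:
    # Group the features of each masked object.
    by_obj: Dict[int, List[Flow]] = {}
    for f in features:
        if f.object_id is not None:
            by_obj.setdefault(f.object_id, []).append(f.flow)
    # An object whose motion disagrees with the camera's own motion is moving.
    states: Dict[int, str] = {}
    for oid, flows in by_obj.items():
        ox, oy = estimate_shift(flows)
        residual = math.hypot(ox - ego[0], oy - ego[1])
        states[oid] = "dynamic" if residual > threshold else "static"
    return states


def track(features: List[Feature], threshold: float = 2.0):
    # Coarse step: background only, all potentially dynamic objects masked out.
    coarse = estimate_shift([f.flow for f in features if f.object_id is None])
    # Object-level motion-state classification against the coarse estimate.
    states = classify_objects(features, coarse, threshold)
    # Fine step: background plus objects classified as static.
    static = [f.flow for f in features
              if f.object_id is None or states[f.object_id] == "static"]
    fine = estimate_shift(static)
    return coarse, fine, states
```

For example, with a parked truck (object 1) moving consistently with the background and an excavator (object 2) swinging across the view, only the excavator is masked out in the fine step:

```python
features = [
    Feature((1.0, 0.0), None), Feature((1.2, 0.1), None), Feature((0.9, -0.1), None),
    Feature((1.1, 0.0), 1), Feature((1.0, 0.0), 1),   # parked truck
    Feature((6.0, 2.0), 2), Feature((5.5, 2.2), 2),   # moving excavator
]
coarse, fine, states = track(features)
```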

* This is an Accepted Manuscript of an article published by Taylor & Francis in Advanced Robotics on Jan. 11th, 2021, available online: https://www.tandfonline.com/doi/full/10.1080/01691864.2020.1869586 [Article DOI:10.1080/01691864.2020.1869586]

Distance Invariant Sparse Autoencoder for Wireless Signal Strength Mapping

Oct 29, 2020

Abstract: Wireless signal strength-based localization can enable robust localization for robots using inexpensive sensors. For this, a location-to-signal-strength map has to be learned for each access point in the environment. Because wireless networks are ubiquitous in most environments, this can result in tens or hundreds of maps. To reduce the dimensionality of this problem, we employ autoencoders, a popular unsupervised approach for feature extraction and data compression. In particular, we propose the use of sparse autoencoders that learn latent spaces that preserve the relative distances between inputs. Distance invariance between the input and latent spaces allows our system to learn compact representations that enable precise data reconstruction while having a low impact on localization performance when maps are built in the latent space rather than the input space. We demonstrate the feasibility of our approach through experiments in outdoor environments.
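One way to express the training objective described above is a loss with three terms: reconstruction error, a sparsity penalty on the latent code, and a penalty on mismatches between pairwise distances in input and latent space. The sketch below is an assumption, not the paper's published loss: it treats each input as a vector of signal strengths from all access points at one location, uses an L1 sparsity term, and reads "relative distance" as pairwise distances normalized by their mean (so a uniformly scaled latent space is not penalized). The function names and the weights `lam` and `beta` are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence

Vector = Sequence[float]


def _pairwise(points: List[Vector]) -> List[float]:
    # Euclidean distance for every unordered pair of samples.
    return [math.dist(a, b) for a, b in combinations(points, 2)]


def _normalized(dists: List[float]) -> List[float]:
    # Divide by the mean pairwise distance so only relative distances matter.
    mean = sum(dists) / len(dists)
    return [d / mean for d in dists] if mean > 0 else list(dists)


def distance_penalty(inputs: List[Vector], latents: List[Vector]) -> float:
    # Mean squared mismatch between normalized input and latent distances.
    dx = _normalized(_pairwise(inputs))
    dz = _normalized(_pairwise(latents))
    return sum((a - b) ** 2 for a, b in zip(dx, dz)) / len(dx)


def total_loss(inputs: List[Vector], latents: List[Vector], recons: List[Vector],
               lam: float = 1.0, beta: float = 0.01) -> float:
    n = len(inputs)
    recon = sum(math.dist(x, r) ** 2 for x, r in zip(inputs, recons)) / n  # reconstruction MSE
    sparsity = sum(abs(v) for z in latents for v in z) / n                  # L1 on latent code
    return recon + lam * distance_penalty(inputs, latents) + beta * sparsity
```

A latent code that lies along the same line as the inputs, only rescaled, incurs no distance penalty, while one that collapses two distinct inputs onto the same point is penalized:

```python
inputs = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
distance_penalty(inputs, [(0.0,), (1.0,), (2.0,)])  # ~0.0
distance_penalty(inputs, [(0.0,), (2.0,), (2.0,)])  # 0.375
```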