Renate A. Schmidt

Signature-Based Abduction for Expressive Description Logics -- Technical Report

Jul 08, 2020

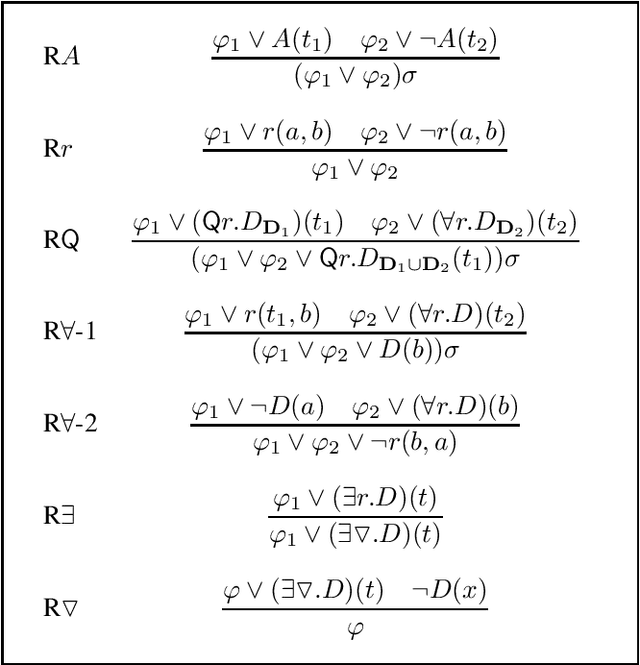

Abstract:Signature-based abduction aims at building hypotheses over a specified set of names, the signature, that explain an observation relative to some background knowledge. This type of abduction is useful for tasks such as diagnosis, where the vocabulary used for observed symptoms differs from the vocabulary expected to explain those symptoms. We present the first complete method solving signature-based abduction for observations expressed in the expressive description logic ALC, which can include TBox and ABox axioms, thereby solving the knowledge base abduction problem. The method is guaranteed to compute a finite and complete set of hypotheses, and is evaluated on a set of realistic knowledge bases.

ABox Abduction via Forgetting in ALC (Long Version)

Nov 13, 2018

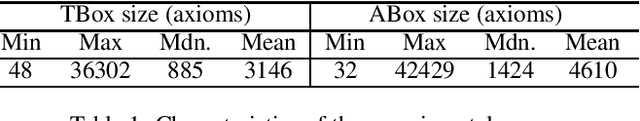

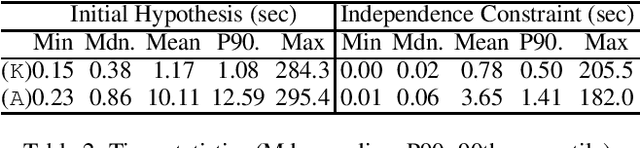

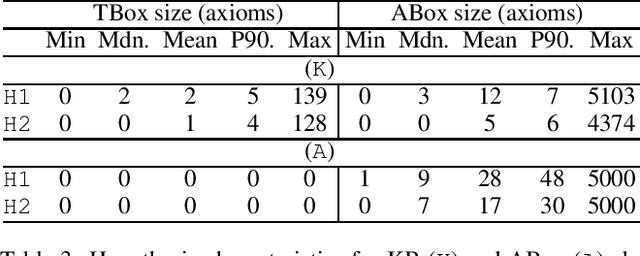

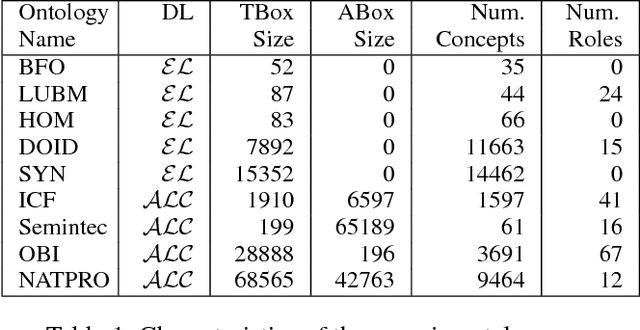

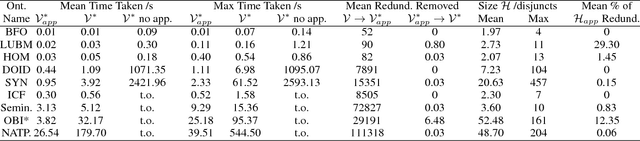

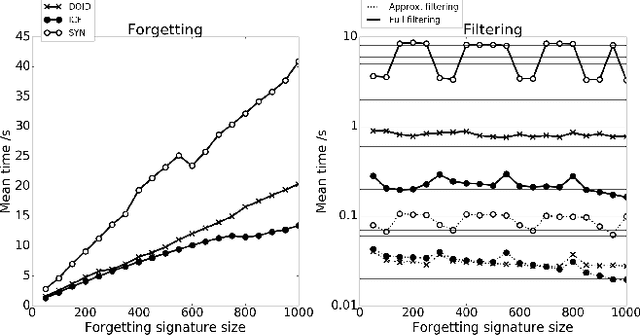

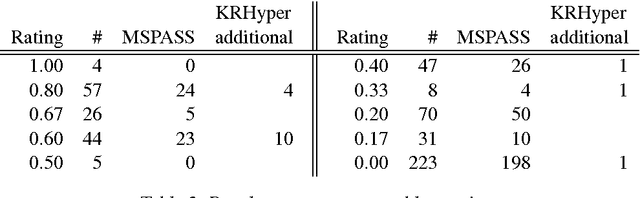

Abstract:Abductive reasoning generates explanatory hypotheses for new observations using prior knowledge. This paper investigates the use of forgetting, also known as uniform interpolation, to perform ABox abduction in description logic (ALC) ontologies. Non-abducibles are specified by a forgetting signature which can contain concept, but not role, symbols. The resulting hypotheses are semantically minimal and each consist of a set of disjuncts. These disjuncts are each independent explanations, and are not redundant with respect to the background ontology or the other disjuncts, representing a form of hypothesis space. The observations and hypotheses handled by the method can contain both atomic or complex ALC concepts, excluding role assertions, and are not restricted to Horn clauses. Two approaches to redundancy elimination are explored for practical use: full and approximate. Using a prototype implementation, experiments were performed over a corpus of real world ontologies to investigate the practicality of both approaches across several settings.

Blocking and Other Enhancements for Bottom-Up Model Generation Methods

Nov 29, 2016

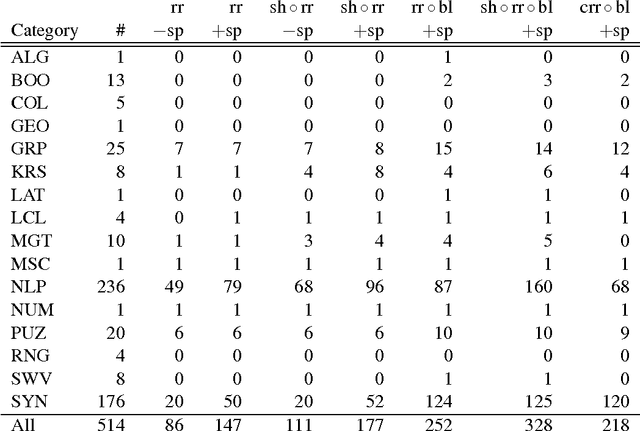

Abstract:Model generation is a problem complementary to theorem proving and is important for fault analysis and debugging of formal specifications of security protocols, programs and terminological definitions. This paper discusses several ways of enhancing the paradigm of bottom-up model generation. The two main contributions are new, generalized blocking techniques and a new range-restriction transformation. The blocking techniques are based on simple transformations of the input set together with standard equality reasoning and redundancy elimination techniques. These provide general methods for finding small, finite models. The range-restriction transformation refines existing transformations to range-restricted clauses by carefully limiting the creation of domain terms. All possible combinations of the introduced techniques and classical range-restriction were tested on the clausal problems of the TPTP Version 6.0.0 with an implementation based on the SPASS theorem prover using a hyperresolution-like refinement. Unrestricted domain blocking gave best results for satisfiable problems showing it is a powerful technique indispensable for bottom-up model generation methods. Both in combination with the new range-restricting transformation, and the classical range-restricting transformation, good results have been obtained. Limiting the creation of terms during the inference process by using the new range restricting transformation has paid off, especially when using it together with a shifting transformation. The experimental results also show that classical range restriction with unrestricted blocking provides a useful complementary method. Overall, the results showed bottom-up model generation methods were good for disproving theorems and generating models for satisfiable problems, but less efficient than SPASS in auto mode for unsatisfiable problems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge