Razieh Baradaran

Ensemble Learning-Based Approach for Improving Generalization Capability of Machine Reading Comprehension Systems

Jul 15, 2021

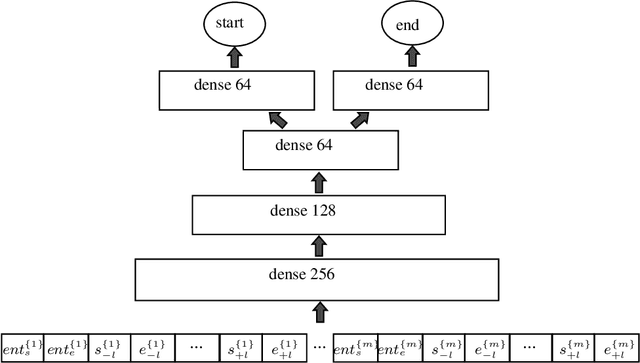

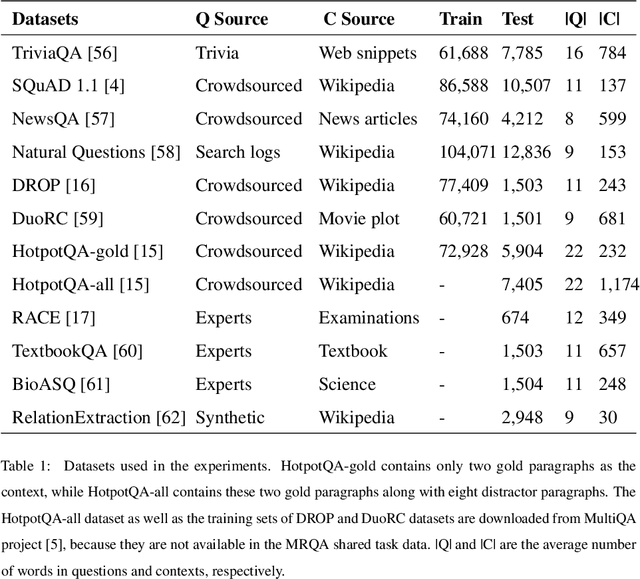

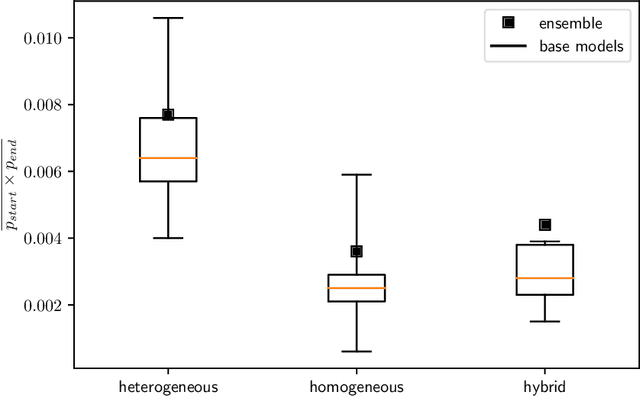

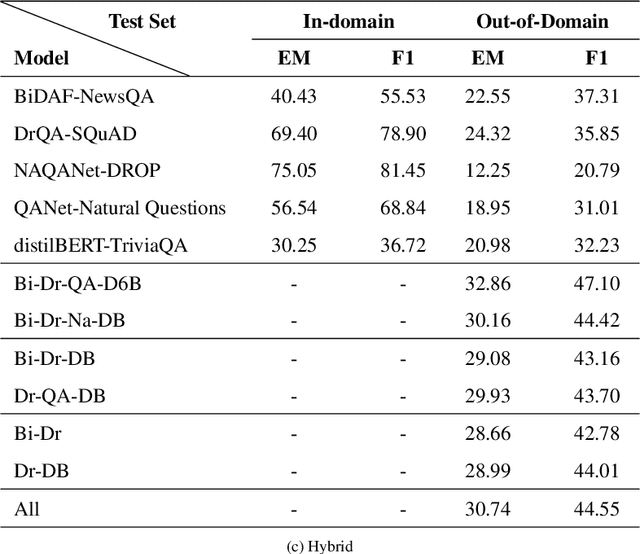

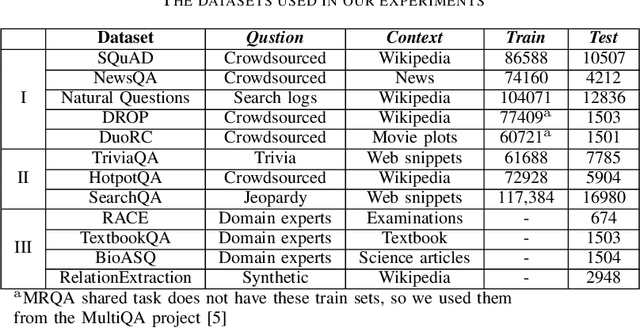

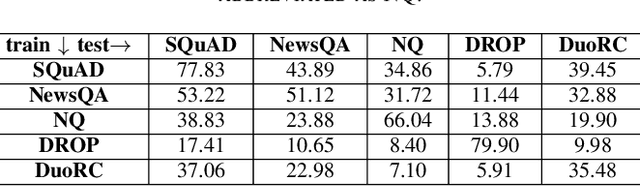

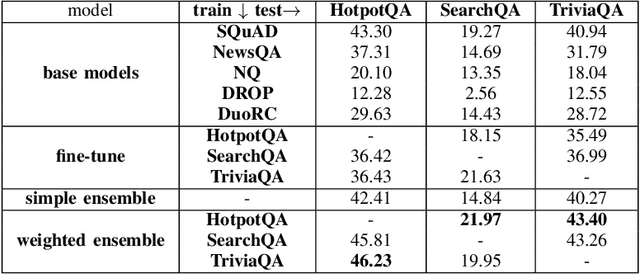

Abstract: Machine Reading Comprehension (MRC) is an active field in natural language processing, with many successful models developed in recent years. Despite their high in-distribution accuracy, these models suffer from two issues: high training cost and low out-of-distribution accuracy. Although some approaches have been proposed to tackle the generalization problem, their training costs are prohibitively high. In this paper, we investigate how ensemble learning can improve the generalization of MRC systems without retraining a big model. The base models, which have different structures, are trained separately on different datasets and then ensembled using weighting and stacking approaches in probabilistic and non-probabilistic settings. Three configurations (heterogeneous, homogeneous, and hybrid) are investigated on eight datasets and six state-of-the-art models. We identify the important factors in the effectiveness of ensemble methods. We also compare the robustness of ensemble and fine-tuned models against data distribution shifts. The experimental results show the effectiveness and robustness of the ensemble approach in improving the out-of-distribution accuracy of MRC systems, especially when the base models have similar accuracies.

Zero-Shot Estimation of Base Models' Weights in Ensemble of Machine Reading Comprehension Systems for Robust Generalization

Jun 30, 2021

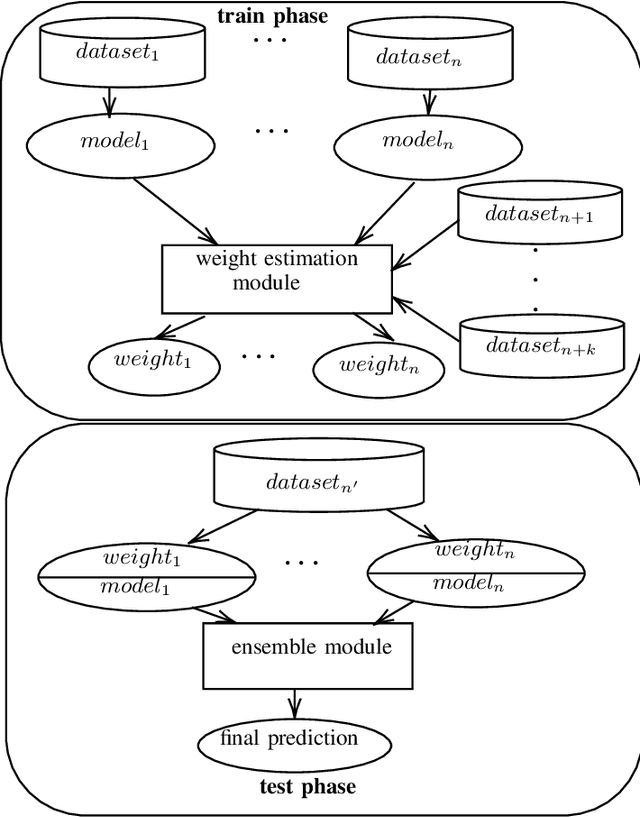

Abstract: One of the main challenges of machine reading comprehension (MRC) models is their fragile out-of-domain generalization, which limits their applicability to real-world, general-purpose question answering problems. In this paper, we leverage a zero-shot weighted ensemble method to improve the robustness of out-of-domain generalization in MRC models. In the proposed method, a weight estimation module estimates out-of-domain weights, and an ensemble module aggregates the predictions of several base models based on those weights. The experiments indicate that the proposed method not only improves the final accuracy but is also robust against domain changes.
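
The aggregation step described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function name `weighted_ensemble`, the `(answer, confidence)` input format, and the lowercase normalization of answers are all assumptions made for illustration, and the weights are taken as given rather than produced by a weight estimation module.

```python
from collections import defaultdict


def weighted_ensemble(predictions, weights):
    """Pick the answer with the highest weighted score.

    predictions: one (answer_text, confidence) pair per base model (hypothetical format).
    weights: one non-negative weight per base model, assumed to be supplied externally.
    """
    if not predictions or len(predictions) != len(weights):
        raise ValueError("need one weight per base-model prediction")

    scores = defaultdict(float)
    for (answer, confidence), weight in zip(predictions, weights):
        # Assumption: answers that differ only in case or outer whitespace are the same answer.
        key = answer.strip().lower()
        scores[key] += weight * confidence

    # Return the normalized answer with the largest accumulated score.
    return max(scores.items(), key=lambda item: item[1])[0]


# Three hypothetical base models; two of them agree on the same answer.
example = [("Paris", 0.9), ("paris", 0.6), ("Lyon", 0.95)]
print(weighted_ensemble(example, [0.5, 0.3, 0.2]))
```

Setting every confidence to 1.0 reduces this to weighted voting, which is one way to read a non-probabilistic setting; using the models' own answer probabilities corresponds to a probabilistic one.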

A Survey on Machine Reading Comprehension Systems

Jan 06, 2020

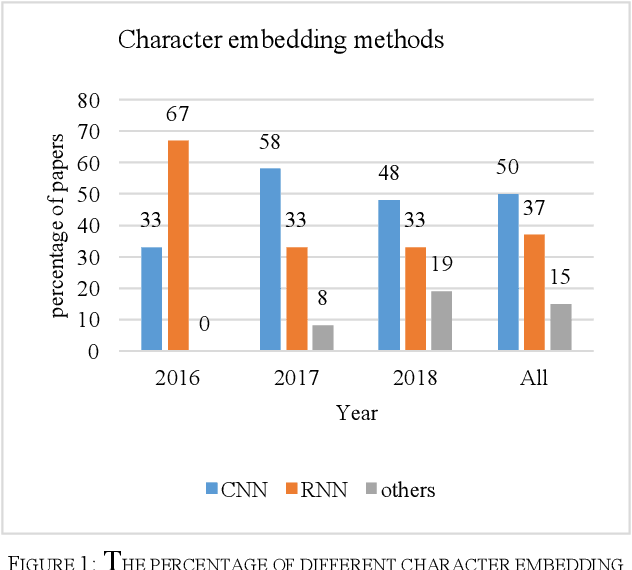

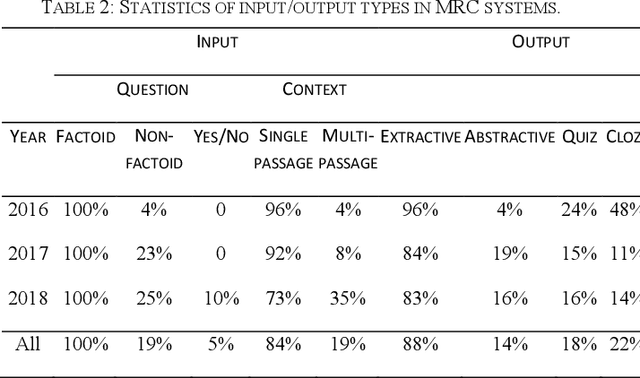

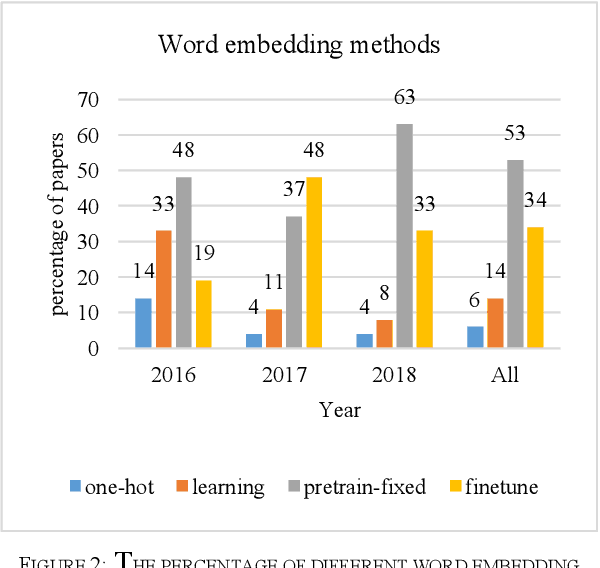

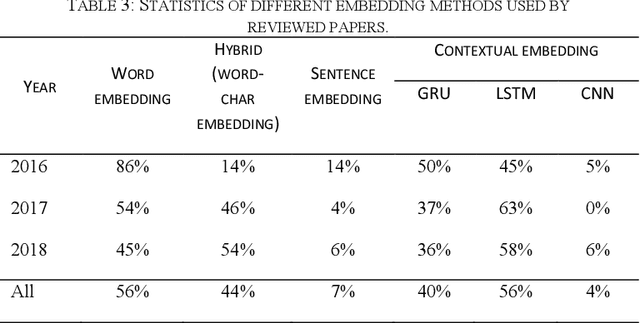

Abstract: Machine reading comprehension is a challenging task and a hot topic in natural language processing. Its goal is to develop systems that answer questions about a given context. In this paper, we present a comprehensive survey of different aspects of machine reading comprehension systems, including their approaches, structures, inputs/outputs, and research novelties. We illustrate the recent trends in this field based on 124 reviewed papers from 2016 to 2018. Our investigations demonstrate that the focus of research has shifted in recent years from answer extraction to answer generation, from single-document to multi-document reading comprehension, and from learning from scratch to using pre-trained embeddings. We also discuss the popular datasets and evaluation metrics in this field. The paper ends by examining the most cited papers and their contributions.
