Zero-Shot Estimation of Base Models' Weights in Ensemble of Machine Reading Comprehension Systems for Robust Generalization

Paper and Code

Jun 30, 2021

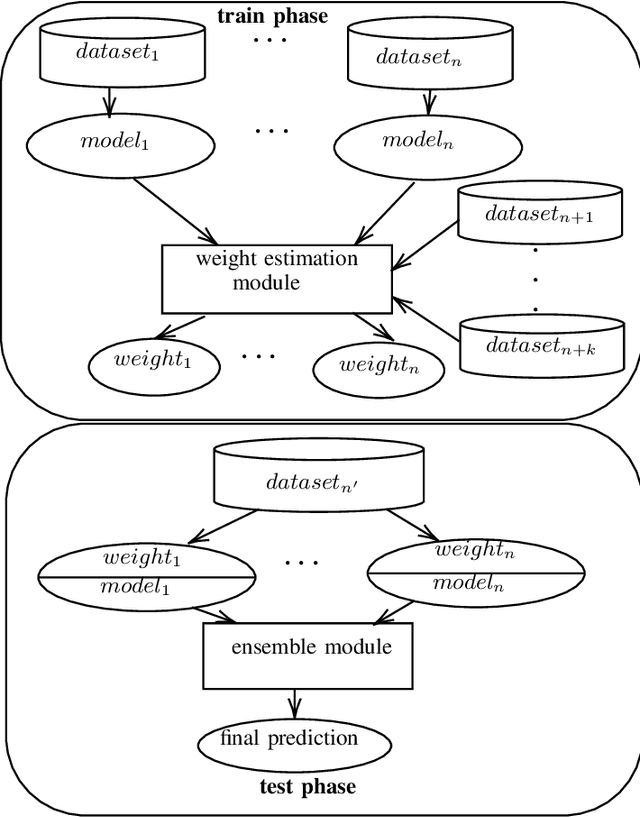

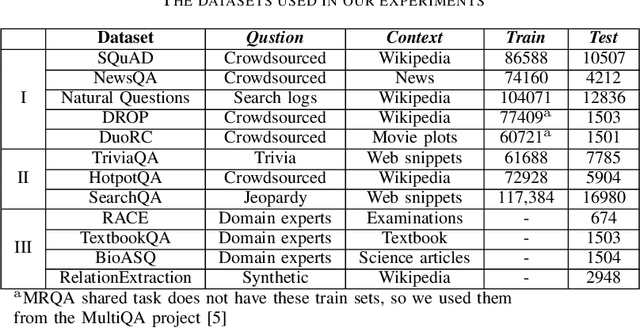

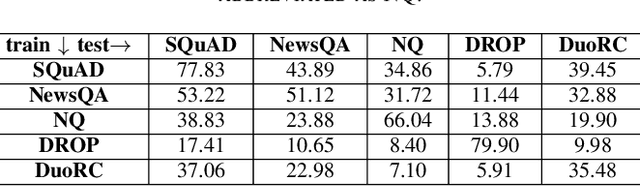

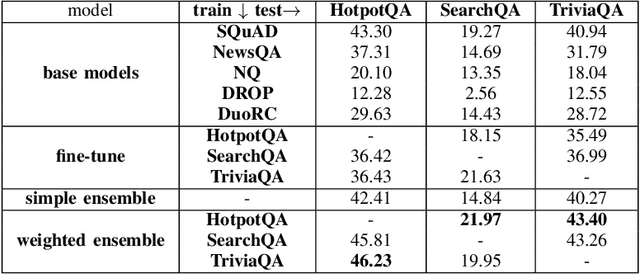

One of the main challenges of the machine reading comprehension (MRC) models is their fragile out-of-domain generalization, which makes these models not properly applicable to real-world general-purpose question answering problems. In this paper, we leverage a zero-shot weighted ensemble method for improving the robustness of out-of-domain generalization in MRC models. In the proposed method, a weight estimation module is used to estimate out-of-domain weights, and an ensemble module aggregate several base models' predictions based on their weights. The experiments indicate that the proposed method not only improves the final accuracy, but also is robust against domain changes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge