Razieh Ghiasi

Multi-view graph structure learning using subspace merging on Grassmann manifold

Apr 11, 2022

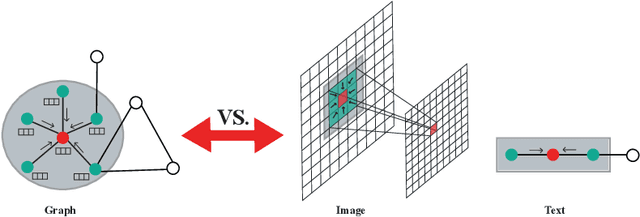

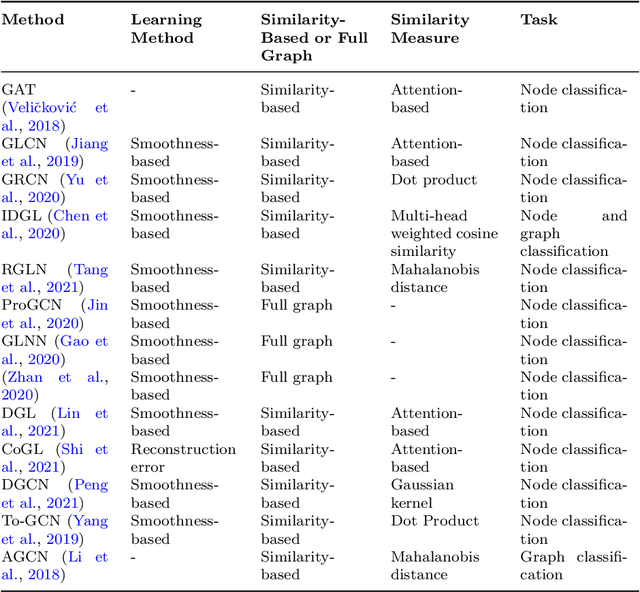

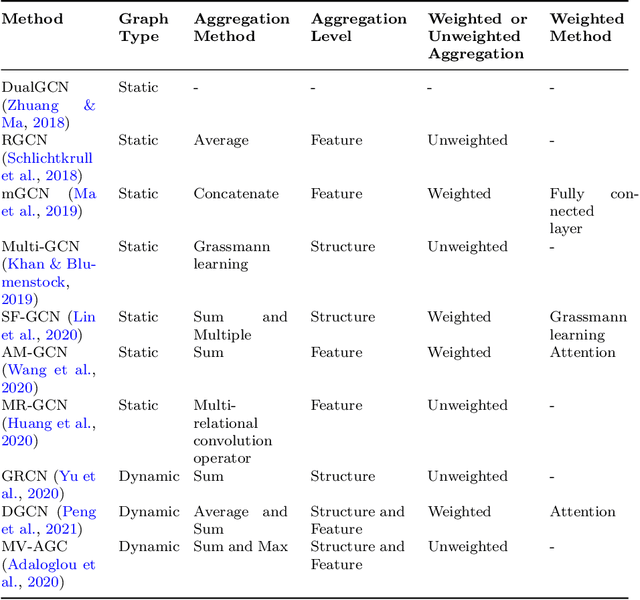

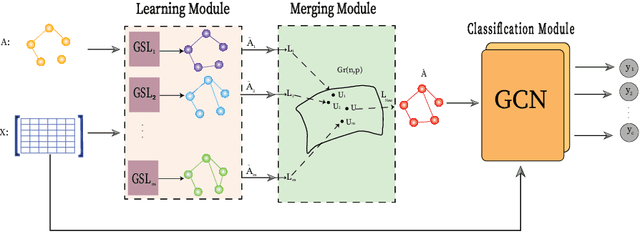

Abstract: Many successful learning algorithms have recently been developed to represent graph-structured data. For example, Graph Neural Networks (GNNs) have achieved considerable success in tasks such as node classification, graph classification, and link prediction. However, these methods depend heavily on the quality of the input graph structure. One common approach to alleviating this problem is to learn the graph structure instead of relying on a manually designed graph. In this paper, we introduce a new graph structure learning approach based on multi-view learning, named MV-GSL (Multi-View Graph Structure Learning), in which we aggregate different graph structure learning methods using subspace merging on the Grassmann manifold to improve the quality of the learned graph structures. Extensive experiments evaluate the effectiveness of the proposed method on two benchmark datasets, Cora and Citeseer. Our experiments show that the proposed method performs promisingly compared with both single and other combined graph structure learning methods.

A Survey on Machine Reading Comprehension Systems

Jan 06, 2020

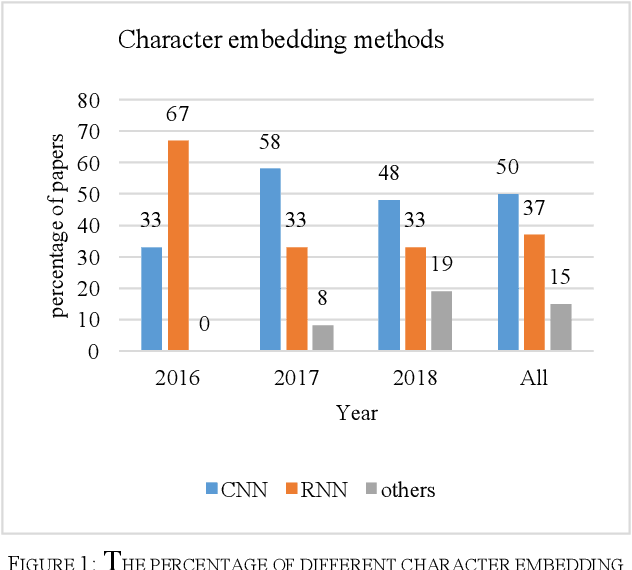

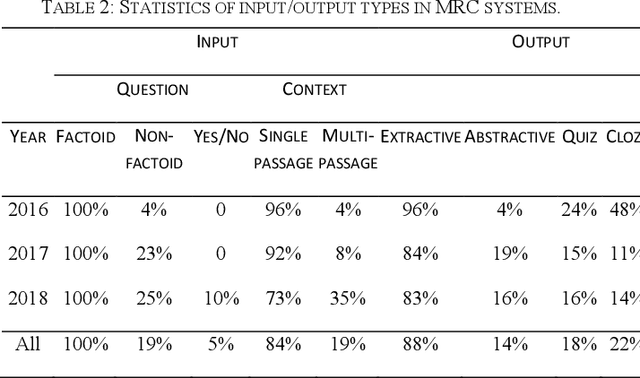

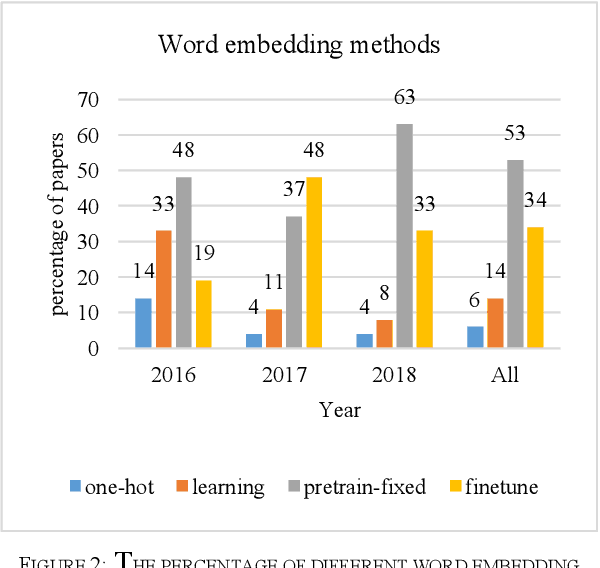

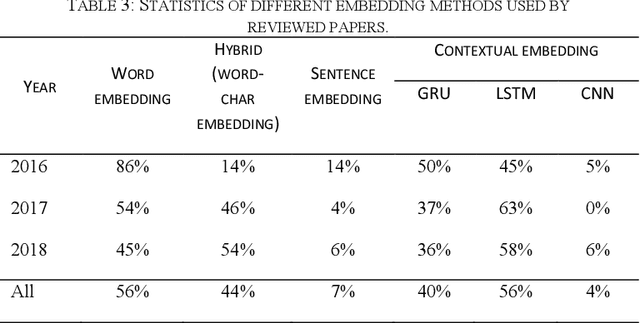

Abstract: Machine reading comprehension is a challenging task and a hot topic in natural language processing. Its goal is to develop systems that answer questions about a given context. In this paper, we present a comprehensive survey of different aspects of machine reading comprehension systems, including their approaches, structures, inputs/outputs, and research novelties. We illustrate recent trends in this field based on 124 reviewed papers from 2016 to 2018. Our investigations demonstrate that the focus of research has shifted in recent years from answer extraction to answer generation, from single-document to multi-document reading comprehension, and from learning from scratch to using pre-trained embeddings. We also discuss the popular datasets and evaluation metrics in this field. The paper concludes by examining the most cited papers and their contributions.
