Razi Mahmood

Evaluating Automated Radiology Report Quality through Fine-Grained Phrasal Grounding of Clinical Findings

Dec 02, 2024

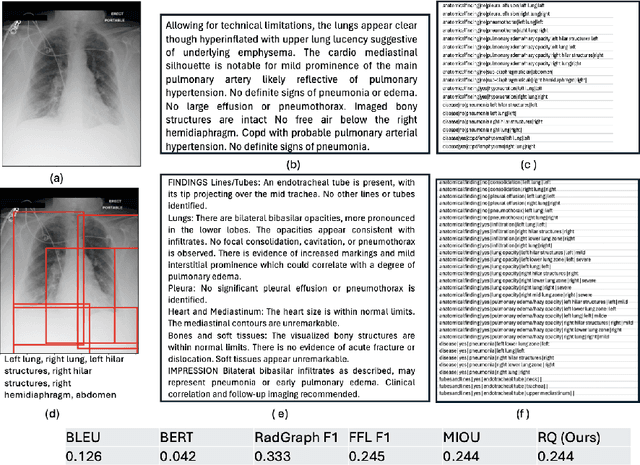

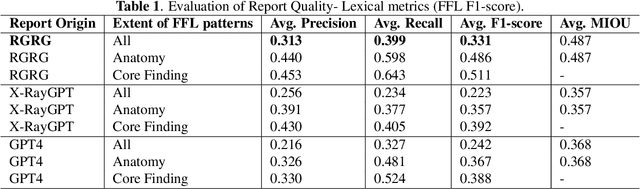

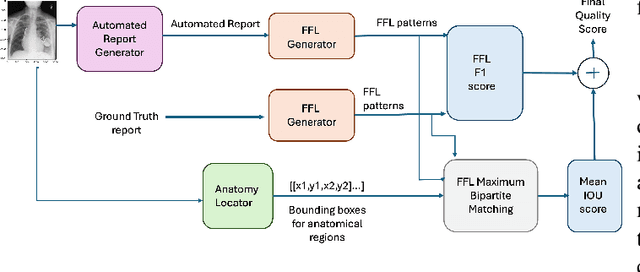

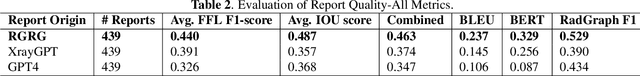

Abstract: Several evaluation metrics have recently been developed to automatically assess the quality of generative AI reports for chest radiographs based only on textual information, using lexical, semantic, or clinical named entity recognition methods. In this paper, we develop a new method of report quality evaluation that first extracts fine-grained finding patterns capturing the location, laterality, and severity of a large number of clinical findings. We then perform phrasal grounding to localize their associated anatomical regions on chest radiograph images. The textual and visual measures are then combined to rate the quality of the generated reports. We present results that compare this evaluation metric with other textual metrics on a gold standard dataset derived from the MIMIC collection and show its robustness and sensitivity to factual errors.
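
To make the idea of combining textual and visual measures concrete, the following is a minimal sketch and not the paper's actual metric. It assumes each finding has already been extracted as a name plus location, laterality, and severity, and has already been grounded to a bounding box. The `Finding` class, the attribute-agreement score, the use of intersection-over-union (IoU) for the visual part, and the `alpha` weighting are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), hypothetical format


@dataclass(frozen=True)
class Finding:
    # Hypothetical container for one fine-grained finding pattern.
    name: str
    location: str
    laterality: str
    severity: str
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def attribute_agreement(ref: Finding, gen: Finding) -> float:
    """Fraction of (location, laterality, severity) fields that match."""
    fields = ("location", "laterality", "severity")
    return sum(getattr(ref, f) == getattr(gen, f) for f in fields) / len(fields)


def score_report(reference: List[Finding], generated: List[Finding],
                 alpha: float = 0.5) -> float:
    """Assumed combination: weighted mean of textual and visual agreement.

    Findings present in only one report (missed or hallucinated) score 0.
    Returns 0.0 when neither report contains any finding.
    """
    ref_by_name = {f.name: f for f in reference}
    gen_by_name = {f.name: f for f in generated}
    names = set(ref_by_name) | set(gen_by_name)
    if not names:
        return 0.0
    total = 0.0
    for name in names:
        if name in ref_by_name and name in gen_by_name:
            r, g = ref_by_name[name], gen_by_name[name]
            total += alpha * attribute_agreement(r, g) + (1 - alpha) * iou(r.box, g.box)
    return total / len(names)
```

Under these assumptions, a generated report that names the right finding but misstates its severity or grounds it to a smaller region receives partial credit, while a hallucinated finding lowers the overall score because it adds a zero-scoring entry.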

Fact-Checking of AI-Generated Reports

Jul 27, 2023

Abstract: With advances in generative artificial intelligence (AI), it is now possible to produce realistic-looking automated reports for preliminary reads of radiology images. This can expedite clinical workflows, improve accuracy, and reduce overall costs. However, it is also well known that such models often hallucinate, leading to false findings in the generated reports. In this paper, we propose a new method of fact-checking AI-generated reports using their associated images. Specifically, the developed examiner differentiates real and fake sentences in reports by learning the association between an image and sentences describing real or potentially fake findings. To train such an examiner, we first created a new dataset of fake reports by perturbing the findings in the original ground truth radiology reports associated with images. Text encodings of real and fake sentences drawn from these reports are then paired with image encodings to learn the mapping to real/fake labels. The utility of such an examiner is demonstrated for verifying automatically generated reports by detecting and removing fake sentences. Future generative AI approaches can use the resulting tool to validate their reports, leading to a more responsible use of AI in expediting clinical workflows.
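
The pairing of image and sentence encodings with real/fake labels can be illustrated with a toy sketch. This is not the paper's examiner: the encodings below are synthetic random vectors, the only feature is the cosine similarity between the two encodings, and the classifier is a one-dimensional logistic regression. The function names (`make_synthetic_pairs`, `train_examiner`, `is_real`) are hypothetical.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def make_synthetic_pairs(n=200, dim=16, seed=0):
    """Synthetic stand-in for (image encoding, sentence encoding, label).

    Assumption: a real sentence encoding lies close to its image encoding,
    while a fake one is unrelated to it. Label 1.0 is real, 0.0 is fake.
    """
    rng = random.Random(seed)
    pairs = []
    for i in range(n):
        img = [rng.gauss(0, 1) for _ in range(dim)]
        if i % 2 == 0:
            txt = [x + 0.1 * rng.gauss(0, 1) for x in img]
            label = 1.0
        else:
            txt = [rng.gauss(0, 1) for _ in range(dim)]
            label = 0.0
        pairs.append((img, txt, label))
    return pairs


def train_examiner(pairs, lr=1.0, steps=500):
    """Fit logistic regression on the image-sentence similarity."""
    sims = [cosine(img, txt) for img, txt, _ in pairs]
    labels = [y for _, _, y in pairs]
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for s, y in zip(sims, labels):
            p = 1.0 / (1.0 + math.exp(-(w * s + b)))
            grad_w += (p - y) * s
            grad_b += p - y
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def is_real(img, txt, w, b):
    """Predict whether a sentence encoding is consistent with the image."""
    return w * cosine(img, txt) + b >= 0.0
```

In this sketch, verifying a generated report would amount to calling `is_real` on each sentence encoding and dropping the sentences flagged as fake. A real examiner would replace the synthetic vectors with outputs of trained image and text encoders.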

Spatially-Preserving Flattening for Location-Aware Classification of Findings in Chest X-Rays

Apr 19, 2022

Abstract: Chest X-rays have become the focus of vigorous deep learning research in recent years due to the availability of large labeled datasets. While classification of anomalous findings is now possible, ensuring that they are correctly localized remains challenging, as this requires recognition of anomalies within anatomical regions. Existing deep learning networks for fine-grained anomaly classification learn location-specific findings using architectures in which location and spatial contiguity information is lost during the flattening step before classification. In this paper, we present a new spatially preserving deep learning network that retains location and shape information through auto-encoding of feature maps during flattening. The feature maps, auto-encoder, and classifier are then trained in an end-to-end fashion to enable location-aware classification of findings in chest X-rays. Results are shown on a large multi-hospital chest X-ray dataset, indicating a significant improvement in the quality of finding classification over state-of-the-art methods.

* 5 pages, Paper presented as a poster at the IEEE International Symposium on Biomedical Imaging, 2022, Paper Number 828
