Raul Acuna

Dynamic Markers: UAV landing proof of concept

Jan 09, 2019

Abstract: In this paper, we introduce a dynamic fiducial marker that can change its appearance according to the spatiotemporal requirements of the visual perception task of a mobile robot that uses a camera as its sensor. We present a control scheme that dynamically changes the appearance of the marker in order to increase the detection range and to ensure better accuracy at close range. The marker control takes into account the camera-to-marker distance (which influences the scale of the marker in image coordinates) to select which fiducial markers to display. Hence, we realize a tight coupling between the visual pose control of the mobile robot and the appearance of the dynamic fiducial marker. Additionally, we discuss the practical implications of time delays caused by processing time and by communication delays between the robot and the marker. Finally, we propose a real-time dynamic marker visual servoing control scheme for quadcopter landing and evaluate its performance in a real-world example.
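The distance-dependent marker selection can be illustrated with the pinhole model, under which a fronto-parallel square marker of side length $L$ at distance $Z$ appears roughly $f L / Z$ pixels wide for a focal length of $f$ pixels. The sketch below is a hypothetical illustration, not the authors' controller: `Camera`, `select_marker_side`, the candidate side lengths, the 80% image-fill limit and the 20-pixel detection threshold are all assumptions. It picks the largest displayable marker that is still detectable and still fits inside the image.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Camera:
    focal_px: float       # focal length in pixels (hypothetical parameter)
    image_width_px: int   # image width in pixels


def projected_size_px(cam: Camera, side_m: float, distance_m: float) -> float:
    """Pinhole approximation of a fronto-parallel marker's side length in pixels."""
    return cam.focal_px * side_m / distance_m


def select_marker_side(
    cam: Camera,
    candidate_sides_m: Sequence[float],
    distance_m: float,
    max_fill: float = 0.8,   # assumed: marker may cover at most 80% of the image width
    min_px: float = 20.0,    # assumed: minimum side length in pixels for reliable detection
) -> Optional[float]:
    """Return the largest candidate side length that is detectable and fits in the image,
    or None if no candidate qualifies at this distance."""
    best = None
    for side in sorted(candidate_sides_m):
        size = projected_size_px(cam, side, distance_m)
        if min_px <= size <= max_fill * cam.image_width_px:
            best = side  # candidates are sorted ascending, so the last hit is the largest
    return best
```

For example, with a 600-pixel focal length, a 640-pixel-wide image and candidate sides of 0.05, 0.2 and 0.8 m, the sketch selects the 0.8 m marker at 10 m and the 0.2 m marker at 0.5 m. A practical controller would also need hysteresis around the switching distances and would have to account for the processing and communication delays mentioned in the abstract; this sketch ignores both.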

Robustness of control point configurations for homography and planar pose estimation

Mar 08, 2018

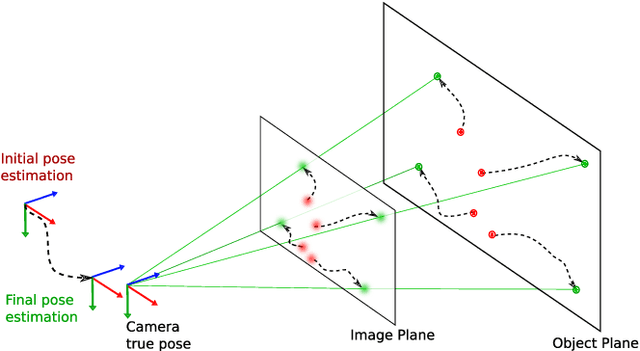

Abstract: In this paper, we investigate the influence of the spatial configuration of $n \geq 4$ control points on the accuracy and robustness of space resection methods, such as those used for pose estimation with fiducial markers. We find robust configurations of control points by minimizing the first-order perturbed solution of the DLT algorithm, which is equivalent to minimizing the condition number of the data matrix. An empirical statistical evaluation is presented, verifying that these optimized control point configurations not only increase the performance of DLT homography estimation but also improve the performance of planar pose estimation methods such as IPPE and EPnP, including the iterative minimization of the reprojection error, which is the most accurate algorithm. We describe the characteristics of stable control point configurations for real-world noisy camera data; these configurations are practically independent of the camera pose and form certain symmetric patterns that depend on the number of points. Finally, we present a comparison of optimized configurations versus the number of control points.
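The condition number being minimized can be computed directly for a candidate configuration. The sketch below assumes the standard DLT formulation, in which each correspondence between a planar control point $(x, y)$ and its image $(u, v)$ contributes two rows of a $2n \times 9$ matrix $A$ with $A\,\mathrm{vec}(H) = 0$. Because $A$ has a one-dimensional null space for noise-free data, the sketch uses the ratio $\sigma_1 / \sigma_8$ of the largest to the smallest non-zero singular value; that this is exactly the ratio used in the paper is an assumption, as is the omission of Hartley-style coordinate normalization. The function names and the example homography are hypothetical.

```python
import numpy as np


def dlt_matrix(obj_xy, img_uv):
    """Stack the two DLT rows per correspondence (x, y) -> (u, v) into a 2n x 9 matrix A
    such that A @ H.ravel() = 0 for the row-major homography H."""
    rows = []
    for (x, y), (u, v) in zip(obj_xy, img_uv):
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y, -u])
    return np.asarray(rows, dtype=float)


def project(H, obj_xy):
    """Map planar control points through the homography H (noise-free image points)."""
    pts = np.asarray(obj_xy, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def dlt_condition_number(H, obj_xy):
    """sigma_1 / sigma_8 of the unnormalized DLT matrix (assumed criterion)."""
    A = dlt_matrix(obj_xy, project(H, obj_xy))
    s = np.linalg.svd(A, compute_uv=False)  # singular values in descending order
    # For n = 4, A is 8 x 9 and has 8 singular values; for n >= 5 the 9th is ~0
    # (its right singular vector is vec(H)), so index 7 is the smallest non-zero one.
    return s[0] / s[7]


# Hypothetical camera-like homography and two 4-point configurations (metres).
H = np.array([[800.0, 10.0, 320.0], [5.0, 790.0, 240.0], [0.01, 0.02, 1.0]])
square = [(-0.1, -0.1), (0.1, -0.1), (0.1, 0.1), (-0.1, 0.1)]
near_line = [(-0.1, 0.0), (-0.03, 1e-5), (0.03, -1e-5), (0.1, 2e-5)]
print(dlt_condition_number(H, square), dlt_condition_number(H, near_line))
```

The spread-out square yields a far smaller condition number than the almost collinear set, which is the kind of difference the paper's optimization exploits. Searching over point positions to minimize `dlt_condition_number` (for instance with a generic numerical minimizer) is not shown.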

MOMA: Visual Mobile Marker Odometry

Apr 18, 2017

Abstract: In this paper, we present a cooperative odometry scheme based on the detection of mobile markers, in line with the idea of cooperative positioning for multiple robots [1]. To this end, we introduce a simple optimization scheme that realizes visual mobile marker odometry via accurate fixed-marker-based camera positioning, and we analyse the characteristics of the errors inherent to the method compared with classical fixed-marker-based navigation and visual odometry. In addition, we provide a specific UAV-UGV configuration that allows the UAV to move continuously without stopping, and a minimal caterpillar-like configuration that works with a single UGV. Finally, we present a real-world implementation and evaluation of the proposed UAV-UGV configuration.
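The idea of relaying a position estimate through markers that alternately stay still and move can be sketched with rigid-body transforms. The example below is a simplified, hypothetical illustration in SE(2), not the optimization scheme from the paper: one camera frame that sees both a stationary reference marker with known world pose and a newly placed marker gives the new marker's world pose as $T_{wb} = T_{wa}\,T_{ca}^{-1}\,T_{cb}$, and the new marker then serves as the reference for the next step. The functions `se2`, `relay_marker_pose` and `chain_marker_poses` are assumptions introduced for illustration.

```python
import numpy as np


def se2(x, y, theta):
    """Homogeneous 3x3 matrix of a planar rigid-body pose (simplification of SE(3))."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])


def relay_marker_pose(T_w_ref, T_c_ref, T_c_new):
    """World pose of a newly placed marker from one camera frame that observes both
    the stationary reference marker (known world pose T_w_ref) and the new marker.
    T_c_ref and T_c_new are the marker poses expressed in the camera frame."""
    T_w_c = T_w_ref @ np.linalg.inv(T_c_ref)  # camera pose in the world frame
    return T_w_c @ T_c_new


def chain_marker_poses(T_w_first, observations):
    """Caterpillar-style chaining: each (T_c_ref, T_c_new) pair comes from one camera
    frame, and the 'new' marker of step k is the stationary reference of step k + 1."""
    poses = [T_w_first]
    for T_c_ref, T_c_new in observations:
        poses.append(relay_marker_pose(poses[-1], T_c_ref, T_c_new))
    return poses
```

Because every step composes the previous estimate with new measurements, errors accumulate along the chain; this odometry-like drift is why the error characteristics of the method are compared with fixed-marker navigation and visual odometry.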
