Raphaël Dang-Nhu

Evaluating Disentanglement of Structured Latent Representations

Jan 11, 2021

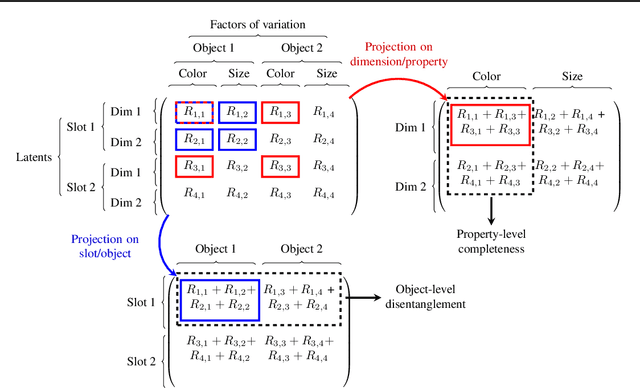

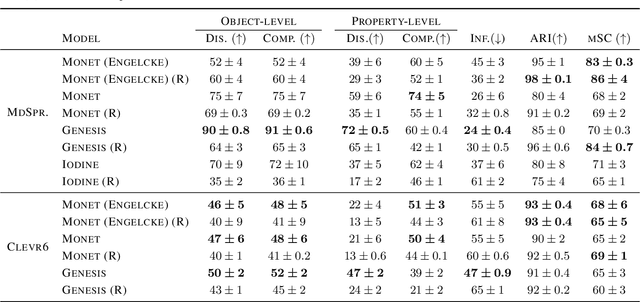

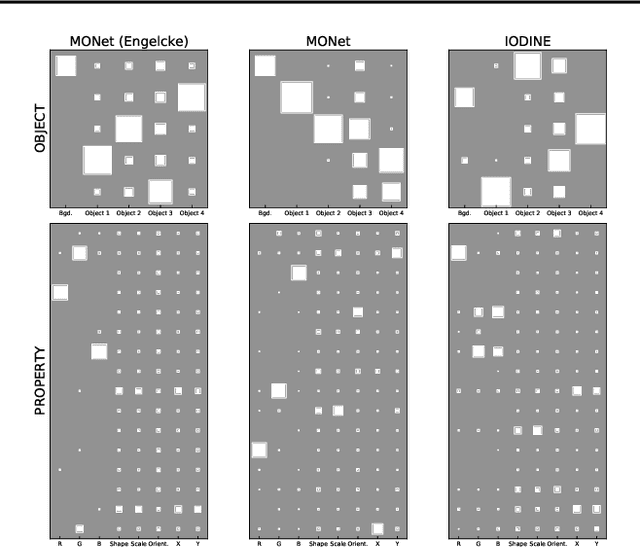

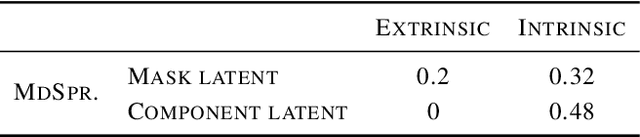

Abstract: We design the first multi-layer disentanglement metric operating at all hierarchy levels of a structured latent representation, and derive its theoretical properties. Applied to object-centric representations, our metric unifies the evaluation of both object separation between latent slots and internal slot disentanglement into a common mathematical framework. It also addresses the problematic dependence on segmentation mask sharpness of previous pixel-level segmentation metrics such as ARI. Perhaps surprisingly, our experimental results show that good ARI values do not guarantee a disentangled representation, and that the exclusive focus on this metric has led to counterproductive choices in some previous evaluations. As an additional technical contribution, we present a new algorithm for obtaining feature importances that handles slot permutation invariance in the representation.
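For readers unfamiliar with ARI (the Adjusted Rand Index), the following is a minimal pure-Python sketch of how it scores agreement between two pixel-level segmentations regardless of how the segments are labelled. It assumes hard per-pixel labels given as flat lists; the function name `adjusted_rand_index` is illustrative and is not taken from the paper, and this is not the metric the paper proposes.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand Index between two hard labelings of the same pixels."""
    if len(labels_true) != len(labels_pred):
        raise ValueError("both labelings must cover the same pixels")
    total_pairs = comb(len(labels_true), 2)
    if total_pairs == 0:
        return 1.0  # fewer than two pixels: trivially identical partitions

    # Count pixel pairs that fall in the same segment, per labeling and jointly.
    joint = Counter(zip(labels_true, labels_pred))
    same_both = sum(comb(c, 2) for c in joint.values())
    same_true = sum(comb(c, 2) for c in Counter(labels_true).values())
    same_pred = sum(comb(c, 2) for c in Counter(labels_pred).values())

    expected = same_true * same_pred / total_pairs  # agreement expected by chance
    maximum = (same_true + same_pred) / 2
    if maximum == expected:
        return 1.0  # degenerate case, e.g. both labelings use a single segment
    return (same_both - expected) / (maximum - expected)


# Segment ids are swapped, but the partition is the same.
print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))
```

Because the score only depends on which pixels are grouped together, it says nothing about how information is organised inside each latent slot, which is consistent with the abstract's point that a good ARI value alone does not establish disentanglement.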

PLANS: Robust Program Learning from Neurally Inferred Specifications

Jun 05, 2020

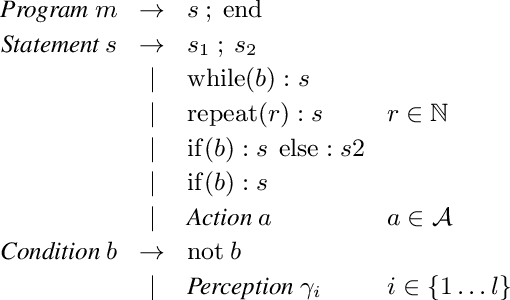

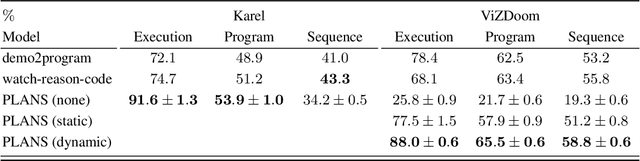

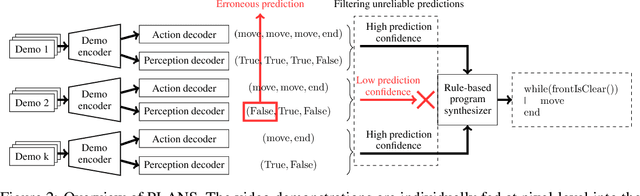

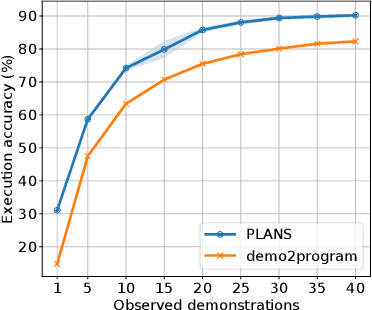

Abstract: Recent years have seen the rise of statistical program learning based on neural models as an alternative to traditional rule-based systems for programming by example. Rule-based approaches offer correctness guarantees in an unsupervised way as they inherently capture logical rules, while neural models scale more realistically to raw, high-dimensional input and provide resistance to noisy I/O specifications. We introduce PLANS (Program LeArning from Neurally inferred Specifications), a hybrid model for program synthesis from visual observations that gets the best of both worlds, relying on (i) a neural architecture trained to extract abstract, high-level information from each raw individual input, and (ii) a rule-based system using the extracted information as I/O specifications to synthesize a program capturing the different observations. To address the key challenge of making PLANS resistant to noise in the network's output, we introduce a filtering heuristic for I/O specifications based on selective classification techniques. We obtain state-of-the-art performance at program synthesis from diverse demonstration videos in the Karel and ViZDoom environments, while requiring no ground-truth program for training. We make our implementation available at github.com/rdang-nhu/PLANS.
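The general idea behind selective classification, namely abstaining on predictions the network is not confident about, can be sketched as follows. This is a generic confidence-threshold filter under stated assumptions, not the heuristic from the PLANS repository: the names `spec_confidence` and `filter_specs`, the dictionary layout, and the 0.9 threshold are all hypothetical.

```python
from math import prod


def spec_confidence(token_distributions):
    """Confidence of one inferred specification.

    Assumption: the network emits one probability distribution per output
    token, and the product of the per-token maxima is used as the score.
    """
    return prod(max(dist) for dist in token_distributions)


def filter_specs(predicted_specs, threshold=0.9):
    """Keep only specifications whose confidence reaches the threshold."""
    return [
        spec for spec in predicted_specs
        if spec_confidence(spec["probs"]) >= threshold
    ]


# Two hypothetical inferred specifications, one confident and one not.
specs = [
    {"name": "demo_0", "probs": [[0.98, 0.02], [0.99, 0.01]]},
    {"name": "demo_1", "probs": [[0.55, 0.45], [0.90, 0.10]]},
]
kept = filter_specs(specs)
print([spec["name"] for spec in kept])
```

Only the retained specifications would then be handed to the rule-based synthesizer, trading coverage for a lower chance of passing it a wrong input-output pair.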

Adversarial Attacks on Probabilistic Autoregressive Forecasting Models

Mar 08, 2020

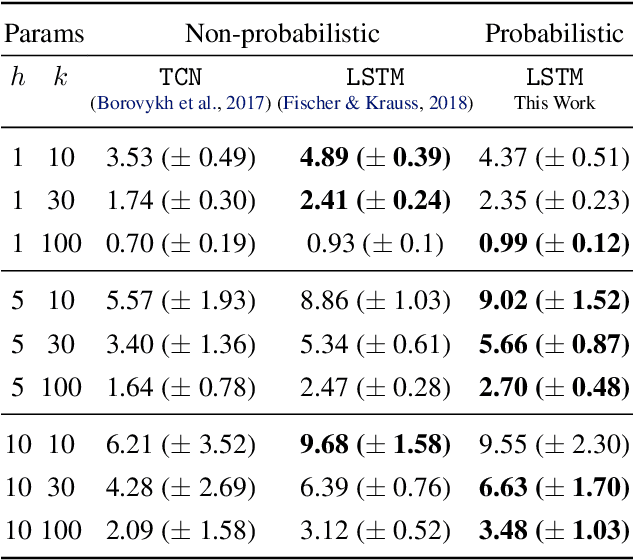

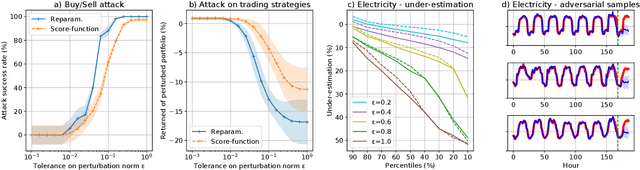

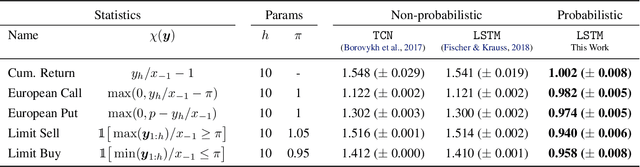

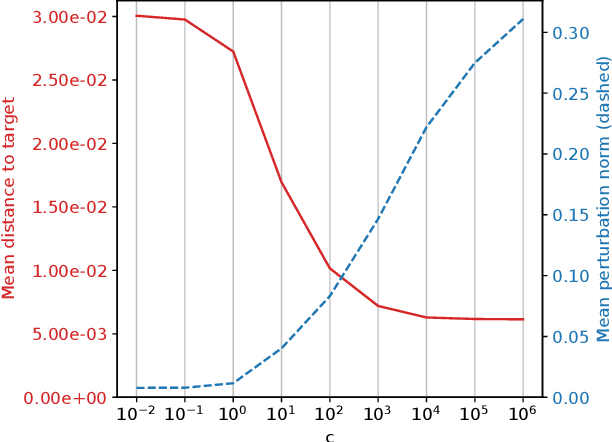

Abstract: We develop an effective method for generating adversarial attacks on neural models that output a sequence of probability distributions rather than a sequence of single values. This setting includes the recently proposed deep probabilistic autoregressive forecasting models that estimate the probability distribution of a time series given its past and achieve state-of-the-art results in a diverse set of application domains. The key technical challenge we address is effectively differentiating through the Monte-Carlo estimation of statistics of the joint distribution of the output sequence. Additionally, we extend prior work on probabilistic forecasting to the Bayesian setting, which allows conditioning on future observations instead of only on past observations. We demonstrate that our approach can successfully generate attacks with small input perturbations in two challenging tasks where robust decision making is crucial: stock market trading and prediction of electricity consumption.
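One standard way to differentiate through a Monte-Carlo estimate is the reparameterization (pathwise) estimator; the abstract does not say which estimator the paper uses, so the sketch below is only an illustration of the problem. It assumes a single Gaussian output with mean `mu` and standard deviation `sigma`, and estimates the gradient of E[x^2] with respect to `mu`, whose exact value is 2 * mu. The function name is hypothetical.

```python
import random


def pathwise_gradient_wrt_mean(mu, sigma, num_samples=100_000, seed=0):
    """Monte-Carlo estimate of d/dmu E[x**2] for x ~ Normal(mu, sigma**2)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        eps = rng.gauss(0.0, 1.0)   # noise that does not depend on mu
        x = mu + sigma * eps        # reparameterized sample
        total += 2.0 * x            # d(x**2)/dmu = 2 * x, since dx/dmu = 1
    return total / num_samples


estimate = pathwise_gradient_wrt_mean(mu=1.5, sigma=1.0)
print(estimate)  # should be close to the exact gradient 2 * mu = 3.0
```

Writing the sample as a deterministic function of the parameter plus independent noise is what lets the gradient pass through the sampling step; an attack would apply the same idea to the model input rather than to `mu`.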
