Ramanakumar Sankar

TCuPGAN: A novel framework developed for optimizing human-machine interactions in citizen science

Nov 23, 2023

Abstract: In the era of big data in scientific research, there is a need for techniques that reduce the human effort involved in labeling and categorizing large datasets by bringing in sophisticated machine tools. To address this need, we present a novel, general-purpose model for 3D segmentation that leverages patch-wise adversariality and Long Short-Term Memory to encode sequential information. Using this model alongside citizen science projects that use 3D datasets (image cubes) on the Zooniverse platform, we propose an iterative human-machine optimization framework in which volunteers see only a fraction of the 2D slices from these cubes. We use the patch-wise discriminator in our model to estimate which slices within these image cubes have poorly generalized feature representations and, correspondingly, poor machine performance. These images, together with the corresponding machine proposals, would be presented to volunteers on Zooniverse for correction, leading to a drastic reduction in volunteer effort on citizen science projects. We trained our model on ~2300 liver tissue 3D electron micrographs. Lipid droplets were segmented within these images through human annotation via the 'Etch A Cell - Fat Checker' citizen science project, hosted on the Zooniverse platform. In this work, we demonstrate this framework and the selection methodology, which resulted in a measured reduction in volunteer effort of more than 60%. We envision that this type of joint human-machine partnership will be of great use on future Zooniverse projects.
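The slice-selection idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `select_slices_for_review`, the `fraction` parameter, and the convention that a higher discriminator output means a more plausible machine proposal are all assumptions made for illustration. The sketch averages the patch-wise discriminator outputs of each 2D slice and flags the lowest-scoring slices for volunteer correction.

```python
from statistics import mean


def select_slices_for_review(patch_scores, fraction=0.4):
    """Pick the slices whose machine proposals look least reliable.

    patch_scores: one 2D grid (list of rows) of discriminator outputs per
        slice. Assumption: values lie in [0, 1] and higher means the
        discriminator finds the proposed mask more plausible.
    fraction: hypothetical share of slices to send to volunteers.
    Returns the indices of the selected slices in ascending order.
    """
    # Collapse each patch grid into a single per-slice confidence value.
    slice_scores = [
        mean(value for row in grid for value in row) for grid in patch_scores
    ]
    # Always review at least one slice.
    n_review = max(1, round(fraction * len(slice_scores)))
    # Rank slices from least to most confident and keep the weakest ones.
    ranked = sorted(range(len(slice_scores)), key=lambda i: slice_scores[i])
    return sorted(ranked[:n_review])
```

With a five-slice cube and `fraction=0.4`, the two slices with the lowest mean patch score would be returned; the remaining slices would keep their machine proposals without volunteer input.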

Automated 3D Tumor Segmentation using Temporal Cubic PatchGAN (TCuP-GAN)

Nov 23, 2023

Abstract: The development of robust, general-purpose 3D segmentation frameworks using the latest deep learning techniques is an active topic in various biomedical domains. In this work, we introduce Temporal Cubic PatchGAN (TCuP-GAN), a volume-to-volume translational model that combines a generative feature learning framework with Convolutional Long Short-Term Memory Networks (LSTMs) for the task of 3D segmentation. We demonstrate the capabilities of TCuP-GAN on data from four segmentation challenges (Adult Glioma, Meningioma, Pediatric Tumors, and Sub-Saharan Africa subset) featured within the 2023 Brain Tumor Segmentation (BraTS) Challenge and quantify its performance using the LesionWise Dice similarity and $95\%$ Hausdorff Distance metrics. We show that our framework successfully learns to predict robust multi-class segmentation masks across all the challenges. This benchmarking work serves as a stepping stone for future efforts to apply TCuP-GAN to other multi-class tasks, such as multi-organelle segmentation in electron microscopy imaging.
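For readers unfamiliar with the Dice similarity mentioned above, the following is a minimal sketch of the plain voxel-wise Dice score for one class, $2|P \cap T| / (|P| + |T|)$. It is not the LesionWise variant used in the BraTS evaluation, which scores individual lesions separately. The function name `dice_score` and the flattened-list input format are assumptions made for illustration.

```python
def dice_score(pred, truth, label):
    """Voxel-wise Dice similarity for a single class label.

    pred, truth: flattened sequences of integer class labels of equal length.
    label: the class to score.
    Returns 2 * |P ∩ T| / (|P| + |T|); by convention here, 1.0 when the
    class is absent from both prediction and ground truth.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    pred_hits = [p == label for p in pred]
    truth_hits = [t == label for t in truth]
    # Voxels assigned to this class in both masks.
    intersection = sum(p and t for p, t in zip(pred_hits, truth_hits))
    total = sum(pred_hits) + sum(truth_hits)
    if total == 0:
        return 1.0
    return 2.0 * intersection / total
```

For a multi-class mask, the function would be called once per label and the per-class scores reported separately.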

From fat droplets to floating forests: cross-domain transfer learning using a PatchGAN-based segmentation model

Nov 08, 2022

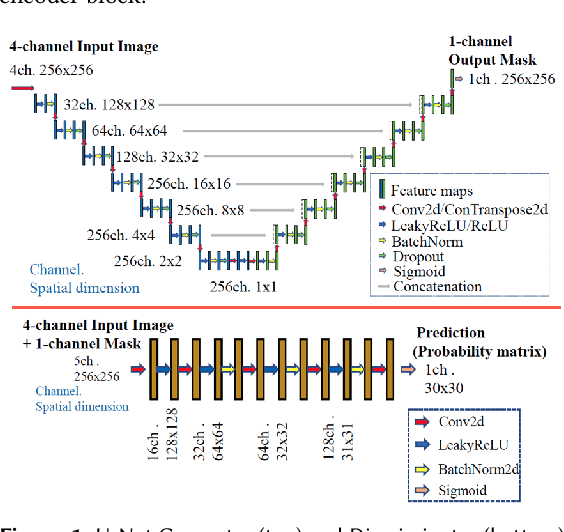

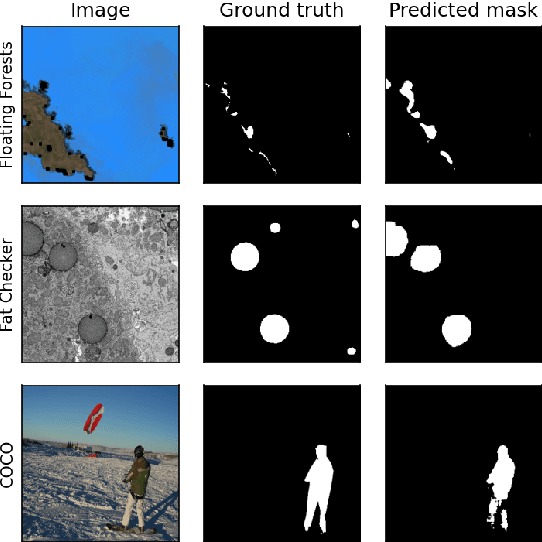

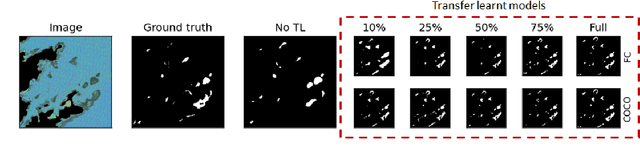

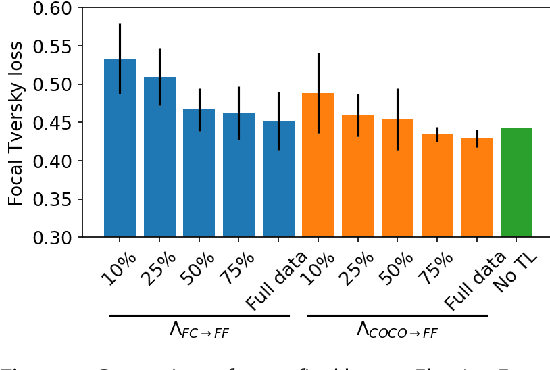

Abstract: Many scientific domains gather sufficient labels to train machine algorithms through human-in-the-loop techniques provided by the Zooniverse.org citizen science platform. As the range of projects, task types, and data rates increases, accelerating model training is of paramount concern so that volunteer effort can be focused where it is most needed. The application of Transfer Learning (TL) between Zooniverse projects holds promise as a solution. However, understanding the effectiveness of TL approaches that pretrain on large-scale generic image sets versus images with similar characteristics, possibly from similar tasks, is an open challenge. We apply a generative segmentation model to two Zooniverse project-based data sets: (1) to identify fat droplets in liver cells (FatChecker; FC) and (2) to identify kelp beds in satellite images (Floating Forests; FF) through transfer learning from the first project. We compare and contrast its performance with a TL model based on the COCO image set, and subsequently with baseline counterparts. We find that both the FC and COCO TL models perform better than the baseline cases when using >75% of the original training sample size. The COCO-based TL model generally performs better than the FC-based one, likely due to its generalized features. Our investigations provide important insights into the use of TL approaches on multi-domain data hosted across different Zooniverse projects, enabling future projects to accelerate task completion.
