Ralf Gutjahr

Multi-Layer Gaussian Splatting for Immersive Anatomy Visualization

Oct 22, 2024

Abstract: In medical image visualization, path tracing of volumetric medical data like CT scans produces lifelike three-dimensional visualizations. Immersive VR displays can further enhance the understanding of complex anatomies. Going beyond the diagnostic quality of traditional 2D slices, they enable interactive 3D evaluation of anatomies, supporting medical education and planning. Rendering high-quality visualizations in real time, however, is computationally intensive and impractical for compute-constrained devices like mobile headsets. We propose a novel approach utilizing Gaussian Splatting (GS) to create an efficient but static intermediate representation of CT scans. We introduce a layered GS representation, incrementally including different anatomical structures while minimizing overlap and extending the GS training to remove inactive Gaussians. We further compress the created model with clustering across layers. Our approach achieves interactive frame rates while preserving anatomical structures, with quality adjustable to the target hardware. Compared to standard GS, our representation retains some of the explorative qualities initially enabled by immersive path tracing. Selective activation and clipping of layers are possible at rendering time, adding a degree of interactivity to otherwise static GS models. This could enable scenarios where high computational demands would otherwise prohibit using path-traced medical volumes.
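The selective activation and clipping of layers mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `visible_gaussians`, the flat array layout (one center and one layer id per Gaussian), and the half-space clipping test on Gaussian centers are all assumptions made for illustration.

```python
import numpy as np

def visible_gaussians(centers, layer_ids, active_layers,
                      plane_normal=None, plane_offset=0.0):
    """Return indices of Gaussians to render (hypothetical helper).

    centers:       (N, 3) array of Gaussian centers.
    layer_ids:     (N,) array with the anatomical layer of each Gaussian.
    active_layers: iterable of layer ids that are switched on.
    plane_normal:  optional (3,) normal of a clipping plane; Gaussians whose
                   center satisfies dot(center, normal) >= plane_offset are kept.
    """
    centers = np.asarray(centers, dtype=np.float64)
    layer_ids = np.asarray(layer_ids)

    # Selective activation: keep only Gaussians that belong to an active layer.
    keep = np.isin(layer_ids, list(active_layers))

    # Clipping: drop Gaussians whose center lies behind the plane.
    if plane_normal is not None:
        normal = np.asarray(plane_normal, dtype=np.float64)
        keep &= (centers @ normal) >= plane_offset

    return np.flatnonzero(keep)

centers = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0], [3.0, 0.0, 0.0]]
layer_ids = [0, 1, 1, 2]
selected = visible_gaussians(centers, layer_ids, active_layers={1, 2},
                             plane_normal=[1.0, 0.0, 0.0], plane_offset=1.5)
```

Because the selection is a mask over an otherwise static model, it can be recomputed whenever the viewer toggles a layer or moves the clipping plane, without retraining.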

Limited Parameter Denoising for Low-dose X-ray Computed Tomography Using Deep Reinforcement Learning

Apr 01, 2022

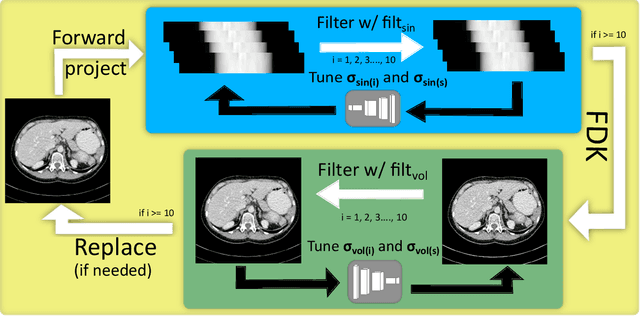

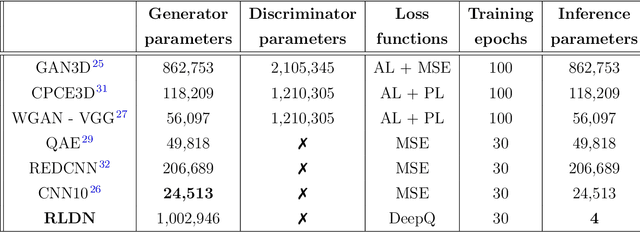

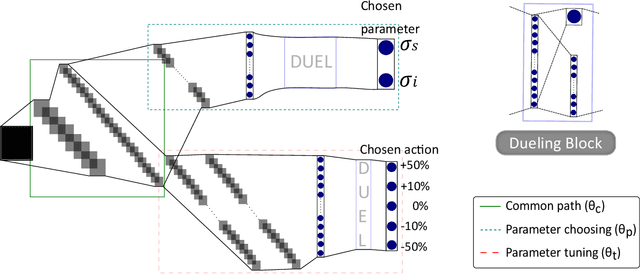

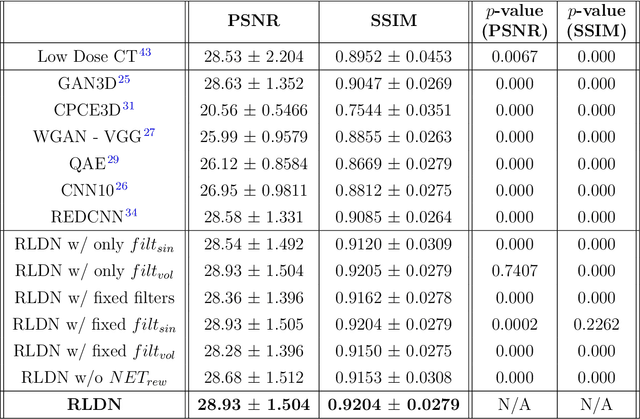

Abstract: The use of deep learning has successfully solved several problems in the field of medical imaging. Deep learning has been applied to the CT denoising problem successfully. However, the use of deep learning requires large amounts of data to train deep convolutional neural networks (CNNs). Moreover, due to their large parameter counts, such deep CNNs may cause unexpected results. In this study, we introduce a novel CT denoising framework, which has interpretable behaviour and provides useful results with limited data. We employ bilateral filtering in both the projection and volume domains to remove noise. To account for non-stationary noise, we tune the $\sigma$ parameters of the volume for every projection view, and for every volume pixel. The tuning is carried out by two deep CNNs. Because labelling is impractical, the two deep CNNs are trained via a Deep-Q reinforcement learning task. The reward for the task is generated by a custom reward function represented by a neural network. Our experiments were carried out on abdominal scans from the Mayo Clinic TCIA dataset and the AAPM Low Dose CT Grand Challenge. Our denoising framework has excellent denoising performance, increasing the PSNR from 28.53 to 28.93 and the SSIM from 0.8952 to 0.9204. We outperform several state-of-the-art deep CNNs, which have several orders of magnitude more parameters (p-value (PSNR) = 0.000, p-value (SSIM) = 0.000). Our method does not introduce any blurring, which is introduced by MSE loss based methods, or any deep learning artifacts, which are introduced by WGAN based models. Our ablation studies show that parameter tuning and using our reward network yield the best results.
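To make the idea of spatially varying $\sigma$ parameters concrete, the following is a minimal 2D NumPy sketch of a bilateral filter that accepts either scalar sigmas or per-pixel sigma maps. It is an illustration only: the function name, the 2D setting, the window radius, and the reflect padding are assumptions, and the sigma maps here are supplied by hand rather than predicted by the CNNs described in the abstract.

```python
import numpy as np

def bilateral_filter(image, sigma_spatial, sigma_range, radius=2):
    """Bilateral filter for a 2D image (illustrative sketch).

    sigma_spatial and sigma_range may be scalars or arrays with the same
    shape as `image`, giving one pair of sigmas per output pixel.
    """
    image = np.asarray(image, dtype=np.float64)
    # Broadcast scalars to per-pixel maps so both cases share one code path.
    sigma_s = np.broadcast_to(np.asarray(sigma_spatial, dtype=np.float64), image.shape)
    sigma_r = np.broadcast_to(np.asarray(sigma_range, dtype=np.float64), image.shape)

    h, w = image.shape
    padded = np.pad(image, radius, mode="reflect")
    weighted_sum = np.zeros_like(image)
    weight_total = np.zeros_like(image)

    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbour at offset (dy, dx) for every pixel at once.
            neighbour = padded[radius + dy:radius + dy + h,
                               radius + dx:radius + dx + w]
            # Spatial weight: falls off with distance from the center pixel.
            spatial = np.exp(-(dy * dy + dx * dx) / (2.0 * sigma_s ** 2))
            # Range weight: falls off with intensity difference (edge preserving).
            similarity = np.exp(-((neighbour - image) ** 2) / (2.0 * sigma_r ** 2))
            weight = spatial * similarity
            weighted_sum += weight * neighbour
            weight_total += weight

    # The center pixel always contributes weight 1, so the divisor is never zero.
    return weighted_sum / weight_total

rng = np.random.default_rng(0)
step = np.zeros((16, 16))
step[:, 8:] = 1.0
noisy = step + rng.normal(0.0, 0.05, step.shape)
# Hand-made per-pixel range sigma map; a learned map would replace this.
sigma_r_map = np.full(step.shape, 0.2)
denoised = bilateral_filter(noisy, sigma_spatial=1.5, sigma_range=sigma_r_map)
```

With only two sigmas per pixel controlling the smoothing, the effect of each parameter on the output stays easy to inspect, which is the interpretability argument the abstract makes.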

Low Dose CT Denoising via Joint Bilateral Filtering and Intelligent Parameter Optimization

Jul 09, 2020

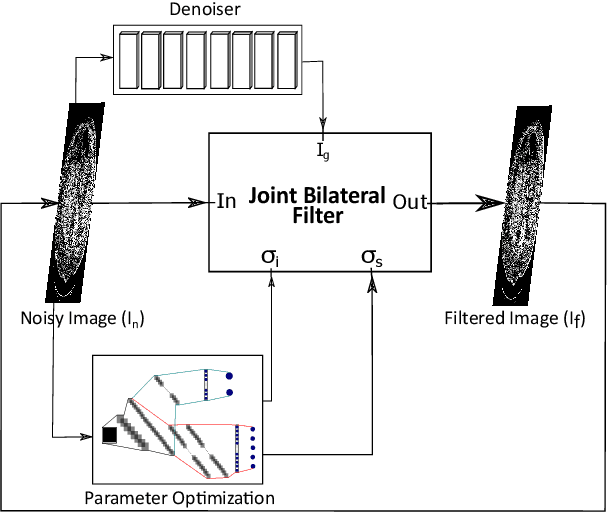

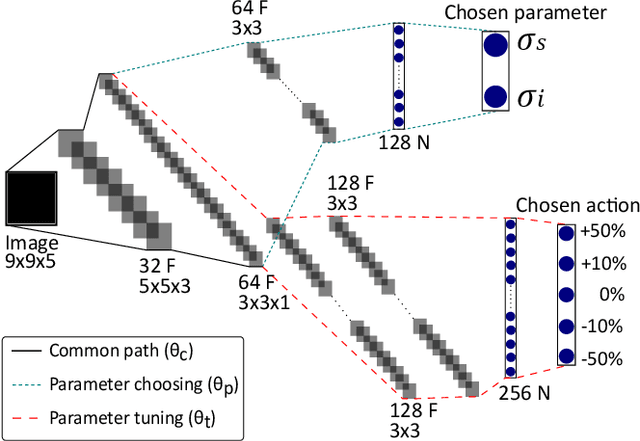

Abstract: Denoising of clinical CT images is an active area for deep learning research. Current clinically approved methods use iterative reconstruction to reduce the noise in CT images. Iterative reconstruction techniques require multiple forward and backward projections, which are time-consuming and computationally expensive. Recently, deep learning methods have been successfully used to denoise CT images. However, conventional deep learning methods suffer from the 'black box' problem. They have low accountability, which is necessary for use in clinical imaging situations. In this paper, we use a Joint Bilateral Filter (JBF) to denoise our CT images. The guidance image of the JBF is estimated using a deep residual convolutional neural network (CNN). The range smoothing and spatial smoothing parameters of the JBF are tuned by a deep reinforcement learning task. Our actor first chooses a parameter, and subsequently chooses an action to tune the value of the parameter. A reward network is designed to direct the reinforcement learning task. Our denoising method demonstrates good denoising performance while retaining structural information. Our method significantly outperforms state-of-the-art deep neural networks. Moreover, our method has only two parameters, which makes it significantly more interpretable and reduces the 'black box' problem. We experimentally measure the impact of our intelligent parameter optimization and our reward network. Our studies show that our current setup yields the best results in terms of structural preservation.
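A joint bilateral filter differs from a plain bilateral filter in that the range weights are computed on a separate guidance image rather than on the noisy input. The sketch below shows this in 2D NumPy under stated assumptions: the function name, window radius, and default sigmas are illustrative, and the guidance image is passed in directly rather than estimated by a residual CNN as in the abstract.

```python
import numpy as np

def joint_bilateral_filter(noisy, guidance, sigma_spatial=1.5,
                           sigma_range=0.1, radius=2):
    """Joint bilateral filter for 2D images (illustrative sketch).

    Range weights come from `guidance`; the averaged values come from `noisy`.
    """
    noisy = np.asarray(noisy, dtype=np.float64)
    guidance = np.asarray(guidance, dtype=np.float64)
    if noisy.shape != guidance.shape:
        raise ValueError("noisy and guidance must have the same shape")

    h, w = noisy.shape
    noisy_pad = np.pad(noisy, radius, mode="reflect")
    guide_pad = np.pad(guidance, radius, mode="reflect")
    weighted_sum = np.zeros_like(noisy)
    weight_total = np.zeros_like(noisy)

    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            rows = slice(radius + dy, radius + dy + h)
            cols = slice(radius + dx, radius + dx + w)
            # Spatial smoothing parameter controls the distance falloff.
            spatial = np.exp(-(dy * dy + dx * dx) / (2.0 * sigma_spatial ** 2))
            # Range smoothing parameter acts on guidance differences only.
            diff = guide_pad[rows, cols] - guidance
            similarity = np.exp(-(diff ** 2) / (2.0 * sigma_range ** 2))
            weight = spatial * similarity
            weighted_sum += weight * noisy_pad[rows, cols]
            weight_total += weight

    # The center pixel always has weight 1, so the divisor is never zero.
    return weighted_sum / weight_total

rng = np.random.default_rng(1)
clean = np.zeros((16, 16))
clean[:, 8:] = 1.0
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
# Using the clean image as guidance is an idealized stand-in for an estimate.
filtered = joint_bilateral_filter(noisy, clean)
```

The two tunable quantities in this sketch, `sigma_spatial` and `sigma_range`, correspond to the spatial and range smoothing parameters that the abstract says are tuned by reinforcement learning.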

JBFnet -- Low Dose CT Denoising by Trainable Joint Bilateral Filtering

Jul 09, 2020

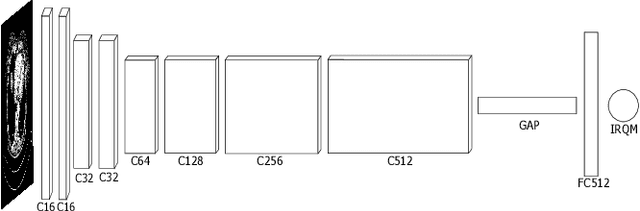

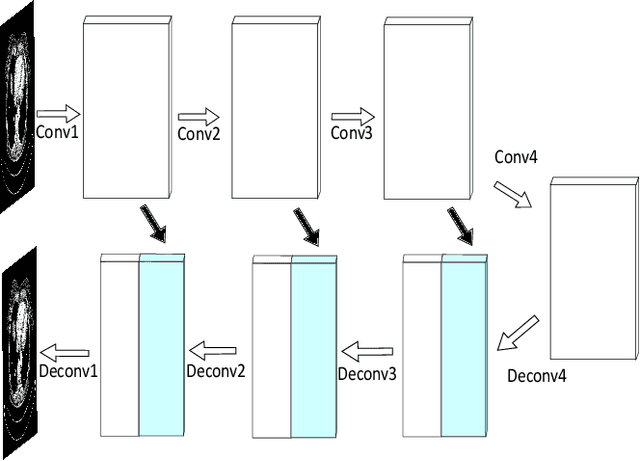

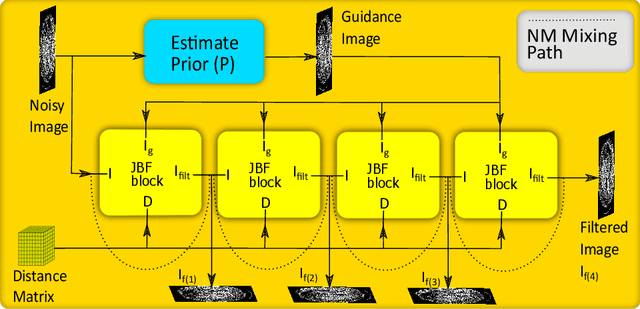

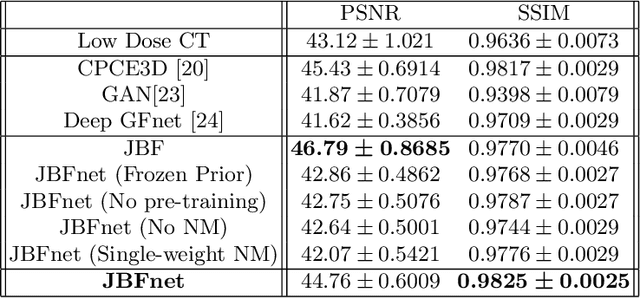

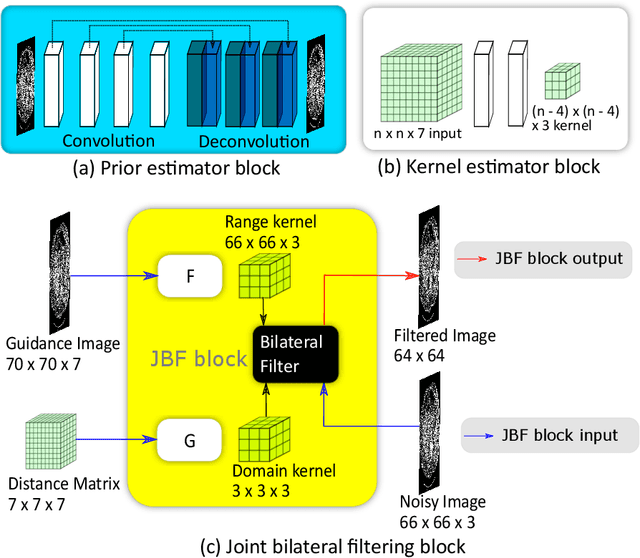

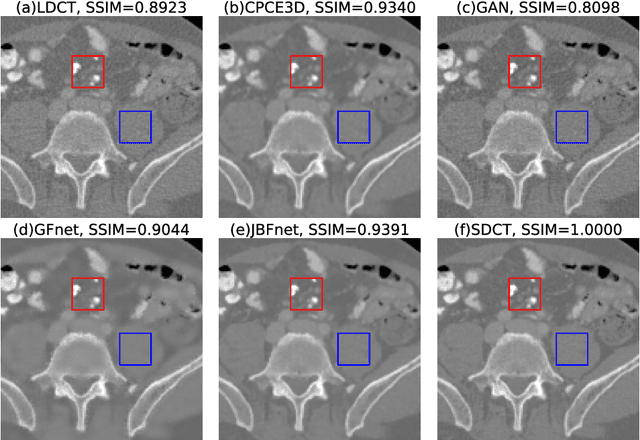

Abstract: Deep neural networks have shown great success in low dose CT denoising. However, most of these deep neural networks have several hundred thousand trainable parameters. This, combined with the inherent non-linearity of the neural network, makes the deep neural network difficult to understand, with low accountability. In this study we introduce JBFnet, a neural network for low dose CT denoising. The architecture of JBFnet implements iterative bilateral filtering. The filter functions of the Joint Bilateral Filter (JBF) are learned via shallow convolutional networks. The guidance image is estimated by a deep neural network. JBFnet is split into four filtering blocks, each of which performs Joint Bilateral Filtering. Each JBF block consists of 112 trainable parameters, making the noise removal process comprehensible. The Noise Map (NM) is added after filtering to preserve high level features. We train JBFnet with the data from the body scans of 10 patients, and test it on the AAPM low dose CT Grand Challenge dataset. We compare JBFnet with state-of-the-art deep learning networks. JBFnet outperforms CPCE3D, GAN and deep GFnet on the test dataset in terms of noise removal while preserving structures. We conduct several ablation studies to test the performance of our network architecture and training method. Our current setup achieves the best performance, while still maintaining behavioural accountability.
