Rajat Kulshreshtha

Preserving Intermediate Objectives: One Simple Trick to Improve Learning for Hierarchical Models

Jun 23, 2017

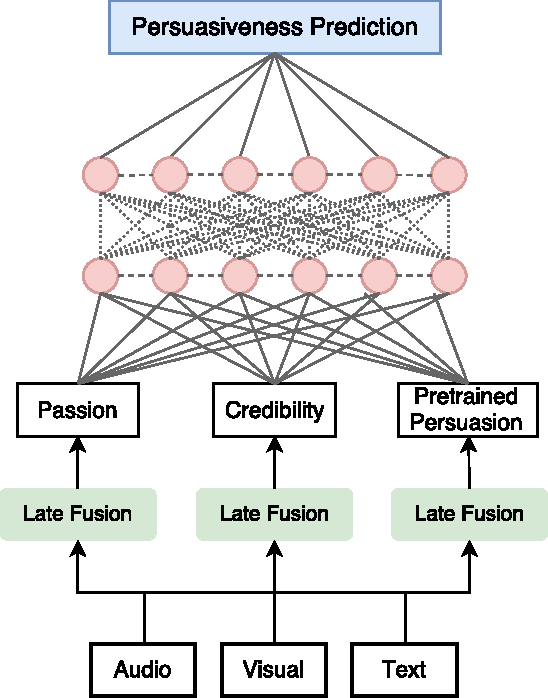

Abstract: Hierarchical models are used in a wide variety of problems characterized by task hierarchies, where predictions on smaller subtasks help predict a final task. Typically, neural networks are first trained for the subtasks, and their predictions are then used as additional features when training a model for the final task and running inference with it. In this work, we focus on improving learning for such hierarchical models and demonstrate our method on the task of speaker trait prediction. Speaker trait prediction aims to computationally identify which personality traits a speaker might be perceived to have, and has been of great interest to both the Artificial Intelligence and Social Science communities. Persuasiveness prediction in particular has drawn interest, as persuasive speakers have a large influence on our thoughts, opinions and beliefs. We examine how leveraging the relationship between related speaker traits in a hierarchical structure can improve our ability to predict how persuasive a speaker is. We present a novel algorithm that allows us to backpropagate through this hierarchy. This hierarchical model achieves a 25% relative reduction in classification error over current state-of-the-art methods on the publicly available POM dataset.
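The abstract does not spell out the algorithm, so the following is only a minimal sketch of the general idea it describes: subtask predictions feed the final predictor, and the training objective keeps the subtask (intermediate) losses alongside the final-task loss so that gradients can flow through the whole hierarchy. Everything here is an assumption made for illustration, including the logistic units standing in for subtask networks, the names `forward` and `joint_loss`, and the weighting parameter `lam`; it is not the authors' implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce(p, y):
    """Binary cross-entropy for one prediction p in (0, 1) and label y in {0, 1}."""
    eps = 1e-12
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def forward(x, sub_weights, final_weights):
    """Subtask predictions are appended to the input of the final predictor."""
    # One logistic unit per subtask (hypothetical stand-ins for subtask networks).
    sub_preds = [
        sigmoid(sum(w * xi for w, xi in zip(ws, x))) for ws in sub_weights
    ]
    # The final predictor sees the raw features plus the subtask predictions.
    final_in = list(x) + sub_preds
    final_pred = sigmoid(sum(w * f for w, f in zip(final_weights, final_in)))
    return sub_preds, final_pred


def joint_loss(x, y_sub, y_final, sub_weights, final_weights, lam=0.5):
    """Final-task loss plus lam times the summed subtask losses (assumed form)."""
    sub_preds, final_pred = forward(x, sub_weights, final_weights)
    final_term = bce(final_pred, y_final)
    sub_term = sum(bce(p, y) for p, y in zip(sub_preds, y_sub))
    return final_term + lam * sub_term
```

With `lam = 0` the objective reduces to the final-task loss alone, so the subtask units are free to drift from their original targets; a positive `lam` keeps them tied to the subtask labels while they are also updated by the final-task gradient.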
