Rado Gazo

Some Practice for Improving the Search Results of E-commerce

Jul 30, 2022

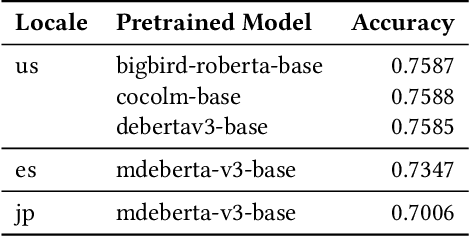

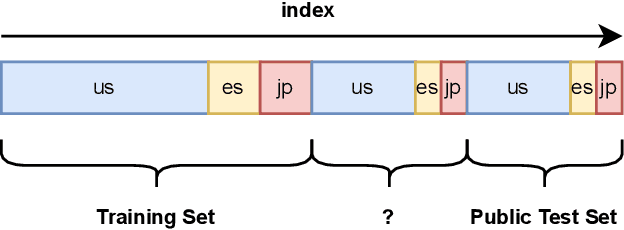

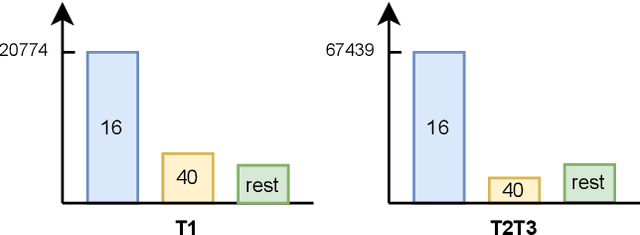

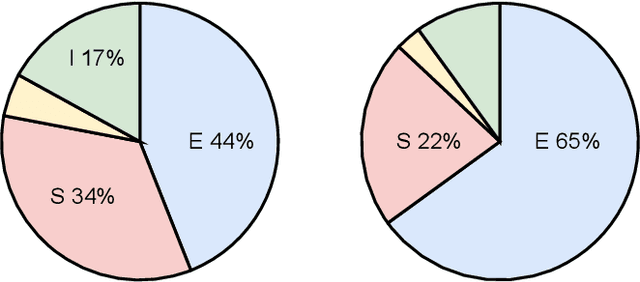

Abstract: In the Amazon KDD Cup 2022, we aim to apply natural language processing methods to improve the quality of search results, which can significantly enhance user experience and engagement with e-commerce search engines. We discuss our practical solution for this competition, which ranked 6th in task one, 2nd in task two, and 2nd in task three. The code is available at https://github.com/wufanyou/KDD-Cup-2022-Amazon.

TLab: Traffic Map Movie Forecasting Based on HR-NET

Nov 17, 2020

Abstract: Effective prediction of large-scale spatio-temporal traffic data has long challenged researchers in the field of intelligent transportation. Limited by the quantity of data, citywide traffic state prediction was seldom achieved, so the complex urban transportation system of an entire city could not be truly understood. The massive open data provided by organizations like IARAI has made this research possible. In our 2020 competition solution, we further design multiple variants based on HR-NET and UNet. Hand-crafted features obtained through feature engineering are fed into the model as input channels. Notably, to learn the inherent attributes of geographical locations, we propose a novel method called geo-embedding, which significantly improves the accuracy of the model. In addition, we explore how the choice of activation functions and optimizers, as well as tricks used during model training, affects model performance. In terms of prediction accuracy, our solution won 2nd place in the NeurIPS 2020 Traffic4cast Challenge.

Efficient Project Gradient Descent for Ensemble Adversarial Attack

Jun 07, 2019

Abstract: Recent advances show that deep neural networks are not robust to deliberately crafted adversarial examples, many of which are generated by adding human-imperceptible perturbations to clean inputs. For $l_2$-norm attacks, Project Gradient Descent (PGD) and the Carlini and Wagner (C&W) attack are the two main methods: PGD bounds the maximum perturbation of the adversarial examples, while the C&W approach treats the perturbation as a regularization term and optimizes it together with the loss function. If we carefully set the parameters for each individual input, the two methods become similar. In general, when the parameters are fixed for all inputs, PGD runs faster than C&W but needs larger perturbations to find adversarial examples. In this report, we propose an efficient modified PGD method for attacking ensemble models by automatically changing the ensemble weights and step size per iteration and per input. This method generates adversarial examples with smaller perturbations than PGD while remaining efficient compared to C&W. Our method won first place in the IJCAI19 Targeted Adversarial Attack competition.
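
For readers unfamiliar with the baseline, the following is a minimal sketch of a plain $l_2$ PGD attack against an ensemble, not the modified method the abstract proposes. It assumes a toy ensemble of linear scorers, fixed ensemble weights, and a fixed step size (the abstract's method adapts both per iteration and per input). The function names `pgd_l2`, `project_l2`, and `score` are hypothetical and chosen only for illustration.

```python
import math


def l2_norm(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(a * a for a in v))


def project_l2(delta, eps):
    """Project the perturbation back onto the l2 ball of radius eps."""
    n = l2_norm(delta)
    if n <= eps or n == 0.0:
        return list(delta)
    return [d * eps / n for d in delta]


def score(x, model):
    """Toy linear scorer (assumption): positive score means 'target class'."""
    w, b = model
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def pgd_l2(x, models, weights, eps, step, iters):
    """Targeted l2 PGD: raise the weighted ensemble score within an eps-ball.

    models  -- list of (w, b) linear scorers (illustrative stand-ins for networks)
    weights -- fixed ensemble weights; the paper's variant changes these per step
    """
    delta = [0.0] * len(x)
    for _ in range(iters):
        # For linear scorers, the gradient of the weighted score w.r.t. the
        # input is simply the weighted sum of the weight vectors.
        grad = [
            sum(a * w[i] for a, (w, _) in zip(weights, models))
            for i in range(len(x))
        ]
        g = l2_norm(grad)
        if g == 0.0:
            break
        # Normalized gradient ascent step, then projection onto the l2 ball.
        delta = [d + step * gi / g for d, gi in zip(delta, grad)]
        delta = project_l2(delta, eps)
    return [xi + di for xi, di in zip(x, delta)]


models = [([1.0, 0.0], -1.0), ([0.0, 1.0], -1.0)]
x_adv = pgd_l2([0.0, 0.0], models, [0.5, 0.5], eps=2.0, step=0.5, iters=20)
```

The projection step is what keeps the perturbation bounded by `eps`. A C&W-style attack would instead add the perturbation norm to the objective as a penalty term, which is the contrast the abstract draws.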
