Qianhui Liang

Uncovering Dominant Social Class in Neighborhoods through Building Footprints: A Case Study of Residential Zones in Massachusetts using Computer Vision

Jun 12, 2019

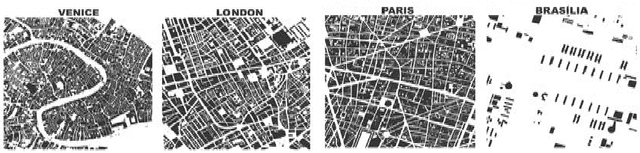

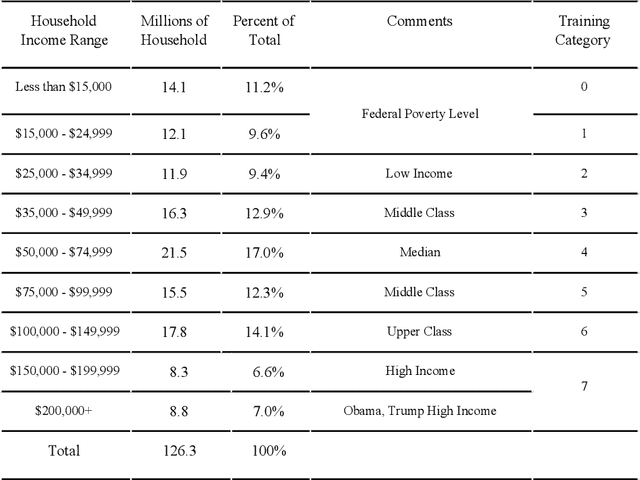

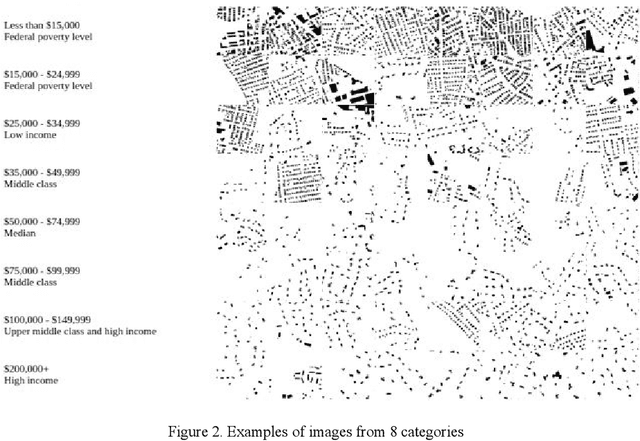

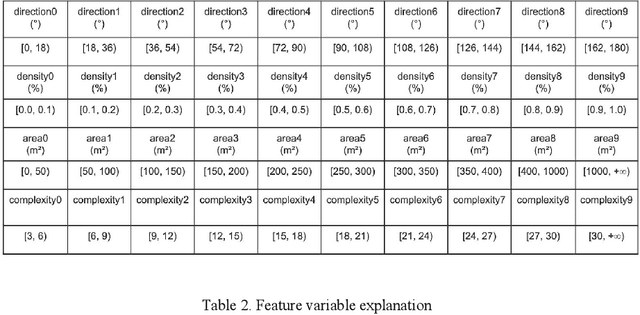

Abstract: In urban theory, urban form is related to social and economic status. This paper explores whether zip-code-level income can be inferred from urban form by analyzing figure-ground maps, a simple, prevalent, and precise representation of urban form in urban studies. Deep learning in computer vision enables such maps to be studied at a large scale. We propose training a deep convolutional neural network (DCNN) to uncover the relationship between social class and urban form. Further, using hand-crafted visual features related to urban form properties (building size, building density, etc.), we apply a random forest classifier to interpret how morphological properties relate to social class.
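As a rough illustration of the kind of hand-crafted features the abstract mentions (building size, building density), the sketch below computes them from a small binary figure-ground tile. The function name `footprint_features`, the 0/1 grid encoding, and the use of 4-connected components to separate buildings are assumptions made for this example, not details taken from the paper.

```python
from collections import deque

def footprint_features(grid):
    """Compute simple morphology features from a binary figure-ground tile.

    grid is a list of rows of 0/1 values, where 1 marks a building pixel
    (an assumed encoding for this sketch).
    """
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    sizes = []  # pixel area of each connected building footprint

    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1 and not seen[r][c]:
                # Breadth-first flood fill over 4-connected building pixels.
                queue = deque([(r, c)])
                seen[r][c] = True
                size = 0
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and grid[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                sizes.append(size)

    built = sum(sizes)
    return {
        "building_density": built / (rows * cols),
        "building_count": len(sizes),
        "mean_building_size": built / len(sizes) if sizes else 0.0,
    }

# Hypothetical 4x4 tile with two separate footprints.
tile = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 1],
]
features = footprint_features(tile)
```

A feature dictionary like this, computed per tile and aggregated per zip code, is the sort of input a random forest classifier could take; the actual feature set used in the paper is not specified in the abstract.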

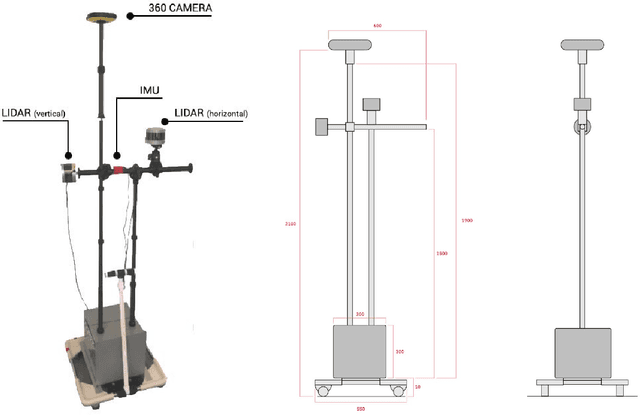

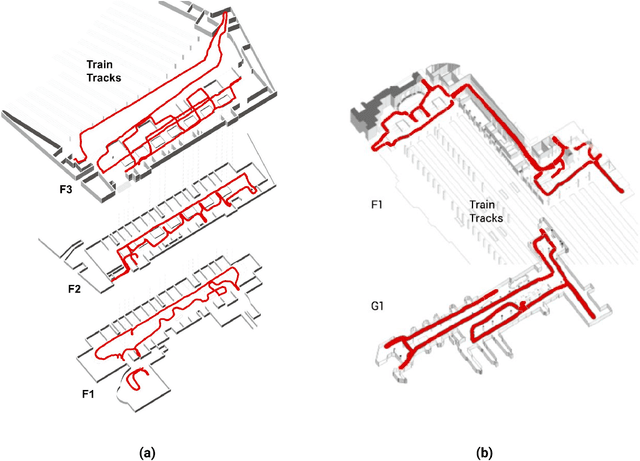

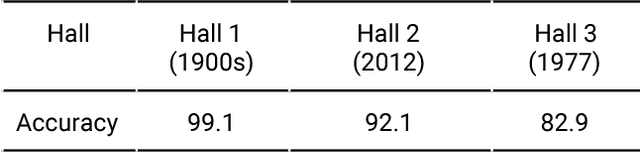

Quantifying Legibility of Indoor Spaces Using Deep Convolutional Neural Networks: Case Studies in Train Stations

Jan 22, 2019

Abstract: Legibility is the extent to which a space can be easily recognized. Evaluating legibility is particularly desirable in indoor spaces, since it has a large impact on human behavior and the efficiency of space utilization. However, indoor space legibility has only been studied through surveys and trivial simulations, and it lacks a reliable quantitative measure. We used a Deep Convolutional Neural Network (DCNN), which is structurally similar to the human perception system, to model legibility in indoor spaces. To model legibility for any indoor space, we designed an end-to-end processing pipeline from indoor data retrieval to model training to spatial legibility analysis. Although the model performed very well overall (98% top-1 accuracy), accuracy still varies among spaces, reflecting differences in legibility. To validate the pipeline, we deployed a survey on Amazon Mechanical Turk, collecting 4,015 samples. The human samples showed behavior patterns and mechanisms similar to those of the DCNN models. Further, we used the model results to visually explain legibility across architectural programs, building ages, and building styles, with visual clusterings of spaces and visual explanations for building age and architectural function.
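The abstract reads per-space differences in classification accuracy as differences in legibility. A minimal sketch of that comparison, assuming each image carries a true space label and a predicted label, might look like the following. The function name `per_space_accuracy` and the label values are hypothetical and not taken from the paper.

```python
from collections import defaultdict

def per_space_accuracy(true_labels, predicted_labels):
    """Return top-1 accuracy for each space, keyed by its true label."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for truth, pred in zip(true_labels, predicted_labels):
        totals[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return {space: correct[space] / totals[space] for space in totals}

# Hypothetical labels for six images from two spaces.
truth = ["hall", "hall", "platform", "platform", "platform", "platform"]
preds = ["hall", "hall", "platform", "hall", "platform", "platform"]
accuracy = per_space_accuracy(truth, preds)
```

Under the abstract's interpretation, a space with lower accuracy (here the hypothetical `platform`) would be the less legible one.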
