Praveen Kumar Chandaliya

LoRA-Enhanced Vision Transformer for Single Image based Morphing Attack Detection via Knowledge Distillation from EfficientNet

Nov 16, 2025

Abstract:Face Recognition Systems (FRS) are critical for security but remain vulnerable to morphing attacks, where synthetic images blend biometric features from multiple individuals. We propose a novel Single-Image Morphing Attack Detection (S-MAD) approach using a teacher-student framework, where a CNN-based teacher model refines a ViT-based student model. To improve efficiency, we integrate Low-Rank Adaptation (LoRA) for fine-tuning, reducing computational costs while maintaining high detection accuracy. Extensive experiments are conducted on a morphing dataset built from three publicly available face datasets, incorporating ten different morphing generation algorithms to assess robustness. The proposed method is benchmarked against six state-of-the-art S-MAD techniques, demonstrating superior detection performance and computational efficiency.
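The abstract does not state which distillation objective is used. As a rough illustration of how a teacher can guide a student in general, the sketch below implements the common soft-target formulation (temperature-scaled KL divergence mixed with cross-entropy). The function names, the `temperature` and `alpha` values, and the two-class (bona fide vs. morph) setup are assumptions for illustration, not details taken from the paper.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Soft-target distillation loss for one sample (hypothetical settings).

    alpha weights the teacher-matching term; (1 - alpha) weights the
    ordinary cross-entropy against the ground-truth label.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Cross-entropy of the student's unsoftened prediction.
    ce = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce

# Two hypothetical classes: index 0 = bona fide, index 1 = morph.
agree = distillation_loss([2.0, 0.0], [2.0, 0.0], label=0)
disagree = distillation_loss([0.0, 2.0], [2.0, 0.0], label=0)
print(agree, disagree)
```

A student that matches the teacher incurs only the cross-entropy term, while a student that disagrees with both teacher and label is penalized by both terms.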

Capsule Endoscopy Multi-classification via Gated Attention and Wavelet Transformations

Oct 25, 2024

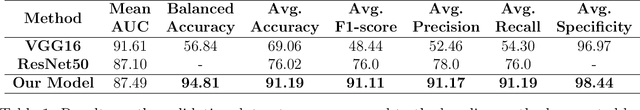

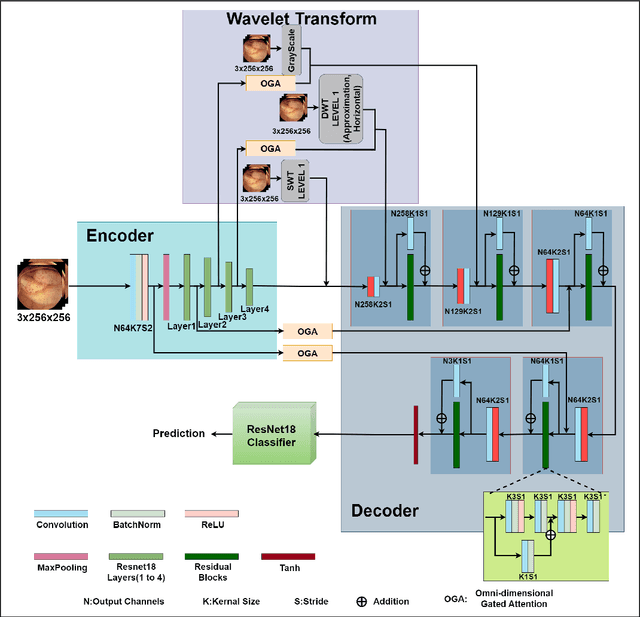

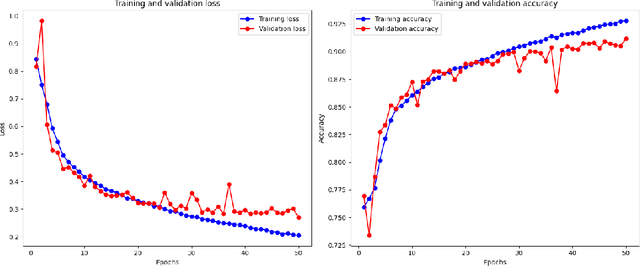

Abstract:Abnormalities in the gastrointestinal tract significantly affect patient health and require timely diagnosis for effective treatment. Effective automatic classification of these abnormalities from video capsule endoscopy (VCE) frames is therefore crucial for improving diagnostic workflows. This work presents the development and evaluation of a novel model designed to classify gastrointestinal anomalies from a VCE video frame. Integrating an Omni Dimensional Gated Attention (OGA) mechanism and wavelet transformation techniques into the model's architecture allows the model to focus on the most critical areas in the endoscopy images, reducing noise and irrelevant features. This is particularly advantageous in capsule endoscopy, where images often contain a high degree of variability in texture and color. The wavelet transformations efficiently capture spatial and frequency-domain information, improving feature extraction, especially for detecting subtle features in the VCE frames. Furthermore, the features extracted from the Stationary Wavelet Transform and Discrete Wavelet Transform are concatenated channel-wise to capture multiscale features, which are essential for detecting polyps, ulcerations, and bleeding. This approach improves classification accuracy on imbalanced capsule endoscopy datasets. The proposed model achieved training and validation accuracies of 92.76% and 91.19%, respectively, with training and validation losses of 0.2057 and 0.2700. It achieved a Balanced Accuracy of 94.81%, AUC of 87.49%, F1-score of 91.11%, precision of 91.17%, recall of 91.19%, and specificity of 98.44%. Additionally, the model's performance is benchmarked against two base models, VGG16 and ResNet50, demonstrating its enhanced ability to identify and classify a range of gastrointestinal abnormalities accurately.
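Because the abstract reports Balanced Accuracy alongside plain accuracy on an imbalanced dataset, a small sketch of the difference may help. Balanced accuracy is the unweighted mean of per-class recall. The labels and class sizes below are invented for illustration and are not from the paper's data.

```python
from collections import defaultdict

def accuracy(y_true, y_pred):
    """Fraction of samples classified correctly."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return hits / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Unweighted mean of per-class recall."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)

# Hypothetical imbalanced toy set: 8 "normal" frames, 2 "polyp" frames.
y_true = ["normal"] * 8 + ["polyp"] * 2
# A degenerate classifier that always predicts the majority class.
y_pred = ["normal"] * 10

print(accuracy(y_true, y_pred))           # high despite missing every polyp
print(balanced_accuracy(y_true, y_pred))  # penalizes the missed minority class
```

The majority-class predictor looks acceptable under plain accuracy but scores no better than chance under balanced accuracy, which is why the latter is informative when classes are imbalanced.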

Towards Inclusive Face Recognition Through Synthetic Ethnicity Alteration

May 02, 2024

Abstract:Numerous studies have shown that existing Face Recognition Systems (FRS), including commercial ones, often exhibit biases toward certain ethnicities due to under-represented data. In this work, we explore ethnicity alteration and skin tone modification using synthetic face image generation methods to increase the diversity of datasets. We conduct a detailed analysis by first constructing a balanced face image dataset representing three ethnicities: Asian, Black, and Indian. We then make use of existing Generative Adversarial Network (GAN)-based image-to-image translation and manifold learning models to alter the ethnicity from one to another. A systematic analysis is further conducted to assess the suitability of such datasets for FRS by studying the realistic skin-tone representation using the Individual Typology Angle (ITA). Further, we also analyze the quality characteristics using existing Face Image Quality Assessment (FIQA) approaches. We then provide a holistic FRS performance analysis using four different systems. Our findings pave the way for future research in (i) developing both specific ethnicity and general (any to any) ethnicity alteration models, (ii) expanding such approaches to create databases with diverse skin tones, and (iii) creating datasets representing various ethnicities, which can further help in mitigating bias while addressing privacy concerns.

* 8 Pages
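For readers unfamiliar with the Individual Typology Angle mentioned in the abstract, it is conventionally computed from the CIELAB lightness L* and yellow-blue component b* as ITA = arctan((L* − 50) / b*) × 180 / π. The sketch below applies that standard definition; the function name and sample values are illustrative assumptions, and how the paper extracts L* and b* from face images is not stated in the abstract.

```python
import math

def ita_degrees(l_star, b_star):
    """Individual Typology Angle in degrees from CIELAB L* and b*.

    atan2 is used instead of atan so that b* == 0 does not divide by zero;
    for the positive b* values typical of skin it gives the same result.
    """
    return math.degrees(math.atan2(l_star - 50.0, b_star))

# Hypothetical CIELAB skin measurements (not from the paper).
lighter = ita_degrees(70.0, 15.0)
darker = ita_degrees(40.0, 15.0)
print(lighter, darker)
```

Higher ITA values correspond to lighter skin tones, so the angle gives a single scalar for comparing skin-tone distributions across real and synthetic datasets.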

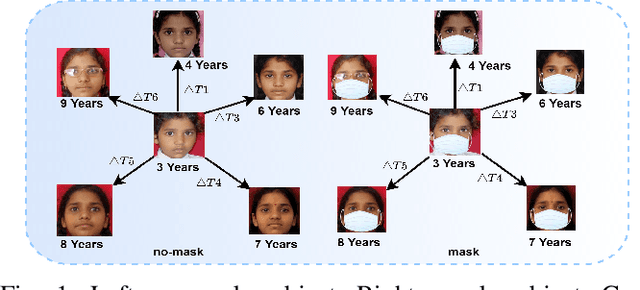

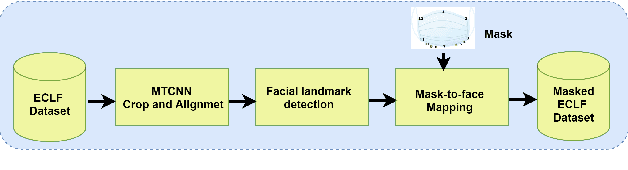

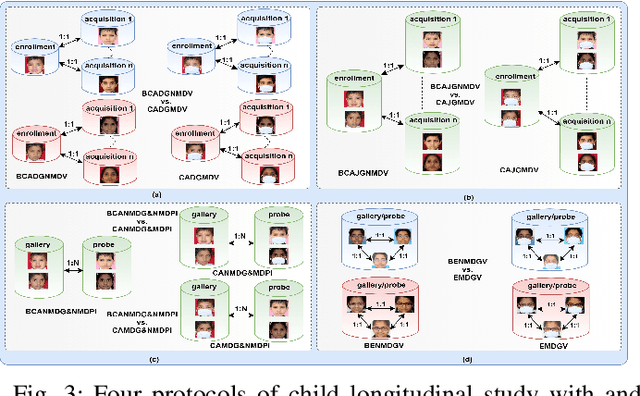

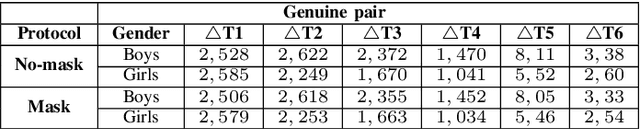

Longitudinal Analysis of Mask and No-Mask on Child Face Recognition

Nov 19, 2021

Abstract:The face is one of the most widely employed traits for person recognition, even in many large-scale applications. Despite technological advancements, face recognition systems still face obstacles caused by pose, expression, occlusion, and aging variations. Owing to the COVID-19 pandemic, contactless identity verification has become exceedingly vital. To constrain the pandemic, people have started using face masks. Recently, a few studies have been conducted on the effect of face masks on adult face recognition systems. However, the impact of aging combined with face masks on child subject recognition has not been adequately explored. Thus, the main objective of this study is to analyze the longitudinal impact of child aging together with face masks and other covariates on face recognition systems. Specifically, we performed a comparative investigation of three top-performing publicly available face matchers and a post-COVID-19 commercial-off-the-shelf (COTS) system under child cross-age verification and identification settings using our generated synthetic mask and no-mask samples. Furthermore, we investigated the longitudinal effect of eyeglasses with mask and no-mask. The study used a no-mask longitudinal child face dataset (i.e., the extended Indian Child Longitudinal Face Dataset) that contains $26,258$ face images of $7,473$ subjects in the age group of $[2, 18]$ over an average time span of $3.35$ years. Due to the combined effects of face masks and face aging, the verification accuracies of the FaceNet, PFE, ArcFace, and COTS systems decrease by approximately $25\%$, $22\%$, $18\%$, and $12\%$, respectively.
