Praveen Kulkarni

3D Wasserstein generative adversarial network with dense U-Net based discriminator for preclinical fMRI denoising

Nov 28, 2024

Abstract: Functional magnetic resonance imaging (fMRI) is extensively used in clinical and preclinical settings to study brain function; however, fMRI data is inherently noisy due to physiological processes, hardware, and external noise. Denoising is one of the main preprocessing steps in any fMRI analysis pipeline. This process is more challenging for preclinical data than for clinical data due to variations in brain geometry, image resolution, and low signal-to-noise ratios. In this paper, we propose a structure-preserved algorithm based on a 3D Wasserstein generative adversarial network with a 3D dense U-Net based discriminator, called 3D U-WGAN. We apply a 4D data configuration to effectively denoise temporal and spatial information in analyzing preclinical fMRI data. GAN-based denoising methods often utilize a discriminator to identify significant differences between denoised and noise-free images, focusing on global or local features. To refine the fMRI denoising model, our method employs a 3D dense U-Net discriminator to learn both global and local distinctions. To tackle potential over-smoothing, we introduce an adversarial loss and enhance perceptual similarity by measuring feature space distances. Experiments illustrate that 3D U-WGAN significantly improves image quality in resting-state and task preclinical fMRI data, enhancing signal-to-noise ratio without introducing excessive structural changes in existing methods. The proposed method outperforms state-of-the-art methods when applied to simulated and real data in an fMRI analysis pipeline.

Hybrid multi-layer Deep CNN/Aggregator feature for image classification

Mar 13, 2015

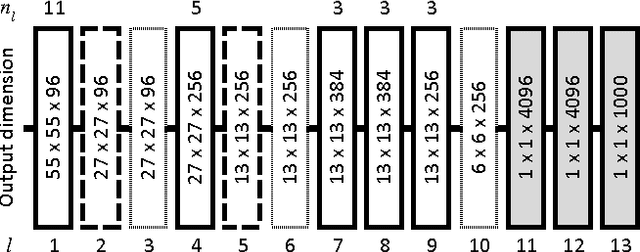

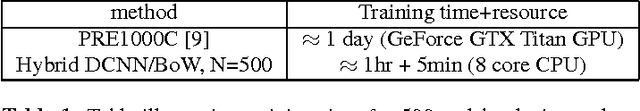

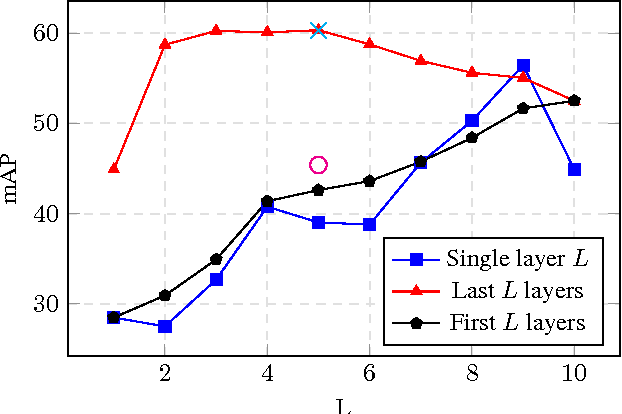

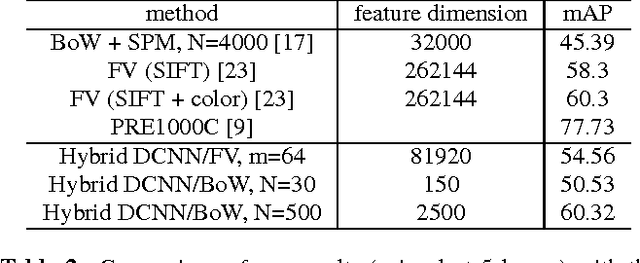

Abstract: Deep Convolutional Neural Networks (DCNN) have established a remarkable performance benchmark in the field of image classification, displacing classical approaches based on hand-tailored aggregations of local descriptors. Yet DCNNs impose high computational burdens both at training and at testing time, and training them requires collecting and annotating large amounts of training data. Supervised adaptation methods have been proposed in the literature that partially re-learn a transferred DCNN structure from a new target dataset. Yet these require expensive bounding-box annotations and are still computationally expensive to learn. In this paper, we address these shortcomings of DCNN adaptation schemes by proposing a hybrid approach that combines conventional, unsupervised aggregators such as Bag-of-Words (BoW), with the DCNN pipeline by treating the output of intermediate layers as densely extracted local descriptors. We test a variant of our approach that uses only intermediate DCNN layers on the standard PASCAL VOC 2007 dataset and show performance significantly higher than the standard BoW model and comparable to Fisher vector aggregation but with a feature that is 150 times smaller. A second variant of our approach that includes the fully connected DCNN layers significantly outperforms Fisher vector schemes and performs comparably to DCNN approaches adapted to PASCAL VOC 2007, yet at only a small fraction of the training and testing cost.
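
The core idea in this abstract, treating an intermediate layer's output as densely extracted local descriptors and aggregating them with Bag-of-Words, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `bow_from_feature_map`, the `(C, H, W)` feature-map layout, the hard nearest-codeword assignment, and the L1 normalisation are all assumptions made for illustration, and the codebook is assumed to have been learned beforehand (for example with k-means).

```python
import numpy as np

def bow_from_feature_map(feature_map, codebook):
    """Aggregate one intermediate-layer feature map into a BoW histogram.

    feature_map: array of shape (C, H, W); each spatial position is treated
                 as one C-dimensional local descriptor (assumed layout).
    codebook:    array of shape (K, C) of visual words (assumed precomputed).
    Returns an L1-normalised histogram of length K.
    """
    c, h, w = feature_map.shape
    # One descriptor per spatial location: (H*W, C)
    descriptors = feature_map.reshape(c, h * w).T
    # Squared Euclidean distance from every descriptor to every codeword: (H*W, K)
    d2 = ((descriptors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    # Hard assignment: index of the nearest codeword for each descriptor
    assignments = d2.argmin(axis=1)
    # Count how many descriptors fall on each codeword
    hist = np.bincount(assignments, minlength=codebook.shape[0]).astype(np.float64)
    return hist / hist.sum()
```

The resulting vector has one entry per codeword, so its size depends only on the codebook size `K` and not on the spatial resolution of the layer.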
