Chris Joslin

Advancing 3D Gaussian Splatting Editing with Complementary and Consensus Information

Mar 14, 2025

Abstract: We present a novel framework for enhancing the visual fidelity and consistency of text-guided 3D Gaussian Splatting (3DGS) editing. Existing editing approaches face two critical challenges: inconsistent geometric reconstructions across multiple viewpoints, particularly in challenging camera positions, and ineffective utilization of depth information during image manipulation, resulting in over-texture artifacts and degraded object boundaries. To address these limitations, we introduce: 1) A complementary information mutual learning network that enhances depth map estimation from 3DGS, enabling precise depth-conditioned 3D editing while preserving geometric structures. 2) A wavelet consensus attention mechanism that effectively aligns latent codes during the diffusion denoising process, ensuring multi-view consistency in the edited results. Through extensive experimentation, our method demonstrates superior performance in rendering quality and view consistency compared to state-of-the-art approaches. The results validate our framework as an effective solution for text-guided editing of 3D scenes.

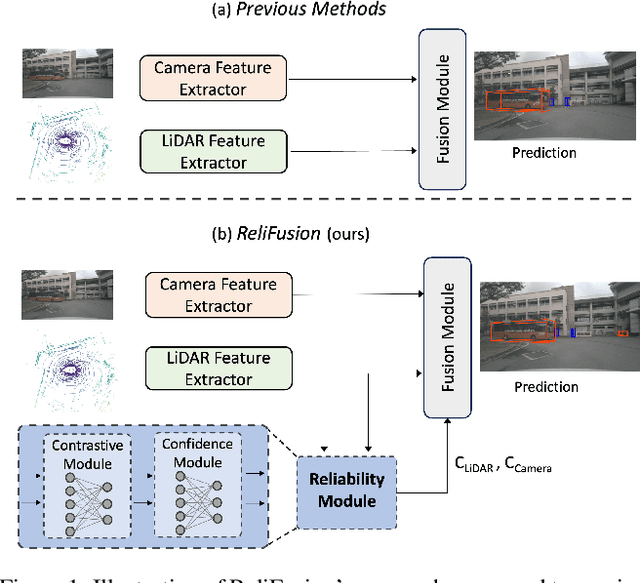

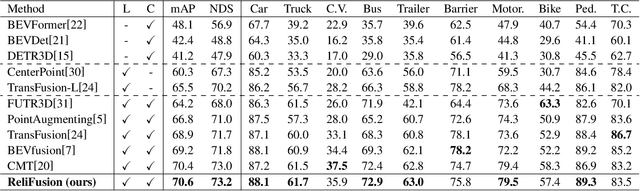

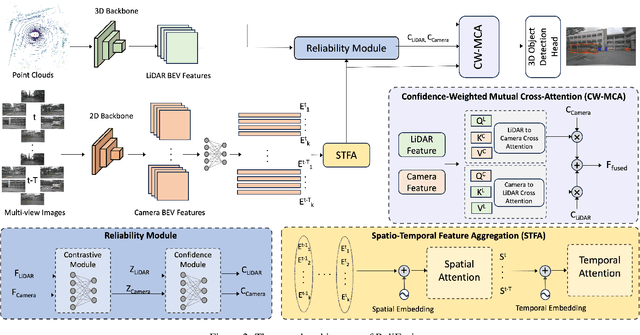

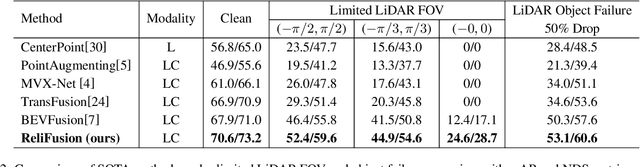

Reliability-Driven LiDAR-Camera Fusion for Robust 3D Object Detection

Feb 03, 2025

Abstract: Accurate and robust 3D object detection is essential for autonomous driving, where fusing data from sensors like LiDAR and camera enhances detection accuracy. However, sensor malfunctions such as corruption or disconnection can degrade performance, and existing fusion models often struggle to maintain reliability when one modality fails. To address this, we propose ReliFusion, a novel LiDAR-camera fusion framework operating in the bird's-eye view (BEV) space. ReliFusion integrates three key components: the Spatio-Temporal Feature Aggregation (STFA) module, which captures dependencies across frames to stabilize predictions over time; the Reliability module, which assigns confidence scores to quantify the dependability of each modality under challenging conditions; and the Confidence-Weighted Mutual Cross-Attention (CW-MCA) module, which dynamically balances information from LiDAR and camera modalities based on these confidence scores. Experiments on the nuScenes dataset show that ReliFusion significantly outperforms state-of-the-art methods, achieving superior robustness and accuracy in scenarios with limited LiDAR fields of view and severe sensor malfunctions.
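The idea of balancing two modalities by confidence can be illustrated with a much simpler stand-in than the CW-MCA module itself. The sketch below is not ReliFusion's method: it assumes each modality has a single scalar reliability score, turns the two scores into weights with a softmax, and blends the BEV feature maps with a weighted sum. The function name, array shapes, and scores are all hypothetical.

```python
import numpy as np

def confidence_weighted_fusion(lidar_bev, camera_bev, lidar_score, camera_score):
    """Blend two BEV feature maps using softmax-normalized reliability scores.

    Simplified illustration only: a weighted sum, not cross-attention.
    """
    scores = np.array([lidar_score, camera_score], dtype=np.float64)
    scores -= scores.max()  # subtract the max for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    fused = weights[0] * lidar_bev + weights[1] * camera_bev
    return fused, weights

# Hypothetical feature maps with shape (channels, height, width).
lidar_bev = np.ones((4, 8, 8))
camera_bev = np.zeros((4, 8, 8))

# Equal scores give equal weights.
fused, weights = confidence_weighted_fusion(lidar_bev, camera_bev, 2.0, 2.0)

# A much lower camera score shifts almost all weight to LiDAR.
fused_lidar, weights_lidar = confidence_weighted_fusion(lidar_bev, camera_bev, 5.0, -5.0)
```

When one sensor's score collapses, the fused map falls back almost entirely on the other modality, which is the behavior the abstract describes at a high level.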

3D Wasserstein generative adversarial network with dense U-Net based discriminator for preclinical fMRI denoising

Nov 28, 2024

Abstract: Functional magnetic resonance imaging (fMRI) is extensively used in clinical and preclinical settings to study brain function; however, fMRI data is inherently noisy due to physiological processes, hardware, and external noise. Denoising is one of the main preprocessing steps in any fMRI analysis pipeline. This process is more challenging in preclinical data than in clinical data due to variations in brain geometry, image resolution, and low signal-to-noise ratios. In this paper, we propose a structure-preserving algorithm, called 3D U-WGAN, based on a 3D Wasserstein generative adversarial network with a 3D dense U-Net based discriminator. We apply a 4D data configuration to effectively denoise temporal and spatial information in analyzing preclinical fMRI data. GAN-based denoising methods often utilize a discriminator to identify significant differences between denoised and noise-free images, focusing on global or local features. To refine the fMRI denoising model, our method employs a 3D dense U-Net discriminator to learn both global and local distinctions. To tackle potential over-smoothing, we introduce an adversarial loss and enhance perceptual similarity by measuring feature space distances. Experiments show that 3D U-WGAN significantly improves image quality in resting-state and task preclinical fMRI data, enhancing signal-to-noise ratio without introducing the excessive structural changes seen in existing methods. The proposed method outperforms state-of-the-art methods when applied to simulated and real data in an fMRI analysis pipeline.
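For readers unfamiliar with the Wasserstein formulation, the standard WGAN objectives can be sketched in a few lines. This is the generic textbook form, not the paper's full loss: the abstract does not specify how the adversarial and perceptual terms are combined, and the scores below are made-up numbers standing in for discriminator (critic) outputs.

```python
import numpy as np

def critic_loss(real_scores, fake_scores):
    """Standard WGAN critic loss: pushes real scores up and fake scores down."""
    return float(np.mean(fake_scores) - np.mean(real_scores))

def generator_adversarial_loss(fake_scores):
    """Standard WGAN generator loss: pushes the critic's score on fakes up."""
    return float(-np.mean(fake_scores))

# Hypothetical critic outputs for noise-free (real) and denoised (fake) volumes.
real_scores = np.array([1.0, 2.0, 3.0])
fake_scores = np.array([0.0, 1.0, 2.0])

c_loss = critic_loss(real_scores, fake_scores)
g_loss = generator_adversarial_loss(fake_scores)
```

A U-Net style discriminator would emit both a global score and a per-voxel score map; under that assumption the same two functions could be applied to each output and the results summed.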
