Pranav Pandey

Integrating Perceptions: A Human-Centered Physical Safety Model for Human-Robot Interaction

Jul 09, 2025

Abstract:Ensuring safety in human-robot interaction (HRI) is essential to foster user trust and enable the broader adoption of robotic systems. Traditional safety models primarily rely on sensor-based measures, such as relative distance and velocity, to assess physical safety. However, these models often fail to capture subjective safety perceptions, which are shaped by individual traits and contextual factors. In this paper, we introduce and analyze a parameterized general safety model that bridges the gap between physical and perceived safety by incorporating a personalization parameter, $\rho$, into the safety measurement framework to account for individual differences in safety perception. Through a series of hypothesis-driven human-subject studies in a simulated rescue scenario, we investigate how emotional state, trust, and robot behavior influence perceived safety. Our results show that $\rho$ effectively captures meaningful individual differences, driven by affective responses, trust in task consistency, and clustering into distinct user types. Specifically, our findings confirm that predictable and consistent robot behavior and the elicitation of positive emotional states significantly enhance perceived safety. Moreover, responses cluster into a small number of user types, supporting adaptive personalization based on shared safety models. Notably, participant role significantly shapes safety perception, and repeated exposure reduces perceived safety for participants in the casualty role, emphasizing the impact of physical interaction and experiential change. These findings highlight the importance of adaptive, human-centered safety models that integrate both psychological and behavioral dimensions, offering a pathway toward more trustworthy and effective HRI in safety-critical domains.

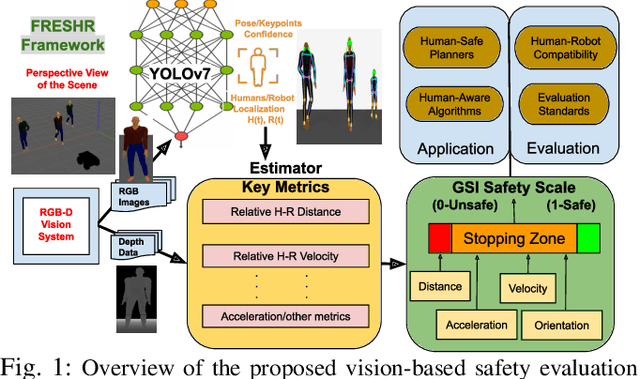

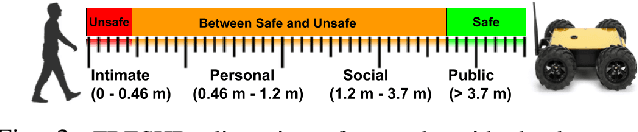

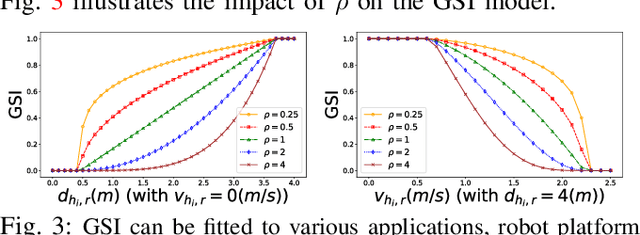

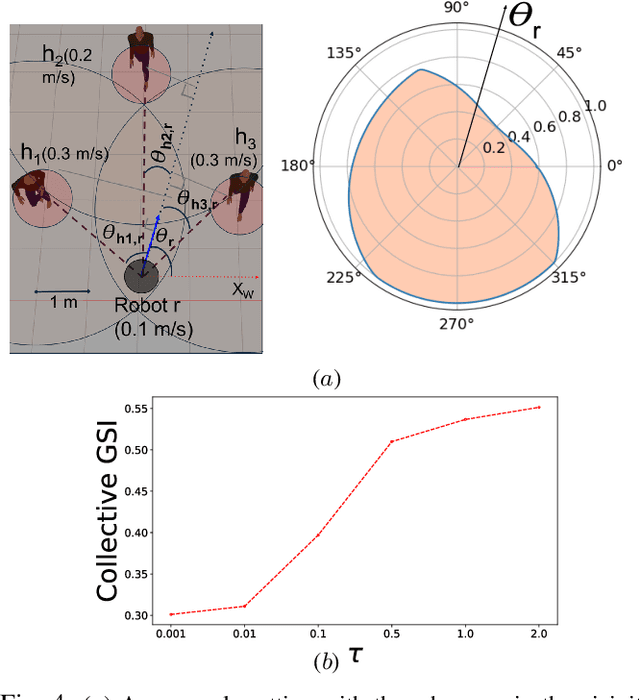

FRESHR-GSI: A Generalized Safety Model and Evaluation Framework for Mobile Robots in Multi-Human Environments

Jan 07, 2025

Abstract:Human safety is critical in applications involving close human-robot interactions (HRI) and is a key aspect of physical compatibility between humans and robots. While measures of human safety in HRI exist, these mainly target industrial settings involving robotic manipulators. Less attention has been paid to settings where mobile robots and humans share the space. This paper introduces a new robot-centered directional framework of human safety. It is particularly useful for evaluating mobile robots as they operate in environments populated by multiple humans. The framework integrates several key metrics, such as each human's relative distance, speed, and orientation. The core novelty lies in the framework's flexibility to accommodate different application requirements while allowing for both the robot-centered and external observer points of view. We instantiate the framework by using RGB-D based vision integrated with a deep learning-based human detection pipeline to yield a generalized safety index (GSI) that instantaneously assesses human safety. We evaluate GSI's capability of producing appropriate, robust, and fine-grained safety measures in real-world experimental scenarios and compare its performance with extant safety models.

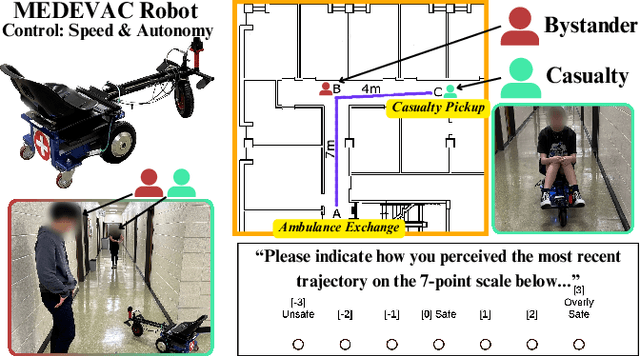

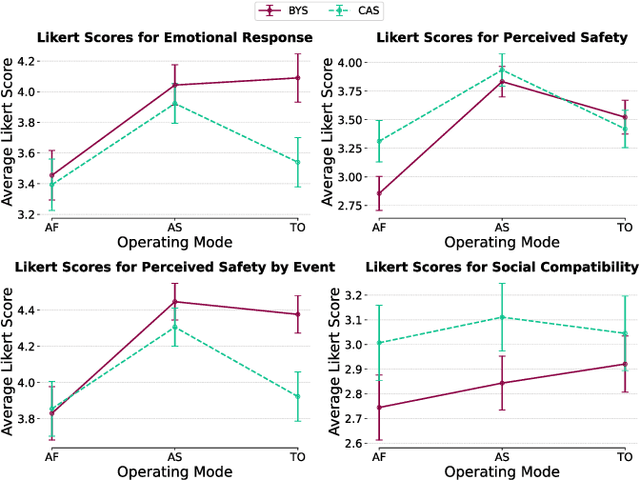

Analyzing Human Perceptions of a MEDEVAC Robot in a Simulated Evacuation Scenario

Oct 24, 2024

Abstract:The use of autonomous systems in medical evacuation (MEDEVAC) scenarios is promising, but existing implementations overlook key insights from human-robot interaction (HRI) research. Studies on human-machine teams demonstrate that human perceptions of a machine teammate are critical in governing the machine's performance. Here, we present a mixed factorial design to assess human perceptions of a MEDEVAC robot in a simulated evacuation scenario. Participants were assigned to the role of casualty (CAS) or bystander (BYS) and subjected to three within-subjects conditions based on the MEDEVAC robot's operating mode: autonomous-slow (AS), autonomous-fast (AF), and teleoperation (TO). During each trial, a MEDEVAC robot navigated an 11-meter path, acquiring a casualty and transporting them to an ambulance exchange point while avoiding an idle bystander. Following each trial, subjects completed a questionnaire measuring their emotional states, perceived safety, and social compatibility with the robot. Results indicate a consistent main effect of operating mode on reported emotional states and perceived safety. Pairwise analyses suggest that the employment of the AF operating mode negatively impacted perceptions along these dimensions. There were no persistent differences between casualty and bystander responses.

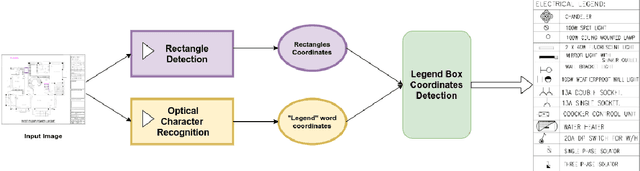

Automatic Detection and Classification of Symbols in Engineering Drawings

Apr 28, 2022

Abstract:A method of finding and classifying various components and objects in a design diagram, drawing, or planning layout is proposed. The method automatically finds the objects present in a legend table and determines their position, count, and related information with the help of multiple deep neural networks. The method is pre-trained on several drawings or design templates to learn a feature set that may help in representing new templates. For a template not seen before, it does not require any training on a template dataset. The proposed method may be useful in multiple industry applications such as design validation, object counting, and checking the connectivity of components. The method is generic and domain independent.

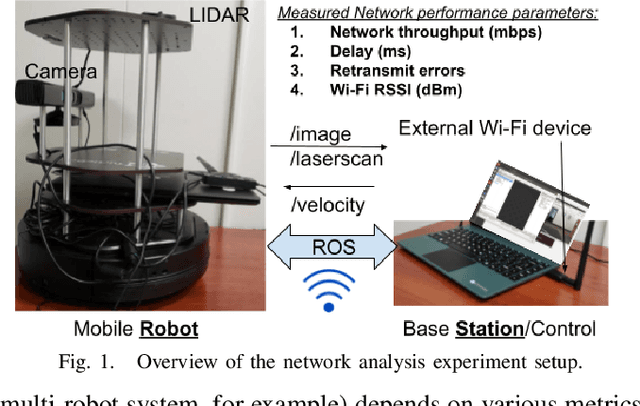

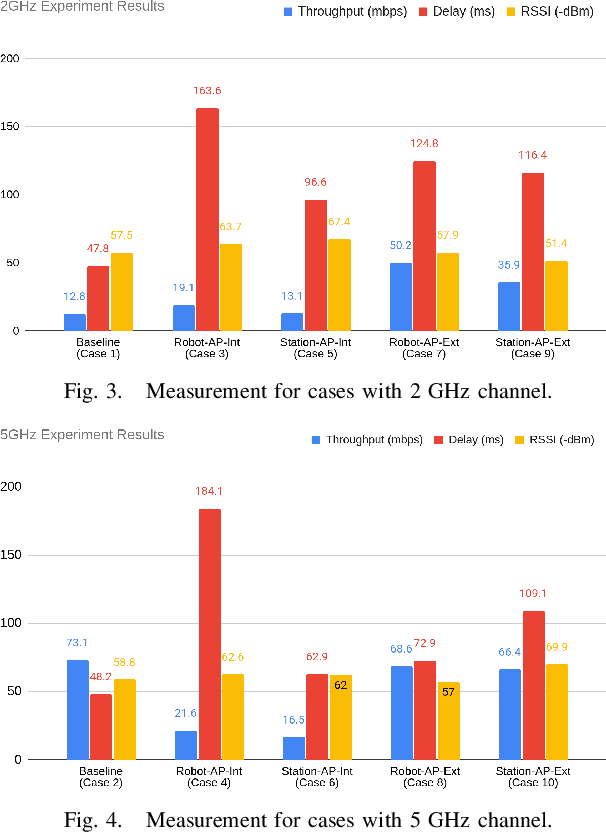

Empirical Analysis of Bi-directional Wi-Fi Network Performance on Mobile Robots and Connected Vehicles

Oct 06, 2021

Abstract:This paper proposes a framework to measure the key metrics (throughput, delay, packet retransmits, signal strength, etc.) that determine the Wi-Fi network performance of mobile robots supported by the Robot Operating System (ROS) middleware. We analyze the bidirectional network performance of mobile robots and connected vehicles through an experimental setup in which a mobile robot communicates vital sensor data, such as video streams from its camera(s) and LiDAR scan values, to a command station while it navigates an indoor environment using teleoperated velocity commands received from the command station. The experiments evaluate performance on 2.4 GHz and 5 GHz channels with different placements of Access Points (AP) and with up to two network devices on each side. The discussions and insights from this study apply to general vehicular networks and the field robotics community, where the wireless network plays a key role in enabling the success of robotic missions.
